- Submit source code

- Submit implementation details

- Submit appendix

- Finalize the link to the paper

- Citation

- Acknowledgment

- Getting Started

- Reference

KUNet $\rightarrow$ Paper Link

IJCAI2022 (long oral)

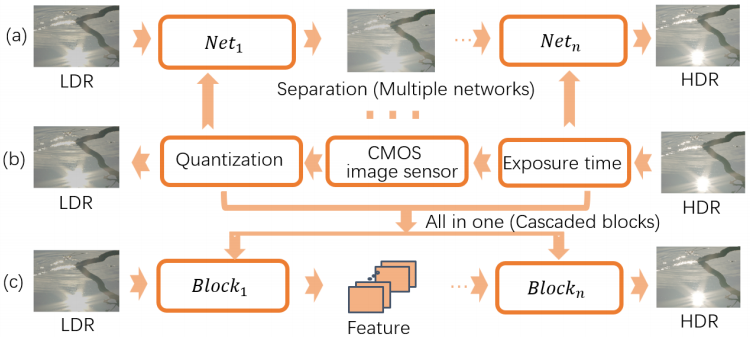

Comparison between the method of reversing the camera imaging pipeline and our method:

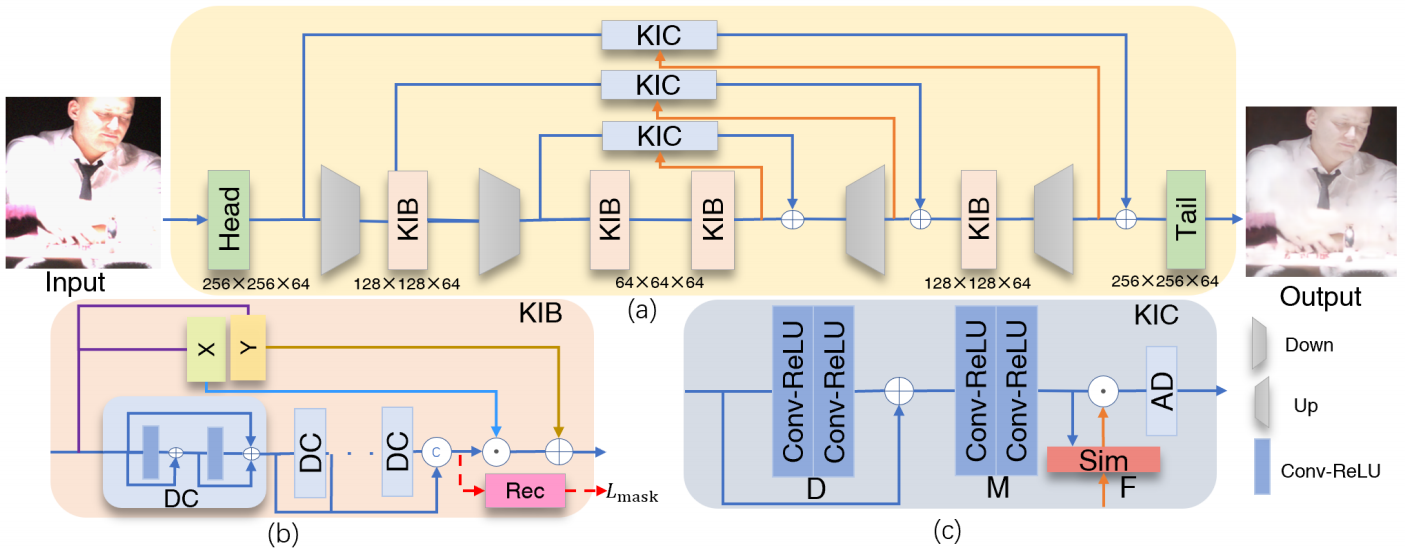

Overview of the method:

For the Head, Tail, D block of KIB module and KIC module, we use

We use two datasets to evaluate our method, one for the image task and one for the video task. Both contain moving light sources, rich colors, highlights, and bright regions. For the image task, we use the NTIRE 2021 dataset, which contains 1,416 paired training images and 78 test images. For the video task, we conduct experiments on HDRTV [3], which contains 1,235 paired training images and 117 test images. Please refer to the paper for details on how this dataset is processed. It can be downloaded from Baidu Netdisk (access code: mpr6).
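Before training, it can help to confirm that every input image has a ground-truth partner and that the pair count matches the numbers above (e.g. 1,416 training pairs for NTIRE 2021). The sketch below is not part of the repository; it assumes inputs and ground truths sit in two separate folders and that a hypothetical `key` function maps each filename to a shared ID (by default, the filename stem).

```python
from pathlib import Path

IMAGE_EXTS = {".png", ".jpg", ".tif", ".tiff", ".hdr", ".exr"}


def default_key(path):
    """Hypothetical pairing rule: input and ground truth share the same stem."""
    return path.stem


def pair_by_key(inputs, targets, key=default_key):
    """Match input and ground-truth paths by key; raise if any ID is unpaired."""
    in_map = {key(p): p for p in inputs}
    gt_map = {key(p): p for p in targets}
    # Two files collapsing onto one ID would silently drop data, so reject it.
    if len(in_map) != len(inputs) or len(gt_map) != len(targets):
        raise ValueError("key() maps two files to the same ID")
    unpaired = sorted(set(in_map) ^ set(gt_map))
    if unpaired:
        raise ValueError(f"{len(unpaired)} unpaired IDs, e.g. {unpaired[:5]}")
    return [(in_map[k], gt_map[k]) for k in sorted(in_map)]


def pair_dirs(input_dir, gt_dir, key=default_key, expected=None):
    """List image files in two folders, pair them, and optionally check the count."""
    def list_images(d):
        return [p for p in Path(d).iterdir() if p.suffix.lower() in IMAGE_EXTS]

    pairs = pair_by_key(list_images(input_dir), list_images(gt_dir), key)
    if expected is not None and len(pairs) != expected:
        raise ValueError(f"expected {expected} pairs, found {len(pairs)}")
    return pairs
```

If inputs and targets used different suffixes, for instance `0000_medium.png` and `0000_gt.png` (a hypothetical naming scheme), passing `key=lambda p: p.stem.split("_")[0]` would pair them by their numeric prefix.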

- h5py==3.6.0

- lmdb==1.2.1

- pandas==1.1.5

- pillow==8.4.0

- prefetch-generator==1.0.1

- py-opencv==4.5.2

- python==3.7.0

- pytorch==1.9.0

- scikit-image==0.18.3

- scipy==1.7.0

- tensorboard==2.8.0

- torchvision==0.2.2

- tqdm==4.61.2

- yaml==0.2.5

We provide pretrained models for testing; they can be downloaded from the same link as the dataset. Put the downloaded files into `models` according to the instructions.

- Modify the image and model paths in the `*.yml` file (located in `code/options/test`).

- Run the test: `cd code/`, then `python ./test.py -opt ./options/test/KIB_mask.yml`.
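Because both testing and training read image and model paths from the `*.yml` options, a quick check that those paths exist can save a failed run. The helper below is hypothetical and not part of the repository: it walks the parsed options recursively and flags string values whose key contains `path` or `root`. That key heuristic is an assumption, since the exact key names in `KIB_mask.yml` are not shown here.

```python
import os


def missing_paths(opt, prefix=""):
    """Return (dotted_key, value) pairs for path-like options that do not exist.

    Heuristic (an assumption): a string value is treated as a filesystem path
    when its key contains "path" or "root". Non-string values such as None
    (written as ~ in YAML) are skipped.
    """
    missing = []
    if isinstance(opt, dict):
        for key, value in opt.items():
            name = f"{prefix}.{key}" if prefix else str(key)
            if isinstance(value, (dict, list)):
                # Descend into nested sections such as datasets -> test_1.
                missing.extend(missing_paths(value, name))
            elif isinstance(value, str) and ("path" in str(key) or "root" in str(key)):
                if not os.path.exists(os.path.expanduser(value)):
                    missing.append((name, value))
    elif isinstance(opt, list):
        for i, item in enumerate(opt):
            missing.extend(missing_paths(item, f"{prefix}[{i}]"))
    return missing
```

With PyYAML installed, the options could be loaded with `yaml.safe_load` and passed in, e.g. `missing_paths(yaml.safe_load(open("./options/test/KIB_mask.yml")))` from inside the code directory; an empty list means every path-like entry resolved.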

- Prepare the data: generate sub-images with a specific patch size using `./scripts/extract_subimgs_single.py`.

- Make sure that the paths and settings in `./options/train/train_KIB.yml` are correct, then run `cd codes/`.

- For the image task, run `python train.py -opt options/train/train_KIB.yml`.

- For the video task, run `python train.py -opt options/train/train_hdrtv.yml`.

All models and training states are stored in `./experiments`.
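The data-preparation step crops each training image into sub-images of a fixed patch size. The NumPy sketch below illustrates that kind of cropping using the sliding-window scheme common in super-resolution codebases such as BasicSR; the parameters `crop_size`, `step`, and `thresh_size` and their default values are illustrative assumptions, not the settings of `./scripts/extract_subimgs_single.py`.

```python
import numpy as np


def window_starts(length, crop_size, step, thresh_size):
    """Start offsets of crop windows along one axis.

    Windows advance by `step`; if the uncovered border after the last window
    is wider than `thresh_size`, one extra window flush with the edge is added.
    """
    if length < crop_size:
        raise ValueError(f"axis length {length} is smaller than crop_size {crop_size}")
    starts = list(range(0, length - crop_size + 1, step))
    if length - (starts[-1] + crop_size) > thresh_size:
        starts.append(length - crop_size)
    return starts


def extract_subimgs(img, crop_size=480, step=240, thresh_size=48):
    """Crop an H x W (x C) array into overlapping crop_size x crop_size patches."""
    h, w = img.shape[:2]
    patches = []
    for y in window_starts(h, crop_size, step, thresh_size):
        for x in window_starts(w, crop_size, step, thresh_size):
            # .copy() so each patch owns its memory independently of `img`.
            patches.append(img[y:y + crop_size, x:x + crop_size, ...].copy())
    return patches
```

Window positions depend only on the image size, so calling the function with identical arguments on an input image and its ground truth yields aligned patch pairs.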

Two metrics are used to evaluate the quantitative performance of different methods on the image task; reference code for them is provided in `./metrics` for convenient usage.
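As an illustration, the sketch below computes PSNR, $10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$, a common fidelity metric in HDR reconstruction. Whether it matches the implementation in `./metrics` is an assumption; by default it expects images normalized to [0, 1].

```python
import numpy as np


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two same-shaped arrays."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    if pred.shape != target.shape:
        raise ValueError(f"shape mismatch: {pred.shape} vs {target.shape}")
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

For 16-bit data compared without normalization, pass `max_val=65535`.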

- [1] W. Shi, J. Caballero, F. Huszár, J. Totz, A. P. Aitken, R. Bishop, D. Rueckert, and Z. Wang. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In CVPR, pages 1874–1883, 2016.

- [2] H. Scharr. Optimal filters for extended optical flow. In IWCM, pages 14–29. Springer.

- [3] X. Chen, Z. Zhang, J. Ren, L. Tian, Y. Qiao, and C. Dong. A new journey from SDRTV to HDRTV. In ICCV, pages 4500–4509, 2021.

If our work is helpful to you, please cite our paper:

@inproceedings{wang2022kunet,

title={KUNet: Imaging Knowledge-Inspired Single HDR Image Reconstruction},

author={Wang, Hu and Ye, Mao and ZHU, XIATIAN and Li, Shuai and Zhu, Ce and Li, Xue},

booktitle={IJCAI-ECAI 2022}

}

The code and format are inspired by HDRTV.