ParSeq is a framework that makes it easier to write asynchronous code in Java.

Some of the key benefits of ParSeq include:

- Parallelization of asynchronous operations (such as IO)

- Serialized execution for non-blocking computation

- Code reuse via task composition

- Simple error propagation and recovery

- Execution tracing and visualization

- Batching of asynchronous operations

- Tasks with retry policy

Our Wiki includes an introductory example, a User's Guide, javadoc, and more.

See the CHANGELOG for a list of changes.

In this example we show how to fetch several pages in parallel and how to combine them once they've all been retrieved.

You can find the source code here: IntroductoryExample.

First we can retrieve a single page using an asynchronous HTTP client as follows:

    final Task<Response> google = HttpClient.get("http://www.google.com").task();
    engine.run(google);
    google.await();
    System.out.println("Google Page: " + google.get().getResponseBody());

This will print:

    Google Page: <!doctype html><html>...

In this code snippet we don't really get any benefit from ParSeq. Essentially we create a task that can be run asynchronously, but then we block for completion using google.await(). In this case, the code is more complicated than issuing a simple synchronous call. We can improve this by making it asynchronous:

    final Task<String> google = HttpClient.get("http://www.google.com").task()
        .map(Response::getResponseBody)
        .andThen(body -> System.out.println("Google Page: " + body));

    engine.run(google);

We used the map method to transform the Response into a String and the andThen method to print the result.
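For readers who know the JDK's `CompletableFuture`, the transform-then-consume chain above loosely resembles `thenApply` followed by `thenAccept`. The sketch below is an analogy only and does not use ParSeq. `FakeResponse`, `get`, and `googleLine` are hypothetical names invented for this illustration, and the response body is canned so that the example runs without network access. One difference to keep in mind: the tasks above are handed to `engine.run`, while a `CompletableFuture` has no separate run step.

```java
import java.util.concurrent.CompletableFuture;

public class MapAndThenAnalogy {
  // Hypothetical stand-in for an HTTP response; not a ParSeq or HTTP client type.
  public static final class FakeResponse {
    private final String body;
    public FakeResponse(String body) { this.body = body; }
    public String getResponseBody() { return body; }
  }

  // Hypothetical stand-in for an asynchronous GET; completes immediately with canned content.
  public static CompletableFuture<FakeResponse> get(String url) {
    return CompletableFuture.completedFuture(
        new FakeResponse("<html>stub for " + url + "</html>"));
  }

  // Transforms the response into its body, then into the line that will be printed.
  public static CompletableFuture<String> googleLine() {
    return get("http://www.google.com")
        .thenApply(FakeResponse::getResponseBody)   // plays the role of map above
        .thenApply(body -> "Google Page: " + body);
  }

  public static void main(String[] args) {
    // thenAccept registers a consumer for the result; no explicit wait is needed here.
    googleLine().thenAccept(System.out::println);
  }
}
```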

Now, let's expand the example so that we can fetch a few more pages in parallel.

First, let's create a helper method that creates a task responsible for fetching a page body given a URL.

    private Task<String> fetchBody(String url) {
      return HttpClient.get(url).task().map(Response::getResponseBody);
    }

Next, we will compose tasks to run in parallel using Task.par.

    final Task<String> google = fetchBody("http://www.google.com");
    final Task<String> yahoo = fetchBody("http://www.yahoo.com");
    final Task<String> bing = fetchBody("http://www.bing.com");

    final Task<String> plan = Task.par(google, yahoo, bing)
        .map((g, y, b) -> "Google Page: " + g + "\n" +
                          "Yahoo Page: " + y + "\n" +
                          "Bing Page: " + b + "\n")
        .andThen(System.out::println);

    engine.run(plan);

This example is fully asynchronous. The home pages for Google, Yahoo, and Bing are all fetched in parallel while the original thread has returned to the calling code. We used Task.par to tell the engine to parallelize these HTTP requests. Once all of the responses have been retrieved, they are transformed into a String that is finally printed out.
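To make the "calling thread has already returned" point concrete, here is a rough JDK-only sketch of the same shape. It is an analogy built on `CompletableFuture`, not ParSeq code. The class name `ParallelPlanAnalogy`, the method `plan`, and the short placeholder bodies are all hypothetical. Uncompleted futures stand in for HTTP requests that are still in flight, so the example can show that the combined result exists as an object before any response has arrived.

```java
import java.util.concurrent.CompletableFuture;

public class ParallelPlanAnalogy {
  // Combines three pending page bodies into one report once all of them are available.
  public static CompletableFuture<String> plan(CompletableFuture<String> google,
                                               CompletableFuture<String> yahoo,
                                               CompletableFuture<String> bing) {
    return CompletableFuture.allOf(google, yahoo, bing)
        // All three inputs are complete at this point, so join() does not block.
        .thenApply(ignored -> "Google Page: " + google.join() + "\n"
            + "Yahoo Page: " + yahoo.join() + "\n"
            + "Bing Page: " + bing.join() + "\n");
  }

  public static void main(String[] args) {
    // Uncompleted futures play the role of requests that have been issued but not answered.
    CompletableFuture<String> google = new CompletableFuture<>();
    CompletableFuture<String> yahoo = new CompletableFuture<>();
    CompletableFuture<String> bing = new CompletableFuture<>();

    // Building the plan returns immediately; nothing has been fetched yet.
    CompletableFuture<String> report = plan(google, yahoo, bing);
    System.out.println("Report ready before responses? " + report.isDone());

    // Simulate the responses arriving in any order.
    yahoo.complete("<html>yahoo</html>");
    bing.complete("<html>bing</html>");
    google.complete("<html>google</html>");

    System.out.println("Report ready after responses? " + report.isDone());
    System.out.print(report.join());
  }
}
```

The order in which the three placeholder responses complete does not affect the report, which mirrors the idea that the combining step only runs once every input is available.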

We can do various transforms on the data we retrieved. Here's a very simple transform that sums the length of the 3 pages that were fetched:

    final Task<Integer> sumLengths =
        Task.par(google.map(String::length),
                 yahoo.map(String::length),
                 bing.map(String::length))
            .map("sum", (g, y, b) -> g + y + b);

The sumLengths task can be given to an engine for execution and its result value will be set to the sum of the length of the 3 fetched pages.
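As a small worked illustration of the arithmetic, the following JDK-only sketch maps three bodies to their lengths and adds them. It uses `CompletableFuture` as a stand-in and is not ParSeq code. `SumLengthsAnalogy` and the tiny placeholder bodies are hypothetical. Where the code above combines three results in a single step, this sketch combines them pairwise with `thenCombine`.

```java
import java.util.concurrent.CompletableFuture;

public class SumLengthsAnalogy {
  // Maps each body to its length, then adds the three lengths once all are known.
  public static CompletableFuture<Integer> sumLengths(CompletableFuture<String> google,
                                                      CompletableFuture<String> yahoo,
                                                      CompletableFuture<String> bing) {
    CompletableFuture<Integer> g = google.thenApply(String::length);
    CompletableFuture<Integer> y = yahoo.thenApply(String::length);
    CompletableFuture<Integer> b = bing.thenApply(String::length);
    return g.thenCombine(y, Integer::sum).thenCombine(b, Integer::sum);
  }

  public static void main(String[] args) {
    // Placeholder bodies of length 3, 2, and 1.
    CompletableFuture<Integer> sum = sumLengths(
        CompletableFuture.completedFuture("abc"),
        CompletableFuture.completedFuture("de"),
        CompletableFuture.completedFuture("f"));
    System.out.println("sum = " + sum.join()); // prints "sum = 6"
  }
}
```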

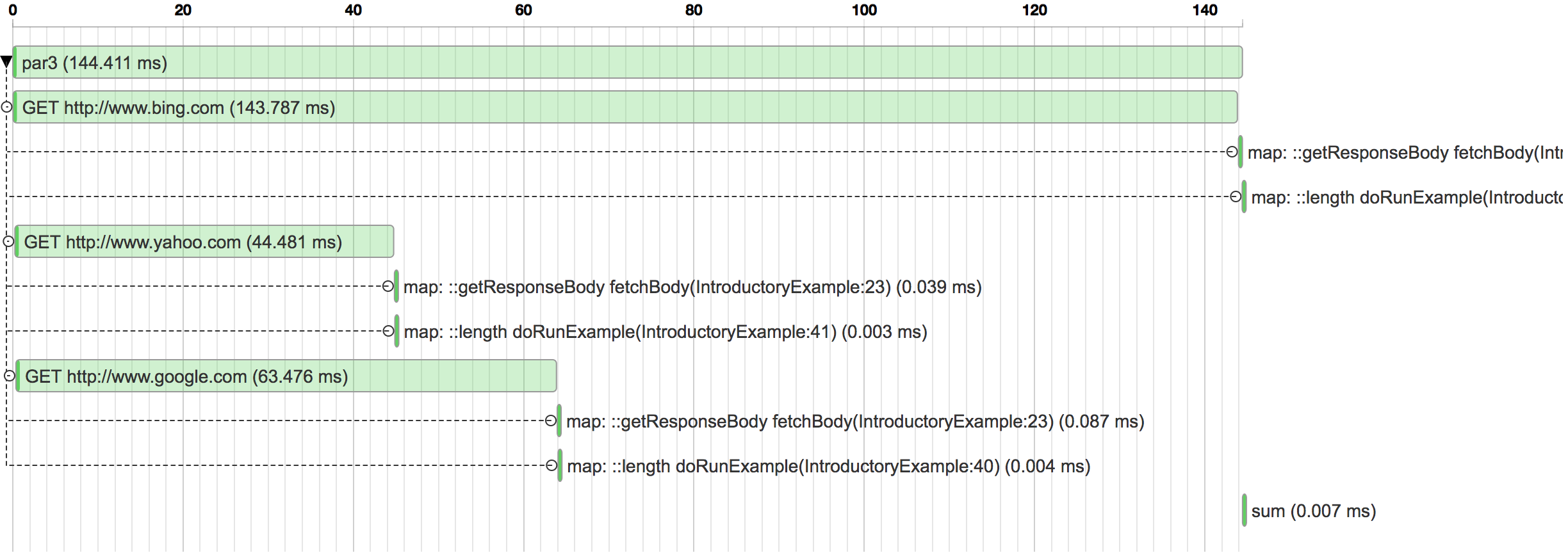

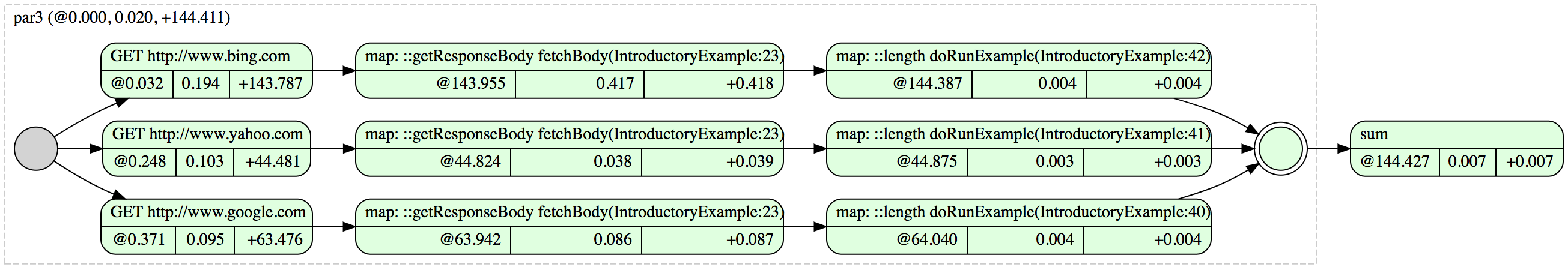

Notice that we added descriptions to tasks. e.g. map("sum", (g, y, b) -> g + y + b). Using ParSeq's trace visualization tools we can visualize execution of the plan.

Waterfall graph shows tasks execution in time (notice how all GET requests are executed in parallel):

Graphviz diagram best describes relationships between tasks:

For a more in-depth description of ParSeq, please visit the User's Guide.

For many more examples, please see the parseq-examples contrib project in the source code.

ParSeq is licensed under the terms of the Apache License, Version 2.0.