A simple project to scrape data from Zhihu.

This project provides a way to scrape data from the Zhihu site. We use it to collect a dataset for the Open Assistant LLM project (https://open-assistant.io/).

Use scrape_process.py to get started.

The project is written in Python. We primarily use:

- Playwright as the headless browser

- Ray for parallel processing

- BeautifulSoup for HTML extraction

- DuckDB for data persistence

Install all dependencies:

- Install the Python packages with pip

pip install -r requirements.txt

- Install Playwright

playwright install

- Run the following command to install the necessary system libraries if needed

sudo apt install libatk1.0-0 libatk-bridge2.0-0 libcups2 libatspi2.0-0 libxcomposite1 libxdamage1 libxfixes3 libxrandr2 libgbm1 libxkbcommon0 libpango-1.0-0 libcairo2 libasound2To understand how we scrape data from Zhi Hu,

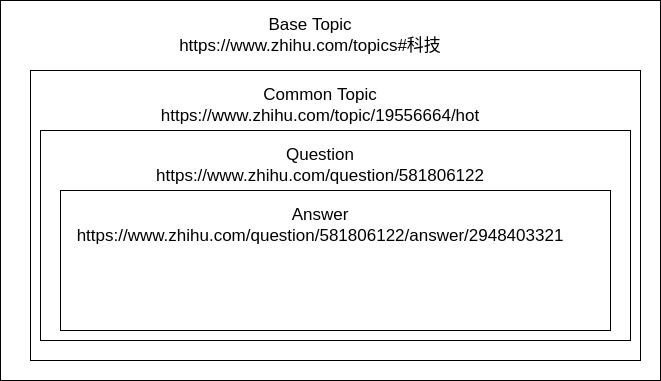

it is useful to define the hierarchy of question-answer categorization.

Currently we work with 4 levels of categorization.

Each level contains multiple instances of the next level: for example, 1 common topic might contain hundreds of unique questions, and 1 question can contain hundreds of unique answers. An example of such categorization is shown below.

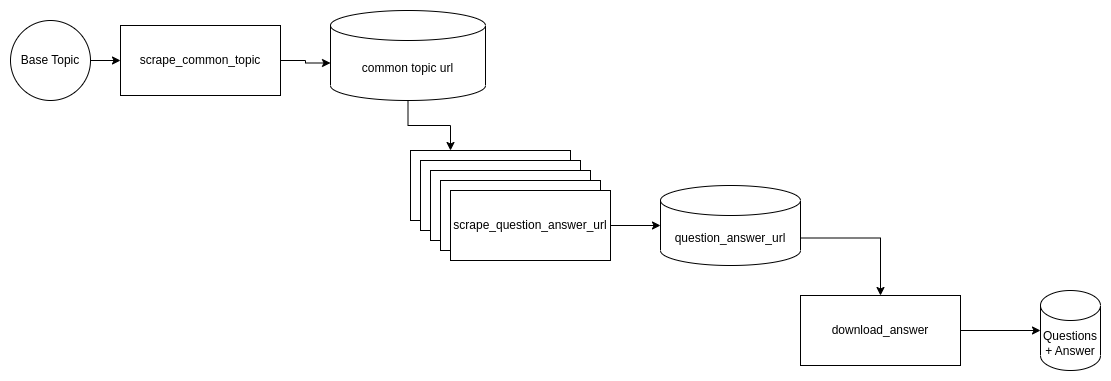
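The hierarchy described above can be pictured as nested records. The following is a minimal sketch, not the project's actual data model: the class and field names (`BaseTopic`, `CommonTopic`, `Question`, `Answer`, `count_answers`) are hypothetical, and it assumes the four levels are base topic, common topic, question, and answer.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Answer:
    answer_id: str  # hypothetical identifier, not a confirmed Zhihu field
    text: str


@dataclass
class Question:
    question_id: str  # hypothetical identifier
    title: str
    answers: List[Answer] = field(default_factory=list)


@dataclass
class CommonTopic:
    name: str
    questions: List[Question] = field(default_factory=list)


@dataclass
class BaseTopic:
    name: str
    common_topics: List[CommonTopic] = field(default_factory=list)


def count_answers(base: BaseTopic) -> int:
    """Count every answer reachable from one base topic."""
    return sum(
        len(question.answers)
        for topic in base.common_topics
        for question in topic.questions
    )
```

Each level only holds a list of the level below it, which mirrors the one-to-many relationship between topics, questions, and answers.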

Currently, scraping consists of 3 independent processes, each covering a different level of categorization. The processes are independent of each other and only require input from local files to start working. We provide a list of base topics to initialise the pipeline.

The first process, scrape_common_topic, scrapes the common topics for every base topic. All common topics are saved periodically to the local file system.

The second process is the heaviest in the system, as it uses a headless browser to scrape answer URLs. It also uses Ray for parallel processing to speed up the entire chain of processes. The question-answer URLs are saved periodically to the local file system.

The last process downloads the actual answers through API requests. Shadowsocks SOCKS5 proxies are used to bypass the API rate limit. The extracted answers are persisted to the database.
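Because each stage only needs local files as input, a single stage can be sketched as a function that reads records from one file, processes them, and saves results to another file in batches. This is a minimal sketch under assumptions: the JSON Lines format, the `batch_size` parameter, and the `run_stage`, `read_jsonl`, `append_jsonl`, and `fake_scrape` helpers are hypothetical and not taken from the project. The real scraping work (Playwright, Ray, API requests) is replaced by a placeholder function.

```python
import json
from pathlib import Path
from typing import Callable, Dict, Iterable, List


def read_jsonl(path: Path) -> Iterable[Dict]:
    """Yield one record per non-empty line of a JSON Lines file."""
    with path.open(encoding="utf-8") as handle:
        for line in handle:
            if line.strip():
                yield json.loads(line)


def append_jsonl(path: Path, records: List[Dict]) -> None:
    """Append records to a JSON Lines file, creating it if needed."""
    with path.open("a", encoding="utf-8") as handle:
        for record in records:
            handle.write(json.dumps(record, ensure_ascii=False) + "\n")


def run_stage(
    input_path: Path,
    output_path: Path,
    scrape: Callable[[Dict], List[Dict]],
    batch_size: int = 100,
) -> int:
    """Run one stage: read inputs, scrape each, save results periodically.

    Returns the number of records written.
    """
    buffer: List[Dict] = []
    written = 0
    for record in read_jsonl(input_path):
        buffer.extend(scrape(record))
        if len(buffer) >= batch_size:
            append_jsonl(output_path, buffer)
            written += len(buffer)
            buffer = []
    if buffer:  # flush whatever is left at the end
        append_jsonl(output_path, buffer)
        written += len(buffer)
    return written


def fake_scrape(record: Dict) -> List[Dict]:
    """Placeholder for real scraping: pretend each base topic has two common topics."""
    base = record["base_topic"]
    return [{"base_topic": base, "common_topic": f"{base}-{i}"} for i in range(2)]
```

With this layout, the output file of one stage can serve as the input file of the next, so a stage can be restarted or scaled without touching the others.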

- Decouple all scraping processes

- Add Ray for parallel processing

- Add Shadowsocks for API rate limit bypass

- Add DuckDB for persistence

- Scrape comment sections

- Scale up scraping processes

Distributed under the MIT License. See LICENSE.txt for more information.