Tongze Wang, Xiaohui Xie, Wenduo Wang, Chuyi Wang, Youjian Zhao, Yong Cui

ICNP 2024 (arXiv paper)

- Create a Python environment

conda create -n NetMamba python=3.10.13
conda activate NetMamba

- Install PyTorch 2.1.1+cu121 (the version used in our experiments)

pip install torch==2.1.1 torchvision==0.16.1 --index-url https://download.pytorch.org/whl/cu121

- Install Mamba 1.1.1

cd mamba-1p1p1
pip install -e .

- Install other dependent libraries

pip install -r requirements.txt
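As a quick sanity check after installation, the sketch below compares the installed package versions against the ones pinned in the steps above. It uses only the standard library. `check_versions` is a hypothetical helper that is not part of this repository, and the distribution name `mamba-ssm` for the bundled Mamba 1.1.1 is an assumption.

```python
from importlib import metadata

# Versions pinned in the installation steps above.
# ASSUMPTION: "mamba-ssm" is the distribution name of the bundled Mamba 1.1.1.
EXPECTED = {
    "torch": "2.1.1",
    "torchvision": "0.16.1",
    "mamba-ssm": "1.1.1",
}


def check_versions(expected=EXPECTED):
    """Return {package: (expected, installed_or_None, matches)}."""
    report = {}
    for name, want in expected.items():
        try:
            have = metadata.version(name)
        except metadata.PackageNotFoundError:
            have = None  # package is not installed in this environment
        # Local build tags such as "+cu121" are ignored for the comparison.
        ok = have is not None and have.split("+")[0] == want
        report[name] = (want, have, ok)
    return report


if __name__ == "__main__":
    for name, (want, have, ok) in check_versions().items():
        status = "OK" if ok else "MISMATCH"
        print(f"{name}: expected {want}, found {have} [{status}]")
```

A `MISMATCH` line does not necessarily mean the setup is broken, only that it differs from the versions the experiments were run on.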

For convenience, you can download the processed datasets used in our experiments from Google Drive.

Each dataset is organized into the following structure:

.
|-- train
|   |-- Category 1
|   |   |-- Sample 1
|   |   |-- Sample 2
|   |   |-- ...
|   |   `-- Sample M
|   |-- Category 2
|   |-- ...
|   `-- Category N
|-- test
`-- valid
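To confirm that a downloaded or custom dataset matches this layout, a small script along the following lines can count the samples per category in each split. This is a minimal sketch: `summarize_dataset` is a hypothetical helper, not part of the repository, and it assumes every non-hidden entry inside a category folder is one sample.

```python
from pathlib import Path


def summarize_dataset(root):
    """Return {split: {category: sample_count}} for a dataset directory."""
    root = Path(root)
    summary = {}
    for split in ("train", "valid", "test"):
        split_dir = root / split
        if not split_dir.is_dir():
            continue  # tolerate a missing split instead of failing
        summary[split] = {
            # ASSUMPTION: each non-hidden entry in a category folder is one sample.
            cat.name: sum(1 for p in cat.iterdir() if not p.name.startswith("."))
            for cat in sorted(split_dir.iterdir())
            if cat.is_dir()
        }
    return summary
```

Calling `summarize_dataset("<your-dataset-dir>")` returns a nested dictionary, which makes it easy to spot an empty category or a split that is missing entirely.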

If you'd like to generate customized datasets, please refer to the preprocessing scripts provided in dataset. Note that you will need to update several file paths in those scripts to match your setup.

- Run pre-training:

CUDA_VISIBLE_DEVICES=0 python src/pre-train.py \
    --batch_size 128 \
    --blr 1e-3 \
    --steps 150000 \
    --mask_ratio 0.9 \
    --data_path <your-dataset-dir> \
    --output_dir <your-output-dir> \
    --log_dir <your-output-dir> \
    --model net_mamba_pretrain \
    --no_amp

- Run fine-tuning (including evaluation):

CUDA_VISIBLE_DEVICES=0 python src/fine-tune.py \
    --blr 2e-3 \
    --epochs 120 \
    --nb_classes <num-class> \
    --finetune <pretrain-checkpoint-path> \
    --data_path <your-dataset-dir> \
    --output_dir <your-output-dir> \
    --log_dir <your-output-dir> \
    --model net_mamba_classifier \
    --no_amp

Note that you should replace each placeholder in the < > format with your actual value.
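Filling in the placeholders by hand is easy to get wrong, so the sketch below assembles the fine-tuning command from a dataset directory. `build_finetune_command` is a hypothetical helper, not part of the repository. It assumes that the value for `--nb_classes` equals the number of category folders under `train`; the remaining flags and values are copied from the fine-tuning command above.

```python
import shlex
from pathlib import Path


def build_finetune_command(data_path, checkpoint, output_dir, gpu="0"):
    """Return the fine-tuning command as a single shell-safe string."""
    # ASSUMPTION: one class per category folder under <data_path>/train.
    train_dir = Path(data_path) / "train"
    nb_classes = sum(1 for p in train_dir.iterdir() if p.is_dir())
    args = [
        "python", "src/fine-tune.py",
        "--blr", "2e-3",
        "--epochs", "120",
        "--nb_classes", str(nb_classes),
        "--finetune", str(checkpoint),
        "--data_path", str(data_path),
        "--output_dir", str(output_dir),
        "--log_dir", str(output_dir),
        "--model", "net_mamba_classifier",
        "--no_amp",
    ]
    # shlex.join quotes any argument that contains spaces or shell metacharacters.
    return f"CUDA_VISIBLE_DEVICES={gpu} " + shlex.join(args)
```

Printing the returned string and pasting it into a shell keeps the run reproducible while avoiding a mismatch between the class count and the dataset.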

The pre-trained NetMamba checkpoint is available for download from our Hugging Face repo. If you need any other type of checkpoint, please contact us via email (wangtz23@mails.tsinghua.edu.cn).

@misc{wang2024netmamba,

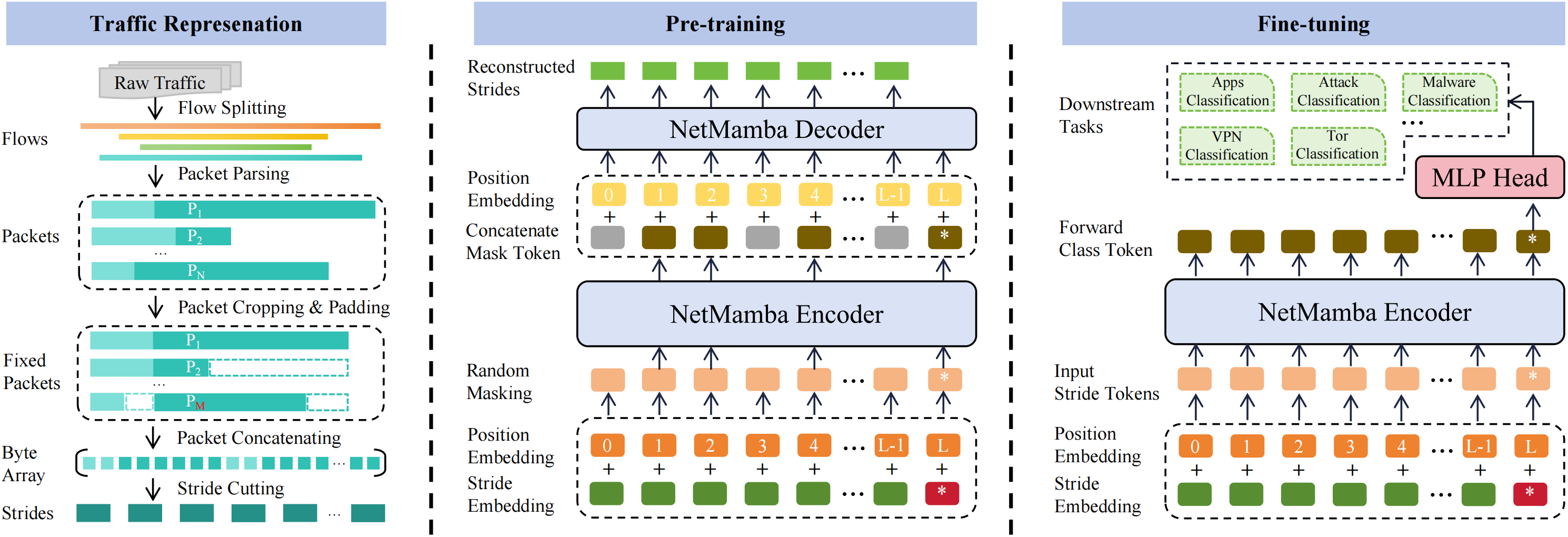

title={NetMamba: Efficient Network Traffic Classification via Pre-training Unidirectional Mamba},

author={Tongze Wang and Xiaohui Xie and Wenduo Wang and Chuyi Wang and Youjian Zhao and Yong Cui},

year={2024},

eprint={2405.11449},

archivePrefix={arXiv},

primaryClass={cs.LG}

}