This repository is a collection of tutorials and practice exercises related to Generative Adversarial Networks (GANs).

01 to 07 are based on the official TensorFlow generative tutorials.

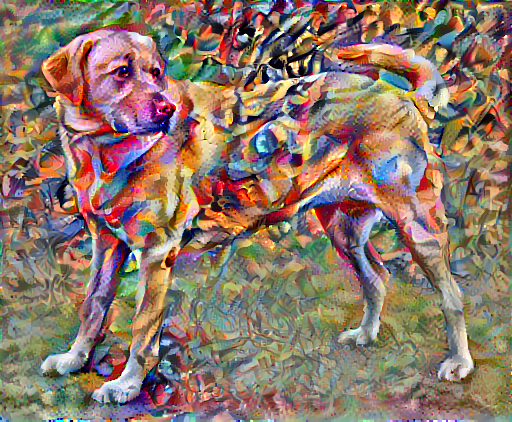

01: Neural Style Transfer --- render an image in a chosen artistic style, based on the paper A Neural Algorithm of Artistic Style.
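As a rough illustration of the style term used in this family of methods, the sketch below computes Gram matrices of feature maps and compares them. It is written in plain Python rather than TensorFlow, and the function names, the flat-list feature layout, and the normalization by the number of positions are assumptions made for the example, not the notebook's code.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of a feature map.

    features: list of C channels, each a flat list of N activations
    (hypothetical layout chosen for this sketch).
    """
    n = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
            for fi in features]


def style_loss(generated, style):
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(generated), gram_matrix(style)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2
               for i in range(c) for j in range(c)) / (c * c)
```

In a full pipeline the features would come from several layers of a pretrained network, and this term would be combined with a content loss.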

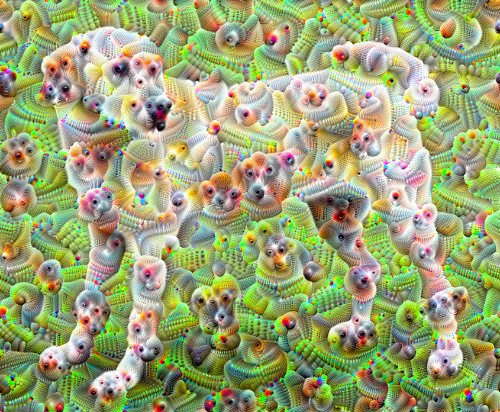

02: Deep Dream --- give an image a dream-like style by amplifying intermediate feature activations, based on the blog post Inceptionism: Going Deeper into Neural Networks.

03: DCGAN --- train a generative adversarial network to generate handwritten digits.
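The adversarial objective behind this kind of training can be sketched as two cross-entropy losses. The version below is a minimal plain-Python sketch that assumes the discriminator outputs probabilities (the TensorFlow tutorial works with logits instead); the helper names are hypothetical.

```python
import math


def bce(target, prob, eps=1e-7):
    """Binary cross-entropy for one prediction, clipped for stability."""
    p = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(real_probs, fake_probs):
    """Real images should score 1, generated images should score 0."""
    real = sum(bce(1.0, p) for p in real_probs) / len(real_probs)
    fake = sum(bce(0.0, p) for p in fake_probs) / len(fake_probs)
    return real + fake


def generator_loss(fake_probs):
    """The generator is rewarded when its images are scored as real."""
    return sum(bce(1.0, p) for p in fake_probs) / len(fake_probs)
```

The two losses pull in opposite directions on the same discriminator scores, which is what makes the training adversarial.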

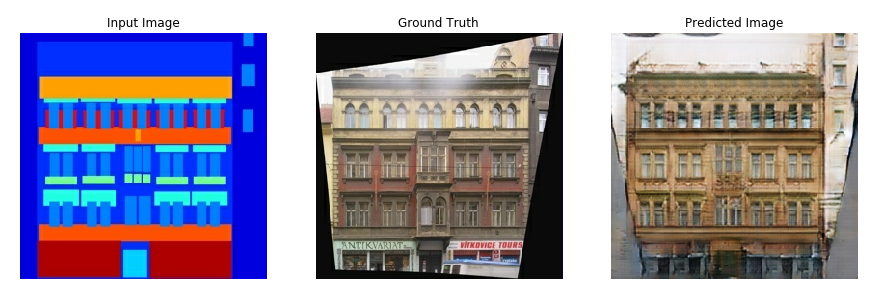

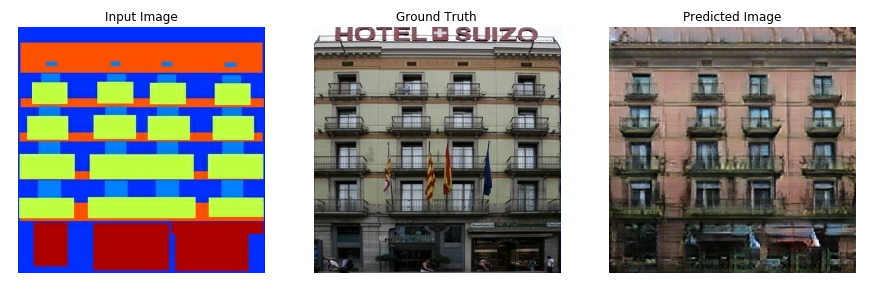

04: Pix2Pix --- pixel-wise image-to-image translation with conditional GANs, as described in Image-to-Image Translation with Conditional Adversarial Networks.
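The generator objective in the Pix2Pix paper combines an adversarial term with an L1 distance to the paired target image, weighted by a factor of 100. A minimal plain-Python sketch follows, assuming flat pixel lists and discriminator probabilities; the function and parameter names are hypothetical.

```python
import math


def pix2pix_generator_loss(disc_fake_probs, generated, target, lam=100.0):
    """Adversarial term plus lam times the mean absolute pixel error."""
    # Adversarial term: generated images should be scored as real.
    adv = -sum(math.log(max(p, 1e-7)) for p in disc_fake_probs) / len(disc_fake_probs)
    # Reconstruction term: stay close to the paired ground-truth image.
    l1 = sum(abs(g - t) for g, t in zip(generated, target)) / len(target)
    return adv + lam * l1
```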

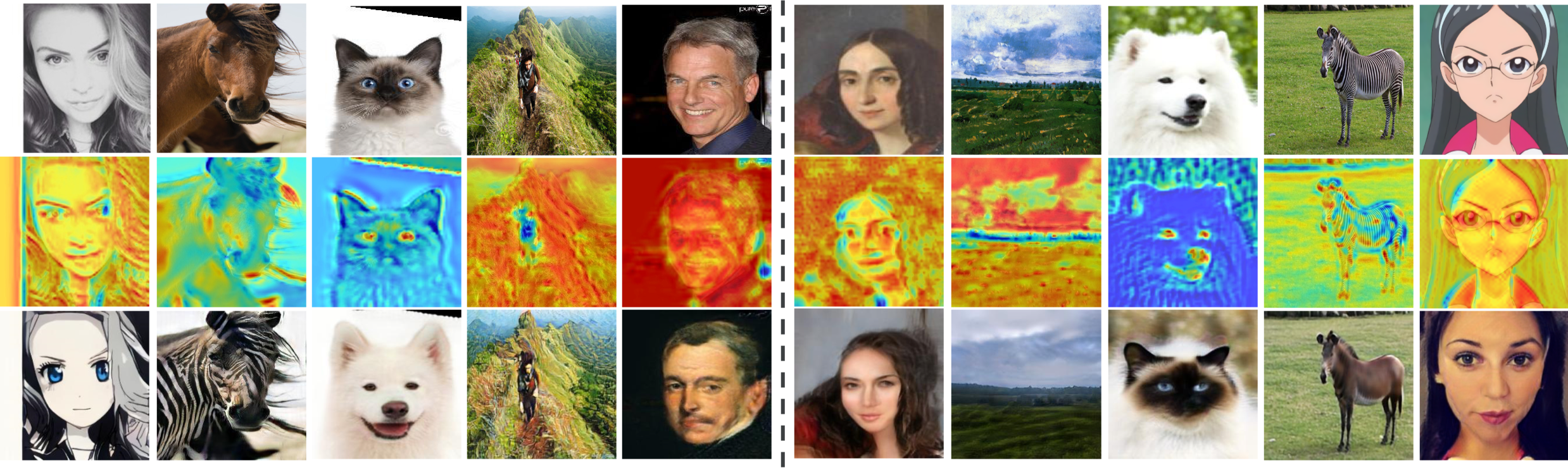

05: CycleGAN --- image style transfer (without paired inputs) based on Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks.
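Because there are no paired targets, CycleGAN relies on a cycle-consistency term: translating an image to the other domain and back should return the original. The sketch below shows only that term in plain Python; `g` and `f` stand in for the two generators and are hypothetical callables on flat pixel lists, and the weight of 10 follows the paper's default.

```python
def l1(a, b):
    """Mean absolute difference between two flat pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x, y, g, f, lam=10.0):
    """x -> g -> f should recover x, and y -> f -> g should recover y."""
    forward = l1(f(g(x)), x)
    backward = l1(g(f(y)), y)
    return lam * (forward + backward)
```

With two mappings that invert each other the loss is zero; any information lost in a round trip is penalized.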

06: FGSM --- learn to fool a neural network using the Fast Gradient Sign Method.
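The attack perturbs the input by a small step `eps` in the direction of the sign of the loss gradient with respect to the input. To keep the example self-contained, the sketch below uses a logistic-regression classifier with an analytic gradient instead of a deep network; the model and all names are assumptions for illustration.

```python
import math


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, eps):
    """One FGSM step against p = sigmoid(w . x + b) with cross-entropy loss."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    # For this model, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    # Step in the direction that increases the loss.
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


w, b = [1.0, -2.0], 0.0
x_adv = fgsm([0.5, 0.2], 1.0, w, b, eps=0.1)
```

In this toy setup the clean input has a positive score (class 1), while the perturbed input's score `w . x_adv + b` turns negative, so the prediction flips even though each feature moved by only 0.1.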

07: AutoEncoder --- latent-space-based image reconstruction and generation with an autoencoder (AE) and a variational autoencoder (VAE). (Addition: take a look at conditional VAE.)
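Two pieces distinguish a VAE from a plain autoencoder: sampling the latent code with the reparameterization trick, and a KL penalty that pulls the encoder distribution toward a standard normal. The plain-Python sketch below uses the closed-form KL for a diagonal Gaussian, which may differ from how the notebook estimates it; the function names are hypothetical.

```python
import math
import random


def reparameterize(mean, logvar, rng=random):
    """Sample z = mean + sigma * eps with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, logvar)]


def kl_to_standard_normal(mean, logvar):
    """KL( N(mean, exp(logvar)) || N(0, 1) ), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mean, logvar))
```

Writing the sample as a deterministic function of `mean`, `logvar`, and external noise is what lets gradients flow back into the encoder.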

08: U-GAT-IT --- improved unpaired image style transfer based on Unsupervised Generative Attentional Networks with Adaptive Layer-Instance Normalization for Image-to-Image Translation (ICLR 2020).
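Adaptive Layer-Instance Normalization (AdaLIN) blends instance-normalized and layer-normalized activations with a mixing weight `rho` in [0, 1], then applies a scale and shift. The sketch below handles a single sample in plain Python. Using a scalar `rho` and passing `gamma` and `beta` in directly are simplifications assumed here; in the paper `rho` is learned and the scale and shift are computed from the attention features.

```python
import math


def adalin(x, gamma, beta, rho, eps=1e-5):
    """x: list of C channels, each a flat list of activations (one sample)."""
    flat = [v for ch in x for v in ch]
    # Layer statistics: over all channels and positions.
    ln_mean = sum(flat) / len(flat)
    ln_var = sum((v - ln_mean) ** 2 for v in flat) / len(flat)
    out = []
    for c, ch in enumerate(x):
        # Instance statistics: over the positions of this channel only.
        in_mean = sum(ch) / len(ch)
        in_var = sum((v - in_mean) ** 2 for v in ch) / len(ch)
        row = []
        for v in ch:
            a_in = (v - in_mean) / math.sqrt(in_var + eps)
            a_ln = (v - ln_mean) / math.sqrt(ln_var + eps)
            row.append(gamma[c] * (rho * a_in + (1.0 - rho) * a_ln) + beta[c])
        out.append(row)
    return out
```

Setting `rho=1.0` gives pure instance normalization and `rho=0.0` gives pure layer normalization; values in between interpolate the two.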