- Based on `TensorRT-v8.2`, accelerates object tracking with `YOLOv5-v5.0` + `deepsort`;
- Deployed on the `Jetson nano`;
- It also works on `Linux x86_64`: you only need to modify all the `Makefile` and `CMakeLists.txt` files in this project. The paths to the TensorRT and OpenCV header files and library files must be changed to match your system; the CUDA path generally does not need to change, but it is best to confirm it.

- The system image flashed on the `Jetson nano` is `Jetpack 4.6.1`, and the original environment of `jetpack` is as follows:

| CUDA | cuDNN | TensorRT | OpenCV |
|---|---|---|---|
| 10.2 | 8.2 | 8.2.1 | 4.1.1 |

- Install libraries:

  - Eigen

    ```bash
    apt install libeigen3-dev
    ```

Convert the YOLO detection model and the ReID feature extraction model into serialized TensorRT files with the suffix `.plan` (my own convention; `.engine` or any other suffix also works).

- Model download link: https://pan.baidu.com/s/1YG-A8dXL4zWvecsD6mW2ug
- Extraction code: y2oz

Download and unzip. The files in the directory are described as follows:

```
Deepsort
│
├── ReID                # This directory stores the model of the ReID feature extraction network
│   ├── ckpt.t7         # Official PyTorch format model file
│   └── deepsort.onnx   # ONNX format model file exported from ckpt.t7
│
└── YOLOv5-v5.0         # This directory stores the model of the YOLOv5 object detection network
    ├── yolov5s.pt      # Official PyTorch format model file
    └── para.wts        # Model file in wts format exported from yolov5s.pt
```

- Convert the above `yolov5s.pt` to `model.plan`, or `para.wts` to `model.plan`;
- The specific conversion method can be found at the link below, which is another project I published:

https://github.com/emptysoal/TensorRT-v8-YOLOv5-v5.0/tree/main

Note: When using it, remember to modify the `Makefile`: the paths to the TensorRT and OpenCV header files and library files must be replaced with those on your own Jetson nano.

After completion, you will get `model.plan`, the TensorRT serialized model file of the YOLOv5 object detection network.

- Convert the above `ckpt.t7` to `deepsort.plan`, or `deepsort.onnx` to `deepsort.plan`;
- Follow these steps:

```bash
# enter the directory reid_torch2trt in this project
cd reid_torch2trt

# run the following command
# if the Jetson nano does not have PyTorch, this step can be run in any environment with PyTorch
python onnx_export.py
# this command converts ckpt.t7 to deepsort.onnx
# however, deepsort.onnx is already provided, so you can skip this step and start from the following

# execute sequentially
make
./trt_export
# these commands convert deepsort.onnx to deepsort.plan
```

After completion, you will get `deepsort.plan`, the TensorRT serialized model file of the feature extraction network.
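Both `.plan` files are serialized TensorRT engines: at runtime they are read back as raw bytes and handed to `nvinfer1::IRuntime::deserializeCudaEngine(blob, size)`. The sketch below covers only the file I/O around that call, so it runs without TensorRT installed; `readPlanFile` and `writePlanFile` are hypothetical helper names, and the project's own loading code may be organized differently.

```cpp
#include <cstddef>
#include <fstream>
#include <iterator>
#include <stdexcept>
#include <string>
#include <vector>

// Reads a serialized TensorRT engine (.plan, .engine, ...) into memory.
// In the real program these bytes would be passed to
// nvinfer1::IRuntime::deserializeCudaEngine(bytes.data(), bytes.size()).
std::vector<char> readPlanFile(const std::string& path) {
    std::ifstream file(path, std::ios::binary);
    if (!file) {
        throw std::runtime_error("cannot open plan file: " + path);
    }
    return std::vector<char>(std::istreambuf_iterator<char>(file),
                             std::istreambuf_iterator<char>());
}

// Writes a serialized engine to disk, e.g. the data()/size() of the
// nvinfer1::IHostMemory returned by IBuilder::buildSerializedNetwork.
void writePlanFile(const std::string& path, const void* data, std::size_t size) {
    std::ofstream file(path, std::ios::binary);
    if (!file) {
        throw std::runtime_error("cannot create plan file: " + path);
    }
    file.write(static_cast<const char*>(data), static_cast<std::streamsize>(size));
}
```

Because the file is just the engine bytes, the suffix does not matter to TensorRT. A serialized engine is, however, tied to the GPU and TensorRT version it was built with, which is why `./trt_export` is run on the Jetson nano itself even though the ONNX export can be done elsewhere.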

- Compile and run the object tracking code;
- Follow these steps:

```bash
# enter the directory yolov5-deepsort-tensorrt in this project
cd yolov5-deepsort-tensorrt
mkdir resources

# copy the 2 plan files converted above to the directory resources
# ({TensorRT-v8-YOLOv5-v5.0} stands for the directory of that project)
cp {TensorRT-v8-YOLOv5-v5.0}/model.plan ./resources
cp ../reid_torch2trt/deepsort.plan ./resources

mkdir test_videos  # put the test video file into it and name it demo.mp4
vim src/main.cpp   # you can modify some configuration according to your requirements

mkdir build
cd build
cmake ..
make
```

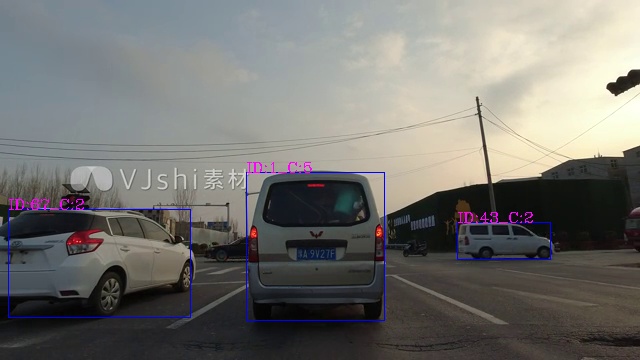

```bash
./yolosort  # then you can see the tracking effect
```

This project mainly references the following projects:

- https://github.com/RichardoMrMu/yolov5-deepsort-tensorrt

- https://blog.csdn.net/weixin_42264234/article/details/120152117

- The code of this project mostly references the content of the above projects;
- But I have made a lot of changes, as follows:

1. The `ReID` model in the reference project outputs a 512-dimensional vector, but its code uses 256 dimensions; I corrected this.
2. For the `YOLOv5-v5.0` object detection network:

   2.1 The TensorRT model conversion was done with my own project, and pre-processing is accelerated with CUDA.

   2.2 The TensorRT inference of YOLOv5 is encapsulated in a C++ class, which also makes it convenient to use in other projects.

3. Category filtering is implemented: you can set the categories you want to track in `main.cpp` and ignore those you do not care about.
4. To adapt to TensorRT version 8, some changes have been made to the model inference code.
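The 512-dimension correction matters because DeepSORT associates tracks with detections partly by the cosine distance between ReID features, so the feature length assumed in code has to equal the engine's output size; with a 256 length on a 512-value output, each comparison would likely use only part of the feature. A minimal sketch, assuming features are stored as fixed-size `float` arrays and L2-normalized before comparison as in the original DeepSORT cosine metric; `Feature`, `l2Normalize` and `cosineDistance` are hypothetical names.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

// Feature length must match the ReID engine's output (512 per this README).
constexpr std::size_t kFeatureDim = 512;
using Feature = std::array<float, kFeatureDim>;

// Scales a feature to unit L2 norm in place; an all-zero vector is left as is.
void l2Normalize(Feature& f) {
    float sumSq = 0.0f;
    for (float v : f) sumSq += v * v;
    const float norm = std::sqrt(sumSq);
    if (norm > 0.0f) {
        for (float& v : f) v /= norm;
    }
}

// Cosine distance of two unit-norm features: 1 - dot(a, b).
// 0 means same direction, 1 orthogonal, 2 opposite.
float cosineDistance(const Feature& a, const Feature& b) {
    float dot = 0.0f;
    for (std::size_t i = 0; i < kFeatureDim; ++i) dot += a[i] * b[i];
    return 1.0f - dot;
}
```

Tying the dimension to a single `constexpr` makes a mismatch with the engine a one-line fix instead of a scattered magic number.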
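Item 2.1 mentions CUDA-accelerated pre-processing. YOLOv5 typically letterboxes each frame: one uniform scale fits the whole frame inside the network input, and the leftover border is padded. This CPU sketch computes that geometry and maps predicted coordinates back to the original frame; the struct and function names are hypothetical, and the project's CUDA kernel may round or place the padding differently.

```cpp
#include <algorithm>

// Letterbox geometry: one uniform scale that fits the whole frame inside
// the network input, with the leftover border split evenly on both sides.
struct Letterbox {
    float scale;  // source pixels -> network-input pixels
    float padX;   // padding on the left, in network-input pixels
    float padY;   // padding on the top, in network-input pixels
};

Letterbox computeLetterbox(int srcW, int srcH, int dstW, int dstH) {
    const float scale = std::min(static_cast<float>(dstW) / static_cast<float>(srcW),
                                 static_cast<float>(dstH) / static_cast<float>(srcH));
    const float padX = (static_cast<float>(dstW) - static_cast<float>(srcW) * scale) / 2.0f;
    const float padY = (static_cast<float>(dstH) - static_cast<float>(srcH) * scale) / 2.0f;
    return {scale, padX, padY};
}

// Maps a point predicted on the network input back to source-frame pixels.
float toSourceX(const Letterbox& lb, float x) { return (x - lb.padX) / lb.scale; }
float toSourceY(const Letterbox& lb, float y) { return (y - lb.padY) / lb.scale; }
```

For example, a 1280x720 frame fed to a 640x640 input gets `scale = 0.5` and 140 pixels of padding above and below the resized 640x360 image.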
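The category filtering described above can be as simple as dropping detections whose class ID is not in a configured set before they reach the tracker. This sketch assumes a hypothetical `Detection` struct using the 80-class COCO ordering of YOLOv5 (0 is `person`, 2 is `car`); the real setting lives in `src/main.cpp` and may look different. Treating an empty set as "track everything" is a choice made for this sketch only.

```cpp
#include <algorithm>
#include <iterator>
#include <unordered_set>
#include <vector>

// Hypothetical detection record for illustration; not the project's type.
struct Detection {
    float x1, y1, x2, y2;  // box corners in source-frame pixels
    float score;           // detection confidence
    int classId;           // class index in the 80-class COCO ordering
};

// Keeps detections whose class is in `wanted`.
// An empty set keeps all detections (assumption of this sketch).
std::vector<Detection> filterByClass(const std::vector<Detection>& dets,
                                     const std::unordered_set<int>& wanted) {
    if (wanted.empty()) return dets;
    std::vector<Detection> kept;
    std::copy_if(dets.begin(), dets.end(), std::back_inserter(kept),
                 [&wanted](const Detection& d) { return wanted.count(d.classId) > 0; });
    return kept;
}
```

Filtering before the ReID step also saves feature-extraction work on objects that would be ignored anyway.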