This repository contains the PyTorch implementation of the CVPR 2022 paper:

Domain Generalization via Shuffled Style Assembly for Face Anti-Spoofing

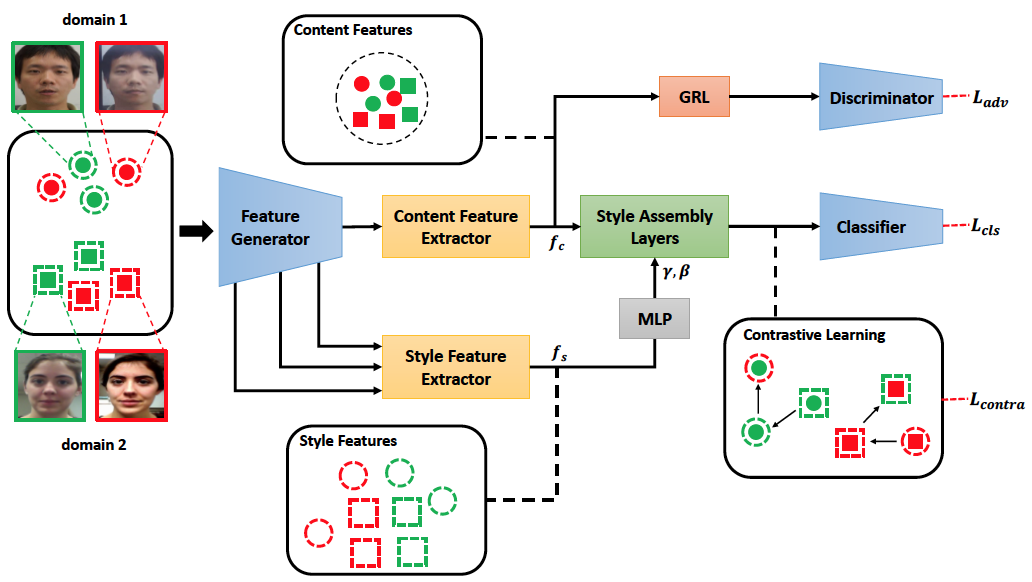

Abstract: With diverse presentation attacks emerging continually, generalizable face anti-spoofing (FAS) has drawn growing attention. Most existing methods implement domain generalization (DG) on the complete representations. However, different image statistics may have unique properties for FAS tasks. In this work, we separate the complete representation into content and style ones. A novel Shuffled Style Assembly Network (SSAN) is proposed to extract and reassemble different content and style features for a stylized feature space. Then, to obtain a generalized representation, a contrastive learning strategy is developed to emphasize liveness-related style information while suppressing the domain-specific one. Finally, the representations of the correct assemblies are used to distinguish between live and spoofed faces during inference. On the other hand, despite the decent performance, there still exists a gap between academia and industry, due to the difference in data quantity and distribution. Thus, a new large-scale benchmark for FAS is built to further evaluate the performance of algorithms in real-world settings. Both qualitative and quantitative results on existing and proposed benchmarks demonstrate the effectiveness of our methods.

- Linux or macOS

- CPU or NVIDIA GPU with CUDA and cuDNN

- Python 3.x

- PyTorch 1.1.0 or higher, torchvision 0.3.0 or higher

Place the datasets under a single data directory, organized as follows:

    └── YOUR_Data_Dir
        ├── OULU_NPU
        ├── MSU_MFSD
        ├── CASIA_MFSD
        ├── REPLAY_ATTACK
        ├── SiW
        └── ...
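Before running the code, it can help to confirm that the expected dataset folders are present. The sketch below is not part of the repository: `EXPECTED_DATASETS` and `missing_datasets` are hypothetical names, and the folder names are simply copied from the layout above.

```python
from pathlib import Path

# Folder names copied from the layout above. This helper is a hypothetical
# convenience and is not part of the repository.
EXPECTED_DATASETS = ["OULU_NPU", "MSU_MFSD", "CASIA_MFSD", "REPLAY_ATTACK", "SiW"]


def missing_datasets(data_dir, expected=EXPECTED_DATASETS):
    """Return the expected dataset folders that are absent under data_dir."""
    root = Path(data_dir)
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    # "YOUR_Data_Dir" is the placeholder used in the layout above.
    missing = missing_datasets("YOUR_Data_Dir")
    if missing:
        print("Missing dataset folders:", ", ".join(missing))
    else:
        print("All expected dataset folders found.")
```

An empty list means every folder in `expected` exists; pass a shorter `expected` list if only some of the datasets are needed.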

`python -u solver.py`

Four datasets are utilized to evaluate the effectiveness of our method in different cross-domain scenarios: OULU-NPU (denoted as O), CASIA-MFSD (denoted as C), Replay-Attack (denoted as I), MSU-MFSD (denoted as M).

We use a leave-one-out (LOO) strategy: three datasets are randomly selected for training, and the remaining one is used for testing.
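The leave-one-out protocols can be enumerated with a few lines of Python. This is a minimal sketch using the dataset abbreviations defined above; the function name `leave_one_out_splits` and the printed `A&B&C to D` labels are illustrative assumptions, not code from the repository.

```python
# Dataset abbreviations as defined above: OULU-NPU, CASIA-MFSD,
# Replay-Attack, MSU-MFSD.
DATASETS = ["O", "C", "I", "M"]


def leave_one_out_splits(datasets=DATASETS):
    """Yield (train_list, test_name) pairs, holding out each dataset once."""
    for held_out in datasets:
        train = [d for d in datasets if d != held_out]
        yield train, held_out


if __name__ == "__main__":
    for train, test in leave_one_out_splits():
        # Label format is an illustrative choice.
        print("&".join(train), "to", test)
```

Each of the four datasets serves as the unseen test domain exactly once, and the other three form the training set.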

We use the benchmark settings of the Large-Scale FAS Benchmarks.

All faces are detected with MTCNN and then cropped.
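How the detector output is turned into a crop is not specified here. As one possible sketch, assuming the detector returns a box as `(x1, y1, x2, y2)` corner coordinates in pixels, the box can be clamped to the image bounds before cropping. The helper `clamp_box` is hypothetical and not part of the repository.

```python
def clamp_box(box, img_width, img_height):
    """Clamp an (x1, y1, x2, y2) box to the image and return integer coords.

    Assumes the face detector yields corner coordinates in pixels; that
    format is an assumption, not something this README specifies.
    """
    x1, y1, x2, y2 = box
    left = max(0, int(round(x1)))
    top = max(0, int(round(y1)))
    right = min(img_width, int(round(x2)))
    bottom = min(img_height, int(round(y2)))
    if right <= left or bottom <= top:
        raise ValueError("box lies outside the image")
    return left, top, right, bottom
```

The returned tuple follows the `(left, upper, right, lower)` order that Pillow's `Image.crop` expects, so it can be passed to that method directly.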

Please cite our paper if the code is helpful to your research.

    @inproceedings{wang2022domain,
      author = {Wang, Zhuo and Wang, Zezheng and Yu, Zitong and Deng, Weihong and Li, Jiahong and Li, Size and Wang, Zhongyuan},
      title = {Domain Generalization via Shuffled Style Assembly for Face Anti-Spoofing},
      booktitle = {CVPR},
      year = {2022}
    }