Code for the paper "Decoupling segmental and prosodic cues of non-native speech through vector quantization"

Waris Quamer, Anurag Das, Ricardo Gutierrez-Osuna

See details and audio samples here.

- Install ffmpeg.

- Install Kaldi

- Install PyKaldi

- Install the required packages using the `environment.yml` file.

- Download the pretrained TDNN-F model, extract it, and set `PRETRAIN_ROOT` in `kaldi_scripts/extract_features_kaldi.sh` to the pretrained model directory.

- Acoustic Model: LibriSpeech. Download the pretrained TDNN-F acoustic model here.

- You also need to set `KALDI_ROOT` and `PRETRAIN_ROOT` in `kaldi_scripts/extract_features_kaldi.sh` accordingly.

- Speaker Encoder: LibriSpeech, see here for detailed training process.

- Vector Quantization: ARCTIC and L2-ARCTIC, see here for detailed training process.

- Synthesizer (i.e., Seq2seq model): ARCTIC and L2-ARCTIC. Please see here for a merged version.

- Vocoder (HiFiGAN): LibriSpeech (Training code to be updated).

All the pretrained models are available here (to be updated).
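Since feature extraction depends on `KALDI_ROOT` and `PRETRAIN_ROOT` being set in `kaldi_scripts/extract_features_kaldi.sh`, a quick check before running it may save a failed run. The following is a minimal sketch, not part of this repository. It assumes the script assigns both variables with plain `NAME=value` lines (optionally prefixed by `export`) and that the values are literal paths; the helper names `read_shell_vars` and `check_kaldi_config` are hypothetical.

```python
import re
from pathlib import Path

# Assumption: the script sets these with simple `NAME=value` assignments.
REQUIRED_VARS = ("KALDI_ROOT", "PRETRAIN_ROOT")


def read_shell_vars(script_text, names=REQUIRED_VARS):
    """Return {name: value} for simple `NAME=value` lines found in the script text."""
    found = {}
    for name in names:
        match = re.search(
            rf"^\s*(?:export\s+)?{name}=(.*)$", script_text, flags=re.MULTILINE
        )
        if match:
            # Drop a trailing comment, surrounding whitespace, and quotes.
            value = match.group(1).split("#", 1)[0].strip().strip("\"'")
            found[name] = value
    return found


def check_kaldi_config(script_path="kaldi_scripts/extract_features_kaldi.sh"):
    """Return a list of human-readable problems (empty if everything looks fine)."""
    path = Path(script_path)
    if not path.is_file():
        return [f"{script_path} not found"]
    values = read_shell_vars(path.read_text())
    problems = []
    for name in REQUIRED_VARS:
        value = values.get(name, "")
        if not value:
            problems.append(f"{name} is not set in {script_path}")
        elif not Path(value).expanduser().is_dir():
            problems.append(f"{name}={value} is not an existing directory")
    return problems


if __name__ == "__main__":
    for problem in check_kaldi_config():
        print(problem)
```

Values that reference other shell variables (for example `$HOME/kaldi`) are not expanded by this sketch and would be reported as missing directories.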

dataset_root

├── speaker 1

├── speaker 2

│ ├── wav # contains all the wav files from speaker 2

│ └── kaldi # Kaldi files (auto-generated after running kaldi_scripts)

.

.

└── speaker N
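To confirm that a dataset follows the layout above before running any Kaldi scripts, something like the following could be used. It is a minimal sketch under stated assumptions: every directory directly under the dataset root is treated as a speaker, and audio files are assumed to carry a `.wav` extension. The function name `find_speakers` is hypothetical and not part of this repository.

```python
from pathlib import Path


def find_speakers(dataset_root):
    """Split speaker folders into those with a non-empty wav/ subfolder and those without."""
    ready, missing = [], []
    for speaker_dir in sorted(Path(dataset_root).iterdir()):
        if not speaker_dir.is_dir():
            continue  # ignore stray files at the top level
        wav_dir = speaker_dir / "wav"
        # Assumption: recordings are stored as *.wav directly inside wav/.
        has_wavs = wav_dir.is_dir() and any(wav_dir.glob("*.wav"))
        (ready if has_wavs else missing).append(speaker_dir.name)
    return ready, missing


if __name__ == "__main__":
    import sys

    if len(sys.argv) == 2 and Path(sys.argv[1]).is_dir():
        ready, missing = find_speakers(sys.argv[1])
        print(f"{len(ready)} speaker(s) ready")
        for name in missing:
            print(f"no wav files found for: {name}")
```

The `kaldi` subfolder is deliberately not checked, since it is generated later by the Kaldi scripts.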

- Use Kaldi to extract bottleneck features (BNFs) for each speaker (repeat for all speakers)

./kaldi_scripts/extract_features_kaldi.sh /path/to/speaker

- Preprocessing

python preprocess_bnfs.py path/to/dataset

python generate_speaker_embeds.py path/to/dataset

python make_data_all.py # Edit the file to specify the dataset path

- Vector quantize the BNFs (see here)

- Set the training parameters (see `conf/`)

-

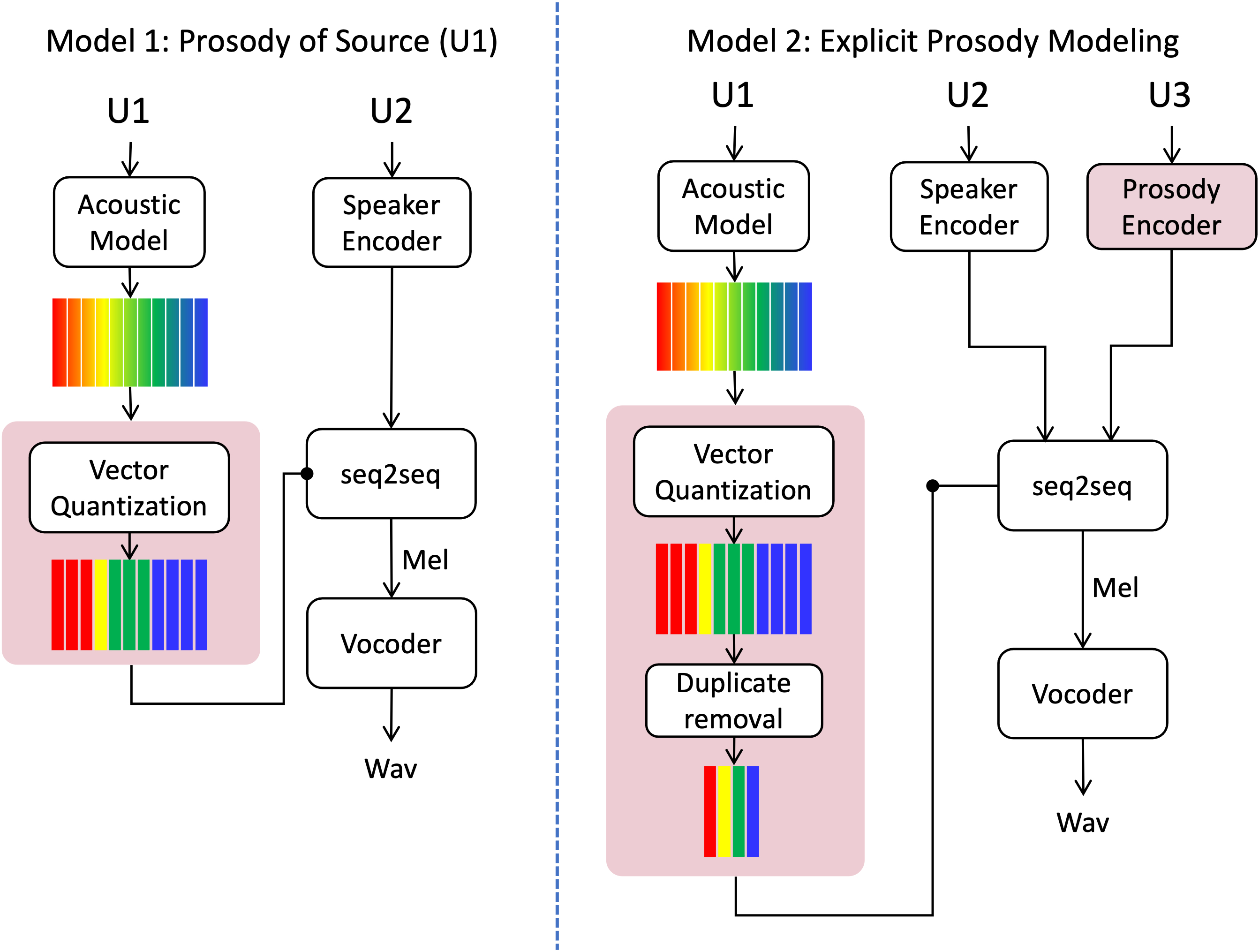

Training Model 1

./train_vc128_all.sh

- Training Model 2

./train_vc128_all_prosody_ecapa.sh
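The per-speaker feature extraction and preprocessing commands listed above can be chained with a small driver script. The following is a sketch only, not part of this repository: `build_pipeline` and `run_pipeline` are hypothetical helpers, every directory under the dataset root is assumed to be a speaker, and the script is assumed to be run from the repository root. Vector quantization, parameter setup in `conf/`, and the training scripts are left out because they involve manual steps.

```python
import subprocess
from pathlib import Path


def build_pipeline(dataset_root):
    """Return the extraction and preprocessing commands, in order, as argument lists."""
    root = Path(dataset_root)
    # Assumption: each directory directly under the root is one speaker.
    speakers = sorted(p for p in root.iterdir() if p.is_dir())
    commands = [
        ["./kaldi_scripts/extract_features_kaldi.sh", str(speaker)]
        for speaker in speakers
    ]
    commands += [
        ["python", "preprocess_bnfs.py", str(root)],
        ["python", "generate_speaker_embeds.py", str(root)],
        # make_data_all.py takes no path argument; edit the file to set the dataset path.
        ["python", "make_data_all.py"],
    ]
    return commands


def run_pipeline(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the pipeline at the first failing step.
            subprocess.run(cmd, check=True)
```

Calling `run_pipeline(build_pipeline("path/to/dataset"))` only prints the commands, which makes it easy to review the order before passing `dry_run=False`.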