[ECCV 2022] Official PyTorch implementation of "Learning Depth from Focus in the Wild"

- Paper

- Contact email : cywon1997@gm.gist.ac.kr

- Python == 3.7.7

- imageio==2.16.1

- h5py==3.6.0

- numpy==1.21.5

- matplotlib==3.5.1

- opencv-python==4.5.5.62

- OpenEXR==1.3.7

- Pillow==7.2.0

- scikit-image==0.19.2

- scipy==1.7.3

- torch==1.6.0

- torchvision==0.7.0

- tensorboard==2.8.0

- tqdm==4.46.0

- mat73==0.58

- typing-extensions==4.1.1
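The pinned versions above can be checked against the active environment before running anything. The following is a minimal sketch and not part of the repository: `check_versions` and `PINNED` are illustrative names, and only a subset of the listed packages is shown.

```python
# Sketch: compare installed package versions with the pins listed above.
try:
    from importlib.metadata import PackageNotFoundError, version  # Python >= 3.8
except ImportError:
    # Python 3.7 (the version pinned above) needs the importlib_metadata backport.
    from importlib_metadata import PackageNotFoundError, version

# Subset of the pins from the list above; extend as needed.
PINNED = {
    "numpy": "1.21.5",
    "h5py": "3.6.0",
    "torch": "1.6.0",
    "torchvision": "0.7.0",
    "mat73": "0.58",
}


def check_versions(pinned):
    """Return {package: (expected, installed_or_None)} for every mismatch."""
    mismatches = {}
    for name, expected in pinned.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            installed = None  # package is not installed at all
        if installed != expected:
            mismatches[name] = (expected, installed)
    return mismatches


if __name__ == "__main__":
    for name, (expected, installed) in check_versions(PINNED).items():
        print(f"{name}: expected {expected}, found {installed}")
```

The comparison is an exact string match, so a build with a local suffix (for example a CUDA-specific torch wheel) is reported as a mismatch even when the base version agrees.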

- Our depth estimation network builds on the code released for PSMNet [1] and CFNet [2].

- DDFF-12-Scene Dataset [5] : Download from the official link (https://vision.in.tum.de/webarchive/hazirbas/ddff12scene/ddff-dataset-test.h5)

- DefocusNet Dataset [6], 4D Light Field Dataset [9], Middlebury Dataset [8] : Follow the procedure described in the official GitHub repository of AiFDepthNet [7] (https://github.com/albert100121/AiFDepthNet)

- Smartphone dataset [10] : Download the dataset from the official website of Learning to Autofocus (https://learntoautofocus-google.github.io/)

- Put the datasets in folders in 'Depth_Estimation_Test/Datasets/'

- Put the datasets in folders in 'train_codes/Datasets/'.

- Run the training code in 'train_codes'

python train_code_[Dataset].py --lr [learning rate]

python test.py --dataset [Dataset]
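Before launching training or testing, it can help to confirm that the datasets landed in the expected folders. The sketch below is an illustration only: the two root paths are the ones named above, while `list_dataset_folders` is a hypothetical helper and the script is assumed to run from the repository root.

```python
from pathlib import Path

# Folder names taken from the instructions above.
DATASET_ROOTS = ["Depth_Estimation_Test/Datasets", "train_codes/Datasets"]


def list_dataset_folders(root):
    """Return the sorted names of sub-folders directly under `root` (empty if missing)."""
    root = Path(root)
    if not root.is_dir():
        return []
    return sorted(p.name for p in root.iterdir() if p.is_dir())


if __name__ == "__main__":
    for root in DATASET_ROOTS:
        folders = list_dataset_folders(root)
        status = ", ".join(folders) if folders else "no dataset folders found"
        print(f"{root}: {status}")
```

The check only lists folder names; it does not validate the contents of any dataset.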

- NYU Depth V2 dataset [4], official link : http://horatio.cs.nyu.edu/mit/silberman/nyu_depth_v2/nyu_depth_v2_labeled.mat

- Put 'nyu_depth_v2_labeled.mat' in the 'Simulator' folder.

- This simulator builds on the code released by [3].

python synthetic_blur_movement.py

- Put your focal stacks and metadata in 'End_to_End/Datasets/folder'.

- The metadata files should be named 'focus_distance.txt' (focus distances) and 'focal_length.txt' (focal length of the lens).

- The pretrained weights were trained on a synthetic dataset, generated by our simulator, in which each focal stack consists of 10 images.

- Please refer to the uploaded example dataset (folder 'ball').

python test_real_scenes.py
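A quick consistency check of the two metadata files can catch mistakes before inference. This is only a sketch under stated assumptions: it assumes each file is plain text holding whitespace- or newline-separated numbers, with one focus distance per image in the stack and a single focal length. The file layout actually expected by the code is the one in the 'ball' example folder, and `read_numbers` and `check_focal_stack_folder` are hypothetical helpers, not functions from this repository.

```python
from pathlib import Path


def read_numbers(path):
    """Parse all whitespace-separated numbers in a text file (assumed layout)."""
    return [float(tok) for tok in Path(path).read_text().split()]


def check_focal_stack_folder(folder, num_images=None):
    """Load the two metadata files and run basic consistency checks."""
    folder = Path(folder)
    focus_distances = read_numbers(folder / "focus_distance.txt")
    focal_length = read_numbers(folder / "focal_length.txt")
    if not focus_distances:
        raise ValueError("focus_distance.txt contains no values")
    if len(focal_length) != 1:
        # Assumption: one lens, hence a single focal length value.
        raise ValueError("expected a single focal length value")
    if num_images is not None and len(focus_distances) != num_images:
        # Assumption: one focus distance per image in the focal stack.
        raise ValueError(
            f"{len(focus_distances)} focus distances for {num_images} images"
        )
    return focus_distances, focal_length[0]
```

Calling `check_focal_stack_folder("End_to_End/Datasets/folder", num_images=N)` with `N` set to the number of images in your stack returns the parsed values or raises `ValueError` on an inconsistency.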

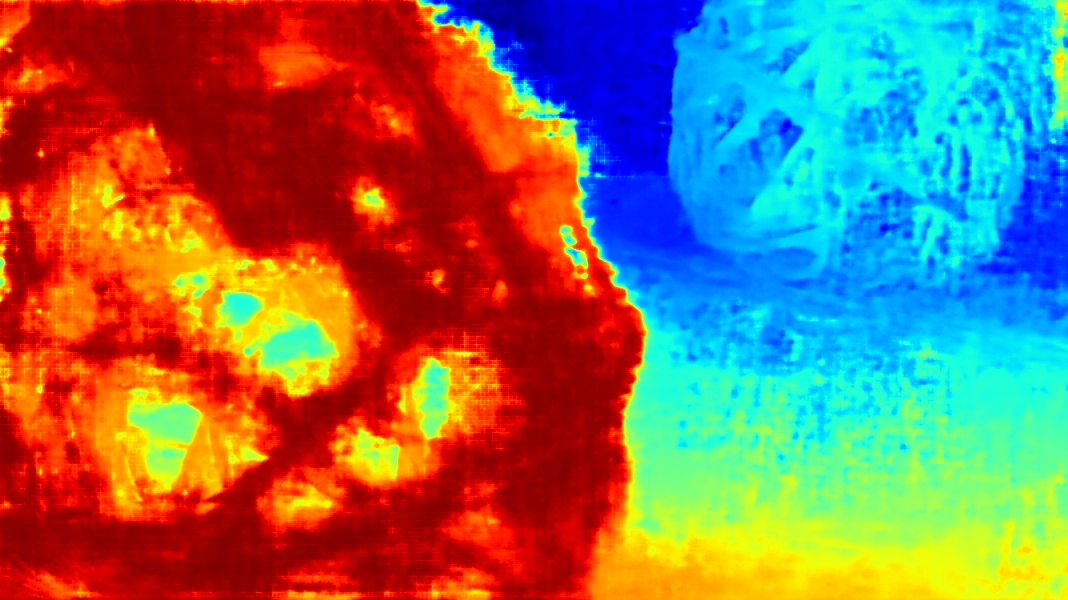

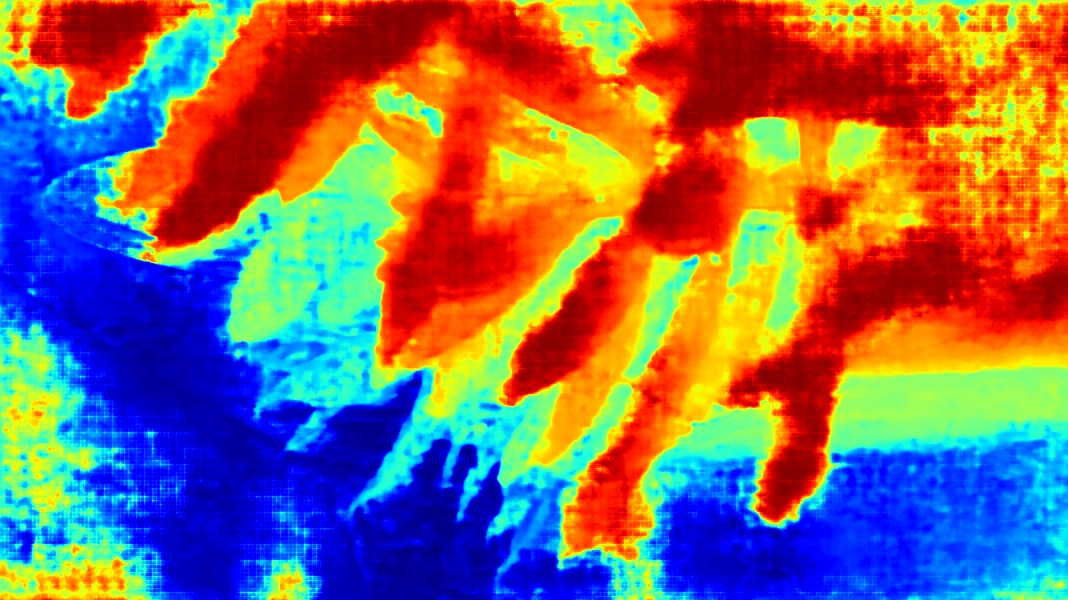

- Before alignment network (input focal stack)

- After alignment network (aligned focal stack)

- After depth estimation network (depth map)

- Our network handles only three basic motions, which do not cover all possible motions, so performance degrades on complex motions.

- There is a gap between the synthetic dataset generated by the simulator and real datasets. Adjusting the simulator parameters and tuning the number of training epochs may yield better results than ours.

[1] Chang, Jia-Ren, and Yong-Sheng Chen. "Pyramid stereo matching network." Proceedings of the IEEE conference on computer vision and pattern recognition. 2018.

[2] Shen, Zhelun, Yuchao Dai, and Zhibo Rao. "Cfnet: Cascade and fused cost volume for robust stereo matching." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2021.

[3] Abuolaim, Abdullah, et al. "Learning to reduce defocus blur by realistically modeling dual-pixel data." Proceedings of the IEEE/CVF International Conference on Computer Vision. 2021.

[4] Silberman, Nathan, et al. "Indoor segmentation and support inference from rgbd images." European conference on computer vision. Springer, Berlin, Heidelberg, 2012.

[5] Hazirbas, Caner, et al. "Deep depth from focus." Asian Conference on Computer Vision. Springer, Cham, 2018.

[6] Maximov, Maxim, Kevin Galim, and Laura Leal-Taixé. "Focus on defocus: bridging the synthetic to real domain gap for depth estimation." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2020.

[7] Wang, Ning-Hsu, et al. "Bridging Unsupervised and Supervised Depth from Focus via All-in-Focus Supervision." Proceedings of the IEEE/CVF International Conference on Computer Vision. 2021.

[8] Scharstein, Daniel, et al. "High-resolution stereo datasets with subpixel-accurate ground truth." German conference on pattern recognition. Springer, Cham, 2014.

[9] Honauer, Katrin, et al. "A dataset and evaluation methodology for depth estimation on 4D light fields." Asian Conference on Computer Vision. Springer, Cham, 2016.

[10] Herrmann, Charles, et al. "Learning to autofocus." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2020.