This repo contains our implementation of a Bootstrapped DQN with options to add a Randomized Prior, Dueling, and Double DQN in ALE games.

Deep Exploration via Bootstrapped DQN

Randomized Prior Functions for Deep Reinforcement Learning

This gif depicts the orange agent from below winning the first game of Breakout and eventually winning a second game. The agent reaches a high score of 830 in this evaluation. There are several gaps in playback due to file size. We show agent steps [1000-1500], [2400-2600], [3000-4500], and [16000-16300].

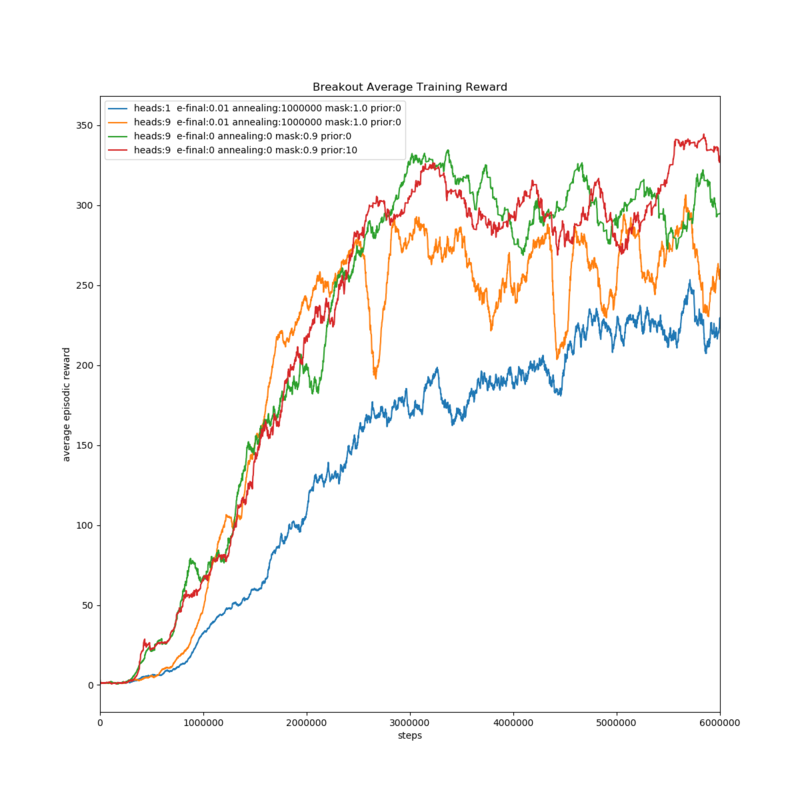

- (blue) DQN with epsilon greedy annealed between 1 and 0.01

- (orange) Bootstrap with epsilon greedy annealed between 1 and 0.01

- (green) Bootstrap without epsilon greedy exploration

- (red) Bootstrap with randomized prior
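As a rough illustration of the epsilon-greedy annealing used by the blue and orange agents, here is a minimal sketch. It assumes a linear schedule; the function names and the `anneal_steps` value are hypothetical and not taken from this repo's code.

```python
import random


def linear_epsilon(step, eps_start=1.0, eps_end=0.01, anneal_steps=1_000_000):
    """Linearly anneal epsilon from eps_start to eps_end.

    anneal_steps is an assumed value chosen for illustration only.
    """
    fraction = min(max(step, 0) / anneal_steps, 1.0)
    return eps_start + fraction * (eps_end - eps_start)


def select_action(q_values, epsilon, rng):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


rng = random.Random(0)
epsilon = linear_epsilon(step=500_000)
action = select_action([0.1, 0.9, 0.3], epsilon, rng)
```

For the green agent (no epsilon-greedy exploration), the equivalent in this sketch would be calling `select_action` with `epsilon=0.0`.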

All agents were implemented as Dueling, Double DQNs. The x-axis label in these plots, "steps", refers to the number of states the agent has observed so far in training. Multiply by 4 (the frame-skip) to get the total number of frames the emulator has advanced.
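The conversion between agent steps and emulator frames is simple arithmetic; the helper below is a hypothetical illustration, not a function from this repo.

```python
FRAME_SKIP = 4  # each agent step advances the emulator by 4 frames


def steps_to_frames(steps, frame_skip=FRAME_SKIP):
    """Convert agent steps (observed states) to emulator frames."""
    return steps * frame_skip


# Example: 2.5 million agent steps correspond to 10 million emulator frames.
frames = steps_to_frames(2_500_000)
```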

Our agents are sent a terminal signal at the end of each life. They face a deterministic state progression after a random number (fewer than 30) of no-op steps at the beginning of each episode.
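The episode handling described above could look roughly like the wrapper below. This is a sketch under assumptions: the `env` interface (`reset()`, `step(action)` returning `(obs, reward, game_over)`, and `lives()`), the class name, and the choice of action `0` as the no-op are all hypothetical and may differ from what this repo actually does.

```python
import random


class EpisodeWrapper:
    """Adds random no-op starts and end-of-life terminals to an emulator.

    Assumes a hypothetical env exposing reset(), step(action) returning
    (obs, reward, game_over), and lives().
    """

    def __init__(self, env, max_noops=30, noop_action=0, seed=None):
        self.env = env
        self.max_noops = max_noops
        self.noop_action = noop_action
        self.rng = random.Random(seed)
        self.lives = 0

    def reset(self):
        obs = self.env.reset()
        # Take a random number of no-op steps, strictly fewer than max_noops.
        for _ in range(self.rng.randrange(self.max_noops)):
            obs, _, game_over = self.env.step(self.noop_action)
            if game_over:
                obs = self.env.reset()
        self.lives = self.env.lives()
        return obs

    def step(self, action):
        obs, reward, game_over = self.env.step(action)
        lives = self.env.lives()
        life_lost = lives < self.lives
        self.lives = lives
        # The agent sees a terminal when a life is lost or the game ends;
        # game_over is returned separately so the caller knows when to reset.
        terminal = game_over or life_lost
        return obs, reward, terminal, game_over
```

Returning `game_over` separately from `terminal` lets the training loop store a terminal transition on life loss while only resetting the emulator when the game has actually ended.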

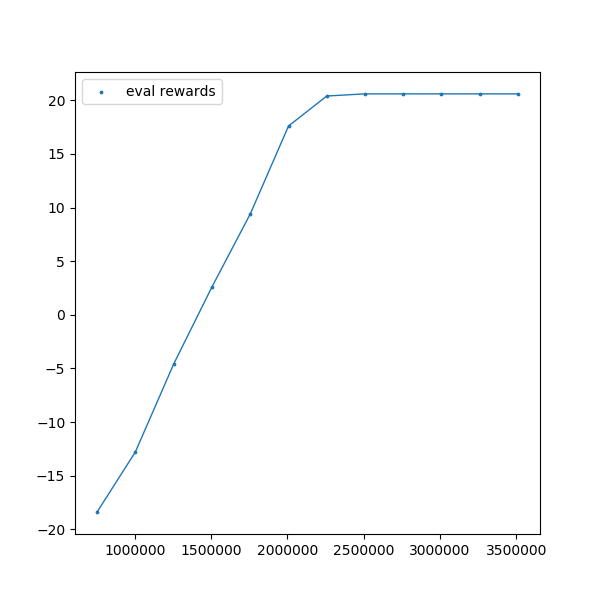

Here are some results on Pong with Bootstrap DQN w/ a Randomized Prior. An optimal strategy is learned within 2.5 million steps.

Pong agent score in evaluation - reward vs steps

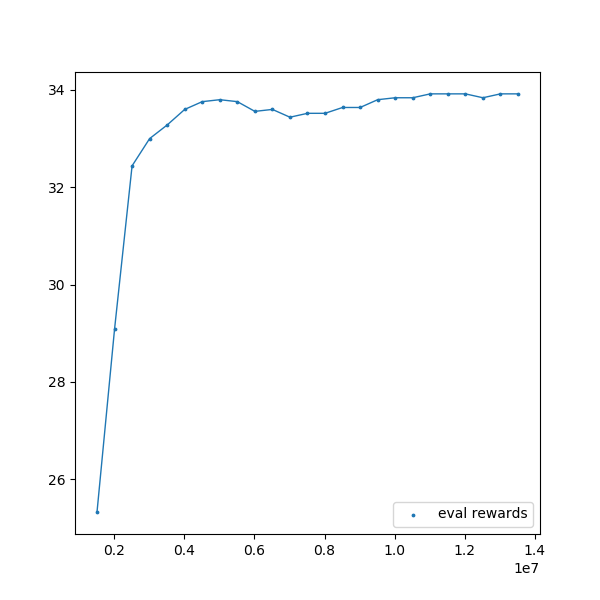

Here are some results on Freeway with Bootstrap DQN w/ a Randomized Prior. The randomized prior allowed us to solve this "hard exploration" problem within 4 million steps.

Freeway agent score in evaluation - reward vs steps

atari-py installed from https://github.com/kastnerkyle/atari-py

torch='1.0.1.post2'

cv2='4.0.0'

We referenced several excellent examples/blogposts to build this codebase: