👨🏾🍳 Blogpost - Building Multimodal AI in TypeScript

First, clone the project with the command below:

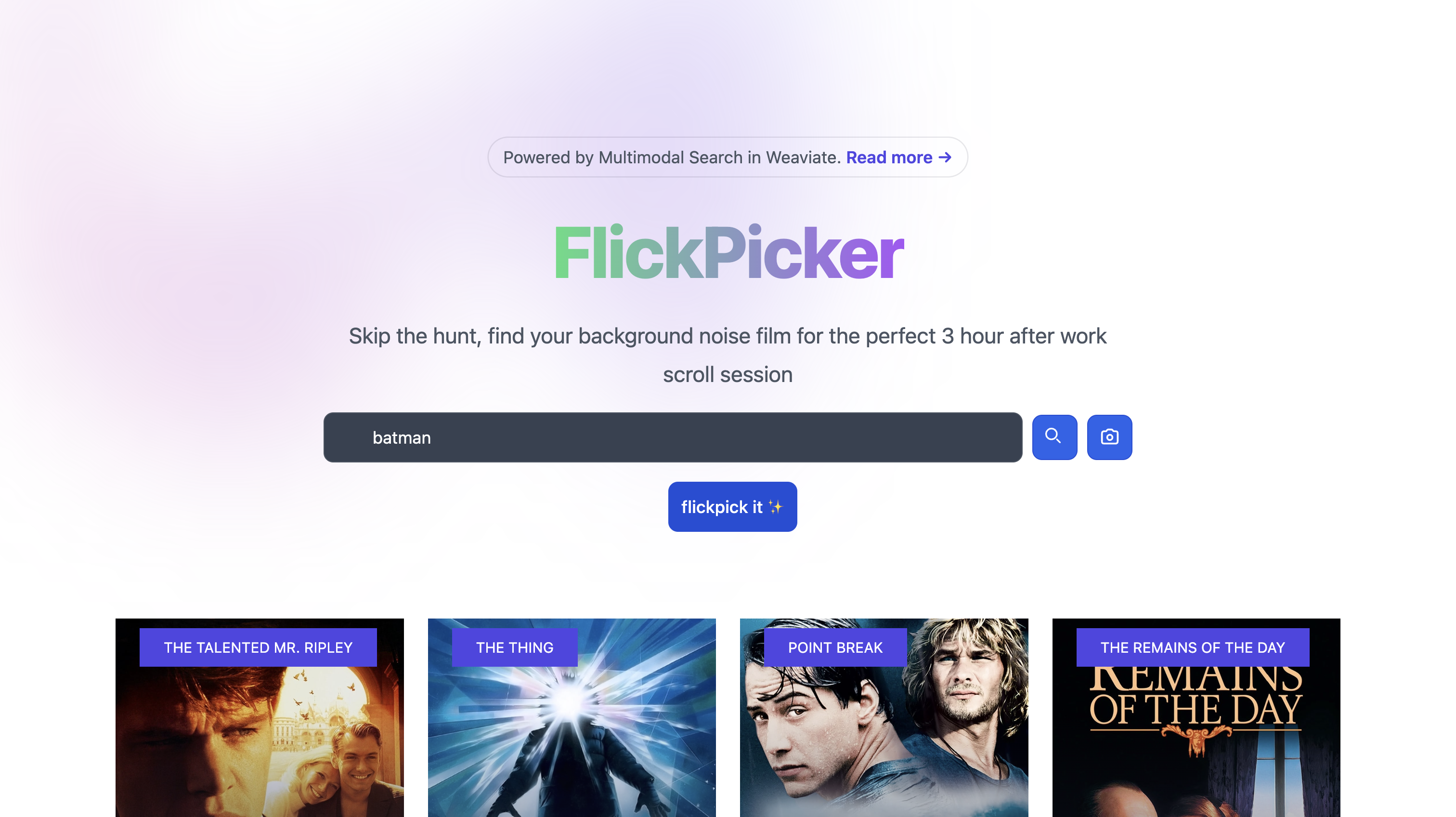

```bash
git clone https://github.com/weaviate-tutorials/flick-picker
```

The repository lets us do three things:

- Run the Nuxt.js Web App.

- Import images and text into your Weaviate database.

- Search like it's 2034!

Create a Weaviate instance on Weaviate Cloud Services as described in this guide. Then set the following environment variables, for example in a `.env` file at the project root:

- your Google Vertex AI API key as `NUXT_GOOGLE_API_KEY` (you can get this in your Vertex AI settings)

- your OpenAI API key as `NUXT_OPENAI_API_KEY` (you can get this in your OpenAI settings)

- your Weaviate API key as `NUXT_WEAVIATE_ADMIN_KEY` (you can get this in your Weaviate dashboard under sandbox details)

- your Weaviate host URL as `NUXT_WEAVIATE_HOST_URL` (you can get this in your Weaviate dashboard under sandbox details)
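Because a missing or empty key usually only shows up as an error once the app or import script is running, it can help to check the four variables up front. The sketch below assumes the variables have already been loaded into the environment; `missingEnvVars` and `REQUIRED_ENV_VARS` are hypothetical names, not part of the flick-picker repository.

```typescript
// Hypothetical pre-flight check (not from the flick-picker repo).
// The variable names are the four listed above.
const REQUIRED_ENV_VARS = [
  "NUXT_GOOGLE_API_KEY",
  "NUXT_OPENAI_API_KEY",
  "NUXT_WEAVIATE_ADMIN_KEY",
  "NUXT_WEAVIATE_HOST_URL",
] as const;

type Env = Record<string, string | undefined>;

// Returns the names of required variables that are unset or contain only whitespace.
function missingEnvVars(env: Env): string[] {
  return REQUIRED_ENV_VARS.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === "";
  });
}
```

In a Node script you might call `missingEnvVars(process.env)` and stop with an error message if the returned array is not empty.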

Before you can import data, add your files to the folder for their media type inside the `public/` folder. You will need to download a dataset of movie posters and place the images in `public/image`.
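To sanity-check the poster folder before importing, a small filter over a directory listing is enough. This is a hypothetical helper, not code from the repository, and the accepted extensions are an assumption; adjust them to match your dataset.

```typescript
// Hypothetical helper (not from the repo): keeps only image files from a
// directory listing. The extension list is an assumption.
const IMAGE_EXTENSIONS = new Set([".jpg", ".jpeg", ".png", ".webp"]);

// Lower-cased extension including the dot, or "" if there is none.
// A dot at position 0 (e.g. ".DS_Store") is treated as a hidden file, not an extension.
function extensionOf(fileName: string): string {
  const dot = fileName.lastIndexOf(".");
  return dot <= 0 ? "" : fileName.slice(dot).toLowerCase();
}

// Returns the names whose extension is in IMAGE_EXTENSIONS, preserving order.
function imageFiles(fileNames: readonly string[]): string[] {
  return fileNames.filter((name) => IMAGE_EXTENSIONS.has(extensionOf(name)));
}
```

In Node you could pass it the result of `readdirSync("public/image")` from `node:fs` and compare the count with the number of posters you downloaded.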

With your data in the right folder, run `yarn install` to install all project dependencies. Then, to import your data into Weaviate and initialize a collection, run:

```bash
yarn run import
```

This may take a minute or two.
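If your poster dataset is large, sending objects to Weaviate in fixed-size batches keeps each request small. The generic helper below is a sketch of that idea, not the repository's import script; `toBatches` and whatever batch size you pick are assumptions.

```typescript
// Generic helper (hypothetical, not from the repo): splits items into
// consecutive batches of at most `size` elements, preserving order.
function toBatches<T>(items: readonly T[], size: number): T[][] {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("batch size must be a positive integer");
  }
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    // slice clamps at the end, so the last batch may be shorter.
    batches.push(items.slice(i, i + size));
  }
  return batches;
}
```

Each batch could then be sent in turn, for example with `insertMany` on a collection in the v3 Weaviate TypeScript client (`weaviate-client`).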

Make sure you have your Weaviate instance running with data imported before starting your Nuxt.js Web App.

To run the Web App:

```bash
yarn dev
```

...and you can search away!

Learn more about multimodal applications

- Check out the Weaviate Docs

- Open an Issue

Some credit goes to Steven for his Spirals template