- Configure the Runtime Environment

Execute the following script to set up the necessary environment:

sh env.sh

-

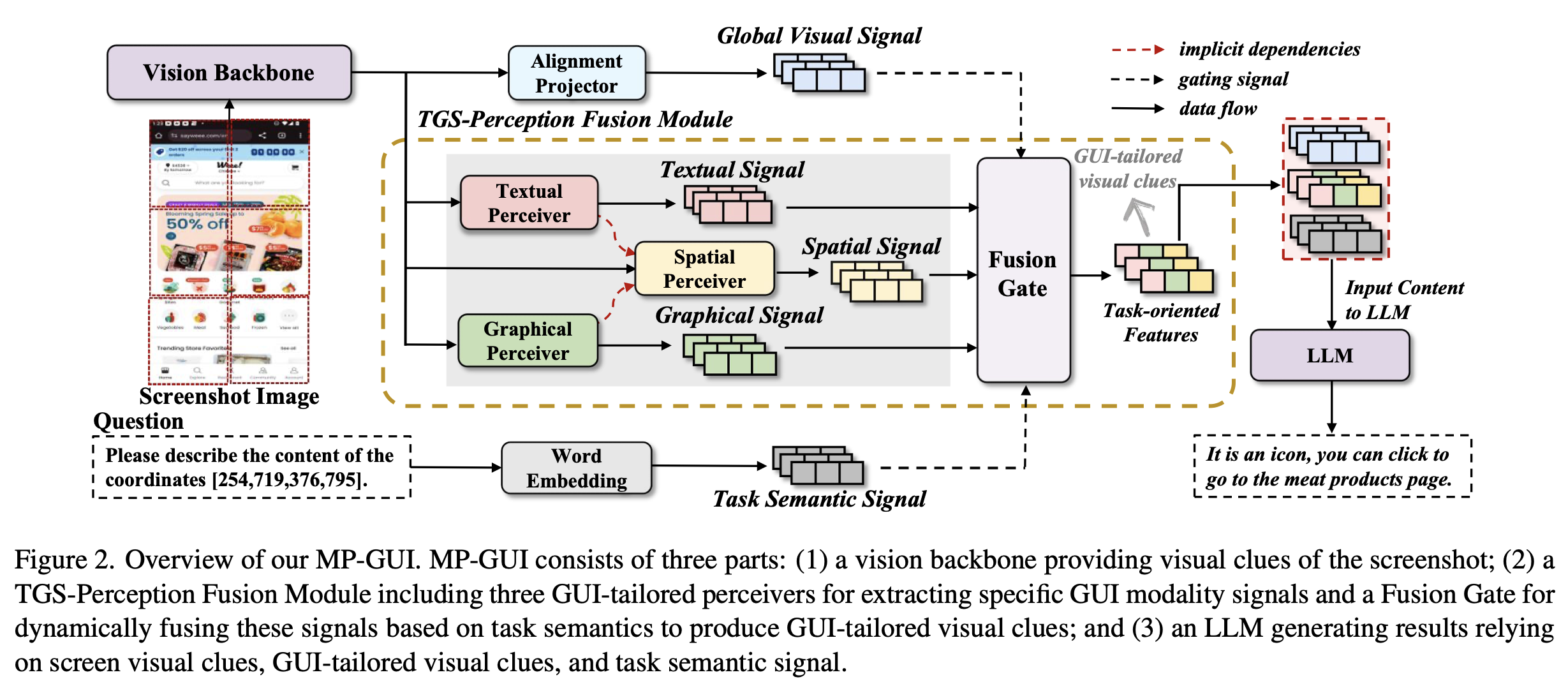

Download Intern2-VL-8B Intern2-VL-8B will be used to initialize the visual backbone, alignment projector and LLM. The other modules of MP-GUI are randomly initialized and trained from scratch as follows: [https://modelscope.cn/models/OpenGVLab/InternVL2-8B]

Follow the steps below to complete the multi-step training process:

- Training Textual Perceiver

sh MP-GUI/model/shell/multi_step_training/train_textual_perceiver.sh

- Training Graphical Perceiver

sh MP-GUI/model/shell/multi_step_training/train_graphical_perceiver.sh

- Training Spatial Perceiver

sh MP-GUI/model/shell/multi_step_training/train_spatial_perceiver.sh

- Training Fusion Gate

sh MP-GUI/model/shell/multi_step_training/train_fusion_gate.sh

- Complete Training on Benchmark

sh MP-GUI/model/shell/multi_step_training/benchmark.sh
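The stage order above can be captured in a small helper. This is only a sketch: the script names come from the list above, while `STAGES`, `stage_command`, and `run_stage` are hypothetical names introduced for illustration. It runs one stage at a time on purpose, since the LoRA parameters have to be merged and the weight paths updated between stages.

```python
import subprocess
from pathlib import Path
from typing import List

# Script directory and stage order are taken from the steps listed above.
SCRIPT_DIR = Path("MP-GUI/model/shell/multi_step_training")
STAGES = [
    "train_textual_perceiver",
    "train_graphical_perceiver",
    "train_spatial_perceiver",
    "train_fusion_gate",
    "benchmark",
]


def stage_command(stage: str) -> List[str]:
    """Return the shell command for one stage, rejecting unknown names."""
    if stage not in STAGES:
        raise ValueError(f"unknown stage {stage!r}; expected one of {STAGES}")
    return ["sh", (SCRIPT_DIR / f"{stage}.sh").as_posix()]


def run_stage(stage: str, dry_run: bool = True) -> List[str]:
    """Print the command for a single stage and run it unless dry_run is True."""
    cmd = stage_command(stage)
    print(" ".join(cmd))
    if not dry_run:
        # check=True raises CalledProcessError if the training script fails.
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    # Dry run: prints the five commands in the order they should be executed.
    for name in STAGES:
        run_stage(name)
```

Calling `run_stage("train_textual_perceiver", dry_run=False)` from the repository root would launch only the first stage, leaving room to merge weights before starting the next one.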

The following open-source datasets are used in this project:

- Download the Datasets

Download each dataset and place it in the `MP-GUI/training_data` directory.

- Update Image Paths

Replace the `image_path` entries in the datasets with your local image paths.

- AITW Specific Processing

For AITW, we randomly sample from the "general" and "install" categories and process them using the following script:

python data_tools/get_small_icon_grounding_data.py
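For the "Update Image Paths" step, a short script can do the replacement in bulk. The sketch below makes two assumptions that may not match the actual datasets: the annotations are stored as JSON Lines, and only the file name of each `image_path` needs to be kept when pointing it at a local directory. The function names `relocate` and `rewrite_image_paths` are hypothetical.

```python
import json
from pathlib import Path


def relocate(image_path: str, local_root: str) -> str:
    """Keep the file name and point it at the local image directory."""
    # Normalise Windows separators so the file name is extracted correctly.
    name = image_path.replace("\\", "/").rsplit("/", 1)[-1]
    return (Path(local_root) / name).as_posix()


def rewrite_image_paths(src: str, dst: str, local_root: str) -> int:
    """Copy a JSON Lines file, rewriting every `image_path`; return the count."""
    changed = 0
    with open(src, encoding="utf-8") as fin, open(dst, "w", encoding="utf-8") as fout:
        for line in fin:
            if not line.strip():
                continue  # skip blank lines
            record = json.loads(line)
            if "image_path" in record:
                record["image_path"] = relocate(record["image_path"], local_root)
                changed += 1
            fout.write(json.dumps(record, ensure_ascii=False) + "\n")
    return changed
```

Writing to a separate output file keeps the downloaded annotations untouched, which makes it easy to rerun the rewrite if the local image directory moves. If a dataset nests images in subdirectories, `relocate` would need to preserve more than the file name.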

After completing each training step, merge the LoRA parameters and update the weight paths in the training scripts:

- Merge LoRA Parameters

python MP-GUI/model/tools/merge_lora.py

- Update Weight Paths

Replace the corresponding weight paths in your training scripts with the merged weights.
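To make the merge step concrete, the toy example below shows what merging means in the standard LoRA formulation: the low-rank update is folded into the base weight as `W + (alpha / rank) * B @ A`, so the merged checkpoint no longer needs the adapter. It does not reproduce `merge_lora.py`, whose internals are not shown here; the helper names and the tiny matrices are purely illustrative.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product, enough for a tiny illustration."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(w: Matrix, lora_b: Matrix, lora_a: Matrix, alpha: float, rank: int) -> Matrix:
    """Fold the low-rank update into the base weight: W + (alpha / rank) * B @ A."""
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# 2x2 base weight with a rank-1 adapter (B is 2x1, A is 1x2).
w = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]
lora_a = [[0.5, 0.0]]
merged = merge_lora(w, lora_b, lora_a, alpha=2.0, rank=1)
print(merged)  # [[2.0, 0.0], [2.0, 1.0]]
```

Because the next training stage starts from the merged weights, its script has to point at the merged checkpoint rather than at the base model plus adapter, which is why the weight paths are updated after every merge.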

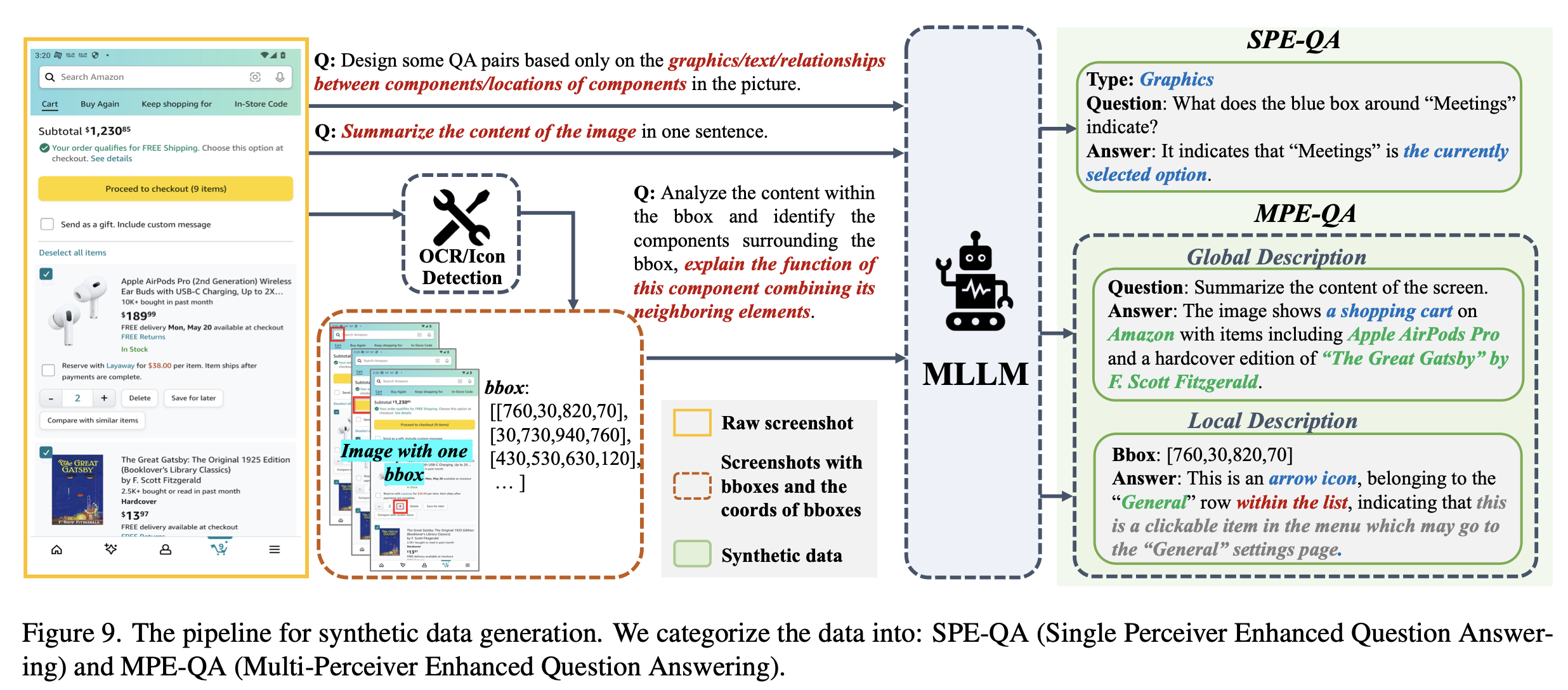

You can generate large amounts of synthetic data with the Qwen2-VL-72B model served through vLLM. First start the OpenAI-compatible server, then run the pipeline script:

python -m vllm.entrypoints.openai.api_server --served-model-name Qwen2-VL-72B-Instruct --model Qwen/Qwen2-VL-72B-Instruct -tp 8

python pipeline/vllm_pipeline_v2.py

Additionally, the MP-GUI/data_tools directory provides scripts to create spatial relationship prediction data based on the Semantic UI dataset.
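Once the vLLM server is running, a client can query it through the OpenAI-compatible chat completions endpoint. The sketch below is not `pipeline/vllm_pipeline_v2.py`; it only illustrates the request shape, assuming the server listens on vLLM's default port 8000 and that the screenshot is a PNG. `build_request` and `ask` are hypothetical helper names, and the prompt is up to the caller.

```python
import base64
import json
import urllib.request

# Assumed endpoint: vLLM's OpenAI-compatible server on its default port.
API_URL = "http://localhost:8000/v1/chat/completions"
MODEL = "Qwen2-VL-72B-Instruct"  # matches --served-model-name in the command above


def build_request(image_bytes: bytes, prompt: str, max_tokens: int = 512) -> dict:
    """Build a chat-completions payload with one image and one text prompt."""
    data_url = "data:image/png;base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL,
        "max_tokens": max_tokens,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": data_url}},
                {"type": "text", "text": prompt},
            ],
        }],
    }


def ask(image_bytes: bytes, prompt: str) -> str:
    """Send the request to the running server and return the generated text."""
    body = json.dumps(build_request(image_bytes, prompt)).encode("utf-8")
    req = urllib.request.Request(
        API_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["choices"][0]["message"]["content"]
```

Separating `build_request` from `ask` lets the payload be inspected or logged without a running server, which is handy when iterating on prompts for synthetic data.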

@misc{wang2025mpguimodalityperceptionmllms,
  title={MP-GUI: Modality Perception with MLLMs for GUI Understanding},
  author={Ziwei Wang and Weizhi Chen and Leyang Yang and Sheng Zhou and Shengchu Zhao and Hanbei Zhan and Jiongchao Jin and Liangcheng Li and Zirui Shao and Jiajun Bu},
  year={2025},
  eprint={2503.14021},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2503.14021},
}