This is official implementation for the paper

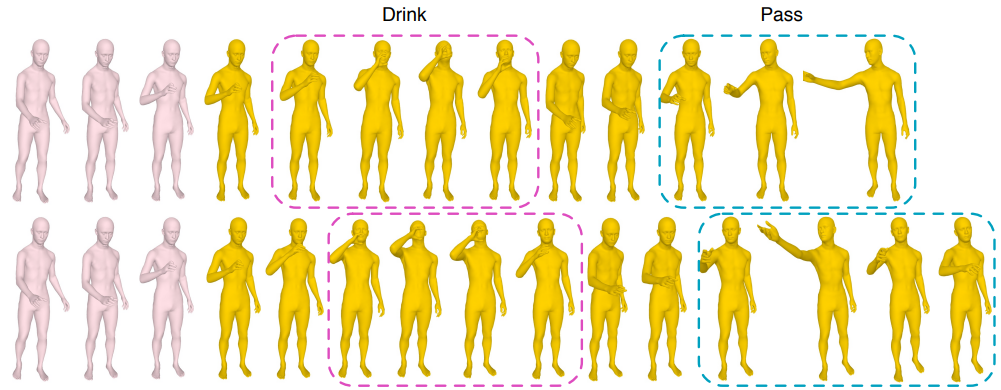

Weakly-supervised Action Transition Learning for Stochastic Human Motion Prediction. In CVPR 22.

Wei Mao, Miaomiao Liu, Mathieu Salzmann.

- Python >= 3.8

- PyTorch >= 1.8

- TensorBoard

- numba

Tested on PyTorch == 1.8.1.
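As a quick sanity check before training, the sketch below compares installed versions against the minimums listed above. It is not part of the repository. The helper names are hypothetical, and it assumes the PyPI distribution names `torch`, `tensorboard`, and `numba`. Versions are compared numerically, so `1.10` correctly counts as newer than `1.8`, which a plain string comparison would get wrong.

```python
import sys
from importlib import metadata


def parse_version(version: str) -> tuple:
    """Turn a version string such as '1.8.1+cu111' into (1, 8, 1).

    The local build tag after '+' and any non-numeric suffix (e.g. 'rc1') are dropped.
    """
    release = version.split("+")[0]
    parts = []
    for piece in release.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def at_least(version: str, minimum: tuple) -> bool:
    """True if `version` >= `minimum`, comparing numeric components only."""
    return parse_version(version) >= minimum


def check_environment() -> list:
    """Return a list of problems; an empty list means the listed minimums are met."""
    problems = []
    if sys.version_info[:2] < (3, 8):
        problems.append(f"Python >= 3.8 required, found {sys.version.split()[0]}")
    # None means "must be installed, no minimum version listed".
    for dist, minimum in [("torch", (1, 8)), ("tensorboard", None), ("numba", None)]:
        try:
            installed = metadata.version(dist)
        except metadata.PackageNotFoundError:
            problems.append(f"{dist} is not installed")
            continue
        if minimum is not None and not at_least(installed, minimum):
            wanted = ".".join(map(str, minimum))
            problems.append(f"{dist} >= {wanted} required, found {installed}")
    return problems


if __name__ == "__main__":
    for line in check_environment() or ["All listed requirements are satisfied."]:
        print(line)
```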

The original dataset is from here.

The original dataset is from here.

We use the preprocessed version from Action2Motion.

Note that we processed all the datasets to discard sequences that are too short or too long. The processed datasets can be downloaded from Google Drive. Download all the files to the `./data` folder.
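The exact length thresholds used for preprocessing are not stated here, so the sketch below only illustrates the kind of filtering described: it keeps sequences whose frame count falls inside a closed range. The function name, the dictionary-of-sequences input, and the thresholds in the demo are all hypothetical and are not the authors' preprocessing code.

```python
from typing import Dict, Sequence


def filter_by_length(sequences: Dict[str, Sequence], min_frames: int,
                     max_frames: int) -> Dict[str, Sequence]:
    """Keep sequences whose number of frames lies in [min_frames, max_frames].

    `sequences` maps a sequence id to any sized object whose length is the
    number of frames (a list of poses, or an array with time on axis 0).
    """
    if min_frames > max_frames:
        raise ValueError("min_frames must not exceed max_frames")
    return {key: seq for key, seq in sequences.items()
            if min_frames <= len(seq) <= max_frames}


if __name__ == "__main__":
    # Toy data and illustrative thresholds only; not the values used by the authors.
    toy = {"short": [0] * 5, "medium": [0] * 60, "long": [0] * 1000}
    print(sorted(filter_by_length(toy, min_frames=30, max_frames=300)))
```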

- We provide YAML configs inside `motion_pred/cfg`: `[dataset]_rnn.yml` and `[dataset]_act_classifier.yml` for the main model and the classifier (for evaluation), respectively. These configs correspond to the pretrained models inside `results`.
- The training and evaluation commands are included in the `run.sh` file.
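The config names follow a `[dataset]_rnn` / `[dataset]_act_classifier` pattern, and the rendering command in this README passes those stem names via `--cfg` and `--cfg_classifier`. The sketch below assumes that a stem such as `grab_rnn` resolves to `motion_pred/cfg/grab_rnn.yml`; the helper functions are hypothetical and only build paths and argument lists, they do not load or validate the YAML.

```python
from pathlib import Path
from typing import List, Tuple

# Assumed config directory, taken from the list above.
CFG_DIR = Path("motion_pred") / "cfg"


def config_names(dataset: str) -> Tuple[str, str]:
    """Config stems for the main model and the evaluation classifier."""
    return f"{dataset}_rnn", f"{dataset}_act_classifier"


def config_paths(dataset: str, cfg_dir: Path = CFG_DIR) -> Tuple[Path, Path]:
    """YAML files assumed to back those stems (stem + '.yml' inside cfg_dir)."""
    main_name, classifier_name = config_names(dataset)
    return cfg_dir / f"{main_name}.yml", cfg_dir / f"{classifier_name}.yml"


def render_command(dataset: str) -> List[str]:
    """Argument list for the rendering script, matching the command in this README."""
    main_name, classifier_name = config_names(dataset)
    return ["python", "eval_vae_act_render_video.py",
            "--cfg", main_name, "--cfg_classifier", classifier_name]


if __name__ == "__main__":
    for path in config_paths("grab"):
        print(path, "exists" if path.is_file() else "missing")
    print(" ".join(render_command("grab")))
```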

Download the SMPL, SMPL-H, and SMPL-X models from their official websites and put them in the `./SMPL_models` folder. The directory structure should look like this:

```
SMPL_models
├── smpl
│   ├── SMPL_FEMALE.pkl
│   └── SMPL_MALE.pkl
│
├── smplh
│   ├── MANO_LEFT.pkl
│   ├── MANO_RIGHT.pkl
│   ├── SMPLH_FEMALE.pkl
│   └── SMPLH_MALE.pkl
│
└── smplx
    ├── SMPLX_FEMALE.pkl
    └── SMPLX_MALE.pkl
```
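Before rendering, a small check like the one below can report which of the expected model files are missing. It is a hypothetical helper that simply mirrors the directory tree above; it only checks that the files exist and does not validate their contents.

```python
from pathlib import Path
from typing import List

# Expected layout, transcribed from the directory tree above.
EXPECTED_FILES = {
    "smpl": ["SMPL_FEMALE.pkl", "SMPL_MALE.pkl"],
    "smplh": ["MANO_LEFT.pkl", "MANO_RIGHT.pkl", "SMPLH_FEMALE.pkl", "SMPLH_MALE.pkl"],
    "smplx": ["SMPLX_FEMALE.pkl", "SMPLX_MALE.pkl"],
}


def missing_model_files(root: str = "./SMPL_models") -> List[str]:
    """Return expected files (relative to `root`, '/'-separated) that do not exist."""
    root_path = Path(root)
    missing = []
    for subdir, names in EXPECTED_FILES.items():
        for name in names:
            path = root_path / subdir / name
            if not path.is_file():
                missing.append(path.relative_to(root_path).as_posix())
    return missing


if __name__ == "__main__":
    absent = missing_model_files()
    print("All SMPL model files found." if not absent else "Missing: " + ", ".join(absent))
```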

You can then run the following command to render the results of your model to a video:

```bash
python eval_vae_act_render_video.py --cfg grab_rnn --cfg_classifier grab_act_classifier
```

Note that when visualizing the results of the BABEL dataset, there may be an error because `SMPLH_(FE)MALE.pkl` does not contain the hand components. In this case, you may need to manually load the hand components from `MANO_LEFT(RIGHT).pkl`.
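One possible way to do this manual step is sketched below. It rests on assumptions not confirmed by this README: that the SMPL-H model is loaded the way the `smplx` package's SMPL-H class loads it (reading per-hand keys `hands_componentsl`, `hands_componentsr`, `hands_meanl`, `hands_meanr`), and that the MANO files store `hands_components` and `hands_mean`. The function names are hypothetical. Unpickling the real SMPL-family files may also require the `chumpy` package to be installed, since they contain chumpy arrays.

```python
import pickle
from typing import Any, Dict

# Assumption: MANO files store these keys, and the SMPL-H loader expects
# per-hand copies of them suffixed with 'l' (left) and 'r' (right).
HAND_KEYS = ("hands_components", "hands_mean")


def merge_hand_components(smplh: Dict[str, Any], mano_left: Dict[str, Any],
                          mano_right: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of `smplh` with any missing per-hand keys filled from MANO."""
    merged = dict(smplh)
    for key in HAND_KEYS:
        for suffix, source in (("l", mano_left), ("r", mano_right)):
            target = key + suffix
            if target not in merged:  # keep components that are already present
                merged[target] = source[key]
    return merged


def load_pickle(path: str) -> Dict[str, Any]:
    # encoding="latin1" lets Python 3 read Python 2 pickles, which is
    # typically needed for the original SMPL-family model files.
    with open(path, "rb") as f:
        return pickle.load(f, encoding="latin1")


def patch_smplh_file(smplh_path: str, left_path: str, right_path: str,
                     out_path: str) -> None:
    """Write a copy of the SMPL-H model with MANO hand components added."""
    merged = merge_hand_components(load_pickle(smplh_path),
                                   load_pickle(left_path), load_pickle(right_path))
    with open(out_path, "wb") as f:
        pickle.dump(merged, f)
```

Writing to a separate `out_path` keeps the original download intact; after checking the result, you could back up `SMPLH_(FE)MALE.pkl` and replace it with the patched file.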

If you use our code, please cite our work:

```bibtex
@inproceedings{mao2022weakly,
  title={Weakly-supervised Action Transition Learning for Stochastic Human Motion Prediction},
  author={Mao, Wei and Liu, Miaomiao and Salzmann, Mathieu},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={8151--8160},
  year={2022}
}
```

The overall code framework (data loading, training, testing, etc.) is adapted from DLow.

License: MIT