In this project, we work with the TIAGo robot to execute a service task: when a person offers TIAGo a cup, the robot reacts to the gesture and grasps the cup. TIAGo then looks around, searches for the Professor, and delivers the cup.
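
The task is a fixed sequence of stages, and each stage is advanced by a perception or manipulation result. The minimal sketch below models that sequence as a state machine. The state names and the boolean flags (`offer_detected`, `cup_grasped`, `professor_found`, `delivered`) are hypothetical stand-ins for the outputs of the modules described in this README. They are not the project's actual control code.

```python
from enum import Enum, auto


class State(Enum):
    """Hypothetical stages of the Pick-and-Deliver-Objects task."""
    WAIT_FOR_OFFER = auto()
    GRASP_CUP = auto()
    SEARCH_PROFESSOR = auto()
    DELIVER_CUP = auto()
    DONE = auto()


def next_state(state, offer_detected=False, cup_grasped=False,
               professor_found=False, delivered=False):
    """Return the next stage given the latest (hypothetical) module results.

    A stage only advances when the result it is waiting for arrives;
    otherwise the robot stays in its current stage.
    """
    if state is State.WAIT_FOR_OFFER and offer_detected:
        return State.GRASP_CUP
    if state is State.GRASP_CUP and cup_grasped:
        return State.SEARCH_PROFESSOR
    if state is State.SEARCH_PROFESSOR and professor_found:
        return State.DELIVER_CUP
    if state is State.DELIVER_CUP and delivered:
        return State.DONE
    return state
```

In a running system, each control-loop iteration would call `next_state` with the most recent results from gesture detection, grasping, face recognition and navigation.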

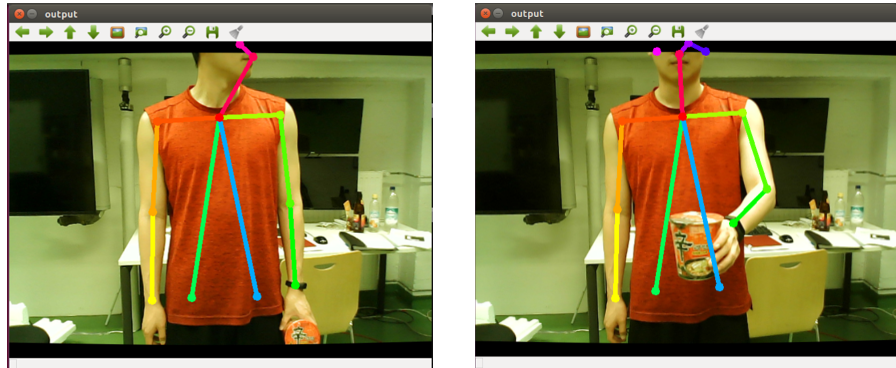

OpenPose is a state-of-the-art pose estimation library that performs real-time, multi-person keypoint detection for the body, face, and hands.

In this project, gesture detection is used to recognize the gesture of offering an object, which triggers the start of the Pick-and-Deliver-Objects task.
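
One way to turn OpenPose output into an "offering" decision is a geometric rule on the arm keypoints. OpenPose returns each keypoint as `(x, y, confidence)`, and in both the BODY_25 and COCO models indices 2 to 7 are the right/left shoulder, elbow and wrist. The rule below is a hypothetical heuristic, not the project's detector: an arm counts as offering when it is nearly straight and the wrist is held up near shoulder height. The thresholds are illustrative and would need tuning. An arm pointing straight at the camera is also foreshortened in 2D, which this sketch does not handle.

```python
import math

# OpenPose keypoint indices shared by the BODY_25 and COCO body models
R_SHOULDER, R_ELBOW, R_WRIST = 2, 3, 4
L_SHOULDER, L_ELBOW, L_WRIST = 5, 6, 7


def _angle_deg(a, b, c):
    """Angle ABC in degrees between 2D points a, b, c (b is the vertex)."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return 0.0
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def arm_is_offering(kp, shoulder, elbow, wrist, min_conf=0.3, min_angle=140.0):
    """Hypothetical rule: the arm is nearly straight and the wrist is raised."""
    s, e, w = kp[shoulder], kp[elbow], kp[wrist]
    if min(s[2], e[2], w[2]) < min_conf:
        return False  # a keypoint was not detected reliably
    straight = _angle_deg(s, e, w) >= min_angle
    # Image y grows downward: the wrist may sit at most half an
    # upper-arm length below the shoulder.
    upper_arm = math.hypot(e[0] - s[0], e[1] - s[1])
    raised = w[1] <= s[1] + 0.5 * upper_arm
    return straight and raised


def is_offering(kp):
    """True if either arm of one detected person matches the heuristic."""
    return (arm_is_offering(kp, R_SHOULDER, R_ELBOW, R_WRIST)
            or arm_is_offering(kp, L_SHOULDER, L_ELBOW, L_WRIST))
```

A practical detector would probably also require the gesture to persist over several frames before starting the task.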

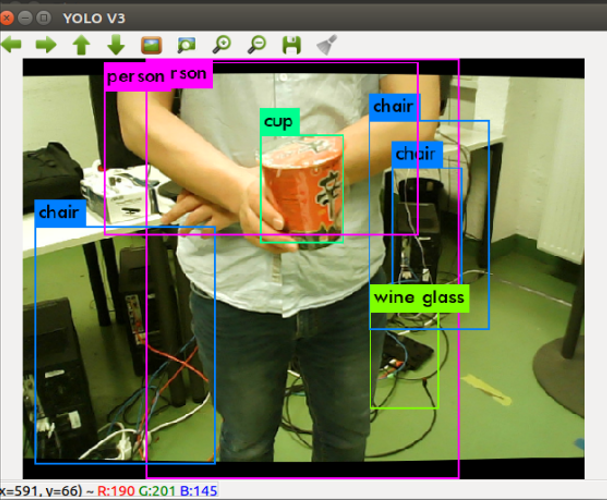

You only look once (YOLO) is a real-time object detection system. On a Pascal Titan X it processes images at 30 FPS and has a mAP of 57.9% on COCO test-dev.
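
YOLO predicts many overlapping candidate boxes and prunes them with non-maximum suppression (NMS). NMS is based on intersection over union (IoU), the same overlap measure that underlies the mAP metric. The sketch below is a plain-Python, single-class NMS. Boxes are assumed to be `(x1, y1, x2, y2)` pixel corners, and the 0.45 threshold is an illustrative value that this project's configuration may not use.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, iou_threshold=0.45):
    """Greedy NMS over (box, score) pairs of a single class.

    Boxes are visited from highest to lowest score. A box is kept only
    if it does not overlap an already kept box by iou_threshold or more.
    """
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, k[0]) < iou_threshold for k in kept):
            kept.append((box, score))
    return kept
```

For the cup, the highest-scoring box left after NMS would be the natural candidate for grasping.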

The bounding boxes obtained from YOLO give the position of the target object in the image, so that TIAGo can reach out and grasp it.
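
One common way to turn a 2D bounding box into a reachable 3D target works as follows, though this project may do it differently. Take the box centre, read the depth at that pixel from an aligned RGB-D image, and back-project it with the pinhole camera model. The intrinsics `fx`, `fy`, `cx`, `cy` come from the camera's intrinsic matrix, for example the `K` field of a ROS `sensor_msgs/CameraInfo` message. The helper names below are hypothetical.

```python
def bbox_center(bbox):
    """Pixel centre (u, v) of a box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = bbox
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def deproject(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) at a metric depth with the pinhole model.

    Returns (x, y, z) in the camera optical frame: x right, y down, z forward.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)
```

The resulting point is still in the camera frame. It would need to be transformed into the robot's base frame, for instance with tf, before a grasp is planned.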

Face Recognition is a Python library built on dlib's state-of-the-art face recognition, which uses deep learning. The model achieves an accuracy of 99.38% on the Labeled Faces in the Wild benchmark.

With face recognition, TIAGo can distinguish the Professor from other, unknown people and deliver the cup to the right person.
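
In the Face Recognition library, `face_recognition.face_encodings` produces a 128-dimensional encoding per face. `face_recognition.compare_faces` then treats two faces as a match when the Euclidean distance between their encodings is at most a tolerance, which defaults to 0.6. The sketch below reproduces only that matching step in plain Python so that it runs without dlib. The `identify` helper and the dictionary of known faces are hypothetical, and the test vectors are shortened toy encodings.

```python
import math


def identify(encoding, known, tolerance=0.6):
    """Name of the closest known face within `tolerance`, else "unknown".

    `known` maps a name (e.g. "Professor") to a reference encoding of the
    same length as `encoding`; distance is Euclidean, as in face_recognition.
    """
    best_name, best_dist = "unknown", float("inf")
    for name, reference in known.items():
        dist = math.dist(encoding, reference)
        if dist <= tolerance and dist < best_dist:
            best_name, best_dist = name, dist
    return best_name
```

In practice, the Professor's reference encoding would be computed once from a photo, and each face seen by the head camera would be encoded and passed to `identify`.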

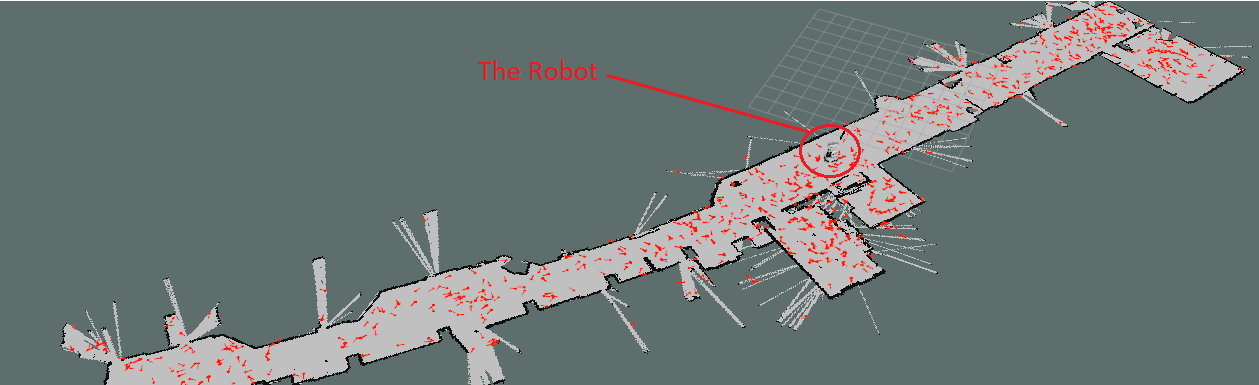

Using the collected laser and pose data, TIAGo creates a 2D occupancy grid map with OpenSLAM's GMapping, a laser-based SLAM library.
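
GMapping is a Rao-Blackwellized particle filter in which each particle carries its own occupancy grid. The sketch below shows only the grid update for a single laser beam, and it assumes the robot pose is already known. It is not GMapping's implementation. Cells the beam passes through become more likely free, and the cell where it hits becomes more likely occupied, both tracked in log-odds form. The sensor-model probabilities (0.7 and 0.3) are hypothetical.

```python
import math

# Hypothetical inverse sensor model, stored as log-odds increments
L_OCC = math.log(0.7 / 0.3)
L_FREE = math.log(0.3 / 0.7)


def bresenham(x0, y0, x1, y1):
    """Grid cells on the line from (x0, y0) to (x1, y1), endpoints included."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy
    return cells


def integrate_beam(log_odds, robot_cell, hit_cell):
    """Update a sparse log-odds grid (dict of cell -> value) with one beam."""
    ray = bresenham(*robot_cell, *hit_cell)
    for cell in ray[:-1]:
        log_odds[cell] = log_odds.get(cell, 0.0) + L_FREE
    log_odds[ray[-1]] = log_odds.get(ray[-1], 0.0) + L_OCC


def probability(l):
    """Convert a log-odds value back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(l))
```

Unvisited cells have log-odds 0, which corresponds to probability 0.5 ("unknown"). ROS publishes the finished map as a `nav_msgs/OccupancyGrid`, with values from 0 to 100 and -1 for unknown cells.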

Navigation is implemented with the ROS 2D navigation stack. The stack takes in odometry, sensor streams, and a goal pose, and it outputs safe velocity commands that are sent to TIAGo's mobile base.
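
The navigation stack's `move_base` node receives its goal as a `move_base_msgs/MoveBaseGoal`, whose `target_pose` is a `geometry_msgs/PoseStamped` with the orientation given as a quaternion. The sketch below computes a delivery goal that stops a short distance in front of the Professor and faces them. It assumes that both positions are already known in the map frame. The `approach_goal` helper and the 0.8 m standoff are hypothetical.

```python
import math


def yaw_to_quaternion(yaw):
    """Quaternion (x, y, z, w) for a rotation of `yaw` radians about z."""
    return (0.0, 0.0, math.sin(yaw / 2.0), math.cos(yaw / 2.0))


def approach_goal(robot_xy, person_xy, standoff=0.8):
    """Goal (x, y, quaternion) `standoff` metres short of the person, facing them."""
    dx = person_xy[0] - robot_xy[0]
    dy = person_xy[1] - robot_xy[1]
    dist = math.hypot(dx, dy)
    yaw = math.atan2(dy, dx)  # heading from the robot towards the person
    if dist <= standoff:
        # Already close enough: stay in place and only turn to face the person.
        gx, gy = robot_xy
    else:
        scale = (dist - standoff) / dist
        gx, gy = robot_xy[0] + dx * scale, robot_xy[1] + dy * scale
    return gx, gy, yaw_to_quaternion(yaw)
```

These values would fill `target_pose.pose.position` and `target_pose.pose.orientation` before the goal is sent to `move_base`.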