This architecture creates a virus scanner to scan files uploaded to Oracle Cloud Infrastructure (OCI) Object Storage. The virus scanner is deployed on Oracle Container Engine for Kubernetes and uses Kubernetes Event-driven Autoscaling to manage virus scan jobs.

Virus scan jobs are configured to scan single files and zip files. When files are uploaded to the created Object Storage bucket, OCI Events and OCI Queue trigger virus scan jobs on Oracle Container Engine for Kubernetes (at most 3 jobs run simultaneously by default, but this can be changed in the Kubernetes Event-driven Autoscaling configuration). After scanning, files are moved to Object Storage buckets depending on the scan result (clean or infected). If there are no files to scan, the nodes in pool 2 are scaled down to zero; when files arrive for scanning, the nodes are scaled back up. Kubernetes Event-driven Autoscaling (KEDA) schedules the scan jobs, and the OKE cluster autoscaler installed in a later step adds and removes the nodes they run on.

The virus scanner uses uvscan, a third-party command line scanner from Trellix, available as a free trial. The application code is written mostly in Node.js and uses the Oracle Cloud Infrastructure SDK for JS.

    git clone https://github.com/oracle-devrel/arch-virus-scanning-with-oke-autoscaling

- In Cloud UI create a dynamic group for the function (resource principal):

      ALL {resource.type = 'fnfunc', resource.compartment.id = 'ocid1.compartment.oc1..'}

- Create another dynamic group for OKE and other resources (instance principal):

      ANY {instance.compartment.id = 'ocid1.compartment.oc1..'}

- In Cloud UI create policies, for example:

      Allow dynamic-group <YOUR FUNCTION DYNAMIC GROUP> to manage all-resources in compartment <YOUR COMPARTMENT>
      Allow dynamic-group <YOUR OTHER DYNAMIC GROUP> to manage all-resources in compartment <YOUR COMPARTMENT>

- In Cloud UI create Container registry `scanning-writeq` for the function created in the next step

The `scanning-writeq` function ingests the events that the Object Storage bucket `scanning-ms` emits when files are uploaded to it, and writes each file to the OCI Queue `scanning` for the OKE jobs to process with virus scanning.
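As an illustration of that hand-off, the sketch below maps an incoming event to the body of a queue message. It is not the repository's `func.js`: the helper name `toQueueMessage` is hypothetical, the event field names (`data.resourceName`, `data.additionalDetails.bucketName`) are assumptions about the general shape of Object Storage events, and sending the object name as the message content is an assumption as well.

```javascript
// Hypothetical helper: maps an Object Storage "create object" event to the
// body of a queue message. The field names are assumptions about the event shape.
function toQueueMessage(event) {
  const data = (event && event.data) || {};
  const details = data.additionalDetails || {};
  if (!data.resourceName) {
    throw new Error("event does not carry an object name");
  }
  return {
    bucket: details.bucketName || null,
    // The object name is what a scan job would need to fetch the file.
    content: data.resourceName,
  };
}

// Example event, trimmed to the fields used above.
const sampleEvent = {
  eventType: "com.oraclecloud.objectstorage.createobject",
  data: {
    resourceName: "files.zip",
    additionalDetails: { bucketName: "scanning-ms" },
  },
};

console.log(toQueueMessage(sampleEvent));
```

Keeping the mapping in a pure function like this makes it easy to check against sample events before wiring it to the queue client.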

- In Cloud UI create Function Application `scanning-ms`
- In Cloud UI also enable logging for the `scanning-ms` application
- In Cloud Shell (as part of the Cloud UI) follow the "Getting started" instructions for the application `scanning-ms` and run:

      fn init --runtime node scanning-writeq
      Creating function at: ./scanning-writeq
      Function boilerplate generated.
      func.yaml created.

- In Cloud Code Editor (as part of the Cloud UI) navigate to the `scanning-writeq` directory and copy/paste the `func.js` and `package.json` file contents from the localhost `scanning-writeq` directory
- Then in Cloud Shell run:

      cd scanning-writeq
      fn -v deploy --app scanning-ms

This will create and push the OCIR image and deploy the Function `scanning-writeq` to the application `scanning-ms`

- In Cloud UI create OKE cluster using the "Quick create" option
- Use default settings for the cluster creation, except for the `node pool size` that can be 1
- Add a second Node Pool `pool2` with `pool size 0` with defaults for the rest of the settings. If preferred the shape can be adjusted to use a larger shape to process the virus scans faster
- Create cluster access from `localhost` to the OKE cluster. Click the `Access Cluster` button for details for the `Local Access` option. This requires `oci cli` installed in `localhost`

-

In Cloud UI create Resource Manager Stack

-

Drag&drop

terraformdirectory fromlocalhostto Stack Configuration -

Use default settings and click continue

-

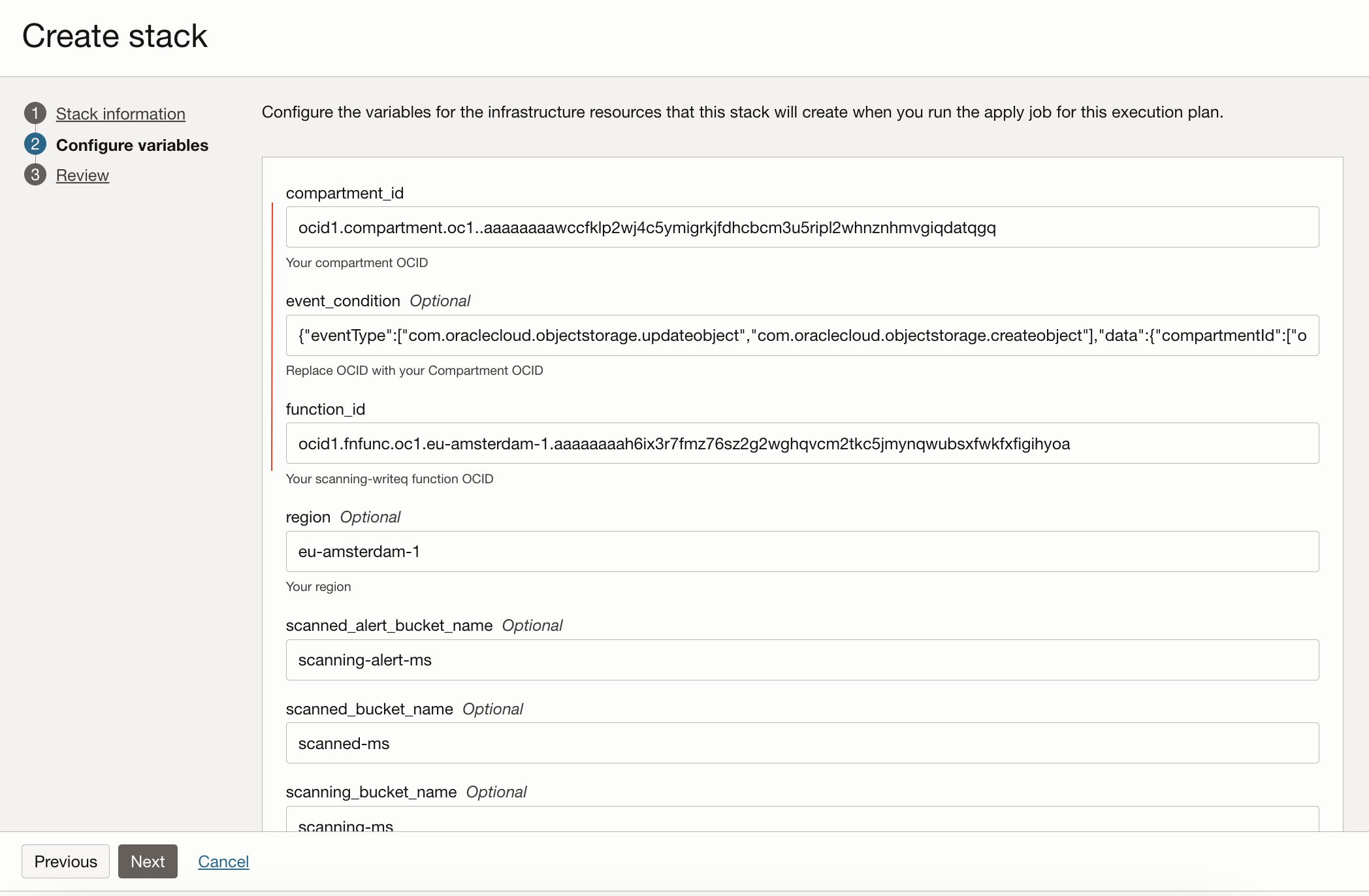

In the Configure variables (Step 2 for the Stack creation) fill in the

compartment_idof your compartment OCID,function_idof yourscanning-writeqfunction OCID and replace theOCIDof theevent_conditionwith your compartment OCID

- Click continue and create the Stack. Create the resources by clicking

Applybutton

This will create three Object Storage buckets, an Event rule, a Log Group and a Log and an OCI Queue for the virus scanning to operate on OKE

- In Cloud UI add `scanning-writeq` function configuration
- Add configuration key `QUEUE` with value of the OCID of the `scanning-ms` queue and key `ENDPOINT` with the `endpoint` value of the `scanning-ms` queue

- Download `uvscan` Command Line Scanner for Linux-64bit free trial from https://www.trellix.com/en-us/downloads/trials.html?selectedTab=endpointprotection
- Download uvscan `datafile` with wget e.g.

      wget https://update.nai.com/products/commonupdater/current/vscandat1000/dat/0000/avvdat-10637.zip

Copy the downloaded files under `scanning-readq-job` directory in localhost

    cd scanning-readq-job
    ls -la
    ..
    avvdat-10637.zip
    cls-l64-703-e.tar.gz
    ..

Note that the actual file names can be different from the ones above.

In Cloud UI create Container registries `scanning-readq` and `scanning-readq-job`

In localhost build the application images using Docker and push to OCIR:

    cd scanning-readq
    docker build -t <REGION-CODE>.ocir.io/<YOUR TENANCY NAMESPACE>/scanning-readq:1.0 .
    docker push <REGION-CODE>.ocir.io/<YOUR TENANCY NAMESPACE>/scanning-readq:1.0

For the `scanning-readq-job`, before building, modify the file names in the `Dockerfile` for uvscan and its data file (line 15 and lines 19-21) to match the names of the files that were downloaded earlier.

    cd scanning-readq-job
    docker build -t <REGION-CODE>.ocir.io/<YOUR TENANCY NAMESPACE>/scanning-readq-job:1.0 .
    docker push <REGION-CODE>.ocir.io/<YOUR TENANCY NAMESPACE>/scanning-readq-job:1.0

Create secret `ocirsecret` for the OKE cluster to be able to pull the application images from OCIR:

    kubectl create secret docker-registry ocirsecret \
      --docker-username '<YOUR TENANCY NAMESPACE>/oracleidentitycloudservice/<YOUR USERNAME>' \
      --docker-password '<YOUR ACCESS TOKEN>' \
      --docker-server '<REGION-CODE>.ocir.io'

To deploy the `scanning-readq` image, modify `scanning-readq/scanning-readq.yaml` in localhost to match your values (the image path and the `QUEUE` and `ENDPOINT` values):

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: scanning-readq
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: scanning-readq
          name: scanning-readq
      template:
        metadata:
          labels:
            app: scanning-readq
            name: scanning-readq
        spec:
          containers:
            - name: scanning-readq
              image: REGION-KEY.ocir.io/TENANCY-NAMESPACE/scanning-readq:1.0
              imagePullPolicy: Always
              ports:
                - containerPort: 3000
                  name: readq-http
              env:
                - name: QUEUE
                  value: "ocid1.queue.oc1.."
                - name: ENDPOINT
                  value: "https://cell-1.queue.messaging..oci.oraclecloud.com"
          imagePullSecrets:
            - name: ocirsecret

Note: The env variable `QUEUE` is the OCID of the scanning queue created in the earlier step with Terraform using the Resource Manager Stack. Copy it from the Cloud UI. Also copy the value for the env variable `ENDPOINT` from the Queue settings in the Cloud UI.

Then run:

    kubectl create -f scanning-readq/scanning-readq.yaml

To deploy the matching `scanning-readq` service on port 3000 for `scanning-readq`, run:

    kubectl create -f scanning-readq/scanning-readq-svc.yaml

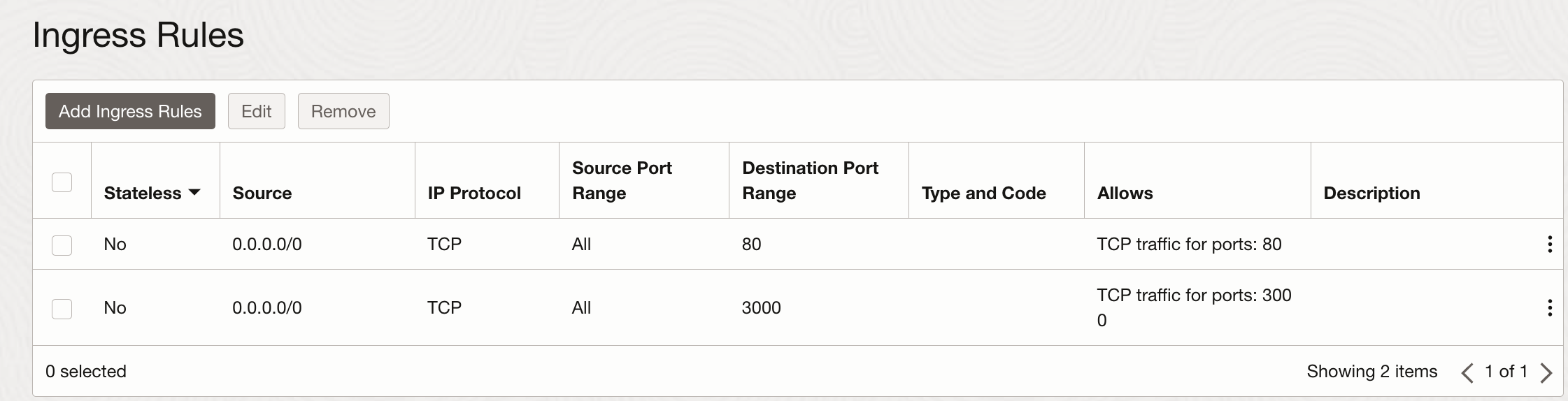

Modify the OKE security list `oke-svclbseclist-quick-cluster1-xxxxxxxxxx` by adding an ingress rule for port 3000 to enable traffic to the service.

After adding the security rule, get the EXTERNAL-IP of the service by running:

    kubectl get services
    NAME                TYPE           CLUSTER-IP    EXTERNAL-IP      PORT(S)          AGE
    scanning-readq-lb   LoadBalancer   10.96.84.40   141.122.194.89   3000:30777/TCP   6d23h

To test access to the `scanning-readq` service, open its URL http://EXTERNAL-IP:3000/stats with curl or from your browser:

    curl http://<EXTERNAL-IP>:3000/stats
    {"queueStats":{"queue":{"visibleMessages":0,"inFlightMessages":0,"sizeInBytes":0},"dlq":{"visibleMessages":0,"inFlightMessages":0,"sizeInBytes":0}},"opcRequestId":"07857530C320-11ED-AE89-FFC729A3C/BCA92AC274B1CC09FB9C7A6975DC609B/7D9970C765A85603727C2E125DB0F9B0"}

To deploy `scanning-readq-job`, first deploy the KEDA operator with Helm to your OKE cluster.

Then modify `scanning-readq-job/keda.yaml` in localhost to match your values (the image path, the `QUEUE`, `ENDPOINT` and `LOG` values, and the `url` of the trigger):

    apiVersion: keda.sh/v1alpha1
    kind: ScaledJob
    metadata:
      name: scanning-readq-job-scaler
    spec:
      jobTargetRef:
        template:
          spec:
            nodeSelector:
              name: pool2
            containers:
              - name: scanning-readq-job
                image: REGION-KEY.ocir.io/TENANCY-NAMESPACE/scanning-readq-job:1.0
                imagePullPolicy: Always
                resources:
                  requests:
                    cpu: "500m"
                env:
                  - name: QUEUE
                    value: "ocid1.queue.oc1.."
                  - name: ENDPOINT
                    value: "https://cell-1.queue.messaging..oci.oraclecloud.com"
                  - name: LOG
                    value: "ocid1.log.oc1.."
            restartPolicy: OnFailure
            imagePullSecrets:
              - name: ocirsecret
        backoffLimit: 0
      pollingInterval: 5 # Optional. Default: 30 seconds
      maxReplicaCount: 3 # Optional. Default: 100
      successfulJobsHistoryLimit: 3 # Optional. Default: 100. How many completed jobs should be kept.
      failedJobsHistoryLimit: 2 # Optional. Default: 100. How many failed jobs should be kept.
      scalingStrategy:
        strategy: "default"
      triggers:
        - type: metrics-api
          metadata:
            targetValue: "1"
            url: "http://EXTERNAL-IP:3000/stats"
            valueLocation: 'queueStats.queue.visibleMessages'

Then run:

    kubectl create -f scanning-readq-job/keda.yaml

Note: The env variable `QUEUE` is the OCID of the scanning queue created in the earlier step with Terraform using the Resource Manager Stack. Copy it from the Cloud UI. Also copy the value for the env variable `ENDPOINT` from the Queue settings in the Cloud UI. The env variable `LOG` is the OCID of the scanning log created in the earlier step with Terraform using the Resource Manager Stack. Copy it from the Cloud UI, too. Also configure the `scanning-readq` service EXTERNAL-IP as the endpoint `url` for the `metrics-api` trigger.
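To show how the trigger settings relate to the number of jobs, here is a rough sketch. It resolves `valueLocation` against a `/stats` response and then estimates how many new jobs would be started for given `targetValue` and `maxReplicaCount` values. The formula approximates the `default` scaling strategy as described in the KEDA documentation; it is not KEDA's own code. The function names `valueAt` and `jobsToStart` and the sample numbers are assumptions made for illustration.

```javascript
// Resolves a dotted path such as "queueStats.queue.visibleMessages"
// against a parsed JSON object; returns undefined if a segment is missing.
function valueAt(obj, path) {
  return path.split(".").reduce(
    (node, key) => (node == null ? undefined : node[key]),
    obj
  );
}

// Estimates how many new jobs to start: enough for the pending messages,
// capped by maxReplicaCount, minus the jobs that are already running.
function jobsToStart(metric, targetValue, maxReplicaCount, runningJobs) {
  const wanted = Math.min(maxReplicaCount, Math.ceil(metric / targetValue));
  return Math.max(0, wanted - runningJobs);
}

// Sample /stats response with five messages waiting (made-up numbers).
const stats = {
  queueStats: {
    queue: { visibleMessages: 5, inFlightMessages: 0, sizeInBytes: 45 },
    dlq: { visibleMessages: 0, inFlightMessages: 0, sizeInBytes: 0 },
  },
};

const pending = valueAt(stats, "queueStats.queue.visibleMessages");
console.log(jobsToStart(pending, 1, 3, 0)); // 3: capped by maxReplicaCount
console.log(jobsToStart(pending, 1, 3, 2)); // 1: two jobs are already running
```

With `targetValue: "1"` each visible message maps to one job, so `maxReplicaCount` is the setting that bounds how many scans run at the same time.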

To autoscale the nodes in the OKE `pool2` from zero to one, so that the `scanning-readq-job` jobs have a node to run on, the OKE cluster autoscaler needs to be installed.

To do this, edit `scanning-readq-job/cluster-autoscaler.yaml` in localhost to match your values (the autoscaler image and the node pool OCID in the `--nodes` argument):

    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-addon: cluster-autoscaler.addons.k8s.io
        k8s-app: cluster-autoscaler
      name: cluster-autoscaler
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: cluster-autoscaler
      labels:
        k8s-addon: cluster-autoscaler.addons.k8s.io
        k8s-app: cluster-autoscaler
    rules:
      - apiGroups: [""]
        resources: ["events", "endpoints"]
        verbs: ["create", "patch"]
      - apiGroups: [""]
        resources: ["pods/eviction"]
        verbs: ["create"]
      - apiGroups: [""]
        resources: ["pods/status"]
        verbs: ["update"]
      - apiGroups: [""]
        resources: ["endpoints"]
        resourceNames: ["cluster-autoscaler"]
        verbs: ["get", "update"]
      - apiGroups: [""]
        resources: ["nodes"]
        verbs: ["watch", "list", "get", "patch", "update"]
      - apiGroups: [""]
        resources:
          - "pods"
          - "services"
          - "replicationcontrollers"
          - "persistentvolumeclaims"
          - "persistentvolumes"
        verbs: ["watch", "list", "get"]
      - apiGroups: ["extensions"]
        resources: ["replicasets", "daemonsets"]
        verbs: ["watch", "list", "get"]
      - apiGroups: ["policy"]
        resources: ["poddisruptionbudgets"]
        verbs: ["watch", "list"]
      - apiGroups: ["apps"]
        resources: ["statefulsets", "replicasets", "daemonsets"]
        verbs: ["watch", "list", "get"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses", "csinodes"]
        verbs: ["watch", "list", "get"]
      - apiGroups: ["batch", "extensions"]
        resources: ["jobs"]
        verbs: ["get", "list", "watch", "patch"]
      - apiGroups: ["coordination.k8s.io"]
        resources: ["leases"]
        verbs: ["create"]
      - apiGroups: ["coordination.k8s.io"]
        resourceNames: ["cluster-autoscaler"]
        resources: ["leases"]
        verbs: ["get", "update"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: cluster-autoscaler
      namespace: kube-system
      labels:
        k8s-addon: cluster-autoscaler.addons.k8s.io
        k8s-app: cluster-autoscaler
    rules:
      - apiGroups: [""]
        resources: ["configmaps"]
        verbs: ["create","list","watch"]
      - apiGroups: [""]
        resources: ["configmaps"]
        resourceNames: ["cluster-autoscaler-status", "cluster-autoscaler-priority-expander"]
        verbs: ["delete", "get", "update", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: cluster-autoscaler
      labels:
        k8s-addon: cluster-autoscaler.addons.k8s.io
        k8s-app: cluster-autoscaler
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-autoscaler
    subjects:
      - kind: ServiceAccount
        name: cluster-autoscaler
        namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: cluster-autoscaler
      namespace: kube-system
      labels:
        k8s-addon: cluster-autoscaler.addons.k8s.io
        k8s-app: cluster-autoscaler
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: cluster-autoscaler
    subjects:
      - kind: ServiceAccount
        name: cluster-autoscaler
        namespace: kube-system
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: cluster-autoscaler-2
      namespace: kube-system
      labels:
        app: cluster-autoscaler
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: cluster-autoscaler
      template:
        metadata:
          labels:
            app: cluster-autoscaler
          annotations:
            prometheus.io/scrape: 'true'
            prometheus.io/port: '8085'
        spec:
          serviceAccountName: cluster-autoscaler
          containers:
            - image: fra.ocir.io/oracle/oci-cluster-autoscaler:1.25.0-6
              name: cluster-autoscaler
              resources:
                limits:
                  cpu: 100m
                requests:
                  cpu: 100m
              command:
                - ./cluster-autoscaler
                - --v=4
                - --stderrthreshold=info
                - --cloud-provider=oci-oke
                - --max-node-provision-time=25m
                - --nodes=0:5:ocid1.nodepool.oc1..
                - --scale-down-delay-after-add=10m
                - --scale-down-unneeded-time=10m
                - --unremovable-node-recheck-timeout=5m
                - --balance-similar-node-groups
                - --balancing-ignore-label=displayName
                - --balancing-ignore-label=hostname
                - --balancing-ignore-label=internal_addr
                - --balancing-ignore-label=oci.oraclecloud.com/fault-domain
              imagePullPolicy: "Always"
              env:
                - name: OKE_USE_INSTANCE_PRINCIPAL
                  value: "true"
                - name: OCI_SDK_APPEND_USER_AGENT
                  value: "oci-oke-cluster-autoscaler"

For the proper autoscaler image tag to use in the YAML above, please check the autoscaler documentation.

The node pool OCID in the `--nodes` argument is the OCID of the OKE cluster `pool2`.
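The `--nodes` value packs three things into one string: the minimum node count, the maximum node count, and the node pool OCID, separated by colons. As a small sanity check before applying the manifest, a sketch like the following can split and validate the value. The helper name `parseNodesFlag` is hypothetical, and the OCID shown is the truncated placeholder from the YAML above.

```javascript
// Splits a --nodes value of the form "<min>:<max>:<node pool OCID>".
// Only the first two colons are treated as separators.
function parseNodesFlag(value) {
  const match = /^(\d+):(\d+):(.+)$/.exec(value);
  if (!match) {
    throw new Error("expected <min>:<max>:<node pool OCID>");
  }
  const min = Number(match[1]);
  const max = Number(match[2]);
  if (max < min) {
    throw new Error("max must not be smaller than min");
  }
  return { min, max, nodePoolId: match[3] };
}

// Placeholder OCID as it appears in the manifest; replace with your own.
console.log(parseNodesFlag("0:5:ocid1.nodepool.oc1.."));
```

A minimum of 0 is what allows `pool2` to be emptied when no scan jobs are pending.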

To create the autoscaler run:

    kubectl create -f scanning-readq-job/cluster-autoscaler.yaml

Upload a test file `files.zip` using `oci cli` from localhost:

    oci os object put --bucket-name scanning-ms --region <YOUR REGION> --file files.zip
    {
      "etag": "59dc11dc-62f3-4df4-886d-adf9c9c00dc4",
      "last-modified": "Wed, 15 Mar 2023 10:46:34 GMT",
      "opc-content-md5": "5D53dhf9MeT+gS8qJzbOAw=="
    }

Monitor the queue length using the `scanning-readq` service:

    curl http://<EXTERNAL-IP>:3000/stats
    {"queueStats":{"queue":{"visibleMessages":0,"inFlightMessages":0,"sizeInBytes":0},"dlq":{"visibleMessages":0,"inFlightMessages":0,"sizeInBytes":0}},"opcRequestId":"07857530C320-11ED-AE89-FFC729A3C/BCA92AC274B1CC09FB9C7A6975DC609B/7D9970C765A85603727C2E125DB0F9B0"}

The queue length will increase to 1 after the Object Storage event has triggered the `scanning-writeq` function:

    curl http://<EXTERNAL-IP>:3000/stats
    {"queueStats":{"queue":{"visibleMessages":1,"inFlightMessages":0,"sizeInBytes":9},"dlq":{"visibleMessages":0,"inFlightMessages":0,"sizeInBytes":0}},"opcRequestId":"0A1F2850C31F-11ED-AE89-FFC729A3C/41F3E07FC383D9E2F4EE58E4996FC179/D8097243379228D86AC64378A6701FEA"}

The `scanning-readq-job` jobs are then scheduled:

    kubectl get pods --watch
    NAME                                    READY   STATUS    RESTARTS   AGE
    scanning-readq-58d6bdd64c-9bbsq         1/1     Running   1          24h
    scanning-readq-job-scaler-n2fs6-pn2ns   0/1     Pending   0          0s

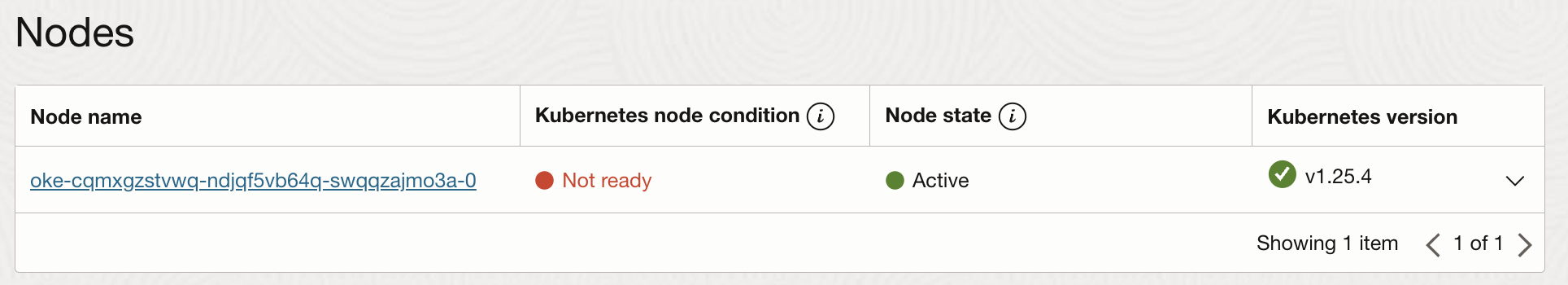

Wait a while for the OKE cluster autoscaler to provision the node in `pool2` for the jobs to run on.

Once the node is available the job will run:

    kubectl get pods --watch
    NAME                                    READY   STATUS              RESTARTS   AGE
    scanning-readq-58d6bdd64c-9bbsq         1/1     Running             1          24h
    scanning-readq-job-scaler-n2fs6-pn2ns   0/1     Pending             0          0s
    scanning-readq-job-scaler-n2fs6-pn2ns   0/1     Pending             0          0s
    scanning-readq-job-scaler-n2fs6-pn2ns   0/1     Pending             0          3m13s
    scanning-readq-job-scaler-n2fs6-pn2ns   0/1     ContainerCreating   0          3m13s
    scanning-readq-job-scaler-n2fs6-pn2ns   1/1     Running             0          5m11s

While the job is running, the queue reports the message as in flight (`inFlightMessages`):

    curl http://<EXTERNAL-IP>:3000/stats
    {"queueStats":{"queue":{"visibleMessages":0,"inFlightMessages":1,"sizeInBytes":9},"dlq":{"visibleMessages":0,"inFlightMessages":0,"sizeInBytes":0}},"opcRequestId":"0A1F2850C31F-11ED-AE89-FFC729A3C/41F3E07FC383D9E2F4EE58E4996FC179/D8097243379228D86AC64378A6701FEA"}

After the job has run the virus scan, its pod remains in the `Completed` state:

    kubectl get pods
    NAME                                    READY   STATUS      RESTARTS   AGE
    scanning-readq-58d6bdd64c-9bbsq         1/1     Running     1          24h
    scanning-readq-job-scaler-n2fs6-pn2ns   0/1     Completed   0          6m1s

The queue also goes back to its original state with zero messages, since the message was processed.
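The three `/stats` responses seen during the test (message visible, message in flight, queue empty) can be told apart with a few lines of code, which may help when scripting the test. The sketch below is illustrative only: the function name `queuePhase` and the phase labels are made up here, and the sample objects are the responses from this walkthrough with `opcRequestId` omitted.

```javascript
// Classifies a parsed /stats response by what the main queue is doing.
// The phase names are illustrative labels, not part of the service.
function queuePhase(stats) {
  const { visibleMessages, inFlightMessages } = stats.queueStats.queue;
  if (inFlightMessages > 0) return "scanning"; // a job has picked up a message
  if (visibleMessages > 0) return "waiting";   // messages wait for a job
  return "idle";                               // nothing left to process
}

const emptyDlq = { visibleMessages: 0, inFlightMessages: 0, sizeInBytes: 0 };

const afterUpload = {
  queueStats: {
    queue: { visibleMessages: 1, inFlightMessages: 0, sizeInBytes: 9 },
    dlq: emptyDlq,
  },
};
const duringScan = {
  queueStats: {
    queue: { visibleMessages: 0, inFlightMessages: 1, sizeInBytes: 9 },
    dlq: emptyDlq,
  },
};
const afterScan = {
  queueStats: {
    queue: { visibleMessages: 0, inFlightMessages: 0, sizeInBytes: 0 },
    dlq: emptyDlq,
  },
};

console.log(queuePhase(afterUpload)); // waiting
console.log(queuePhase(duringScan));  // scanning
console.log(queuePhase(afterScan));   // idle
```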

To see the log for the job run:

    kubectl logs scanning-readq-job-scaler-n2fs6-pn2ns
    Job reading from Q ..
    Scanning files.zip
    ################# Scanning found no infected files #########################
    Job reading from Q ..
    Q empty - finishing up

After a while the `pool2` will be scaled down to zero by the autoscaler if no further scanning jobs are running

The uploaded test `files.zip` file was moved from the `scanning-ms` bucket to `scanned-ms` bucket in the process (assuming the test file was not infected)

You can also upload multiple files and see several jobs being scheduled and run simultaneously for scanning

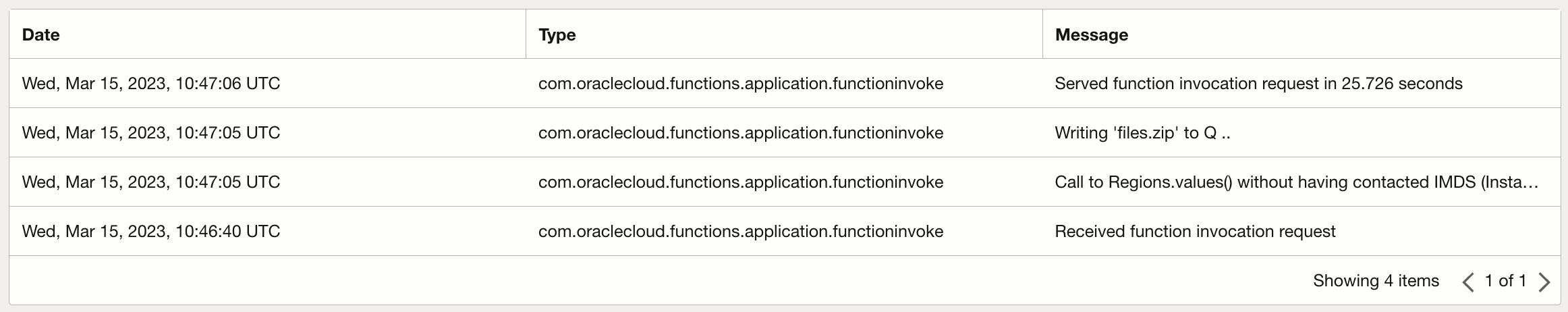

In the Cloud UI see the log for the function application scanning-ms:

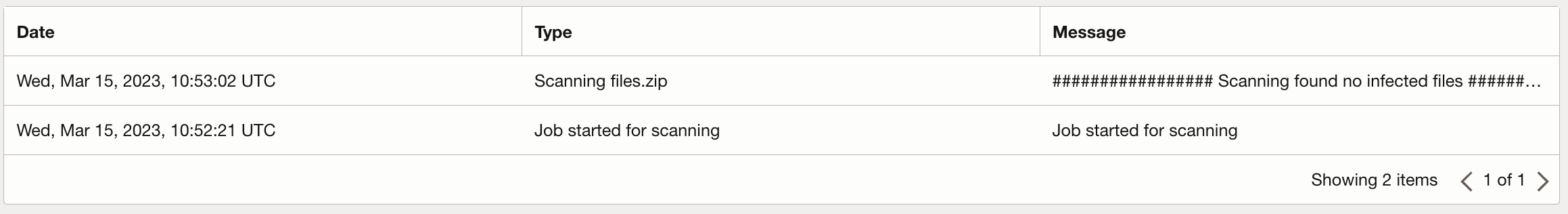

In the Cloud UI see the custom log scanning for the scanning-readq-job job(s):

OKE cluster with oci cli access from localhost and OCI cloud shell

This project is open source. Please submit your contributions by forking this repository and submitting a pull request! Oracle appreciates any contributions that are made by the open source community.

Copyright (c) 2022 Oracle and/or its affiliates.

Licensed under the Universal Permissive License (UPL), Version 1.0.

See LICENSE for more details.

ORACLE AND ITS AFFILIATES DO NOT PROVIDE ANY WARRANTY WHATSOEVER, EXPRESS OR IMPLIED, FOR ANY SOFTWARE, MATERIAL OR CONTENT OF ANY KIND CONTAINED OR PRODUCED WITHIN THIS REPOSITORY, AND IN PARTICULAR SPECIFICALLY DISCLAIM ANY AND ALL IMPLIED WARRANTIES OF TITLE, NON-INFRINGEMENT, MERCHANTABILITY, AND FITNESS FOR A PARTICULAR PURPOSE. FURTHERMORE, ORACLE AND ITS AFFILIATES DO NOT REPRESENT THAT ANY CUSTOMARY SECURITY REVIEW HAS BEEN PERFORMED WITH RESPECT TO ANY SOFTWARE, MATERIAL OR CONTENT CONTAINED OR PRODUCED WITHIN THIS REPOSITORY. IN ADDITION, AND WITHOUT LIMITING THE FOREGOING, THIRD PARTIES MAY HAVE POSTED SOFTWARE, MATERIAL OR CONTENT TO THIS REPOSITORY WITHOUT ANY REVIEW. USE AT YOUR OWN RISK.