Automated tool for scraping job postings into a .csv file.

- Never see the same job twice!

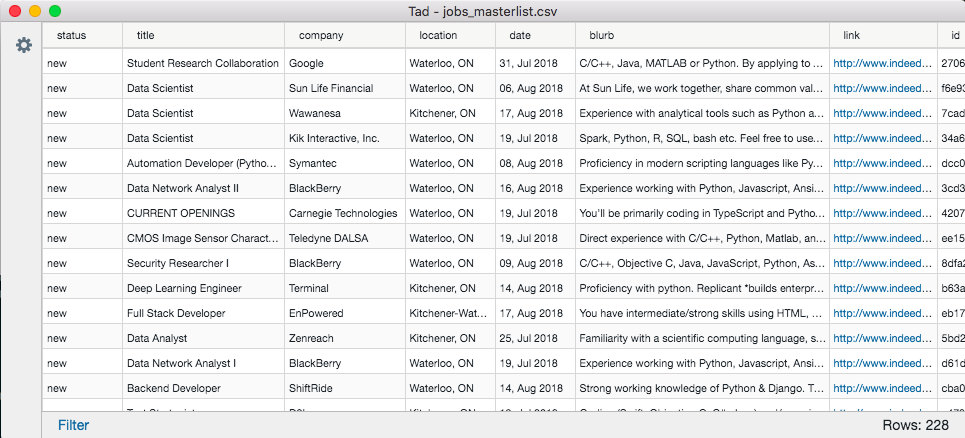

- Browse all search results at once, in an easy to read/sort spreadsheet.

- Keep track of all explicitly new job postings in your area.

- See jobs from multiple job search sites all in one place.

The spreadsheet for managing your job search:

JobFunnel requires Python 3.6 or later.

All dependencies are listed in setup.py, and can be installed automatically with pip when installing JobFunnel.

`pip install git+https://github.com/PaulMcInnis/JobFunnel.git`

`funnel --help`

If you want to develop JobFunnel, you may want to install it in-place:

`git clone git@github.com:PaulMcInnis/JobFunnel.git jobfunnel`

`pip install -e ./jobfunnel`

`funnel --help`

- Set your job search preferences in the `yaml` configuration file (or use `-kw`).
- Run `funnel` to scrape all available job listings.
- Review jobs in the master-list and update the job `status` to other values such as `interview` or `offer`.
- Set any undesired job `status` to `archive`; these jobs will be removed from the `.csv` next time you run `funnel`.
- Check out demo/readme.md if you want to try the demo.

Note: rejected jobs will be filtered out and will disappear from the output .csv.
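The status-based filtering described above can be pictured with a small Python sketch. This is not JobFunnel's own code: the `status` column name, the sample rows, and the helper `filter_jobs` are assumptions made for illustration, and the real master-list layout may differ.

```python
import csv
import io

# Assumption: these status values cause a job to be dropped from the .csv.
REMOVED_STATUSES = {"archive", "rejected"}


def filter_jobs(rows):
    """Keep only rows whose status is not one of the removed statuses."""
    return [
        row for row in rows
        if row["status"].strip().lower() not in REMOVED_STATUSES
    ]


# Hypothetical master-list contents, used only to exercise the filter.
sample = io.StringIO(
    "status,title,company\n"
    "new,Data Engineer,Acme\n"
    "archive,Web Developer,Initech\n"
    "interview,ML Engineer,Globex\n"
    "rejected,QA Analyst,Umbrella\n"
)

kept = filter_jobs(list(csv.DictReader(sample)))
print([job["title"] for job in kept])
```

Custom values such as `interview` pass through untouched, because the filter only looks for the statuses it has been told to remove.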

- Custom Status

  Note that any custom states (e.g. `applied`) are preserved in the spreadsheet.

- Running Filters

  To update active filters and to see any `new` jobs going forward, just run `funnel` again and review the `.csv` file.

- Recovering Lost Master-list

  If your master-list ever gets deleted, you still have the historic pickle files. Simply run `funnel --recover` to generate a new master-list.

- Managing Multiple Searches

  You can keep multiple search results across multiple `.csv` files:

  `funnel -kw Python -o python_search`

  `funnel -kw AI Machine Learning -o ML_search`

- Filtering Undesired Companies

  Filter undesired companies by providing your own `yaml` configuration and adding them to the black list (see `JobFunnel/jobfunnel/config/settings.yaml`).

- Automating Searches

  JobFunnel can be easily automated to run nightly with crontab. For more information see the crontab document.

- Reviewing Jobs in Terminal

  You can review the job list in the command line:

  `column -s, -t < master_list.csv | less -#2 -N -S`

- Saving Duplicates

  You can save removed duplicates in a separate file, which is stored in the same place as your master list:

  `funnel --save_dup`

- Respectful Delaying

  Respectfully scrape your job posts with our built-in delaying algorithm, which can be configured using a config file (see `JobFunnel/jobfunnel/config/settings.yaml`) or with command line arguments:

  - `-d` lets you set your max delay value: `funnel -s demo/settings.yaml -kw AI -d 15`
  - `-r` lets you specify if you want to use random delaying, and uses `-d` to control the range of randoms we pull from: `funnel -s demo/settings.yaml -kw AI -r`
  - `-c` specifies converging random delay, which is an alternative mode of random delay. Random delay needs to be turned on as well for it to work. Proper usage would look something like this: `funnel -s demo/settings.yaml -kw AI -r -c`
  - `-md` lets you set a minimum delay value: `funnel -s demo/settings.yaml -d 15 -md 5`
  - `--fun` can be used to set which mathematical function (`constant`, `linear`, or `sigmoid`) is used to calculate delay: `funnel -s demo/settings.yaml --fun sigmoid`
  - `--no_delay` turns off delaying, but its usage is not recommended.

To better understand how to configure delaying, check out this Jupyter Notebook breaking down the algorithm step by step with code and visualizations.
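For a rough intuition of how a maximum delay, a minimum delay, and a delay function might interact, here is a minimal Python sketch. It is an illustration under stated assumptions, not JobFunnel's actual algorithm: the function names `delay_schedule` and `randomize`, the sigmoid steepness of 10, and the choice to clamp values between the minimum and maximum are all hypothetical.

```python
import math
import random


def delay_schedule(n, max_delay, min_delay=0.0, fun="constant"):
    """Return n delays (seconds) shaped by fun and clamped to [min_delay, max_delay]."""
    schedule = []
    for i in range(n):
        # Position of this request in the run, scaled to 0..1.
        x = i / (n - 1) if n > 1 else 1.0
        if fun == "constant":
            delay = max_delay
        elif fun == "linear":
            delay = max_delay * x
        elif fun == "sigmoid":
            # Assumed steepness of 10, centred on the middle of the run.
            delay = max_delay / (1.0 + math.exp(-10.0 * (x - 0.5)))
        else:
            raise ValueError(f"unknown delay function: {fun}")
        schedule.append(min(max(delay, min_delay), max_delay))
    return schedule


def randomize(schedule, min_delay=0.0, rng=random):
    """Assumed random mode: draw each delay uniformly between min_delay and its cap."""
    return [rng.uniform(min_delay, cap) if cap > min_delay else cap for cap in schedule]


linear = delay_schedule(5, max_delay=15, min_delay=5, fun="linear")
sigmoid = delay_schedule(5, max_delay=15, fun="sigmoid")
jittered = randomize(linear, min_delay=5, rng=random.Random(0))
print(linear, sigmoid, jittered, sep="\n")
```

In this sketch the minimum delay acts as a floor, so early requests in a `linear` or `sigmoid` schedule never fall below it, while the maximum delay caps every value.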