This repository contains several heatmap-based approaches, such as the stacked Hourglass and the stacked Scale Aggregation Topology (SAT), for robust 2D and 3D face alignment. Several popular building blocks are also provided for building the topology of a deep face alignment network: the bottleneck residual block, the inception residual block, the hierarchical, parallel and multi-scale (HPM) residual block, and the channel aggregation block (CAB). All code in this repository is implemented in Python with MXNet.
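To illustrate what "heatmap-based" means in general, the minimal NumPy sketch below renders one Gaussian heatmap per landmark (a common training target) and decodes predictions by taking the argmax of each heatmap channel. The 64x64 heatmap size, the 128-pixel input size, `sigma` and the helper names are illustrative assumptions, not this repository's settings, and the repository's own decoding may add sub-pixel refinement.

```python
import numpy as np


def gaussian_heatmaps(landmarks, size=64, sigma=1.0):
    """Render one Gaussian heatmap per landmark (hypothetical helper).

    landmarks: (N, 2) array of (x, y) coordinates in heatmap pixels.
    Returns an (N, size, size) float32 array with a peak value of 1 at each landmark.
    """
    ys, xs = np.mgrid[0:size, 0:size]  # row (y) and column (x) index grids
    maps = np.empty((len(landmarks), size, size), dtype=np.float32)
    for i, (x, y) in enumerate(landmarks):
        maps[i] = np.exp(-((xs - x) ** 2 + (ys - y) ** 2) / (2.0 * sigma ** 2))
    return maps


def decode_heatmaps(maps):
    """Return the (x, y) location of the maximum of each heatmap as an (N, 2) array."""
    n, h, w = maps.shape
    flat_idx = maps.reshape(n, -1).argmax(axis=1)
    ys, xs = np.unravel_index(flat_idx, (h, w))
    return np.stack([xs, ys], axis=1).astype(np.float32)


def to_image_coords(coords, heatmap_size, image_size):
    """Scale heatmap coordinates back to input-image pixels (sizes are assumptions)."""
    return coords * (image_size / float(heatmap_size))
```

For integer-valued landmarks, `decode_heatmaps(gaussian_heatmaps(pts))` recovers `pts` exactly, because each Gaussian peaks at its own centre; `to_image_coords(..., 64, 128)` then doubles the coordinates for a hypothetical 128-pixel input.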

The 2D face alignment models are evaluated on the IBUG, COFW and 300W test sets using the normalised mean error (NME). The 3D pre-trained models are compared with recent state-of-the-art methods on AFLW2000-3D.

The training/validation datasets and test sets are listed in the table below:

| Data | Size | Download Link | Description |
|---|---|---|---|
| train_testset2d.zip | 490 MB | BaiduCloud or GoogleDrive | 2D training/validation dataset and IBUG, COFW, 300W test sets |
| train_testset3d.zip | 1.54 GB | BaiduCloud or GoogleDrive | 3D training/validation dataset and AFLW2000-3D test set |

The performance of the 2D pre-trained models is shown below. Accuracy is reported as the normalised mean error (NME). To facilitate comparison with other methods on these datasets, the mean error is normalised by the distance between the eye centres. Each model is denoted Topology^StackBlock(d = DownSampling Steps)-BlockType-OtherParameters, where the superscript (written inline in the tables, as in Hourglass2) is the number of stacked blocks and d is the number of downsampling steps.

| Model | Model Size | IBUG | COFW | 300W | Download Link |
|---|---|---|---|---|---|
| Hourglass2(d=4)-Resnet | 26MB | 7.719 | 6.776 | 6.482 | BaiduCloud or GoogleDrive |
| Hourglass2(d=3)-HPM | 38MB | 7.249 | 6.378 | 6.049 | BaiduCloud or GoogleDrive |
| Hourglass2(d=4)-CAB | 46MB | 7.168 | 6.123 | 5.684 | BaiduCloud or GoogleDrive |
| SAT2(d=3)-CAB | 40MB | 7.052 | 5.999 | 5.618 | BaiduCloud or GoogleDrive |
| Hourglass2(d=3)-CAB | 37MB | 6.974 | 5.983 | 5.647 | BaiduCloud or GoogleDrive |
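As a rough illustration of the eye-centre-normalised NME used for the 2D results, here is a minimal NumPy sketch. It assumes the 68-point iBUG landmark markup (eye contours at 0-based indices 36-41 and 42-47) and reports the dataset average as a percentage; neither detail is stated above, and `test_rec_nme.py` may compute the metric differently. The helper names are hypothetical.

```python
import numpy as np

# Assumption: 0-based indices of the two eye contours in the 68-point iBUG markup.
EYE_1 = list(range(36, 42))
EYE_2 = list(range(42, 48))


def nme_eye_centre(pred, gt):
    """NME for one face, normalised by the distance between the two eye centres.

    pred, gt: (68, 2) arrays of (x, y) landmark coordinates.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    eye_centre_1 = gt[EYE_1].mean(axis=0)
    eye_centre_2 = gt[EYE_2].mean(axis=0)
    norm = np.linalg.norm(eye_centre_1 - eye_centre_2)
    point_errors = np.linalg.norm(pred - gt, axis=1)  # per-landmark Euclidean error
    return point_errors.mean() / norm


def mean_nme(preds, gts):
    """Average the per-face NME over a test set, expressed as a percentage (assumption)."""
    return 100.0 * np.mean([nme_eye_centre(p, g) for p, g in zip(preds, gts)])
```

Normalising by a face-dependent distance makes errors comparable across faces of different scales, which is why the NME is used instead of raw pixel error.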

The performance of the 3D pre-trained models is shown below. Accuracy is reported as the normalised mean error (NME), with the mean error normalised by the square root of the ground-truth bounding box size.

| Model | Model Size | AFLW2000-3D | Download Link |
|---|---|---|---|
| SAT2(d=3)-CAB-3D | 40MB | 3.072 | BaiduCloud or GoogleDrive |
| Hourglass2(d=3)-CAB-3D | 37MB | 3.005 | BaiduCloud or GoogleDrive |
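The bounding-box normalisation used for AFLW2000-3D can be sketched in the same way. This version assumes the bounding box is the tight box around the ground-truth landmarks, that "size" means width times height, and that only the x and y coordinates enter the error; all three are assumptions, and `nme_bbox` is a hypothetical helper rather than this repository's code.

```python
import numpy as np


def nme_bbox(pred, gt):
    """NME normalised by sqrt(width * height) of the ground-truth bounding box.

    pred, gt: (N, 2) or (N, 3) arrays. Only x and y are used, and the box is
    assumed to be the tight box around the ground-truth landmarks.
    """
    pred = np.asarray(pred, dtype=np.float64)[:, :2]
    gt = np.asarray(gt, dtype=np.float64)[:, :2]
    width, height = gt.max(axis=0) - gt.min(axis=0)
    norm = np.sqrt(width * height)
    return np.linalg.norm(pred - gt, axis=1).mean() / norm
```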

Note: More pre-trained models will be added soon.

This repository has been tested under the following environment:

- Python 2.7
- Ubuntu 18.04
- MXNet (mxnet-cu90==1.3.0)

- Prepare the environment.
- Clone the repository.
- Type `make` to build the necessary C++ libraries.
- Download the training/validation dataset and unzip it into your project directory.
- Define the loss type, network topology and dataset in `config.py` (created from `sample_config.py`).
- Edit `train.sh` and run `sh train.sh`, or use `python train.py`, to train your models. Some example commands are given below. Our experiments were conducted on a GTX 1080Ti GPU.

(1) Train stacked Scale Aggregation Topology (SAT) networks with the channel aggregation block (CAB).

`CUDA_VISIBLE_DEVICES='0' python train.py --network satnet --prefix ./model/model-sat2d3-cab/model --per-batch-size 16 --lr 1e-4 --lr-epoch-step '20,35,45'`

(2) Train stacked Hourglass models with the hierarchical, parallel and multi-scale (HPM) residual block.

`CUDA_VISIBLE_DEVICES='0' python train.py --network hourglass --prefix ./model/model-hg2d3-hpm/model --per-batch-size 16 --lr 1e-4 --lr-epoch-step '20,35,45'`
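The `--lr-epoch-step '20,35,45'` flag in both commands suggests a step learning-rate schedule. The sketch below shows how such a schedule maps an epoch to a learning rate, assuming the rate is multiplied by 0.1 at each listed epoch and that epochs are counted from 0. The decay factor and the exact behaviour of `train.py` are not documented here, so `lr_at_epoch` is a hypothetical helper for reasoning about the schedule, not the training script's code.

```python
def lr_at_epoch(epoch, base_lr=1e-4, lr_epoch_step="20,35,45", factor=0.1):
    """Piecewise-constant learning rate for a given (0-based) epoch.

    Starts at base_lr and multiplies by `factor` (an assumed value) once for
    every boundary in lr_epoch_step that the epoch has reached.
    """
    steps = [int(s) for s in lr_epoch_step.split(",")]
    num_decays = sum(1 for s in steps if epoch >= s)
    return base_lr * factor ** num_decays


# Print the schedule implied by the example commands (base lr 1e-4).
for epoch in (0, 19, 20, 35, 45):
    print("epoch %d: lr %.0e" % (epoch, lr_at_epoch(epoch)))
```

Under these assumptions, training runs at 1e-4 until epoch 20 and then drops by a factor of ten at epochs 20, 35 and 45.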

- Download the ESSH model from BaiduCloud or GoogleDrive and place it in `./essh-model/`.
- Download the pre-trained model and place it in `./models/`.
- Use `python test.py` to test your models for 2D and 3D face alignment.
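Because the evaluation commands take a `--prefix` and an `--epoch`, the pre-trained models appear to be standard MXNet checkpoints. Assuming that layout, `mx.model.save_checkpoint` and `mx.model.load_checkpoint` use `<prefix>-symbol.json` and `<prefix>-<epoch as four digits>.params`, so a quick check that the downloaded files landed in the right place might look like this. The helper names are hypothetical.

```python
import os


def checkpoint_files(prefix, epoch):
    """Return the symbol and parameter file paths of an MXNet checkpoint."""
    symbol_file = "%s-symbol.json" % prefix
    params_file = "%s-%04d.params" % (prefix, epoch)
    return symbol_file, params_file


def missing_checkpoint_files(prefix, epoch):
    """List the checkpoint files for prefix/epoch that are not present on disk."""
    return [path for path in checkpoint_files(prefix, epoch) if not os.path.exists(path)]


if __name__ == "__main__":
    # Example: the Hourglass2(d=3)-CAB model used with --epoch 0.
    missing = missing_checkpoint_files("./models/model-hg2d3-cab/model", 0)
    if missing:
        print("Missing checkpoint files: %s" % ", ".join(missing))
```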

To evaluate the pre-trained models on the IBUG, COFW, 300W and AFLW2000-3D test sets, use `python test_rec_nme.py` to obtain the normalised mean error (NME) on each test set. Some examples are given below.

- Evaluate model Hourglass2(d=3)-CAB with 2D landmarks on the IBUG test set.

`python test_rec_nme.py --dataset ibug --prefix ./models/model-hg2d3-cab/model --epoch 0 --gpu 0 --landmark-type 2d`

- Evaluate model SAT2(d=3)-CAB with 2D landmarks on the COFW test set.

`python test_rec_nme.py --dataset cofw_testset --prefix ./models/model-sat2d3-cab/model --epoch 0 --gpu 0 --landmark-type 2d`

- Evaluate model Hourglass2(d=3)-HPM with 2D landmarks on the 300W test set.

`python test_rec_nme.py --dataset 300W --prefix ./models/model-hg2d3-hpm/model --epoch 0 --gpu 0 --landmark-type 2d`

- Evaluate model Hourglass2(d=3)-CAB-3D with 3D landmarks on the AFLW2000-3D test set.

`python test_rec_nme.py --dataset AFLW2000-3D --prefix ./models/model-hg2d3-cab-3d/model --epoch 0 --gpu 0 --landmark-type 3d`
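The four evaluation commands above can also be scripted. This minimal sketch only reassembles the same flags shown in the examples; it prints the commands by default and runs them one after another when invoked with `--run`. It assumes it is run from the repository root, and the script layout and helper names are hypothetical.

```python
import subprocess
import sys

# (dataset, prefix, landmark type) triples copied from the example commands.
EVALUATIONS = [
    ("ibug", "./models/model-hg2d3-cab/model", "2d"),
    ("cofw_testset", "./models/model-sat2d3-cab/model", "2d"),
    ("300W", "./models/model-hg2d3-hpm/model", "2d"),
    ("AFLW2000-3D", "./models/model-hg2d3-cab-3d/model", "3d"),
]


def build_command(dataset, prefix, landmark_type, epoch=0, gpu=0):
    """Assemble one test_rec_nme.py invocation as an argument list."""
    return [sys.executable, "test_rec_nme.py",
            "--dataset", dataset,
            "--prefix", prefix,
            "--epoch", str(epoch),
            "--gpu", str(gpu),
            "--landmark-type", landmark_type]


def run_all(dry_run=True):
    """Print (dry_run=True) or execute each evaluation in turn."""
    for dataset, prefix, landmark_type in EVALUATIONS:
        cmd = build_command(dataset, prefix, landmark_type)
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.check_call(cmd)  # raises CalledProcessError if a run fails


if __name__ == "__main__":
    run_all(dry_run="--run" not in sys.argv)
```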

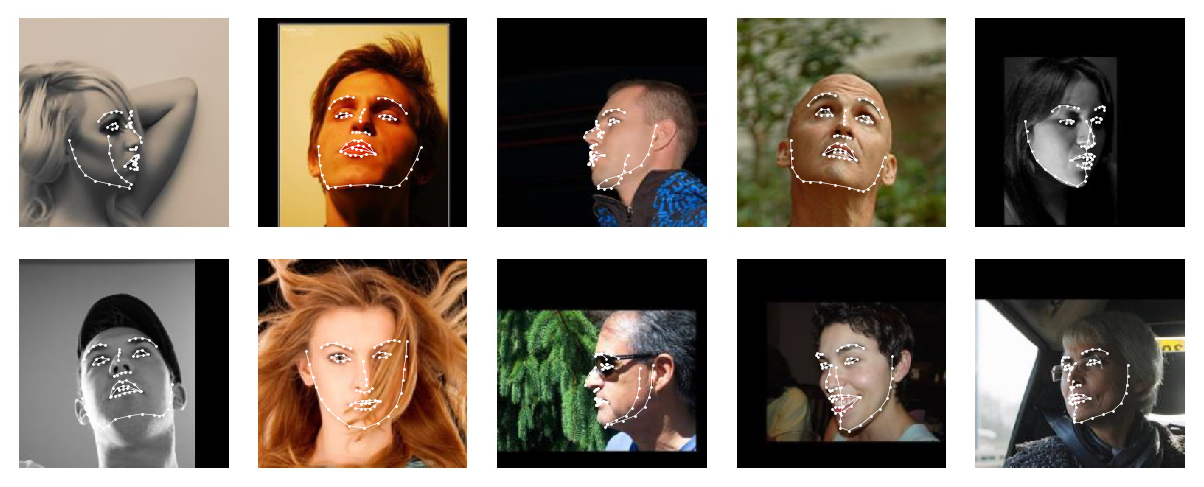

Results of 2D face alignment (predicted by model Hourglass2(d=3)-CAB) are shown below.

Results on the AFLW2000-3D dataset (predicted by model Hourglass2(d=3)-CAB-3D) are shown below.

MIT LICENSE

@article{guo2018stacked,

title={Stacked Dense U-Nets with Dual Transformers for Robust Face Alignment},

author={Guo, Jia and Deng, Jiankang and Xue, Niannan and Zafeiriou, Stefanos},

journal={arXiv preprint arXiv:1812.01936},

year={2018}

}

@inproceedings{Deng2018Cascade,

title={Cascade Multi-View Hourglass Model for Robust 3D Face Alignment},

author={Deng, Jiankang and Zhou, Yuxiang and Cheng, Shiyang and Zafeiriou, Stefanos},

booktitle={2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018)},

pages={399-403},

year={2018},

}

@article{Bulat2018Hierarchical,

title={Hierarchical binary CNNs for landmark localization with limited resources},

author={Bulat, Adrian and Tzimiropoulos, Yorgos},

journal={IEEE Transactions on Pattern Analysis & Machine Intelligence},

year={2018},

}

@inproceedings{Jing2017Stacked,

title={Stacked Hourglass Network for Robust Facial Landmark Localisation},

author={Jing, Yang and Liu, Qingshan and Zhang, Kaihua},

booktitle={IEEE Conference on Computer Vision & Pattern Recognition Workshops},

year={2017},

}

The code is adapted from an initial fork of the insightface repository.