This is a TensorFlow implementation of "SuperPoint: Self-Supervised Interest Point Detection and Description" by Daniel DeTone, Tomasz Malisiewicz, and Andrew Rabinovich (arXiv, 2018).

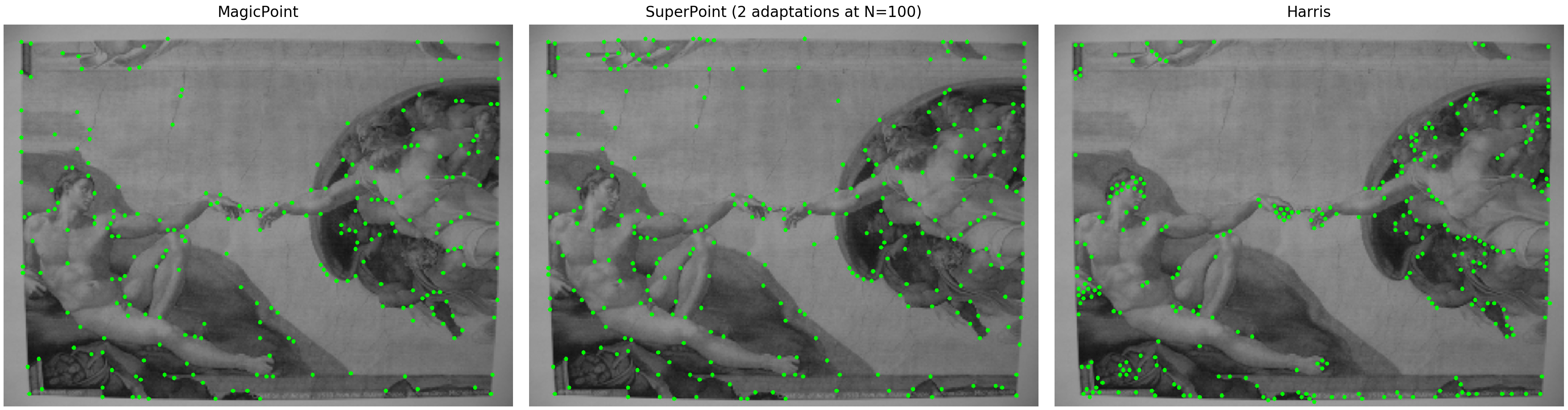

Repeatability on HPatches, computed with 300 points detected in common between pairs of images and with an NMS of 4:

| | Illumination changes | Viewpoint changes |
|---|---|---|
| SuperPoint (our implementation) | 0.661 | 0.409 |
| SuperPoint (pretrained model of MagicLeap) | 0.641 | 0.379 |
| FAST | 0.577 | 0.415 |
| Harris | 0.630 | 0.474 |
| Shi | 0.583 | 0.407 |
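For readers unfamiliar with the metric, here is a minimal sketch of a repeatability score, assuming the symmetric definition given in the SuperPoint paper: a point counts as repeated when a point from the other image lies within a distance `eps` once both sets are expressed in the same image frame. The function names, the default `eps=3.0` and the sample coordinates are illustrative assumptions, not this repository's code.

```python
import math


def count_repeated(points_a, points_b, eps):
    """Count the points of points_a that lie within eps of some point of points_b."""
    return sum(
        1
        for (xa, ya) in points_a
        if any(math.hypot(xa - xb, ya - yb) <= eps for (xb, yb) in points_b)
    )


def repeatability(points_1, points_2_warped, eps=3.0):
    """Symmetric repeatability of two point sets given in the same image frame.

    points_2_warped is assumed to be already warped into the frame of image 1.
    """
    n1, n2 = len(points_1), len(points_2_warped)
    if n1 + n2 == 0:
        return 0.0
    hits = count_repeated(points_1, points_2_warped, eps)
    hits += count_repeated(points_2_warped, points_1, eps)
    return hits / (n1 + n2)


if __name__ == "__main__":
    # Hypothetical detections: two of the three points in each set have a match.
    pts_1 = [(10, 10), (50, 50), (100, 20)]
    pts_2 = [(11, 10), (52, 51), (200, 200)]
    print(repeatability(pts_1, pts_2))
```

The brute-force nearest-point search is quadratic in the number of points, which is acceptable for a few hundred detections per image.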

Homography estimation on HPatches, computed with a maximum of 1000 points detected in common between pairs of images, a correctness threshold of 3 and an NMS of 8:

| | Illumination changes | Viewpoint changes |
|---|---|---|
| SuperPoint (our implementation) | 0.944 | 0.244 |
| SuperPoint (pretrained model of MagicLeap) | 0.881 | 0.251 |
| SIFT | 0.811 | 0.258 |
| ORB | 0.547 | 0.129 |

Homography estimation on HPatches, computed with a maximum of 1000 points detected in common between pairs of images, with all kinds of changes (viewpoint and illumination) and with an NMS of 8:

| Correctness threshold | e = 1 | e = 3 | e = 5 |
|---|---|---|---|
| SuperPoint (our implementation) | 0.334 | 0.588 | 0.631 |
| SIFT | 0.302 | 0.517 | 0.556 |
| ORB | 0.121 | 0.303 | 0.378 |
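The correctness threshold e can be read as a bound on the mean corner error described in the SuperPoint paper: the four image corners are warped with both the estimated and the ground-truth homography, and the estimate counts as correct when the average distance between the two sets of warped corners is at most e. The sketch below illustrates this under that assumption; the function names, the 640x480 image size and the example matrices are hypothetical and not taken from this repository.

```python
import math


def warp_point(H, x, y):
    """Apply a 3x3 homography (given as nested lists) to a 2D point."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


def mean_corner_error(H_est, H_true, width, height):
    """Average distance between the image corners warped by H_est and by H_true."""
    corners = [(0, 0), (width - 1, 0), (width - 1, height - 1), (0, height - 1)]
    total = 0.0
    for x, y in corners:
        ex, ey = warp_point(H_est, x, y)
        tx, ty = warp_point(H_true, x, y)
        total += math.hypot(ex - tx, ey - ty)
    return total / len(corners)


def is_correct(H_est, H_true, width, height, e):
    """An estimate is counted as correct when its mean corner error is at most e."""
    return mean_corner_error(H_est, H_true, width, height) <= e


if __name__ == "__main__":
    # Hypothetical pair: the estimate is off by a 2-pixel horizontal shift.
    H_true = [[1.0, 0.0, 10.0], [0.0, 1.0, 5.0], [0.0, 0.0, 1.0]]
    H_est = [[1.0, 0.0, 12.0], [0.0, 1.0, 5.0], [0.0, 0.0, 1.0]]
    for e in (1, 3, 5):
        print(e, is_correct(H_est, H_true, 640, 480, e))
```

Averaging `is_correct` over all image pairs gives one score per threshold, which is how a row of the table above can be interpreted.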

make install  # install the Python requirements and set up the paths

Python 3.6.1 is required. You will be asked to provide a path to an experiment directory (containing the training and prediction outputs, referred to as $EXPER_DIR) and a dataset directory (referred to as $DATA_DIR). Create them wherever you wish and make sure to provide their absolute paths.

MS-COCO 2014 and HPatches should be downloaded into $DATA_DIR. The Synthetic Shapes dataset will also be generated there. The folder structure should look like:

$DATA_DIR
|-- COCO
|   |-- train2014
|   |   |-- file1.jpg
|   |   `-- ...
|   `-- val2014
|       |-- file1.jpg
|       `-- ...
|-- HPatches
|   |-- i_ajuntament
|   `-- ...
`-- synthetic_shapes  # will be automatically created
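Before launching a long training run, it can help to check that the datasets sit where the layout above expects them. The following is a small sketch that only looks for the `COCO/train2014`, `COCO/val2014` and `HPatches` folders; reading the dataset path from a `DATA_DIR` environment variable is an assumption made for this example, and the helper name is hypothetical.

```python
import os
from pathlib import Path

# Sub-folders that the layout above expects under $DATA_DIR before training.
# synthetic_shapes is left out because it is created automatically.
EXPECTED_SUBDIRS = ["COCO/train2014", "COCO/val2014", "HPatches"]


def missing_subdirs(data_dir):
    """Return the expected sub-folders that do not exist under data_dir."""
    root = Path(data_dir)
    return [d for d in EXPECTED_SUBDIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    # Assumption: the dataset path is available as an environment variable.
    data_dir = os.environ.get("DATA_DIR")
    if data_dir is None:
        print("DATA_DIR is not set in the environment")
    else:
        missing = missing_subdirs(data_dir)
        print("missing:", missing if missing else "none")
```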

All commands should be executed within the superpoint/ subfolder. When training a model or exporting its predictions, you will often have to change the relevant configuration file in superpoint/configs/. Both multi-GPU training and export are supported.

python experiment.py train configs/magic-point_shapes.yaml magic-point_synth

where magic-point_synth is the experiment name, which may be changed to anything. The training can be interrupted at any time using Ctrl+C, and the weights will be saved in $EXPER_DIR/magic-point_synth/. The TensorBoard summaries are also written there. When training for the first time, the Synthetic Shapes dataset will be generated.

python export_detections.py configs/magic-point_coco_export.yaml magic-point_synth --pred_only --batch_size=5 --export_name=magic-point_coco-export1

This will save the pseudo-ground-truth interest point labels to $EXPER_DIR/outputs/magic-point_coco-export1/. You can enable or disable Homographic Adaptation in the configuration file.

python experiment.py train configs/magic-point_coco_train.yaml magic-point_coco

You will need to indicate the path to the interest point labels in magic-point_coco_train.yaml by setting the entry data/labels, for example to outputs/magic-point_coco-export1. You can repeat the export step (step 2) and this training step (step 3) several times.
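When repeating the export and training steps, it is easy to lose track of which experiment feeds which export. The sketch below only prints the commands for two rounds, reusing the scripts, configuration files and flags shown above; the per-round experiment names (`magic-point_coco-round1`, ...) and the helper itself are hypothetical, and the data/labels entry still has to be edited by hand between the export and the training of each round.

```python
def round_commands(round_idx, prev_experiment):
    """Build the export and training commands for one round of homographic adaptation."""
    export_name = f"magic-point_coco-export{round_idx}"
    experiment = f"magic-point_coco-round{round_idx}"  # hypothetical naming scheme
    export_cmd = [
        "python", "export_detections.py",
        "configs/magic-point_coco_export.yaml", prev_experiment,
        "--pred_only", "--batch_size=5", f"--export_name={export_name}",
    ]
    train_cmd = [
        "python", "experiment.py", "train",
        "configs/magic-point_coco_train.yaml", experiment,
    ]
    return export_name, experiment, export_cmd, train_cmd


if __name__ == "__main__":
    experiment = "magic-point_synth"  # model trained on Synthetic Shapes
    for i in (1, 2):
        export_name, next_experiment, export_cmd, train_cmd = round_commands(i, experiment)
        print(" ".join(export_cmd))
        print(f"# set data/labels to outputs/{export_name} in magic-point_coco_train.yaml")
        print(" ".join(train_cmd))
        experiment = next_experiment  # the next round exports with the new model
```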

python export_detections_repeatability.py configs/magic-point_repeatability.yaml magic-point_coco --export_name=magic-point_hpatches-repeatability-v

You will need to decide whether you want to evaluate for viewpoint or illumination by setting the entry data/alteration in the configuration file. The predictions of the image pairs will be saved in $EXPER_DIR/outputs/magic-point_hpatches-repeatability-v/. To proceed to the evaluation, head over to notebooks/detector_repeatability_coco.ipynb. You can also evaluate the repeatability of the classical detectors using the configuration file classical-detectors_repeatability.yaml.

It is also possible to evaluate the repeatability on a validation split of COCO. You will first need to generate warped image pairs using generate_coco_patches.py.

Once you have trained MagicPoint with several rounds of homographic adaptation (one or two should be enough), you can export the detections on MS-COCO again, as in step 2) (the export_detections.py command), and use these detections to train SuperPoint by setting the entry data/labels:

python experiment.py train configs/superpoint_coco.yaml superpoint_coco

python export_descriptors.py configs/superpoint_hpatches.yaml superpoint_coco --export_name=superpoint_hpatches-v

Again, you will need to decide whether you want to evaluate for viewpoint or illumination by setting the entry data/alteration in the configuration file. The predictions of the image pairs will be saved in $EXPER_DIR/outputs/superpoint_hpatches-v/. To proceed to the evaluation, head over to notebooks/descriptors_evaluation_on_hpatches.ipynb. You can also evaluate the classical descriptors using the configuration file classical-descriptors.yaml.

This implementation was developed by Rémi Pautrat and Paul-Edouard Sarlin. Please contact Rémi for any enquiry.