3D surface reconstruction and simulation based on 3D neural rendering.

This repository primarily addresses two topics:

- Efficient and detailed reconstruction of implicit surfaces across different scenarios.
  - Including object-centric / street-view, indoor / outdoor, large-scale (WIP) and multi-object (WIP) datasets.
  - Highlighted implementations include neus_in_10_minutes, neus_in_10_minutes#indoor and streetsurf.
- Multi-object implicit surface reconstruction, manipulation, and multi-modal sensor simulation.
  - With particular focus on autonomous driving datasets.

TOC

- Implicit surface is all you need !

- Ecosystem

- Highlights

- Usage

- Roadmap & TODOs

- Acknowledgements & citations

Single-object / multi-object / indoor / outdoor / large-scale surface reconstruction and multi-modal sensor simulation

instant_neus_gundam_nvs_camera_1l.mp4

instant_neus_stump_nvs_ds.1.0_camera_1l.mp4

instant_neus_bicycle_nvs_ds.1.0_camera_1l.mp4

| 🚀 Object surface reconstruction in minutes !<br/>Input: posed images without mask<br/>Get started: neus_in_10_minutes<br/>Credits: Jianfei Guo | 🚀 Outdoor surface reconstruction in minutes !<br/>Input: posed images without mask<br/>Get started: neus_in_10_minutes<br/>Credits: Jianfei Guo |
|---|---|
| 🚀 Indoor surface reconstruction in minutes !<br/>Input: posed images, monocular cues<br/>Get started: neus_in_10_minutes#indoor<br/>Credits: Jianfei Guo | 🚗 Categorical surface reconstruction in the wild !<br/>Input: multi-instance multi-view categorical images<br/>[To be released 2023.09]<br/>Credits: Qiusheng Huang, Jianfei Guo, Xinyang Li |
| 🛣️ Street-view surface reconstruction in 2 hours !<br/>Input: posed images, monocular cues (and optional LiDAR)<br/>Get started: streetsurf<br/>Credits: Jianfei Guo, Nianchen Deng<br/>teaser_seg100613_0.5.mp4 | 🛣️ Street-view multi-modal sensor simulation !<br/>Using the reconstructed asset bank<br/>Get started: streetsurf#lidarsim<br/>Credits: Jianfei Guo, Xinyu Cai, Nianchen Deng<br/>teaser_seg405841_lidar_0.5.mp4 |
| 🛣️ Street-view multi-object surface reconstruction in hours !<br/>Input: posed images, LiDAR, 3D tracklets<br/>[To be released 2023.09]<br/>Credits: Jianfei Guo, Nianchen Deng<br/>teaser_seg767010_mix_4x3_0.5.mp4 | 🛣️ Street-view scenario editing !<br/>Using the reconstructed asset bank<br/>[To be released 2023.09]<br/>Credits: Jianfei Guo, Nianchen Deng<br/>teaser_seg767010_manipulate.mp4 |
| 🏙️ Large-scale multi-view surface reconstruction ... (WIP) | 🛣️ Street-view light editing ... (WIP) |

```mermaid
%%{init: {'theme': 'neutral', "flowchart" : { "curve" : "basis" } } }%%
graph LR;
    0("fa:fa-wrench <b>Basic models & operators</b><br/>(e.g. LoTD & pack_ops)<br/><a href='https://github.com/pjlab-ADG/nr3d_lib' target='_blank'>nr3d_lib</a>")
    A("fa:fa-road <b>Single scene</b><br/>[paper] StreetSurf<br/>[repo] <a href='https://github.com/pjlab-ADG/neuralsim' target='_blank'>neuralsim</a>/code_single")
    B("fa:fa-car <b>Categorical objects</b><br/>[paper] CatRecon<br/>[repo] <a href='https://github.com/pjlab-ADG/neuralgen' target='_blank'>neuralgen</a>")
    C("fa:fa-globe <b>Large scale scene</b><br/>[repo] neuralsim/code_large<br/>[release date] Sept. 2023")
    D("fa:fa-sitemap <b>Multi-object scene</b><br/>[repo] neuralsim/code_multi<br/>[release date] Sept. 2023")
    B --> D
    A --> D
    A --> C
    C --> D
```

Pull requests and collaborations are warmly welcomed 🤗! Please follow our code style if you want to make any contribution.

Feel free to open an issue or contact Jianfei Guo (guojianfei@pjlab.org.cn) or Nianchen Deng (dengnianchen@pjlab.org.cn) if you have any questions or proposals.

| Methods | 🚀 Get started | Official / Un-official | Notes, major difference from paper, etc. |
|---|---|---|---|
| StreetSurf | readme | Official | - LiDAR loss improved |
| NeuS in minutes | readme | Un-official | - support for object-centric datasets as well as indoor datasets<br/>- fast and stable convergence without needing masks<br/>- support for NGP / LoTD or MLPs as foreground & background representations<br/>- large pixel batch size (4096) & pixel error maps |
| NGP with LiDAR | readme | Un-official | - using Urban-NeRF's LiDAR loss |
| Multi-object reconstruction with unisim's CNN decoder | [WIP] | Un-official | - volumetric ray buffer merging, instead of spatial merging of feature grids<br/>- our own version of the foreground hypernetworks, with StreetSurf as the background model (unisim has not released these details yet) |

Code: app/renderers/general_volume_renderer.py

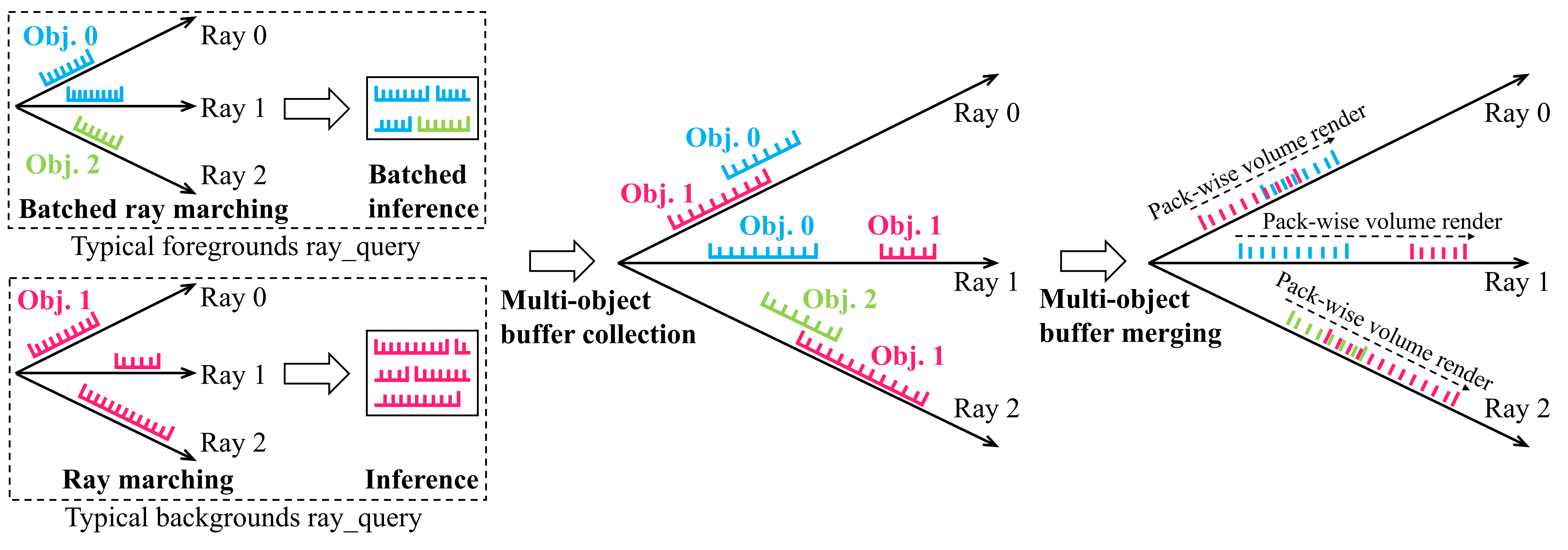

We provide a universal implementation of multi-object volume rendering that supports any volume-rendering-based method, as long as its model can be queried with rays and outputs opacity_alpha, depth samples t, and optional fields such as rgb, nablas, features, etc.
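To make the multi-object idea concrete, here is a minimal sketch assuming each object model has already been queried along the same ray and returned its own list of `(t, alpha, rgb)` samples. The per-object buffers are merged by depth and then alpha-composited front to back. The function names and data layout are hypothetical illustrations, not the API of `general_volume_renderer.py` or `pack_ops`.

```python
from typing import Dict, List, Tuple

# One sample along a ray: (depth t, opacity alpha in [0, 1], rgb color).
Sample = Tuple[float, float, Tuple[float, float, float]]


def merge_ray_buffers(buffers: Dict[str, List[Sample]]) -> List[Tuple[str, Sample]]:
    """Merge the per-object sample buffers of one ray into a single depth-sorted buffer."""
    merged = [(name, s) for name, samples in buffers.items() for s in samples]
    merged.sort(key=lambda item: item[1][0])  # sort by depth t
    return merged


def composite(merged: List[Tuple[str, Sample]]):
    """Front-to-back compositing with w_i = alpha_i * prod_{j<i} (1 - alpha_j)."""
    transmittance = 1.0
    rgb = [0.0, 0.0, 0.0]
    depth = 0.0  # expected depth, not normalized by the total weight
    per_object_weight: Dict[str, float] = {}
    for name, (t, alpha, color) in merged:
        w = transmittance * alpha
        for c in range(3):
            rgb[c] += w * color[c]
        depth += w * t
        # Accumulated weight per object, e.g. usable as an instance-occupancy mask.
        per_object_weight[name] = per_object_weight.get(name, 0.0) + w
        transmittance *= 1.0 - alpha
    return tuple(rgb), depth, per_object_weight


# Example: a half-transparent car sample in front of an opaque background sample.
buffers = {
    "car_0": [(5.0, 0.5, (1.0, 0.0, 0.0))],
    "background": [(2.0, 0.0, (0.0, 0.0, 1.0)), (10.0, 1.0, (0.0, 1.0, 0.0))],
}
rgb, depth, weights = composite(merge_ray_buffers(buffers))
```

Merging samples in ray space (rather than merging feature grids in 3D space) is what lets heterogeneous models share one compositing step: only `t` and `alpha` are needed to interleave them correctly.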

This renderer is efficient mainly due to:

- Frustum culling

- Occupancy-grid-based single / batched ray marching and pack merging implemented with pack_ops

- (optional) Batched / indexed inference of LoTD
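The saving from occupancy-grid-based ray marching can be illustrated with a simplified sketch, assuming a dense binary grid over the unit cube `[0, 1)^3` stored as a set of occupied cell indices: samples that land in empty cells are discarded before any network is queried. The actual implementation relies on CUDA operators in `nr3d_lib` / `pack_ops`; the pure-Python `march_ray` below is a hypothetical stand-in for illustration only.

```python
import math
from typing import List, Set, Tuple

Vec3 = Tuple[float, float, float]


def march_ray(origin: Vec3, direction: Vec3, near: float, far: float, step: float,
              occupied: Set[Tuple[int, int, int]], resolution: int) -> List[float]:
    """Return depths t of uniformly spaced samples that fall into occupied grid cells."""
    kept = []
    n_steps = int(math.floor((far - near) / step))
    for i in range(n_steps):
        t = near + (i + 0.5) * step  # sample at the midpoint of each step
        p = [origin[k] + t * direction[k] for k in range(3)]
        if not all(0.0 <= c < 1.0 for c in p):
            continue  # outside the grid's bounding box
        cell = tuple(int(c * resolution) for c in p)
        if cell in occupied:
            kept.append(t)  # only these samples would be sent to the model
    return kept


# Example: a 4^3 grid in which only the cell (2, 0, 0) is occupied.
samples = march_ray(origin=(0.0, 0.1, 0.1), direction=(1.0, 0.0, 0.0),
                    near=0.0, far=1.0, step=0.125,
                    occupied={(2, 0, 0)}, resolution=4)
```

Here only 2 of the 8 candidate samples survive, so the model is evaluated a quarter as often. With several objects, each object's kept samples form one packed buffer, which is what the pack-merging step then interleaves by depth.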

The figure below depicts the idea of the whole rendering process.

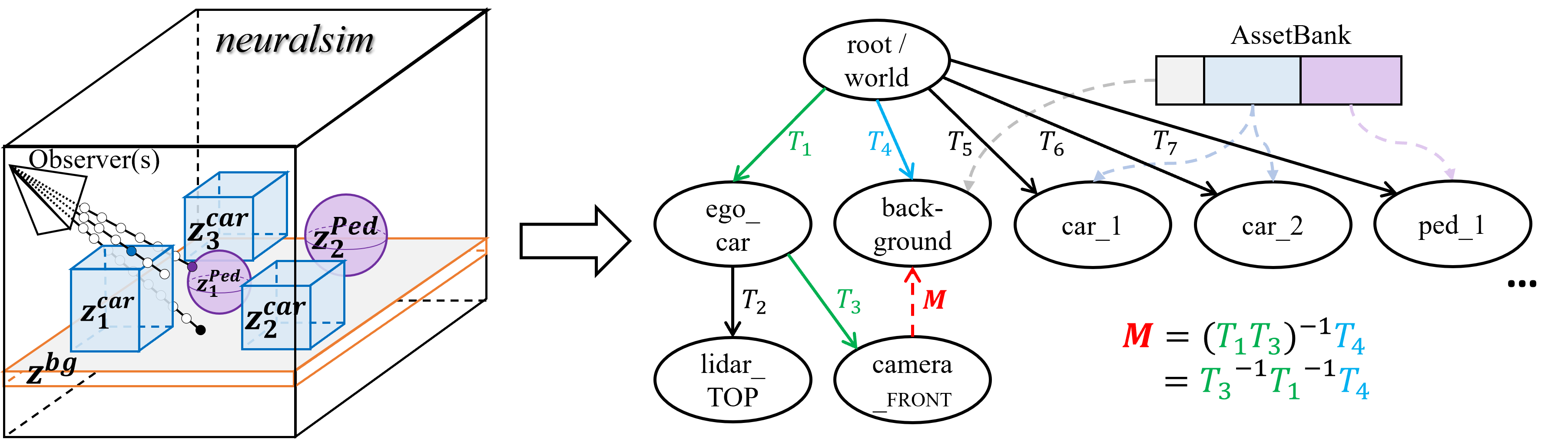

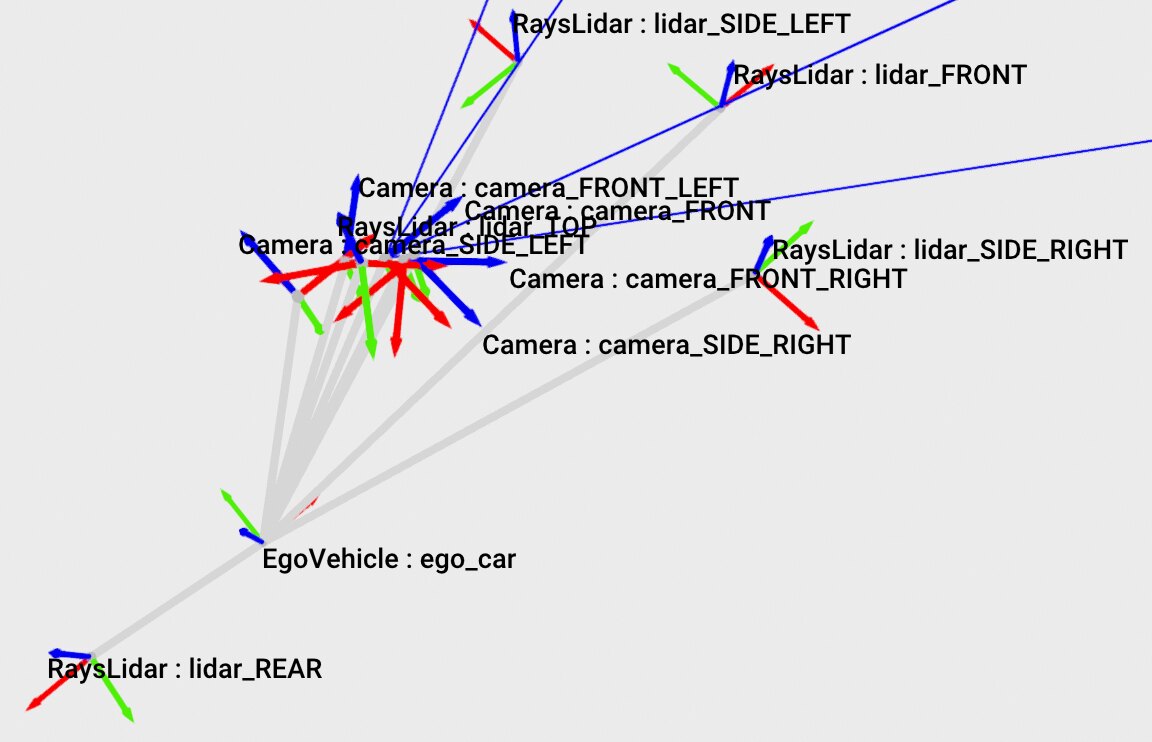

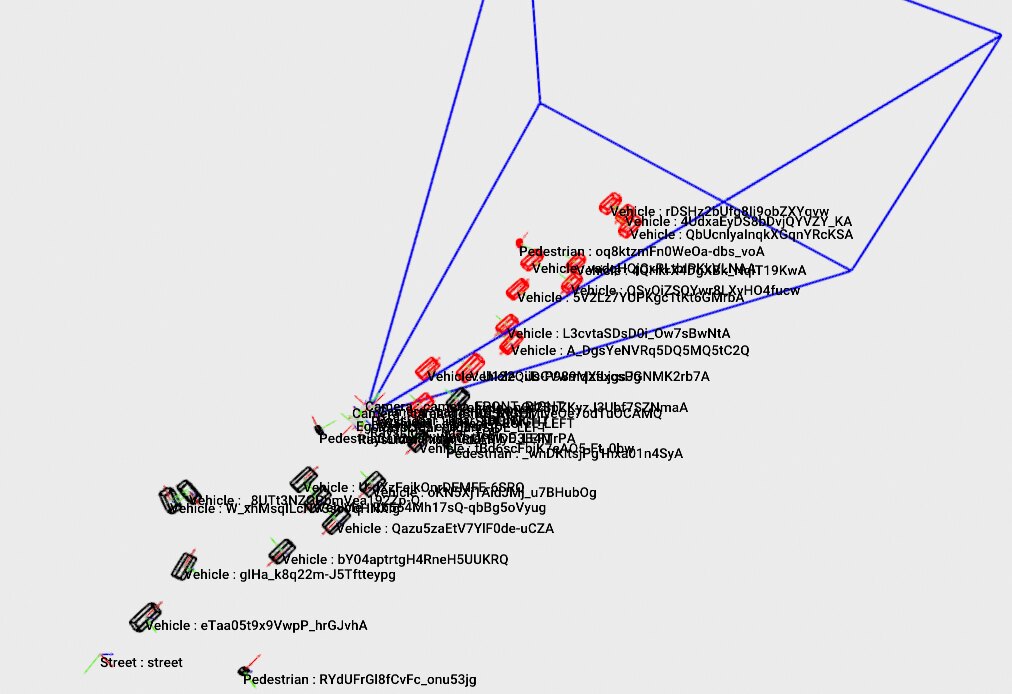

Code: app/resources/scenes.py app/resources/nodes.py

To streamline the organization of assets and transformations, we adopt the concept of generic scene graphs used in modern graphics engines like magnum.

Any entity that possesses a pose or position is considered a node. Certain nodes are equipped with special functionalities, such as camera operations or drawable models (i.e. renderable assets in AssetBank).
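A minimal sketch of the scene-graph idea, assuming each node stores a local 4x4 homogeneous transform relative to its parent, so that its world transform is the product of its ancestors' transforms. The `Node` class and helpers below are hypothetical and much simpler than the classes in `app/resources/nodes.py`; the example uses only translations, but `matmul` works for general rigid transforms.

```python
from typing import List, Optional

Mat4 = List[List[float]]


def matmul(a: Mat4, b: Mat4) -> Mat4:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(x: float, y: float, z: float) -> Mat4:
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


class Node:
    """Any entity with a pose; children inherit their parent's transform."""

    def __init__(self, name: str, local: Mat4, parent: Optional["Node"] = None):
        self.name = name
        self.local = local  # transform from this node's frame to its parent's frame
        self.parent = parent
        self.children: List["Node"] = []
        if parent is not None:
            parent.children.append(self)

    def world_transform(self) -> Mat4:
        """Compose transforms from the root down to this node."""
        if self.parent is None:
            return self.local
        return matmul(self.parent.world_transform(), self.local)


# Example: a camera mounted on the ego car, which is itself placed in the world.
world = Node("world", translation(0.0, 0.0, 0.0))
ego_car = Node("ego_car", translation(10.0, 0.0, 0.0), parent=world)
camera = Node("camera_front", translation(1.5, 0.0, 1.2), parent=ego_car)
cam_position = [row[3] for row in camera.world_transform()[:3]]
```

Moving `ego_car` automatically moves every sensor attached to it, which is the main reason to organize poses as a graph rather than storing a world pose per entity.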

Figures: real-data scene graph; real-data frustum culling.

Code: code_multi/tools/manipulate.py (WIP)

Because each object is represented by its own network (or, for categorical or shared models, by its own latent code or embedding), reconstructed assets can be explicitly added, removed, or modified in a scene.

We offer a toolkit for performing such scene manipulations. Some of the intriguing edits are showcased below.

| 💃 Let them dance ! | 🔀 Multi-verse | 🎨 Change their style ! |
|---|---|---|
| teaser_seg767010_manipulate.mp4 | teaser_seg767010_multiverse_1.mp4 | teaser_seg767010_style_4x3_2.mp4<br/>Credits to Qiusheng Huang and Xinyang Li. |

Please note that this toolkit is still at an early stage of development and only basic edits have been released so far. Stay tuned for updates; contributions are always welcome :)

Code: app/resources/observers/lidars.py

Get started:

Thanks to the work of Xinyu Cai's team, we now support the simulation of various real-world LiDAR models.

The volume rendering is guided by our reconstructed implicit-surface scene geometry, which yields accurate depths. More details are given in Section 5.1 of our StreetSurf paper.
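For intuition, the sketch below renders a depth along one LiDAR ray from SDF samples, using the discrete opacity from the NeuS paper, alpha_i = max((Φ_s(f_i) − Φ_s(f_{i+1})) / Φ_s(f_i), 0) with Φ_s(x) = sigmoid(s·x), followed by the weight-normalized expected depth. It assumes the SDF is available as a function of ray depth and represents each interval by its midpoint; it is a simplified illustration, not StreetSurf's actual LiDAR rendering code.

```python
import math
from typing import Callable, List


def sigmoid(x: float) -> float:
    """Numerically stable logistic sigmoid."""
    if x >= 0.0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def render_ray_depth(sdf: Callable[[float], float], ts: List[float], s: float) -> float:
    """Normalized expected depth along one ray, given the SDF as a function of depth t.

    NeuS-style discrete opacity for the interval [t_i, t_{i+1}]:
        alpha_i = max((Phi_s(f_i) - Phi_s(f_{i+1})) / Phi_s(f_i), 0)
    """
    cdf = [sigmoid(s * sdf(t)) for t in ts]  # Phi_s(f_i)
    transmittance = 1.0
    weighted_depth = 0.0
    weight_sum = 0.0
    for i in range(len(ts) - 1):
        alpha = max((cdf[i] - cdf[i + 1]) / max(cdf[i], 1e-12), 0.0)
        w = transmittance * alpha
        mid = 0.5 * (ts[i] + ts[i + 1])  # represent the interval by its midpoint
        weighted_depth += w * mid
        weight_sum += w
        transmittance *= 1.0 - alpha
    # No surface hit along the ray: report "no return".
    return weighted_depth / weight_sum if weight_sum > 0.0 else float("inf")


# Example: a planar surface 5 m in front of the sensor, i.e. f(t) = 5 - t along the ray.
ts = [0.5 * i for i in range(21)]  # samples from 0 m to 10 m
depth = render_ray_depth(lambda t: 5.0 - t, ts, s=64.0)
```

Because the opacity is derived from the SDF, the rendering weights concentrate around the zero-crossing, so the rendered depth lands on the surface (here about 5.0 m) rather than being smeared across free space.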

Code: app/resources/observers/cameras.py

We now support pinhole cameras, standard OpenCV camera models with distortion, and an experimental fisheye camera model.
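As a reference for what the OpenCV camera model computes, here is a sketch that projects a camera-space point to pixels with the standard radial-tangential distortion (coefficients `k1, k2, p1, p2, k3` in OpenCV's order). It restates OpenCV's documented model in plain Python and is not the code in `cameras.py`; the function name `project_opencv` is hypothetical, and the fisheye model is not covered.

```python
from typing import Tuple


def project_opencv(point: Tuple[float, float, float],
                   fx: float, fy: float, cx: float, cy: float,
                   dist: Tuple[float, float, float, float, float] = (0.0, 0.0, 0.0, 0.0, 0.0)
                   ) -> Tuple[float, float]:
    """Project a camera-space point (z pointing forward, z > 0) to pixel coordinates."""
    k1, k2, p1, p2, k3 = dist
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point is behind the camera")
    x, y = X / Z, Y / Z  # normalized image coordinates (pinhole model)
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return fx * x_d + cx, fy * y_d + cy


# Without distortion coefficients this reduces to the plain pinhole model.
u, v = project_opencv((1.0, 0.5, 2.0), fx=500.0, fy=500.0, cx=320.0, cy=240.0)
```

With all coefficients at zero the pinhole camera is a special case of the OpenCV model, which is why both can share one projection routine.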

First, clone with submodules:

```shell
git clone https://github.com/pjlab-ADG/neuralsim --recurse-submodules -j8 ...
```

Then, cd into nr3d_lib and refer to nr3d_lib/README.md for the following steps.

- Object-centric scenarios (indoor / outdoor, with / without mask)

- Street-view or autonomous driving scenarios

Please refer to code_single/README.md

(WIP)

(WIP)

- Unofficial implementation of unisim
- Release our methods on multi-object reconstruction for autonomous driving
- Release our methods on large-scale representation and NeuS
- Factorization of ambient light and object textures
- Dataloaders for more autonomous driving datasets (KITTI, nuScenes, Waymo v2.0, ZOD, PandaSet)

- nr3d_lib: contains most of our basic modules and operators
- LiDARSimLib: LiDAR models
- StreetSurf: our recent paper studying street-view implicit surface reconstruction

```bibtex
@article{guo2023streetsurf,
  title = {StreetSurf: Extending Multi-view Implicit Surface Reconstruction to Street Views},
  author = {Guo, Jianfei and Deng, Nianchen and Li, Xinyang and Bai, Yeqi and Shi, Botian and Wang, Chiyu and Ding, Chenjing and Wang, Dongliang and Li, Yikang},
  journal = {arXiv preprint arXiv:2306.04988},
  year = {2023}
}
```

- NeuS: most of our methods are derived from NeuS

```bibtex
@inproceedings{wang2021neus,
  title = {NeuS: Learning Neural Implicit Surfaces by Volume Rendering for Multi-view Reconstruction},
  author = {Wang, Peng and Liu, Lingjie and Liu, Yuan and Theobalt, Christian and Komura, Taku and Wang, Wenping},
  booktitle = {Proc. Advances in Neural Information Processing Systems (NeurIPS)},
  volume = {34},
  pages = {27171--27183},
  year = {2021}
}
```