Wenhao Wu1,2, Huanjin Yao2,3, Mengxi Zhang2,4, Yuxin Song2, Wanli Ouyang5, Jingdong Wang2

1The University of Sydney, 2Baidu, 3Tsinghua University, 4Tianjin University, 5The Chinese University of Hong Kong

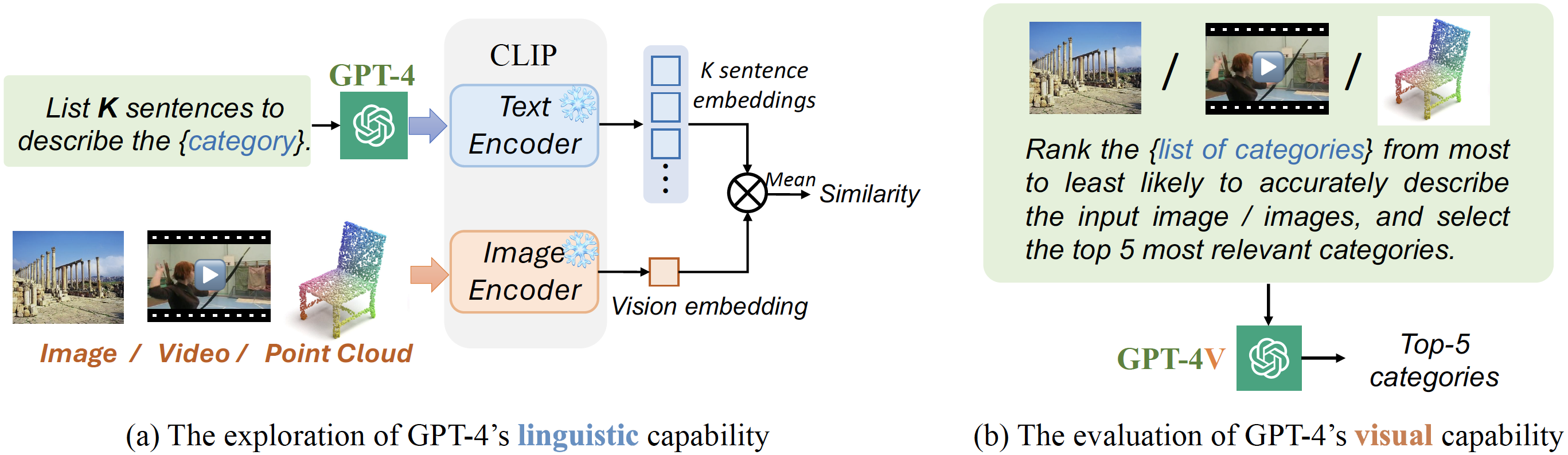

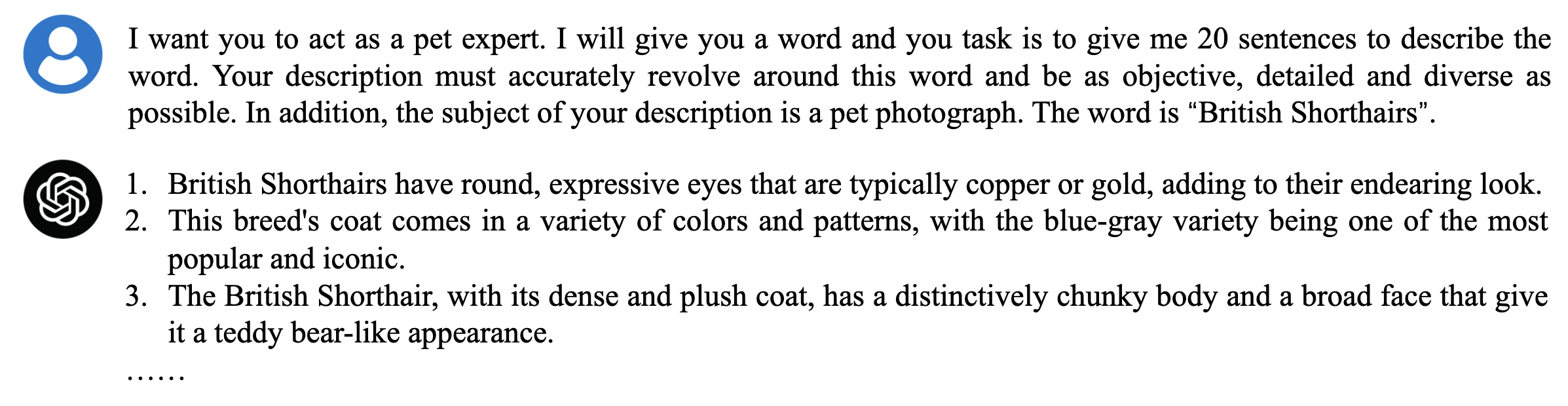

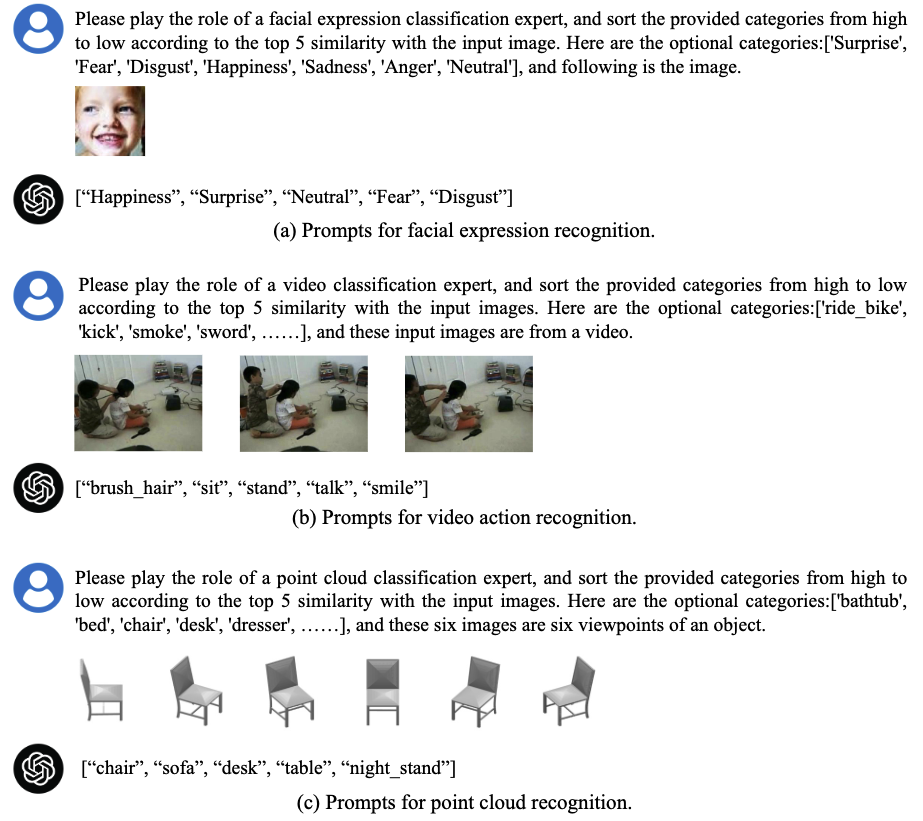

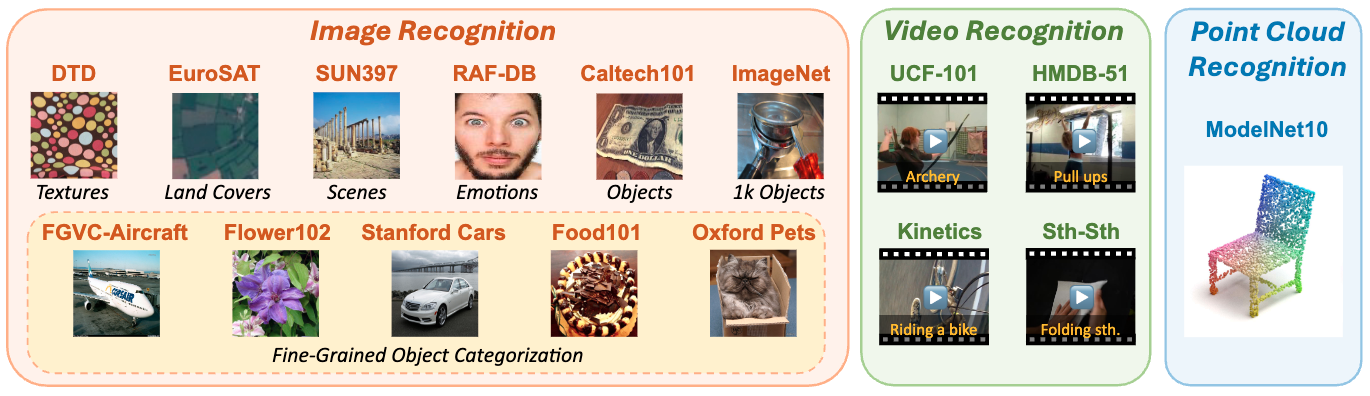

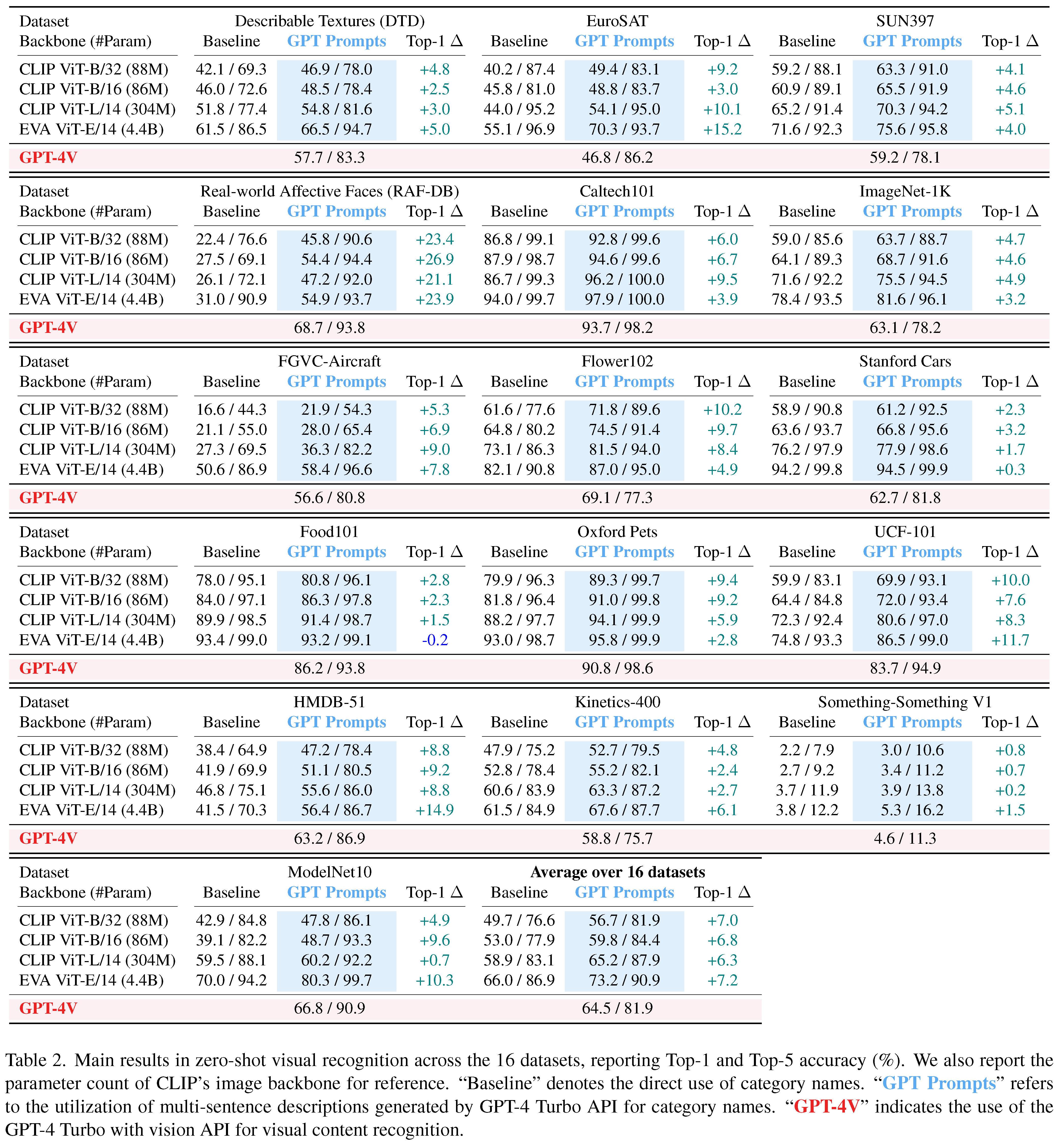

This work explores an essential, must-know baseline in light of the latest advancements in Generative Artificial Intelligence (GenAI): the use of GPT-4 for visual understanding. We focus on evaluating GPT-4's linguistic and visual capabilities in zero-shot visual recognition tasks. To ensure a comprehensive evaluation, we conducted experiments across three modalities (images, videos, and point clouds), spanning a total of 16 popular academic benchmarks.

📣 I also have other cross-modal projects that may interest you ✨.

Revisiting Classifier: Transferring Vision-Language Models for Video Recognition

Wenhao Wu, Zhun Sun, Wanli Ouyang

Bidirectional Cross-Modal Knowledge Exploration for Video Recognition with Pre-trained Vision-Language Models

Wenhao Wu, Xiaohan Wang, Haipeng Luo, Jingdong Wang, Yi Yang, Wanli Ouyang

Cap4Video: What Can Auxiliary Captions Do for Text-Video Retrieval?

Wenhao Wu, Haipeng Luo, Bo Fang, Jingdong Wang, Wanli Ouyang

Accepted by CVPR 2023 as 🌟Highlight🌟

- [Mar 7, 2024] Due to the recent removal of the RPD (requests per day) limit on the GPT-4V API, we've updated our predictions for all datasets using standard single testing (one sample per request). Check out the GPT4V Results, Ground Truth and Datasets we've shared! As a heads-up, 😭running all tests once costs around 💰$4000+💰.

- [Nov 28, 2023] We released our report on arXiv.

- [Nov 27, 2023] Our prompts have been released. Thanks for your star 😝.

Zero-shot visual recognition leveraging GPT-4's linguistic and visual capabilities.


We have pre-generated descriptive sentences for all the categories across the datasets, which you can find in the GPT_generated_prompts folder. Enjoy exploring!
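One way such descriptions can be used for zero-shot classification is to score each category by the average similarity between the image embedding and the embeddings of that category's descriptions. The sketch below is a minimal illustration under that assumption, not necessarily the exact procedure in our report: the function names are hypothetical, and toy 2-D vectors stand in for real embeddings from a vision-language encoder such as CLIP.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def classify_with_descriptions(
    image_emb: Sequence[float],
    desc_embs: Dict[str, List[Sequence[float]]],
) -> str:
    """Return the category whose descriptions are, on average, most similar to the image.

    desc_embs maps each category name to the embeddings of its generated
    descriptions (hypothetical input format). Both the image and the text
    embeddings are assumed to come from the same vision-language encoder.
    """
    scores = {
        category: sum(cosine(image_emb, e) for e in embs) / len(embs)
        for category, embs in desc_embs.items()
    }
    return max(scores, key=scores.get)


# Toy 2-D vectors standing in for real encoder outputs.
toy_descriptions = {
    "banded": [[1.0, 0.1], [0.9, 0.2]],
    "dotted": [[0.1, 1.0], [0.2, 0.8]],
}
print(classify_with_descriptions([0.95, 0.15], toy_descriptions))  # banded
```

Averaging per-description similarities keeps every description's vote; averaging the description embeddings first is a common alternative.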


We've also provided an example script to help you generate descriptions with GPT-4; for guidance, please refer to the generate_prompt.py file. Happy coding! For detailed information on all datasets used in our project, please refer to the config folder.


Execute the following command to generate descriptions with GPT-4.

```bash
# To run the script for a specific dataset, simply update the following line
# with the name of the dataset you're working with:
#   dataset_name = ["Dataset Name Here"]  # e.g., dtd
python generate_prompt.py
```
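For readers who want to see roughly what such a request looks like, here is a minimal sketch that builds a GPT-4 chat-completion payload for one category and splits the reply into individual descriptions. The prompt wording, the defaults (`n=5`, `model="gpt-4"`) and the helper names are illustrative assumptions, not the prompts used in generate_prompt.py. The sketch only builds and parses data, so it runs without an API key.

```python
import json
import re
from typing import Dict, List


def build_description_request(category: str, dataset: str, n: int = 5,
                              model: str = "gpt-4") -> Dict:
    """Build a chat-completion payload asking GPT-4 to describe one category.

    The prompt text and defaults are illustrative assumptions.
    """
    user_prompt = (
        f"Write {n} short sentences describing what a '{category}' looks like "
        f"in the {dataset} dataset. Put each sentence on its own line."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_prompt},
        ],
    }


def parse_descriptions(reply: str) -> List[str]:
    """Return one description per non-empty line, dropping '-', '*' or '1.' markers."""
    marker = re.compile(r"^\s*(?:[-*]|\d+[.)])\s*")
    lines = (marker.sub("", line).strip() for line in reply.splitlines())
    return [line for line in lines if line]


payload = build_description_request("banded", "DTD")
print(json.dumps(payload, indent=2))
print(parse_descriptions("1. Parallel stripes.\n2. Alternating color bands.\n"))
# ['Parallel stripes.', 'Alternating color bands.']
```

With the official `openai` Python package (v1 or later), such a payload could be sent with `OpenAI().chat.completions.create(**payload)`, which reads the key from the `OPENAI_API_KEY` environment variable.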


We share an example script that demonstrates how to use the GPT-4V API for zero-shot predictions on the DTD dataset. Please refer to the GPT4V_ZS.py file for a step-by-step guide on implementing this. We hope it helps you get started with ease!

```bash
# GPT4V zero-shot recognition script.
#   dataset_name = ["Dataset Name Here"]  # e.g., dtd
python GPT4V_ZS.py
```
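As a rough illustration of a single-sample GPT-4V request (one image per request, matching the standard single testing described above), the sketch below encodes an image as a base64 data URL, asks the model for its top-k categories from a candidate list, and keeps only valid category names from the reply. The prompt text, the model name `gpt-4-vision-preview`, `max_tokens=100` and the comma-separated reply format are assumptions for illustration; GPT4V_ZS.py remains the reference.

```python
import base64
from typing import Dict, List


def encode_image(image_bytes: bytes, mime: str = "image/jpeg") -> str:
    """Return a base64 data URL, the form accepted in an image_url content part."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime};base64,{b64}"


def build_zero_shot_request(image_bytes: bytes, categories: List[str],
                            model: str = "gpt-4-vision-preview",
                            top_k: int = 5) -> Dict:
    """Build a one-image chat payload asking for the top_k most likely categories."""
    prompt = (
        f"Choose the {top_k} most likely categories for this image from the list "
        f"below, most likely first, separated by commas.\n"
        f"Categories: {', '.join(categories)}"
    )
    return {
        "model": model,
        "max_tokens": 100,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": encode_image(image_bytes)}},
            ],
        }],
    }


def parse_ranked_labels(reply: str, categories: List[str]) -> List[str]:
    """Keep only reply entries that are valid category names, preserving their order."""
    valid = set(categories)
    return [c.strip() for c in reply.split(",") if c.strip() in valid]


# Placeholder bytes stand in for a real JPEG read from disk.
request = build_zero_shot_request(b"\xff\xd8 fake jpeg bytes", ["banded", "dotted", "striped"])
print(request["messages"][0]["content"][0]["text"])
print(parse_ranked_labels("striped, banded, zigzagged", ["banded", "dotted", "striped"]))
# ['striped', 'banded']
```

Filtering the reply against the candidate list guards against the model answering with a label outside the dataset's label space.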


All results are available in the GPT4V_ZS_Results folder! In addition, we've provided the Datasets link along with the corresponding ground truths (annotations folder) to help readers replicate the results. Note: for certain datasets, we may have removed prefixes from the sample IDs. For instance, for ImageNet, "ILSVRC2012_val_00031094.JPEG" was changed to "00031094.JPEG".
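If your own predictions still use the original file names, a small helper like the following (hypothetical names, a minimal sketch) can re-key them to match the shortened IDs. Only the ImageNet prefix `ILSVRC2012_val_` comes from the example above; other datasets may use different prefixes, or none at all.

```python
from typing import Dict


def strip_prefix(sample_id: str, prefix: str = "ILSVRC2012_val_") -> str:
    """Drop a known prefix from a sample ID, leaving other IDs unchanged."""
    return sample_id[len(prefix):] if sample_id.startswith(prefix) else sample_id


def normalize_keys(results: Dict[str, object],
                   prefix: str = "ILSVRC2012_val_") -> Dict[str, object]:
    """Re-key a {sample_id: value} mapping so it matches the shortened IDs."""
    return {strip_prefix(k, prefix): v for k, v in results.items()}


print(strip_prefix("ILSVRC2012_val_00031094.JPEG"))  # 00031094.JPEG
```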

| DTD | EuroSAT | SUN397 | RAF-DB | Caltech101 | ImageNet-1K | FGVC-Aircraft | Flower102 |
|---|---|---|---|---|---|---|---|
| 57.7 | 46.8 | 59.2 | 68.7 | 93.7 | 63.1 | 56.6 | 69.1 |
| Label | Label | Label | Label | Label | Label | Label | Label |

| Stanford Cars | Food101 | Oxford Pets | UCF-101 | HMDB-51 | Kinetics-400 | ModelNet-10 |
|---|---|---|---|---|---|---|
| 62.7 | 86.2 | 90.8 | 83.7 | 58.8 | 58.8 | 66.9 |
| Label | Label | Label | Label | Label | Label | Label |


With the provided prediction and annotation files, you can reproduce our top-1/top-5 accuracy results with the calculate_acc.py script.

```bash
# pred_json_path = 'GPT4V_ZS_Results/imagenet.json'
# gt_json_path = 'annotations/imagenet_gt.json'
python calculate_acc.py
```
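The top-k accuracy metric itself is simple: a sample counts as correct at k if its ground-truth label appears among the first k predicted labels. The sketch below assumes, purely for illustration, that the prediction file maps each sample ID to a ranked list of labels and the ground-truth file maps each sample ID to one label; the actual file layouts may differ, so calculate_acc.py is authoritative.

```python
import json
from typing import Dict, List, Sequence


def load_json(path: str):
    """Read a JSON file such as GPT4V_ZS_Results/imagenet.json."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def topk_accuracy(preds: Dict[str, List[str]], gts: Dict[str, str],
                  ks: Sequence[int] = (1, 5)) -> Dict[int, float]:
    """Percentage of ground-truth samples whose label is among the first k predictions.

    Assumes preds maps sample ID -> ranked list of labels and gts maps
    sample ID -> label. Samples with no prediction count as wrong.
    """
    acc = {}
    for k in ks:
        hits = sum(1 for sid, label in gts.items() if label in preds.get(sid, [])[:k])
        acc[k] = 100.0 * hits / len(gts)
    return acc


preds = {"a": ["cat", "dog"], "b": ["dog", "cat"], "c": ["bird"], "d": ["fish"]}
gts = {"a": "cat", "b": "cat", "c": "fish", "d": "fish"}
print(topk_accuracy(preds, gts))  # {1: 50.0, 5: 75.0}
```

Under those format assumptions, `topk_accuracy(load_json(pred_json_path), load_json(gt_json_path))` would give the top-1/top-5 numbers for one dataset.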

For guidance on setting up and running the GPT-4 API, we recommend the official OpenAI Quickstart documentation: OpenAI Quickstart Guide.

If you use our code in your research or wish to refer to the results, please star 🌟 this repo and use the following BibTeX 📑 entry.

```bibtex
@article{GPT4Vis,
  title={GPT4Vis: What Can GPT-4 Do for Zero-shot Visual Recognition?},
  author={Wu, Wenhao and Yao, Huanjin and Zhang, Mengxi and Song, Yuxin and Ouyang, Wanli and Wang, Jingdong},
  journal={arXiv preprint arXiv:2311.15732},
  year={2023}
}
```

This evaluation is built on the excellent works:

- CLIP: Learning Transferable Visual Models From Natural Language Supervision

- GPT-4

- Text4Vis: Transferring Vision-Language Models for Visual Recognition: A Classifier Perspective

We extend our sincere gratitude to these contributors.

For any questions, please feel free to file an issue.