stolon is a cloud native PostgreSQL manager for PostgreSQL high availability. It's cloud native because it lets you keep a highly available PostgreSQL inside your containers (Kubernetes integration) but also on every other kind of infrastructure (cloud IaaS, old-style infrastructures, etc.).

For an introduction to stolon, you can also take a look at this post.

- Leverages PostgreSQL streaming replication.

- Resilient to any kind of partitioning. While trying to keep the maximum availability, it prefers consistency over availability.

- Kubernetes integration, letting you achieve PostgreSQL high availability.

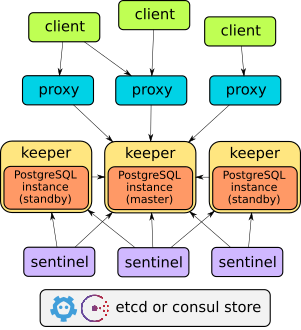

- Uses a cluster store like etcd or consul as a highly available data store and for leader election.

- Asynchronous (default) and synchronous replication.

- Full cluster setup in minutes.

- Easy cluster administration.

- Automatic service discovery and dynamic reconfiguration (handles postgres and stolon processes changing their addresses).

- Can use pg_rewind for fast instance resynchronization with the current master.

Stolon is composed of 3 main components:

- keeper: it manages a PostgreSQL instance, converging to the cluster view provided by the sentinel(s).

- sentinel: it discovers and monitors keepers and calculates the optimal cluster view.

- proxy: the client's access point. It enforces connections to the right PostgreSQL master and forcibly closes connections to unelected masters.
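The keeper's job of "converging to the cluster view" can be pictured as a reconciliation step: compare the role the instance currently has with the role the sentinel asks for, and act only on the difference. This is a simplified sketch; `ClusterView`, `Keeper`, and `Converge` are hypothetical names, and a real keeper also handles PostgreSQL configuration, replication setup, and resyncs.

```go
package main

import "fmt"

// ClusterView is a hypothetical, heavily simplified cluster view: it only
// records which keeper the sentinel wants as master.
type ClusterView struct {
	MasterID string
}

// Role is the role a PostgreSQL instance is currently running as.
type Role string

const (
	Master  Role = "master"
	Standby Role = "standby"
)

// Keeper is a hypothetical keeper managing a single PostgreSQL instance.
type Keeper struct {
	ID   string
	Role Role
}

// Converge moves the keeper toward the role requested by the cluster view
// and returns a description of the action it took.
func (k *Keeper) Converge(cv ClusterView) string {
	want := Standby
	if cv.MasterID == k.ID {
		want = Master
	}
	switch {
	case k.Role == want:
		return "nothing to do"
	case want == Master:
		k.Role = Master
		return "promote to master"
	default:
		k.Role = Standby
		return "demote and follow " + cv.MasterID
	}
}

func main() {
	k := &Keeper{ID: "keeper1", Role: Standby}
	fmt.Println(k.Converge(ClusterView{MasterID: "keeper1"})) // promote to master
	fmt.Println(k.Converge(ClusterView{MasterID: "keeper1"})) // nothing to do
	fmt.Println(k.Converge(ClusterView{MasterID: "keeper2"})) // demote and follow keeper2
}
```

Because each call only acts on the difference between current and desired state, a keeper can run this repeatedly in a loop and stays correct even if it misses intermediate cluster views.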

Stolon is under active development and used in different environments. Its on-disk format (store hierarchy and key contents) will probably change in the future to support new features. If a breaking change is needed, it'll be documented in the release notes and an upgrade path will be provided.

In any case, it's quite easy to reset a cluster from scratch while keeping the current master instance working and without losing any data.

- PostgreSQL >= 9.4

- etcd >= 2.0 or consul >= 0.6

```sh
./build
```

Stolon tries to be resilient to any partitioning problem. The cluster view is computed by the leader sentinel and helps avoid data loss (for example, it prevents an old, dead master that comes back from being elected as the new master).
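One way to see how a sentinel-computed cluster view prevents a returning master from being re-elected is a rule that keeps the current master while it is healthy and, only when it fails, promotes the most up-to-date healthy standby. The sketch below assumes that simplified rule; `KeeperState`, `ClusterView`, `NextView`, and the `WALPos` field are hypothetical, and stolon's real election logic takes more factors into account.

```go
package main

import "fmt"

// KeeperState is a hypothetical snapshot of what a sentinel knows about a keeper.
type KeeperState struct {
	ID      string
	Healthy bool
	WALPos  uint64 // assumed: last WAL position replayed by the instance
}

// ClusterView is a hypothetical versioned cluster view.
type ClusterView struct {
	Version  int
	MasterID string
}

// NextView returns the cluster view the leader sentinel would publish. The
// current master is kept while it is healthy; only when it fails is a new
// master chosen among healthy standbys, preferring the most up-to-date one.
func NextView(cv ClusterView, keepers []KeeperState) ClusterView {
	var best *KeeperState
	for i := range keepers {
		k := &keepers[i]
		if k.ID == cv.MasterID && k.Healthy {
			return cv // healthy master: never switch away from it
		}
		if k.ID != cv.MasterID && k.Healthy && (best == nil || k.WALPos > best.WALPos) {
			best = k
		}
	}
	if best == nil {
		return cv // no healthy standby to promote: leave the view unchanged
	}
	return ClusterView{Version: cv.Version + 1, MasterID: best.ID}
}

func main() {
	cv := ClusterView{Version: 1, MasterID: "k1"}

	// k1 dies: k3 is the most up-to-date healthy standby and is elected.
	cv = NextView(cv, []KeeperState{{"k1", false, 100}, {"k2", true, 90}, {"k3", true, 95}})
	fmt.Println(cv.Version, cv.MasterID) // 2 k3

	// k1 comes back with a higher WAL position, but k3 stays master because
	// the published view already names k3 and k3 is healthy.
	cv = NextView(cv, []KeeperState{{"k1", true, 100}, {"k2", true, 90}, {"k3", true, 95}})
	fmt.Println(cv.Version, cv.MasterID) // 2 k3
}
```

In this model the returning `k1` would then see that it is not the master in the cluster view and would have to resync from `k3`, discarding any writes the new master never received.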

There can be many different partitioning cases. The primary ones are covered by various integration tests, and more will be added in the future.

Since stolon by default prefers consistency over availability, clients need to be connected to the currently elected master and disconnected from unelected ones. For example, if you are connected to the currently elected master and the cluster subsequently elects a new master (for any valid reason, like a network partition), the client needs to be disconnected from the old master to preserve consistency; otherwise it will write data to the old master that will be lost when that instance resyncs. This is the purpose of the stolon proxy.

Because we need to forcibly close connections to unelected masters and to handle keepers/sentinels that can come and go and change their addresses, we implemented a dedicated proxy that reads its state directly from the store. Thanks to Go goroutines, it's very fast.

We are open to alternative solutions (PRs are welcome), like using haproxy, if they can meet the above requirements. For example, a hypothetical haproxy-based proxy would need a way to work with changing IP addresses, get the current cluster information, and forcibly close a connection when an haproxy backend is marked as failed (to achieve the latter, a possible solution, which still needs testing, would be to use the `on-marked-down shutdown-sessions` haproxy server option).

stolon is an open source project under the Apache 2.0 license, and contributions are gladly welcomed!