Repository for the paper "Collecting Visually-Grounded Dialogue with A Game Of Sorts" presented at LREC 2022. Please cite the following work if you use anything from this repository or from our paper:

@inproceedings{willemsen-etal-2022-collecting,

title = "Collecting Visually-Grounded Dialogue with A Game Of Sorts",

author = "Willemsen, Bram and

Kalpakchi, Dmytro and

Skantze, Gabriel",

booktitle = "Proceedings of the Thirteenth Language Resources and Evaluation Conference",

month = jun,

year = "2022",

address = "Marseille, France",

publisher = "European Language Resources Association",

url = "https://aclanthology.org/2022.lrec-1.242",

pages = "2257--2268"

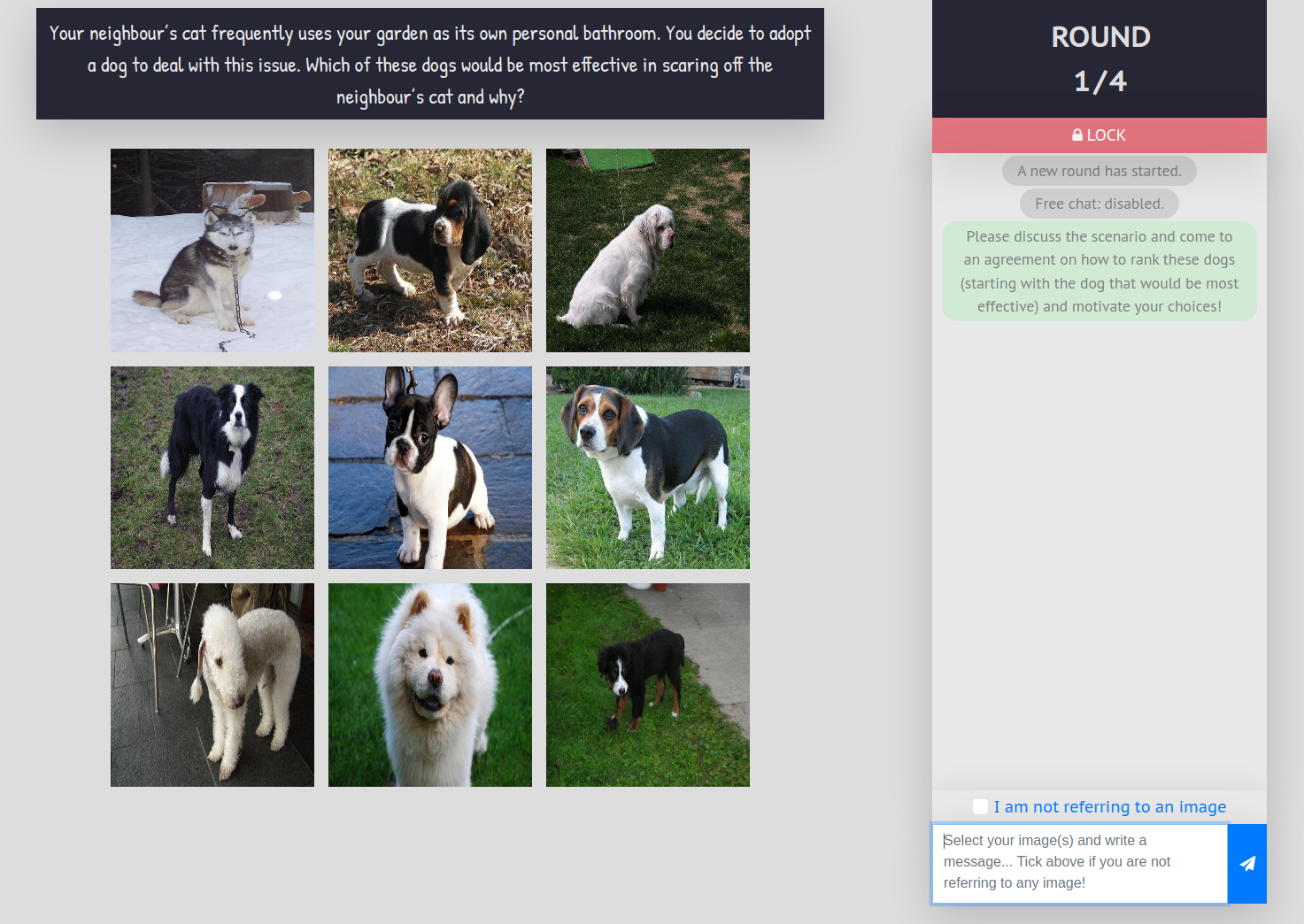

}A Game Of Sorts is a collaborative image ranking task. The default version of this "game" has two players tasked with reaching agreement on how to rank a set of nine images given some sorting criterion. Communication between players takes the form of a written dialogue which takes place under partial knowledge, as the position of the images on the gameboard is randomized and players cannot see each other's perspective. The game is played over four rounds with a recurring set of images. For a more detailed explanation of the game, we refer the reader to Section 3 of our paper.

Figure 1 provides an overview of the game's user interface. The gameboard consists of a sorting criterion, embedded in a scenario, and a set of nine images<sup>1</sup>, laid out in a 3x3 grid. When a user hovers over an image with their mouse cursor, the image pops over the other images, revealing a slightly zoomed-in version of the image in its original aspect ratio for improved visibility.

To the right of the gameboard is the chat area. Before being able to send a message, the player is expected to indicate whether they are referencing one or more images in their message (i.e., self-annotation). To do so, they either click the referenced image(s) on the gameboard or select the "I'm not referring to an image" option if their message does not contain a reference to any of the images. Above the chat area is a red button that reads "LOCK": when the players have reached an agreement on how to rank one or more of the images, they need to click this button after having selected the image in question on the gameboard. Note that images can only be locked in one at a time. If both players lock the same image, the image is assigned a rank; ranks are assigned in descending order. The assigned rank of a successfully ranked image cannot be changed.
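The locking behaviour described above can be illustrated with a small toy model. This is a sketch under stated assumptions, not the game's actual implementation (which is written in JavaScript): the class name `RankingRound`, its fields, and the choice to number ranks from 1 in the order images are agreed upon are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class RankingRound:
    """Toy model of the LOCK mechanic for one round (not the repository's code)."""

    image_ids: list
    ranking: list = field(default_factory=list)  # agreed images, in the order ranked
    pending: dict = field(default_factory=dict)  # player -> image currently locked

    def lock(self, player, image_id):
        """Register a lock; return the assigned rank if both players agree, else None."""
        if image_id not in self.image_ids:
            raise ValueError(f"unknown image: {image_id}")
        if image_id in self.ranking:
            # A successfully ranked image keeps its rank.
            raise ValueError("a ranked image cannot be changed")
        # Images are locked in one at a time, so a new lock replaces the old one.
        self.pending[player] = image_id
        other_locks = [img for p, img in self.pending.items() if p != player]
        if image_id in other_locks:
            self.ranking.append(image_id)
            self.pending.clear()
            # Assumption: the first agreed image receives rank 1, the next rank 2, etc.
            return len(self.ranking)
        return None
```

For example, if one player locks image `a` and the other locks image `b`, nothing is ranked; once the first player also locks `b`, that image receives the next available rank and can no longer be changed.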

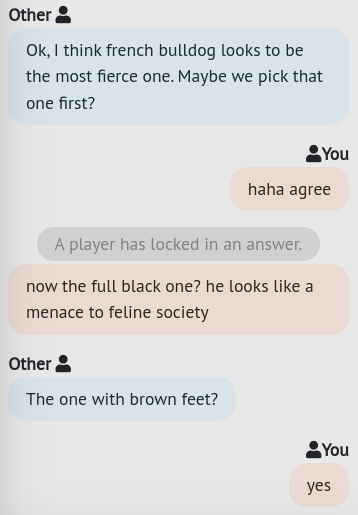

Figure 2 shows an excerpt of a dialogue in which the players discussed the scenario presented in Figure 1.

The game's codebase can be found in the ./game directory.

The application (client and server-side) is written in JavaScript and makes use of Embedded JavaScript (EJS) templates.

The database is MongoDB.

We use Docker to provide a containerized version of the application.

🚧 NOTE: We are refactoring the code to improve readability and usability; the repo will be updated and code added once necessary improvements have been made.

The collected data, as reported in our paper, can be found in the ./dataset directory.

This includes the dialogues, player actions, answers to the post-game questionnaire, the specific game configurations, and other metadata required to reconstruct the interactions.

The data is stored in JSON Lines-formatted files. Below is an example of a message record, followed by a description of its fields.

    {
        "interaction_id": "61c09b4ee90a3cc04fdce194",
        "game_id": "game_1_dogs_1",
        "board_id": "game_1_dogs_1_board_1",
        "round_number": 1,
        "round_active": true,
        "message_number": 30,
        "username": "tiny-elephant-9291",
        "body_text": "Ok, how about the white curly dog. Looks like it has a strange haircut.",
        "image_ids": [
            "n02093647_2585"
        ],
        "timestamp": 1640013899727
    }

| Name | Type | Description |
|---|---|---|
| interaction_id | str | Interaction ID |
| game_id | str | Game ID |
| board_id | str | Gameboard ID |
| round_number | int | Round number; starts at 0 if practice round, otherwise starts at 1 |
| round_active | bool | Indicates whether the message was sent during (true) or after (false) a round |
| message_number | int | Message number; resets each round, starts at 1 |
| username | str | Username of the player that sent this message |
| body_text | str | Text content of this message |
| image_ids | array | Array of image IDs (as str) that were selected by the player to indicate which images were referenced in the message: self-annotation |
| timestamp | int | Unix timestamp indicating the time at which the server received the message |
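As a rough illustration of how these fields can be used, the following Python sketch parses JSON Lines records and groups messages into per-round dialogues. The helper names (`parse_messages`, `load_messages`, `group_into_dialogues`, `to_datetime`) are hypothetical and not part of this repository. The sketch also assumes that `timestamp` is in milliseconds, which the 13-digit example value suggests; see the ./dataset README for the actual file names and contents.

```python
import json
from collections import defaultdict
from datetime import datetime, timezone


def parse_messages(lines):
    """Parse JSON Lines text (one JSON object per line) into a list of dicts."""
    return [json.loads(line) for line in lines if line.strip()]


def load_messages(path):
    """Read a JSON Lines file; the path is supplied by the caller."""
    with open(path, encoding="utf-8") as handle:
        return parse_messages(handle)


def group_into_dialogues(messages):
    """Group messages by (interaction_id, round_number), ordered by message_number."""
    dialogues = defaultdict(list)
    for msg in messages:
        dialogues[(msg["interaction_id"], msg["round_number"])].append(msg)
    for turns in dialogues.values():
        turns.sort(key=lambda m: m["message_number"])
    return dict(dialogues)


def to_datetime(timestamp_ms):
    """Convert a timestamp to a UTC datetime, assuming it is in milliseconds."""
    return datetime.fromtimestamp(timestamp_ms / 1000, tz=timezone.utc)


# The example record shown above, serialized as a single JSON Lines row.
sample_line = json.dumps({
    "interaction_id": "61c09b4ee90a3cc04fdce194",
    "game_id": "game_1_dogs_1",
    "board_id": "game_1_dogs_1_board_1",
    "round_number": 1,
    "round_active": True,
    "message_number": 30,
    "username": "tiny-elephant-9291",
    "body_text": "Ok, how about the white curly dog. Looks like it has a strange haircut.",
    "image_ids": ["n02093647_2585"],
    "timestamp": 1640013899727,
})

messages = parse_messages([sample_line])
dialogues = group_into_dialogues(messages)
```

Grouping on `(interaction_id, round_number)` follows from `message_number` resetting each round; messages with `round_active` set to `false` were sent after a round ended and may need to be filtered out, depending on the use case.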

More examples and an explanation of the content of each file can be found in the ./dataset README.

The 45 images used in the data collection (as reported in our paper) were taken from various publicly-available datasets.

Pointers are provided in the ./dataset README.

A bash script is provided to automatically download the images to ./dataset/images.

The paper "Resolving References in Visually-Grounded Dialogue via Text Generation" contributes, among other things, mention annotations at the span level and has all annotated mentions aligned with the images they denote.

    git clone https://github.com/willemsenbram/reference-resolution-via-text-generation.git

See the ./annotations directory.

Please cite their work if you make use of these annotations or other material from their repo or paper.

Footnotes

1. The images shown here were taken from Open Images V6.