- ICT.OPEN, 7 April 2022 - OpenCSD: Unified Architecture for eBPF-powered Computational Storage Devices (CSD) with Filesystem Support

- arXiv, 13 December 2021 - Past, Present and Future of Computational Storage: A Survey

- arXiv, 29 November 2021 - ZCSD: a Computational Storage Device over Zoned Namespaces (ZNS) SSDs

on-going / pending

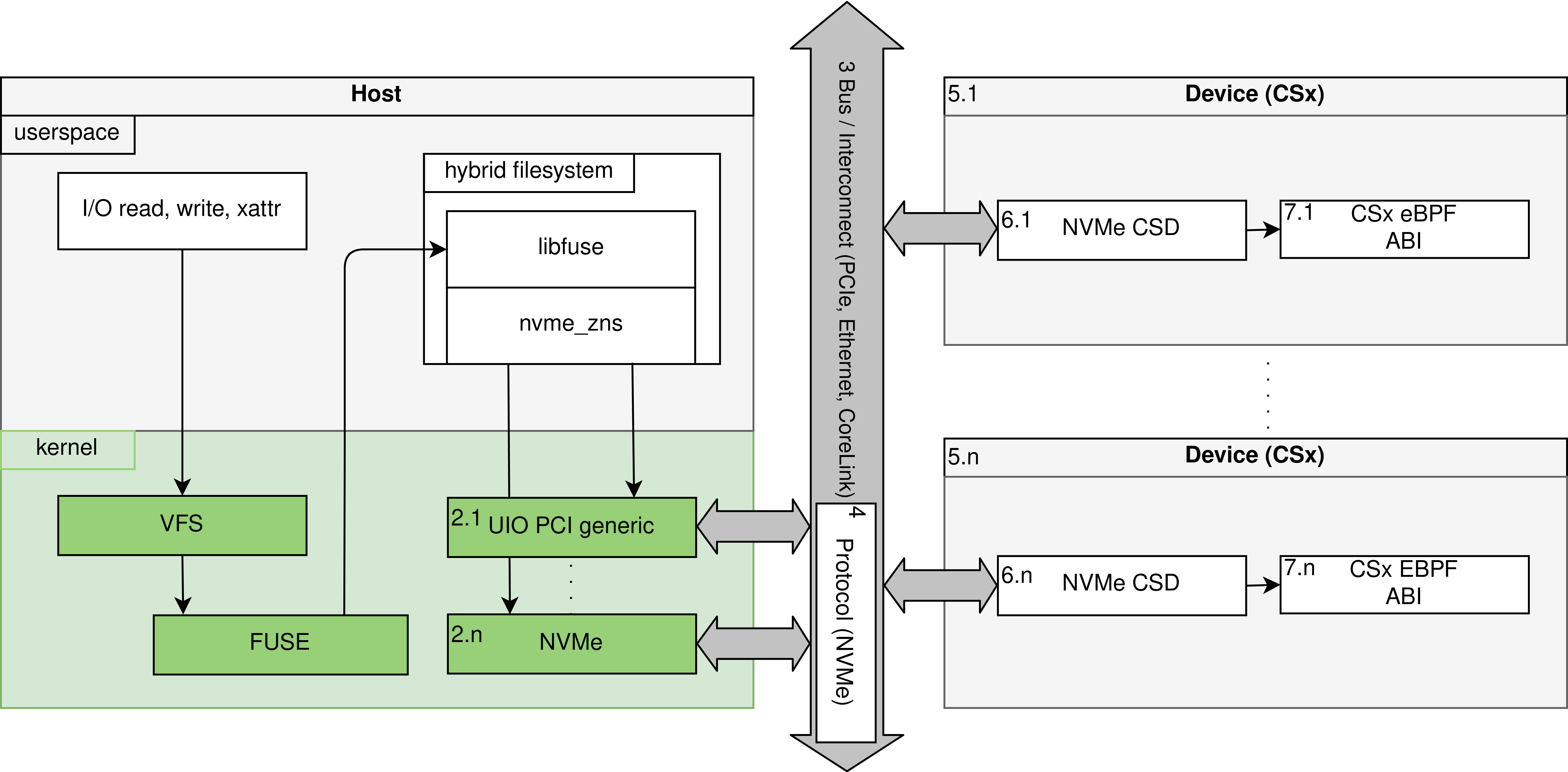

OpenCSD is an improved version of ZCSD that integrates a snapshot-consistent log-structured filesystem (LFS), FluffleFS, with Zoned Namespaces (ZNS) Computational Storage Devices (CSD). Below is a diagram of the overall architecture as presented to the end user. The actual implementation differs, however, because it relies on emulation through technologies such as QEMU, uBPF and SPDK.

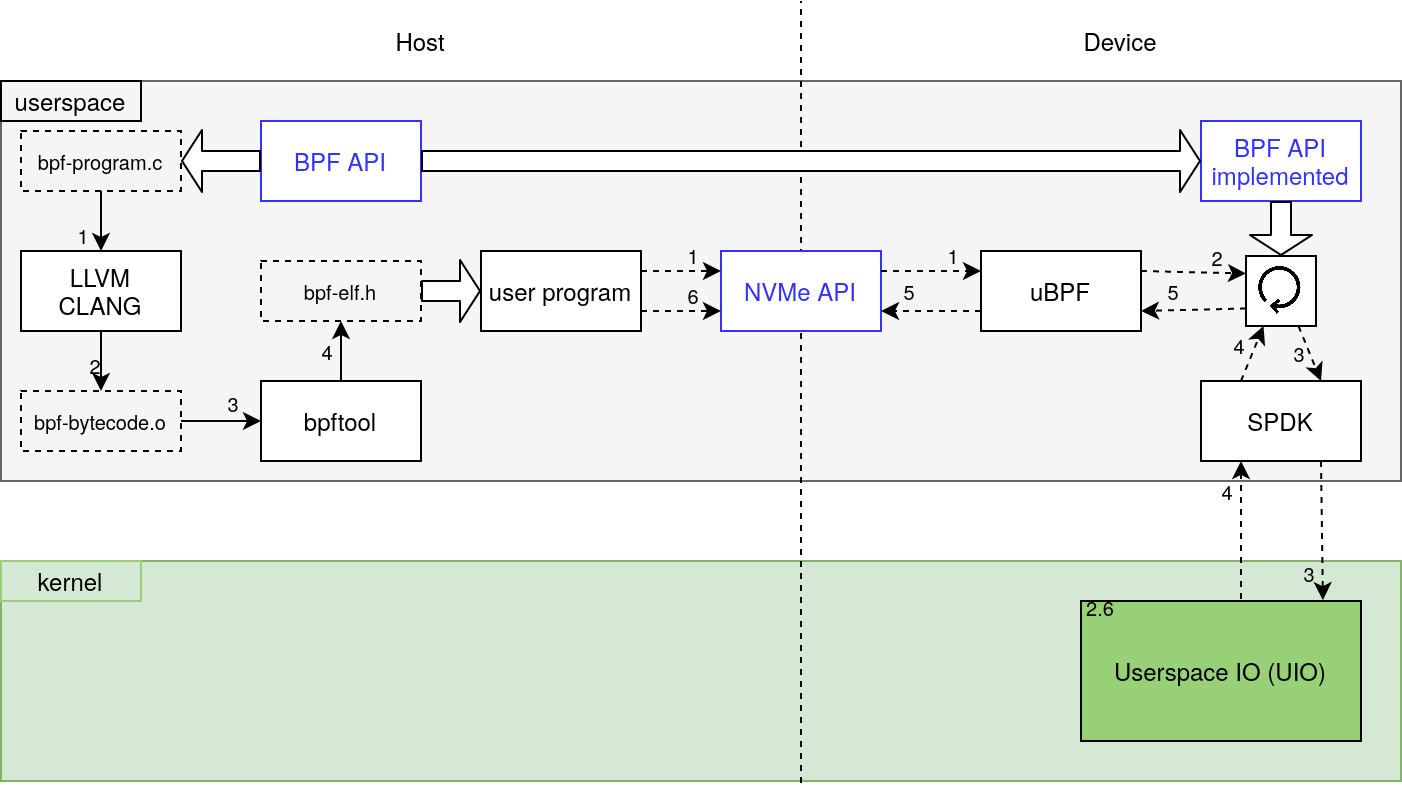

ZCSD is a full stack prototype to execute eBPF programs as if they are running on a ZNS CSD SSD. The entire prototype can be run from userspace by utilizing existing technologies such as SPDK and uBPF. Since consumer ZNS SSDs are still unavailable, QEMU can be used to create a virtual ZNS SSD. The programming and interactive steps of individual components are shown below.

The getting started guide & examples are actively being reworked to be easier to follow and to lower the barrier to entry. The Setup section should still be complete; alternatively, the old readme of the ZCSD prototype is still readily available.

- Directory structure

- Modules

- Dependencies

- Setup

- Examples

- Contributing

- Licensing

- References

- Progress Report

- Logbook

- qemu-csd - Project source files

- cmake - Small cmake snippets to enable various features

- dependencies - Project dependencies

- docs - Doxygen generated source code documentation

- playground - Small toy examples or other experiments

- python - Python scripts to aid in visualization or measurements

- scripts - Shell scripts primarily used by CMake to install project dependencies

- tests - Unit tests and possibly integration tests

- thesis - Thesis written on OpenCSD using LaTeX

- zcsd - Documentation on the previous prototype.

- compsys 2021 - CompSys 2021 presentation written in LaTeX

- documentation - Individual Systems Project report written in LaTeX

- presentation - Individual Systems Project midterm presentation written in LaTeX

- .vscode - Launch targets and settings to debug programs running inside QEMU over SSH

| Module | Task |

|---|---|

| arguments | Parse commandline arguments to relevant components |

| bpf_helpers | Headers to define functions available from within BPF |

| bpf_programs | BPF programs ready to run on a CSD using bpf_helpers |

| fuse_lfs | Log Structured Filesystem in FUSE |

| nvme_csd | Emulated additional NVMe commands to enable BPF CSDs |

| nvme_zns | Interface to handle zoned I/O using abstracted backends |

| nvme_zns_memory | Non-persistent memory backed emulated ZNS SSD backend |

| nvme_zns_spdk | Persistent SPDK backed ZNS SSD backend |

| output | Neatly control messages to stdout and stderr with levels |

| spdk_init | Provides SPDK initialization and handles for nvme_zns & nvme_csd |

This project has a large selection of dependencies as shown below. Note, however, that these dependencies are already available in the QEMU base image.

Warning: Meson must be below version 0.60 due to a bug in DPDK

- General

- Linux 5.5 or higher

- compiler with c++17 support

- clang 10 or higher

- cmake 3.18 or higher

- python 3.x

- mesonbuild < 0.60 (pip3 install meson==0.59)

- pyelftools (pip3 install pyelftools)

- ninja

- cunit

- Documentation

- doxygen

- LaTeX

- Code Coverage

- ctest

- lcov

- gcov

- gcovr

- Continuous Integration

- valgrind

- Python scripts

- virtualenv

The following dependencies are automatically compiled and installed into the build directory.

| Dependency | System | Version |

|---|---|---|

| backward | ZCSD | 1.6 |

| boost | ZCSD | 1.74.0 |

| bpftool | ZCSD | 5.14 |

| bpf_load | ZCSD | 5.10 |

| dpdk | ZCSD | spdk-21.11 |

| generic-ebpf | ZCSD | c9cee73 |

| fuse-lfs | OpenCSD | 526454b |

| libbpf | ZCSD | 0.5 |

| libfuse | OpenCSD | 3.10.5 |

| libbpf-bootstrap | ZCSD | 67a29e5 |

| linux | ZCSD | 5.14 |

| spdk | ZCSD | 22.01 |

| isa-l | ZCSD | spdk-v2.30.0 |

| rocksdb | OpenCSD | 6.25.3 |

| qemu | ZCSD | 6.1.0 |

| uBPF | ZCSD | 9eb26b4 |

| xenium | OpenCSD | f1d28d0 |

The project requires between 15 and 30 GB of disc space depending on your configuration. While there are no particular system memory or performance requirements for running OpenCSD, debugging requires between 10 and 16 GB of reserved system memory. The table shown below explains the differences between the possible configurations and their requirements.

| Storage Mode | Debugging | Disc space | System Memory | Cmake Parameters |

|---|---|---|---|---|

| Non-persistent | No | 15 GB | < 2 GB | -DCMAKE_BUILD_TYPE=Release -DIS_DEPLOYED=on -DENABLE_TESTS=off |

| Non-persistent | Yes | 15 GB | 13 GB | -DCMAKE_BUILD_TYPE=Debug -DIS_DEPLOYED=on |

| Persistent | No | 30 GB | 10 GB | -DCMAKE_BUILD_TYPE=Release -DENABLE_TESTS=off |

| Persistent | Yes | 30 GB | 16 GB | default |

OpenCSD must be configured and compiled before it can be used. After checking out the OpenCSD repository this can be achieved by executing the commands shown below. Each section of individual commands must be executed from the root of the project directory.

git submodule update --init

mkdir build

cd build

cmake .. # For non default configurations copy the cmake parameters before the ..

cmake --build .

# Do not use make -j $(nproc), CMake is not able to solve concurrent dependency chain

cmake .. # this prevents re-compiling dependencies on every next make command

cd build/qemu-csd

source activate

qemu-img create -f raw znsssd.img 16777216 # 34359738368

# By default qemu will use 4 CPU cores and 8GB of memory

./qemu-start.sh

# Wait for QEMU VM to fully boot... (might take some time)

git bundle create deploy.git HEAD

rsync -avz -e "ssh -p 7777" deploy.git arch@localhost:~/

# Type password (arch)

ssh arch@localhost -p 7777

# Type password (arch)

git clone deploy.git qemu-csd

rm deploy.git

cd qemu-csd

git -c submodule."dependencies/qemu".update=none submodule update --init

mkdir build

cd build

cmake -DENABLE_DOCUMENTATION=off -DIS_DEPLOYED=on ..

# Do not use make -j $(nproc), CMake is not able to solve concurrent dependency chain

cmake --build .

git remote set-url origin git@github.com:Dantali0n/qemu-csd.git

ssh-keygen -t rsa -b 4096

eval $(ssh-agent) # must be done after each login

ssh-add ~/.ssh/NAME_OF_KEY

virtualenv -p python3 python

cd python

source bin/activate

pip install -r requirements.txt

Running and debugging programs is an essential part of development. Often, a high barrier to entry and clumsy development procedures can severely hinder productivity. Qemu-csd comes with a variety of scripts preconfigured to reduce this initial barrier and enable quick development iterations.

Within the build folder will be a qemu-csd/activate script. This script can be sourced from any shell (source qemu-csd/activate). It configures environment variables such as LD_LIBRARY_PATH while also exposing an essential sudo alias: ld-sudo.

The environment variables ensure any linked libraries can be found for targets compiled by CMake. Additionally, ld-sudo provides a mechanism to start targets with sudo privileges while retaining these environment variables. The environment can be deactivated at any time by executing deactivate.

TODO: Generate integer data file, describe qemucsd and spdk-native applications, usage parameters, relevant code segments to write your own BPF program, relevant code segments to extend the prototype.

For debugging, several mechanisms are put in place to simplify the process. Firstly, vscode launch files are created to debug applications even though they require environment configuration. Any application can be launched using the following set of commands:

source qemu-csd/activate

# For when the target does not require sudo

gdbserver localhost:2222 playground/play-boost-locale

# For when the target requires sudo privileges

ld-sudo gdbserver localhost:2222 playground/play-spdk

Note that when QEMU is running the port 2222 will be used by QEMU instead.

The launch targets in .vscode/launch.json can be easily modified or extended.

When gdbserver is running, open vscode and select the root folder of qemu-csd. Navigate to the source files of interest, set breakpoints and select the launch target from the dropdown (top left). The debugging panel in vscode can be accessed quickly by pressing ctrl+shift+d.

Alternative debugging methods such as using gdb TUI or gdbgui should work but will require more manual setup.

Debugging on QEMU is similar but uses different launch targets in vscode. These targets automatically log in using SSH and forward the gdbserver connection.

More native debugging sessions are also supported. Simply log in to QEMU and start the gdbserver manually. On the host connect to this gdbserver and set up substitute-path.

On QEMU:

# from the root of the project folder.

cd build

source qemu-csd/activate

ld-sudo gdbserver localhost:2000 playground/play-spdk

On host:

gdb

target remote localhost:2222

set substitute-path /home/arch/qemu-csd/ /path/to/root/of/project

More detailed information about development & debugging for this project can be found in the report.

Debugging FUSE filesystem operations can be done through the compiled filesystem binaries by adding the -f argument. This argument will keep the FUSE filesystem process in the foreground.

gdb ./filesystem

b ...

run -f mountpoint

This section documents all configuration parameters that the CMake project exposes and how they influence the project. For more information about the CMake project see the report generated from the documentation folder. Below all parameters are listed along with their default value and a brief description.

| Parameter | Default | Use case |

|---|---|---|

| ENABLE_TESTS | ON | Enables unit tests and adds tests target |

| ENABLE_CODECOV | OFF | Produce code coverage report with unit tests |

| ENABLE_DOCUMENTATION | ON | Produce code documentation using doxygen & LaTeX |

| ENABLE_PLAYGROUND | OFF | Enables playground targets |

| ENABLE_LEAK_TESTS | OFF | Add compile parameter for address sanitizer |

| IS_DEPLOYED | OFF | Indicate that CMake project is deployed in QEMU |

For several parameters a more in-depth explanation is required, primarily IS_DEPLOYED. This parameter exists because the CMake project is used both to compile and configure QEMU and to compile binaries that run inside QEMU. As a result, the CMake project needs to be able to identify whether it is being executed outside of QEMU or not. This is what IS_DEPLOYED facilitates. In particular, IS_DEPLOYED prevents the compilation of QEMU from source.

This project is available under the MIT license. Several limitations apply, including:

- Source files with an alternative author or license statement other than Dantali0n and MIT respectively.

- Images subject to copyright or usage terms, such as the VU and UvA logos.

- CERN beamer template files by Jerome Belleman.

- Configuration files that can't be subject to licensing such as doxygen.cnf or .vscode/launch.json

- ZNS

- Filesystems

- Linux Inode

- Filesystem Benchmarks

- FUSE

- To FUSE or Not to FUSE: Performance of User-Space File Systems

- FUSE kernel documentation

- FUSE forget

- Other FUSE3 filesystems that can be used for reference

- LFS

- BPF

- Linux Kernel related

- BPF-CO-RE & BTF

- Linux BTF documentation

- BPF portability and CO-RE Highly Recommended Read

- libbpf / standalone related

- BCC to libbpf conversion

- Cilium BPF + XDP reference guide Highly Recommended Read

- bpf_load

- bpf-bootstrap

- Userspace BPF execution / interpretation

- Verifiers

- Hardware implementations

- Various

- Repositories / Libraries

- Patchsets

- Week 1 -> Goal: get fuse-lfs working with libfuse

- Add libfuse, fuse-lfs and rocksdb as dependencies

- Create custom libfuse fork to support non-privileged installation

- Configure CMake to install libfuse

- Configure environment script to setup pkg-config path

- Use Docker in Docker (dind) to build docker image for Gitlab CI pipeline

- Investigate and document how to debug fuse filesystems

- Determine and document RocksDB required syscalls

- Setup persistent memory that can be shared across processes

- Split into daemon and client modes

- Week 2 -> Goal: get a working LFS filesystem

- Create solid digital logbook to track discussions

- Week 3 -> Investigate FUSE I/O calls and fadvise

- Get a working LFS filesystem using FUSE

- What are the requirements for these filesystems.

- Create FUSE LFS path to inode function.

- Test path to inode function using unit tests.

- Setup research questions in thesis.

- Run filesystem benchmarks with strace

- RocksDB DBBench

- Filebench

- Use fsetxattr for 'process' attributes in FUSE

- Document how this can enable CSD functionality in regular filesystems

- Week 4 -> FUSE LFS filesystem

- Get a working LFS filesystem using FUSE

- What are the requirements for these filesystems? (research question)

- Snapshots

- GC

- Test path to inode function using unit tests.

- Week 5 -> FUSE LFS filesystem

- Get a working LFS filesystem using FUSE

- Filesystem considerations for fair testing against proven filesystems

- fsync must actually flush to disc.

- In memory caching is only allowed if filesystem can recover to a stable state upon crash or power loss.

- Filesystem considerations to achieve functionality

- Upon initialization all directory / filename and inode relations are restored from disc and stored in memory. These datastructures utilize maps as the lookup is O(log n).

- Periodically all changes are flushed to disc (every 5 seconds).

- Use bitmaps to determine occupied sectors.

- Snapshots are memory backed and remain as long as the file is open.

- GC needs to check both open snapshot sectors and occupied sector bitmap.

- GC uses two modes

- (foreground) blocking if there is no more drive space to perform the append.

- (background) periodic to clear entirely unoccupied zones.

- Reserve last two zones from total space for GC operations.

- Week 6 -> FUSE LFS filesystem

- Get a working LFS filesystem using FUSE

- Filesystem constraints / limitations

- No power atomicity

- Test path to inode function using unit tests.

- Test checkpoint functionality

- Write a nat block to the drive

- Function to append nat block

- Write an inode block to the drive

- Inode append function

- Decide location of size and filename fields on disc

- inode vs file / data block

- Account for zone capacity vs zone size differences

- Ensure lba_to_position and position_to_lba solve these gaps.

- Configurable zone cap / zone size gap in NvmeZnsMemoryBackend

- Correctly determine zone cap / zone size gap in NvmeZnsSpdkBackend

- Week 7 -> FUSE LFS filesystem

- Get a working LFS filesystem using FUSE

- Write an inode block to the drive

- Inode append function

- Decide location of size and filename fields on disc

- inode vs file / data block

- Week 8 -> FUSE LFS filesystem

- Run filesystem benchmarks with strace

- RocksDB DBBench

- Filebench

- Week 10 -> FUSE LFS Filesystem

- Write inode block to drive

- Inode create / update / append

- Decide location of size and filename fields on disc

- Read file data from drive

- Write file data to drive

- SIT block management for determining used sectors (use bitfields)

- log_pos to artificially move the start of the log zone (same as random_pos)

- Garbage collection & compaction

- rename, unlink and rmdir

- Callback interface using nlookup / forget to prevent premature firing

- Temporary file duplication? for renamed files and directories

- What if an open handle deletes the file / directory that has been renamed??

- Week 12 -> FUSE LFS Filesystem

- Implement statfs

- Implement truncate

- Implement CSD state management using extended attributes

- Implement in-memory snapshots

- In-memory snapshots with write changes become persistent after the kernel finishes execution. The files use special filenames that are reserved to the filesystem (use filename + filehandle).

- Week 14 -> FUSE LFS Filesystem

- Run DBBench & Filebench early benchmarks

- Week 16 -> FUSE LFS Filesystem

- Optimizations, parallelism and queue depth > 1

- Wrap all critical datastructures in wrapper classes that intrinsically manages locks (mutexes)

- Figure out how SPDK can notify the caller of where the data was written

Serves as a place to quickly store digital information until it can be refined and processed into the master thesis.

- In order to analyze the exact calls RocksDB makes during its benchmarks, tools like strace can be used.

- Several methods exist to prototype filesystem integration for CSDs. Among these are using LD_PRELOAD to override system calls such as read(), write() and open(). In this design we choose to use FUSE as this simplifies some of the management and opens the possibility of allowing parallelism, while the interface between FUSE and the filesystem calls is still thin enough that the two can be correlated.

- The filesystem can use a snapshot concurrency model with reference counts.

- Each file can maintain a special table that associates system calls with CSD kernels. To isolate this behavior (to specific users) we can use filehandles and process IDs (These should be available for most FUSE API calls anyway).

- The design should reuse existing operating system interfaces as much as possible. Any new API or call should be well motivated with solid arguments. As an initial idea we can investigate reusing POSIX fadvise.

- As requirements, our FUSE LFS needs GC and snapshots. Parallelism would be nice to have.

- Crossing kernel and userspace boundaries can be achieved using ioctl should the need arise.

- As experiment for evaluation we should try to run RocksDB benchmarks on top of the FUSE LFS filesystem while offloading bloom filter computations from SST tables

- Filebench benchmark to identify filesystems calls. db_bench from RocksDB, run both with strace

- Filesystem design and CSD requirements, why FUSE, why build from scratch

- FUSE, is it enough? filesystem calls, does the API support what we need. Research question.

- How does it perform compared to other filesystems / solutions

- Characteristics to prove

- Data reduction

- Simplicity of algorithms (BPF) vs 'vanilla'

- Performance (static analysis of no. of clock cycles using LLVM-MCA)

- Experiments

- Write append in separate process and CSD averaging of file.

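The reference-counted snapshot model mentioned in the notes above could be sketched roughly as follows. This is only an illustration under assumptions: SnapshotRefs and its methods are hypothetical names, and keying snapshots by inode number is an assumption rather than the FluffleFS design.

```cpp
#include <cstdint>
#include <map>

// Tracks how many open handles reference each in-memory snapshot.
// Hypothetical helper, keyed by inode number (an assumption).
class SnapshotRefs {
public:
    // Called when a handle starts using the snapshot; returns the new count.
    std::uint32_t acquire(std::uint64_t inode) { return ++refs_[inode]; }

    // Called when a handle is done; returns true when the last reference
    // is gone and the snapshot can be discarded.
    bool release(std::uint64_t inode) {
        auto it = refs_.find(inode);
        if (it == refs_.end()) return false;  // nothing to release
        if (--it->second > 0) return false;   // still referenced
        refs_.erase(it);
        return true;
    }

    bool alive(std::uint64_t inode) const { return refs_.count(inode) != 0; }

private:
    std::map<std::uint64_t, std::uint32_t> refs_;
};
```

A snapshot would then stay in memory for as long as at least one handle holds a reference to it.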
For convenience and for the sake of reasoning, a map between common POSIX I/O and FUSE API calls is needed.

POSIX

- close

- (p/w)read

- (p/w)write

- lseek

- open

- fcntl

- readdir

- posix_fadvise

FUSE

- getattr

- readdir

- open

- create

- read

- write

- unlink

- statfs

Required syscalls, by analysis of https://github.com/facebook/rocksdb/blob/7743f033b17bf3e0ea338bc6751b28adcc8dc559/env/io_posix.cc

- clearerr (stdio.h)

- close (unistd.h)

- fclose (stdio.h)

- feof (stdio.h)

- ferror (stdio.h)

- fread_unlocked (stdio.h)

- fseek (stdio.h)

- fstat (sys/stat.h)

- fstatfs (sys/statfs.h / sys/vfs.h)

- ioctl (sys/ioctl.h)

- major (sys/sysmacros.h)

- open (fcntl.h)

- posix_fadvise (fcntl.h)

- pread (unistd.h)

- pwrite (unistd.h)

- readahead (fcntl.h + _GNU_SOURCE)

- realpath (stdlib.h)

- sync_file_range (fcntl.h + _GNU_SOURCE)

- write (unistd.h)

Potential issues:

- Use of IOCTL

- Use of IO_URING

Filesystem design and architecture are continuously being improved and modified; see source files such as fuse_lfs_disc.hpp until the design is frozen.

- Log-structured

- Persistent

- Directories / files with names up to 480 bytes

- File / directory renaming

- In memory snapshots

- Garbage Collection (GC)

- Non-persistent conditional extended attributes

- fsync must actually flush to disc

- In memory caching is only allowed if filesystem can recover to a valid state

- data_position struct and its validity and comparisons being controlled by their size property is clunky and counterintuitive.

- random zone can only be rewritten once it is completely full.

- compaction is only performed upon garbage collection, initial writes might use only partially filled data blocks.

- A kernel CAN NOT return more data than the snapshotted size of the file it is reading.

- A kernel CAN NOT return more data than is specified in the read request.

- A race condition in update_file_handle can potentially remove other unrelated file handles from open_inode_vect.

- Single read and write operations for kernels are limited to 512K strides.

- CSD kernels are written such that they require to be sector aligned.

- CSD write event kernels can only perform append operations. If the write partially overwrites pre-existing data, an error will be raised.

- Endian conversions between eBPF and host architecture not covered, assumed to be the same.

- Write kernels are assumed to behave and communicate accurate information about operations they performed.

- The event write kernel in its current form makes little practical sense: the write is first submitted regularly from the host, after which the kernel must read the data again before computing results. See practical write event kernel.

- Upon a write event kernel submission:

- The filesystem submits the write request data alongside its own write kernel. In addition, the user submits 1 intermediate kernel and 1 finalize kernel.

- This write kernel runs first and performs the native filesystem write keeping track of written sectors and their locations.

- After each sector the intermediate kernel runs and is shown the written sector data (ZERO COPY). It is allowed to submit state, an arbitrary segment of memory that will be made available the next time the user submitted kernel runs. THE INTERMEDIATE KERNEL IS NOT ALLOWED TO PERFORM ANY READ / WRITE OPERATIONS.

- After the filesystem write kernel has finalized and all intermediate kernels have run only then is the finalize kernel called. This kernel is allowed to perform read and write operations.

- The finalize kernel reports the actual final size of the write operation along with any written LBAs that are now also part of the file.

- The CSD runtime also reports read and written locations so the filesystem can verify the kernels behavior.

- Finally, the return data from the kernel is used to synchronize the file's datastructures and finalize the changes to the file.

- The main advantages of such an elaborate mechanism are

- It prevents the event kernel from having to reread the data that was just written for the write request (ZERO COPY).

- The data of the write request only has to be moved once instead of twice.

- It reduces the number of round-trips between the host and device to just one from two.
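The write event flow described above can be pictured with a small host-side simulation. This is a sketch under stated assumptions, not the OpenCSD runtime: run_write_event, KernelState and the two kernel typedefs are hypothetical names, sectors are assumed to land on contiguous LBAs, and the "write" is only simulated by recording the LBA.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

using Sector = std::vector<std::uint8_t>;

// State carried between invocations of the user's intermediate kernel.
struct KernelState {
    std::uint64_t value = 0;
};

// Intermediate kernel: sees each written sector, may only update state.
using IntermediateKernel = std::function<void(const Sector &, KernelState &)>;
// Finalize kernel: runs once after all sectors have been written.
using FinalizeKernel = std::function<std::uint64_t(const KernelState &)>;

// Stand-in for the filesystem write kernel: "writes" each sector, records
// where it landed and passes the sector to the intermediate kernel by
// reference, so the data never has to be read back.
std::uint64_t run_write_event(const std::vector<Sector> &sectors,
                              std::uint64_t start_lba,
                              std::vector<std::uint64_t> &written_lbas,
                              const IntermediateKernel &intermediate,
                              const FinalizeKernel &finalize) {
    KernelState state;
    for (std::size_t i = 0; i < sectors.size(); ++i) {
        written_lbas.push_back(start_lba + i);  // simulated append
        intermediate(sectors[i], state);
    }
    return finalize(state);
}
```

For example, an intermediate kernel could accumulate a running sum over every sector while the finalize kernel returns that sum, all within a single submission.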

- Introduce nvme_zns ZONE_FULL error return code.

- Introduce a better state management callback function for nvme_zns_spdk.

- Make FluffleFS resilient to failing appends due to full zones (ONLY FOR LOG ZONE).

- Make FluffleFS ignore when written data does not exactly end up on the expected sector (ONLY FOR LOG ZONE).

- Kernels must return when they fail due to the LOG zone being full.

- Parallelism is managed through coarse grained locking.

- Almost all FUSE operations lock a rwlock in either read or write mode. Operations requiring exclusive locking, such as create / mkdir and fsync, take the writer's lock, while open / readdir / write / read and truncate take the reader's lock. Some FUSE operations, such as statfs, might be able to operate lockless.

- Any operations regarding a particular inode must first obtain a lock for this inode.

- Once it is determined a read or write operation will be performed on a snapshot the inode lock should be released.

- A potential optimization is to have read / write check for a snapshot before any other operation, only grabbing the lock if necessary.

- All individual datastructures are protected using reader writer locks with writer preference.

- Have the SPDK backend create a qpair for every unique thread id it encounters (std::this_thread::get_id()), strongly binding this new qpair to the id. Each I/O request from this thread id is only queued to this qpair.

- Having concurrent appends will cause some appends to fail due to the zone being full. The SPDK backend will raise an error in this case and functions in FluffleFS must be tuned to handle this, primarily log_append.
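Binding one qpair to each thread id could look roughly like the sketch below. It is an illustration only: QueuePair is a hypothetical stand-in for an SPDK queue pair handle and QpairRegistry is an assumed name, so none of this reflects the actual nvme_zns_spdk code.

```cpp
#include <cstddef>
#include <memory>
#include <mutex>
#include <thread>
#include <unordered_map>
#include <utility>

// Hypothetical stand-in for an SPDK I/O queue pair handle.
struct QueuePair {
    std::size_t id;
};

// Lazily creates one QueuePair per calling thread and always returns the
// same instance to that thread afterwards.
class QpairRegistry {
public:
    QueuePair &for_current_thread() {
        const std::thread::id tid = std::this_thread::get_id();
        std::lock_guard<std::mutex> guard(lock_);
        auto it = qpairs_.find(tid);
        if (it == qpairs_.end()) {
            auto qpair = std::make_unique<QueuePair>(QueuePair{qpairs_.size()});
            it = qpairs_.emplace(tid, std::move(qpair)).first;
        }
        return *it->second;
    }

    std::size_t size() {
        std::lock_guard<std::mutex> guard(lock_);
        return qpairs_.size();
    }

private:
    std::mutex lock_;  // protects the map itself, not the qpairs
    std::unordered_map<std::thread::id, std::unique_ptr<QueuePair>> qpairs_;
};
```

Only the lookup is serialized here; once a thread holds its qpair, no other thread submits I/O to it.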

Extended filesystem attributes support various namespaces with different behavior and responsibility. Since the underlying filesystem is still tasked with storing these attributes persistently regardless of namespace, the FUSE filesystem is effectively in full control on how to process these calls.

Given the already existing standard to use namespaces for permissions, roles and behavior, an additional namespace is an easy and clean extension. This introduces the process namespace: non-persistent extended file attributes that are only visible to the process that created them. Effectively, this is an in-memory map that lives inside the filesystem instead of in the calling process.

The state of these extended attributes is managed through the use of fuse_req_ctx, which can determine the caller's pid, gid and uid for all FUSE hooks except release / releasedir. To combat this limitation FluffleFS generates a unique filehandle for each open file. The pid is only used at the moment the first extended attribute is set.
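A minimal sketch of such an in-memory attribute map, keyed by the unique filehandle, is shown below. ProcessXattrStore and the attribute name used in the usage note are hypothetical and chosen purely for illustration; the real FluffleFS datastructures may differ.

```cpp
#include <cstdint>
#include <map>
#include <optional>
#include <string>
#include <utility>

// Non-persistent "process" attributes, keyed by the unique file handle the
// filesystem hands out on open. All names here are hypothetical.
class ProcessXattrStore {
public:
    void set(std::uint64_t fh, const std::string &name, std::string value) {
        attrs_[fh][name] = std::move(value);
    }

    std::optional<std::string> get(std::uint64_t fh,
                                   const std::string &name) const {
        auto handle = attrs_.find(fh);
        if (handle == attrs_.end()) return std::nullopt;
        auto attr = handle->second.find(name);
        if (attr == handle->second.end()) return std::nullopt;
        return attr->second;
    }

    // Called on release: the attributes disappear with the file handle.
    void release(std::uint64_t fh) { attrs_.erase(fh); }

private:
    std::map<std::uint64_t, std::map<std::string, std::string>> attrs_;
};
```

Setting an attribute such as "process.example" on one handle leaves every other handle unaffected, and releasing the handle discards the attribute.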

Circumventing return data can be achieved by using FUSE_CAP_EXPLICIT_INVAL_DATA and fuse_lowlevel_notify_inval_inode, but this requires a major overhaul because the call to fuse_lowlevel_notify_inval_inode must be performed manually throughout the entire code base.

The alternative is enabling FUSE_CAP_AUTO_INVAL_DATA (as is already the case) and ensuring the size returned by getattr is sufficient for the return data. In addition, the timeout parameters for struct stat need to be sufficiently low such that the request is invalid by the time the next one comes in (so 0). The problem with this is that getattr is called before the kernel is run, so the size of the return data is still unknown.

Several safety mechanisms are necessary during the execution of the user provided kernels. Static verification, using tools such as ebpf-verifier, is limited due to the requirement to populate datastructures from vm calls at runtime.

Safety mechanisms must be provided at runtime by the vm. However, these safety mechanisms cannot rely on realtime filesystem information as they are running on the CSD. Possible solutions fall into two categories:

- Device level

- A minimum and maximum range of acceptable LBAs to operate on can be provided alongside the submission of the user provided kernel. Should any request fall outside this range, the execution would be terminated and the error would be returned to the caller (in reality this would require NVMe completion status commands or similar).

- Host level

- The device keeps track of the operations and on which LBA these are performed. Essentially two vectors of read and written LBAs. Upon completion of the kernel this information would be made available to the filesystem. The filesystem then decides if the execution was malicious or genuine.
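Both categories can be combined in one small guard object, sketched below. The class and method names are assumptions made for illustration; a real device would signal a rejected request through an NVMe completion status rather than a boolean.

```cpp
#include <cstdint>
#include <vector>

// Device-level range check plus host-level audit trail for one kernel run.
// Names and layout are illustrative assumptions.
class LbaGuard {
public:
    LbaGuard(std::uint64_t min_lba, std::uint64_t max_lba)
        : min_lba_(min_lba), max_lba_(max_lba) {}

    // Return false when the access falls outside the permitted range, in
    // which case the kernel would be terminated.
    bool record_read(std::uint64_t lba) { return record(lba, reads_); }
    bool record_write(std::uint64_t lba) { return record(lba, writes_); }

    // Handed to the filesystem after the kernel completes.
    const std::vector<std::uint64_t> &reads() const { return reads_; }
    const std::vector<std::uint64_t> &writes() const { return writes_; }

private:
    bool record(std::uint64_t lba, std::vector<std::uint64_t> &log) {
        if (lba < min_lba_ || lba > max_lba_) return false;
        log.push_back(lba);
        return true;
    }

    std::uint64_t min_lba_;
    std::uint64_t max_lba_;
    std::vector<std::uint64_t> reads_;
    std::vector<std::uint64_t> writes_;
};
```

After the kernel finishes, the filesystem could compare reads() and writes() against the sectors it expected the kernel to touch.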

Limitations:

- Neither of these protections guards against infinite loops.

- While this does not protect against arbitrary code execution it does protect against overwriting any preexisting data due to lack of access to the NVMe reset command.

A combination of both host and device level protections seems appropriate. This will incur an additional runtime cost. Potentially, the filesystem could have a set of verified kernels it keeps internally that disable all these runtime checks.

References

- https://lkml.iu.edu/hypermail/linux/kernel/1207.1/02414.html

- https://lkml.org/lkml/2012/7/5/136

- max_read_pages_code

- max_read_pages_patches

ftrace

trace-cmd record -e syscalls -p function_graph -c -F ./fuse-entry -- -d -o max_read=2147483647 test

sudo trace-cmd record -e syscalls -p function_graph -l 'fuse_*'

sysfs

/proc/sys/fs/pipe-max-size