A TensorFlow implementation of CapsNet, based on Hinton's paper "Dynamic Routing Between Capsules"

Status:

- The code is runnable, but it still differs from the paper in some places. These are not logic errors but misunderstandings of the capsule on my part, including the routing "for" loop and the margin loss (issue #8; thanks for the reminder, it was my carelessness).

- Some results of the 'wrong' version have been posted, but they are not as good as the results in the paper.

Daily tasks

- Update the code of routing algorithm

- Adjust margin loss

- Improve the eval pipeline
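For reference while the margin loss is being adjusted, here is a minimal pure-Python sketch of the per-example margin loss as the paper defines it, with m+ = 0.9, m- = 0.1 and a down-weighting factor of 0.5 for absent classes. The function name `margin_loss` and its argument names are illustrative assumptions; this is not the code in this repository.

```python
M_PLUS = 0.9    # m+ in the paper: target length for the present class
M_MINUS = 0.1   # m- in the paper: tolerated length for absent classes
LAMBDA = 0.5    # down-weighting of the loss for absent classes


def margin_loss(capsule_lengths, target_index):
    """Margin loss for a single example.

    capsule_lengths: list of ||v_k||, one entry per class capsule.
    target_index: index of the true class (hypothetical argument name).
    """
    total = 0.0
    for k, length in enumerate(capsule_lengths):
        if k == target_index:
            # Present class: penalize lengths below m+.
            total += max(0.0, M_PLUS - length) ** 2
        else:
            # Absent class: penalize lengths above m-, scaled by lambda.
            total += LAMBDA * max(0.0, length - M_MINUS) ** 2
    return total
```

A confident, correct prediction such as `margin_loss([0.95, 0.05], 0)` contributes no loss, because both terms are clipped at zero.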

Others

- Here (on Zhihu, in Chinese) is my understanding of Section 4 of the paper (the core part of CapsNet); it might be helpful for understanding the code.

- If you find any problems, please let me know. I will try my best to 'kill' them as quickly as possible.

In the day of waiting, be patient: Merry days will come, believe. ---- Alexander Pushkin 😊

- We have a lot of interesting discussions in the WeChat group, and you are welcome to join us. But Gitter and English first, please. In any case, we will release the discussion results in the name of this group (crediting every contributor).

- If you find that the WeChat group QR code is invalid, add my personal account.

Requirements

- Python

- NumPy

- TensorFlow (I'm using 1.3.0; other versions should work, too)

- tqdm (for showing training progress info)

Step 1.

Clone this repository with git.

$ git clone https://github.com/naturomics/CapsNet-Tensorflow.git

$ cd CapsNet-Tensorflow

Step 2.

Download the MNIST dataset, then move and extract the files into the data/mnist directory. (Be careful: a backslash may appear around the curly braces when you copy the wget command into your terminal. If it does, remove it.)

$ mkdir -p data/mnist

$ wget -c -P data/mnist http://yann.lecun.com/exdb/mnist/{train-images-idx3-ubyte.gz,train-labels-idx1-ubyte.gz,t10k-images-idx3-ubyte.gz,t10k-labels-idx1-ubyte.gz}

$ gunzip data/mnist/*.gz
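If your shell does not support brace expansion, or the backslash issue gets in the way, the same four files can be fetched from Python. This is a minimal sketch using only the standard library. The helper names `mnist_urls`, `gunzip_file` and `download_mnist` are hypothetical and not part of this repository, and the sketch assumes the URL from the wget command is still reachable. Unlike `wget -c`, it does not resume partial downloads.

```python
import gzip
import os
import shutil
import urllib.request

BASE_URL = "http://yann.lecun.com/exdb/mnist/"
FILES = [
    "train-images-idx3-ubyte.gz",
    "train-labels-idx1-ubyte.gz",
    "t10k-images-idx3-ubyte.gz",
    "t10k-labels-idx1-ubyte.gz",
]


def mnist_urls():
    """Spell out the URLs that the shell brace expansion would produce."""
    return [BASE_URL + name for name in FILES]


def gunzip_file(gz_path):
    """Decompress gz_path next to itself and delete the archive, like gunzip."""
    out_path = gz_path[:-len(".gz")]
    with gzip.open(gz_path, "rb") as src, open(out_path, "wb") as dst:
        shutil.copyfileobj(src, dst)
    os.remove(gz_path)
    return out_path


def download_mnist(target_dir="data/mnist"):
    """Download and extract all four files into target_dir."""
    os.makedirs(target_dir, exist_ok=True)
    for url in mnist_urls():
        gz_path = os.path.join(target_dir, os.path.basename(url))
        urllib.request.urlretrieve(url, gz_path)
        gunzip_file(gz_path)
```

Calling `download_mnist()` from the repository root should leave the four extracted files in data/mnist, which is the layout the steps above produce.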

Step 3. Start training from the command line:

$ pip install tqdm # install it if you haven't already

$ python train.py

The tqdm package is not required; it is just a tool for showing training progress. If you don't want it, change the loop `for in step ...` to `for step in range(num_batch)` in train.py.
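Another option is to fall back to a plain `range` when tqdm is not installed, so the loop does not need editing by hand. The sketch below is an assumption about how such a fallback could look, not the code in train.py. `progress_steps` is a hypothetical helper, and only `num_batch` comes from the text above.

```python
try:
    from tqdm import tqdm  # optional: only used for the progress bar
except ImportError:
    tqdm = None


def progress_steps(num_batch):
    """Return an iterable over step indices, with a progress bar if tqdm exists."""
    steps = range(num_batch)
    if tqdm is None:
        return steps
    return tqdm(steps, total=num_batch)


for step in progress_steps(3):
    pass  # one training step would run here
```

Either way the loop body sees the same step indices; tqdm only wraps the iterable to print progress.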

$ python eval.py --is_training False

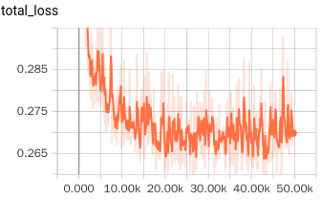

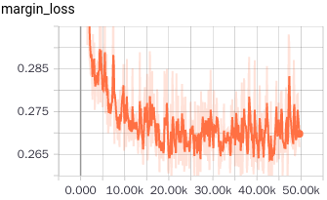

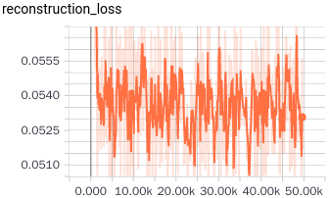

Results for the 'wrong' version (issue #8):

- test acc

|Epoch|49|51|
|:----:|:----:|:--:|
|test acc|94.69|94.71|

Results after fixing issue #8:

My brief comments on capsules

- A new kind of neural unit (vector in, vector out, rather than scalar in, scalar out)

- The routing algorithm is similar to an attention mechanism

- In any case, this is work with great potential, and there is a lot we can build on it
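To make the first two comments concrete, here is a minimal pure-Python sketch of the squash nonlinearity and routing-by-agreement as described in the paper. It is an illustration under stated assumptions, not this repository's TensorFlow code. The names `squash`, `softmax` and `route`, the nested-list layout `u_hat[i][j]`, and the default of 3 iterations are choices made for this example.

```python
import math


def squash(s):
    """Keep the direction of s, but map its length into [0, 1)."""
    sq_norm = sum(x * x for x in s)
    if sq_norm == 0.0:
        return [0.0 for _ in s]
    # v = (|s|^2 / (1 + |s|^2)) * (s / |s|)
    scale = sq_norm / (1.0 + sq_norm) / math.sqrt(sq_norm)
    return [scale * x for x in s]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(b - m) for b in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route(u_hat, num_iterations=3):
    """Routing by agreement.

    u_hat[i][j] is the prediction vector (a list of floats) from input
    capsule i for output capsule j. Returns the output vectors v_j.
    """
    num_in = len(u_hat)
    num_out = len(u_hat[0])
    dim = len(u_hat[0][0])
    b = [[0.0] * num_out for _ in range(num_in)]  # routing logits b_ij
    v = []
    for _ in range(num_iterations):
        # Coupling coefficients: softmax over output capsules, per input capsule.
        c = [softmax(row) for row in b]
        v = []
        for j in range(num_out):
            # Vector in: weighted sum of prediction vectors. Vector out: squash.
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(num_in))
                   for d in range(dim)]
            v.append(squash(s_j))
        # Agreement update: b_ij += u_hat_ij . v_j
        for i in range(num_in):
            for j in range(num_out):
                b[i][j] += sum(u_hat[i][j][d] * v[j][d] for d in range(dim))
    return v
```

The softmax over the routing logits is what gives the procedure its attention-like flavor: each input capsule spreads a total weight of 1 across the output capsules, and the weight shifts toward outputs whose vector agrees with that input's prediction.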

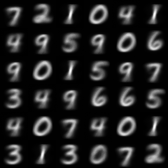

TODO

- Finish the MNIST version of CapsNet (progress: 90%)

- Run some different experiments with CapsNet:

  - Using other datasets

  - Adjusting the model structure

- There is another new paper about capsules (submitted to ICLR 2018); follow up on it.