[Paper]

[arXiv]

[Poster]

[Slide]

[Project Page]

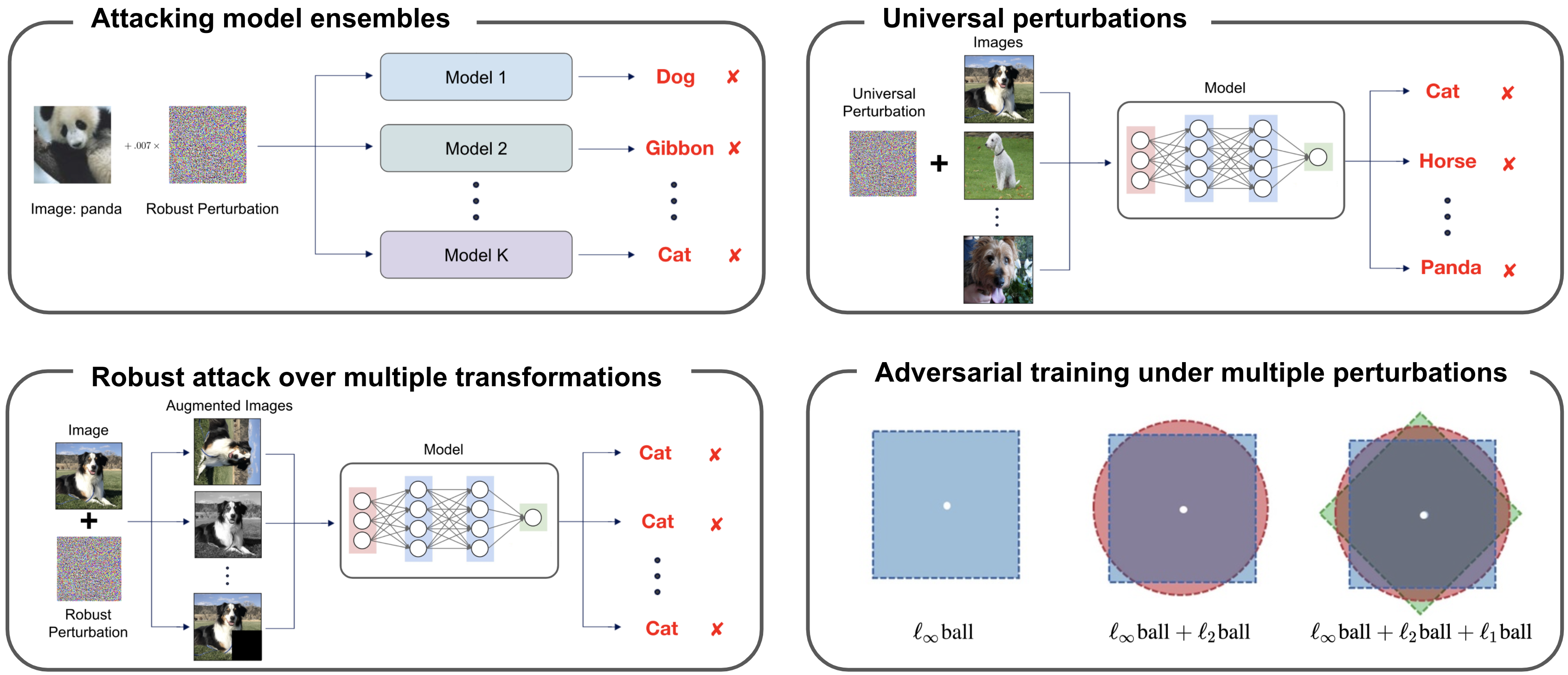

Adversarial Attack Generation Empowered by Min-Max Optimization

Jingkang Wang, Tianyun Zhang, Sijia Liu, Pin-Yu Chen, Jiacen Xu, Makan Fardad, Bo Li

NeurIPS 2021

Please check the neurips21 folder to reproduce the robust adversarial attack results presented in the paper. Detailed instructions are in neurips21/README.md, and bash scripts are in neurips21/scripts. The code is based on TensorFlow 1.x (tested with versions 1.10.0 through 1.15.0), which is somewhat outdated; we currently have no plans to upgrade it to TensorFlow 2.x. If you do not need to reproduce the exact numbers and only want to use min-max attacks in your own projects, we provide a PyTorch implementation with recent pre-trained models (e.g., EfficientNet, ViT) and ImageNet-1k support. Please see the following section for more details.

TBD (stay tuned!)

If you find our code or paper useful, please consider citing:

@inproceedings{wang2021adversarial,
  title={Adversarial Attack Generation Empowered by Min-Max Optimization},
  author={Wang, Jingkang and Zhang, Tianyun and Liu, Sijia and Chen, Pin-Yu and Xu, Jiacen and Fardad, Makan and Li, Bo},
  booktitle={Advances in Neural Information Processing Systems (NeurIPS)},
  year={2021}
}

Please submit a GitHub issue or contact wangjk@cs.toronto.edu if you have any questions or find any bugs.