This repo shows how you can utilise CICD to speed up data development.

Key to this is isolating every change (each feature/branch) against production data. Rebuilding your entire warehouse for each feature/branch would be supper expensive. However, if know your data lineage, you can build all the models you have changed and point them to production dependencies. This drastically reduces cost and time to test your changes.

- 🚀 Speed up deployment

- 🚀 Speed up incident response - lineage

- 💥 Prevent breaking changes - regression, data profiling

- Optimize the infrastructure - reduce CICD build times

- Find the right data asset for the problem - data catalogue

- Reduce barriers to entry for changing data models. By providing guardrails such as testing, linting, and code review I can provide safety nets for devlopers/analysts to contribute code changes.

- Lower barrier for new users to start contributing.

- People understand each other’s code better and make fewer mistakes..

Data build tool DBT comes with some awesome features for CICD:

- Automated tests are configured or written in the same location as your data models.

- DBT generated documentation for this project are hosted on S3. This includes data lineage.

- Using DBT's 'slim' CI best practice we can identify only the models that have changed.

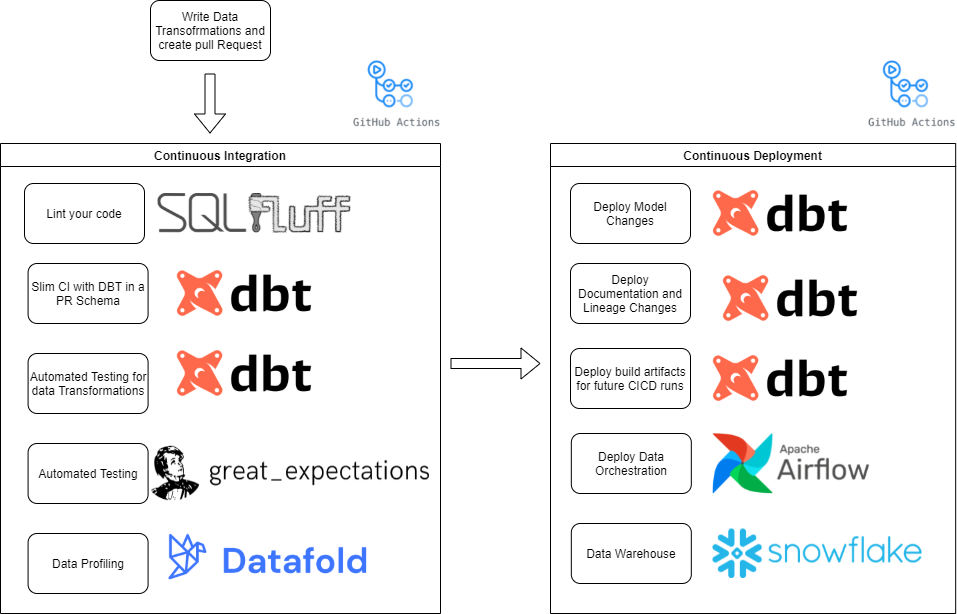

Github actions have been set up to perform CICD:

- Continious Deployment - Merging Pull Requests into main branch kicks off deploying into production.

- Creating a pull requests kicks off Continuous integration by deploying into a test schema, running testing, linting and data profiling .

- checkout code

- deploy dbt models

- save state (artefact at the end of each run)

- generate dbt docs

- deploy dbt docs on aws s3

Here we want to give the developer feedback on:

- sql conforms to coding standards via linting

- if models have regressed via DBT's automated testing.

- build out an isolated dev environment. This can be used to show end users and perform UAT.

- profile data between the pull request and production to highlight changes to data.

- checkout code

- linting code via sql fluff

- fetch manifest.json at start of each run from the prior run

- use DBT's slim CI to review what models state has changed

- dbt seed, run, test only models whos state was modified

- save state (artefact at the end of each run)

- run datafold to profile data changes

when running diff-quality on github pull requests with master watch out for the checkout action that will checkout the the megre of your latest commit with the base branch. See example of the issue here.

In order to standardise sql styles across developer you can use a linter like sqlfluff to set coding standards.

example usage:

sqlfluff fix test.sql🚧 for PRs only new / modified code is reviewed. Test this by doing:

diff-quality --violations sqlfluff --compare-branch origin/main TODO

I have set up two CI/CD jobs on a daily schedule to run all the automated tests, and check for source system freshness:

Another option is to use great expectations

- With great expectations as its decoupled from the transform layer (DBT) we can use great expectations during the ingest layer e.g. with spark.

With version control we are able to review code changes. However with data we also need to do regression testing to understand how our changes impact the state of the warehouse.

Datafold will automatically generate data profiling between your commit and a target e.g. production.

Checkout some of my pull/merge requests which contain a summary of data regression and links to more detailed profiling.

key features of Datafold data diffing

- table schema diff (which columns have changed)

- primary key not null or duplicate tests

- column data profiling (e.g. dev branch vs prod)

- data diff at a primary key level

- shows how datasets change over time

Other Datafold capabilities:

- data catalogue

- metric monitoring & alerting

- Use sampling when data profiling large data sets.

Datafold's CLI when using dbt, will grab the dbt_project/target/manifest.json and run_results.json and send them along with your commit.

With Github's pull_request event some extra steps are required: Github really runs Actions on merge commit. They merge PR branch into the base branch and run on top of that. Thus your SHA changes.

Thank you Alex Morozov (Datafold) for showing me the original PR_HEAD_SHA can be extracted via:

PR_HEAD_SHA=$(cat $GITHUB_EVENT_PATH | jq -r .pull_request.head.sha)

See airflow-dbt