- 🚀 Current version: 1.3

- ⌨️ Authors : Balthazar Neveu , Alain Neveu

- 🕹️ Testers: Pépin Hugonnot : Florine Pépin

- preprocess

  - synchronize the RGB DJI camera with the NIR camera using a "QR code"

  - best synchronized pairs: find the image pairs with the lowest delay

- process

  - convert RAW files to linear RGB files

  - align NIR images with the visible RGB images

- postprocess for cartography

  - prepare tiles to be stitched in OpenDroneMap

  - and later visualize under QGIS with overlay on top of Google maps / IGN maps for instance.

- First of all

git clone https://github.com/wisescootering/infrareddrone.git

cd infrareddrone

- Once you have docker running,

- Build the docker image

docker build -t irdrone -f .\Dockerfile .

- Download our sample folder FLY_TEST

- Run the docker image.

docker run -v C:\Users\xxxx\Documents\FLY_test:/home/samples/FLY_test -it irdrone

python3 synchronization/synchro_by_aruco.py --folder /home/samples/FLY_test/Synchro/ --vis "*.DNG" --nir "*.RAW"

python3 run.py --config /home/samples/FLY_test/config.json --clean-proxy --odm-multispectral --selection best-mapping

Note:

- press CTRL+D to escape

- There are no visual checks through graphs

For practical reasons, only windows is supported as of now... For anything else please use the docker version!

- install Anaconda with all default options. Anaconda for windows, python 3.9

- install.bat will set up the right python environment for you.

- you have to install the RawTherapee software at the default Windows location.

- Download our sample folder FLY_TEST

- To use ODM (OpenDroneMap), install docker and make sure you have it running correctly

- The drone's visible camera (DJI Mavic Air 2) is used to shoot RAW images

- An action camera with a wide FOV (SJCAM M20) is attached under the drone

  - its IR cut filter has been removed

  - a dedicated IR band filter has been installed (which cuts visible light and only transmits IR - use 750nm or 850nm filters).

- 🏁 QR code (Aruco) chart can be downloaded here and has to be printed to A4 or A3 paper for calibration matters.

- Fly

- Process images

- Trigger the 3s interval timelapse on the SJCam action cam

- Start flying your drone.

- Start the 2s interval timelapse on the DJI app

- ⏳ Before flying a temporal synchronization procedure has to be performed to sync the 2 cameras clocks.

- It consists in making the drone stay in a stationary position while a QR code spins below. See the full sync procedure doc.

Note: spinning the drone above the QR code (Aruco) chart is also possible, it does not require a turntable but it's less recommended

- 📷 Then the mandatory way to capture your data is to fly straight away ⚠️ without stopping the timelapse ⚠️

- Keep both cameras shooting in timelapse mode.

- Try to cover your area in a grid fashion (fly straight forward, pan to the right or left, fly straight backward etc...)

- ✔️ Synchronization phase is key

- ⛔ Do not stop the timelapse ⛔ after the synchro phase!

- ✔️ keep on flying with the same DJI timelapse on until the end of the flight

- 0/ ⭐⭐⭐ Download our sample folder 📁 FLY_TEST

- 1/ 💾 Once finished, offload your SD cards from IR & Visible cameras into folders following the architecture below (refer to the sample example FLY_TEST)

- 📁 `FLY_DATE`

  - 📁 `AerialPhotography` folder where you put all pictures from the flight phase

  - 📁 `Synchro` folder where you put all pictures from the synchronization phase

  - 📝 `config.json`

- 2/ ❓ Configuration file can be changed (modify json or excel). Folder names can be changed, for instance. If you're interested, have a look at the "Configuration" section.

- 3/ ⏰ Double click on

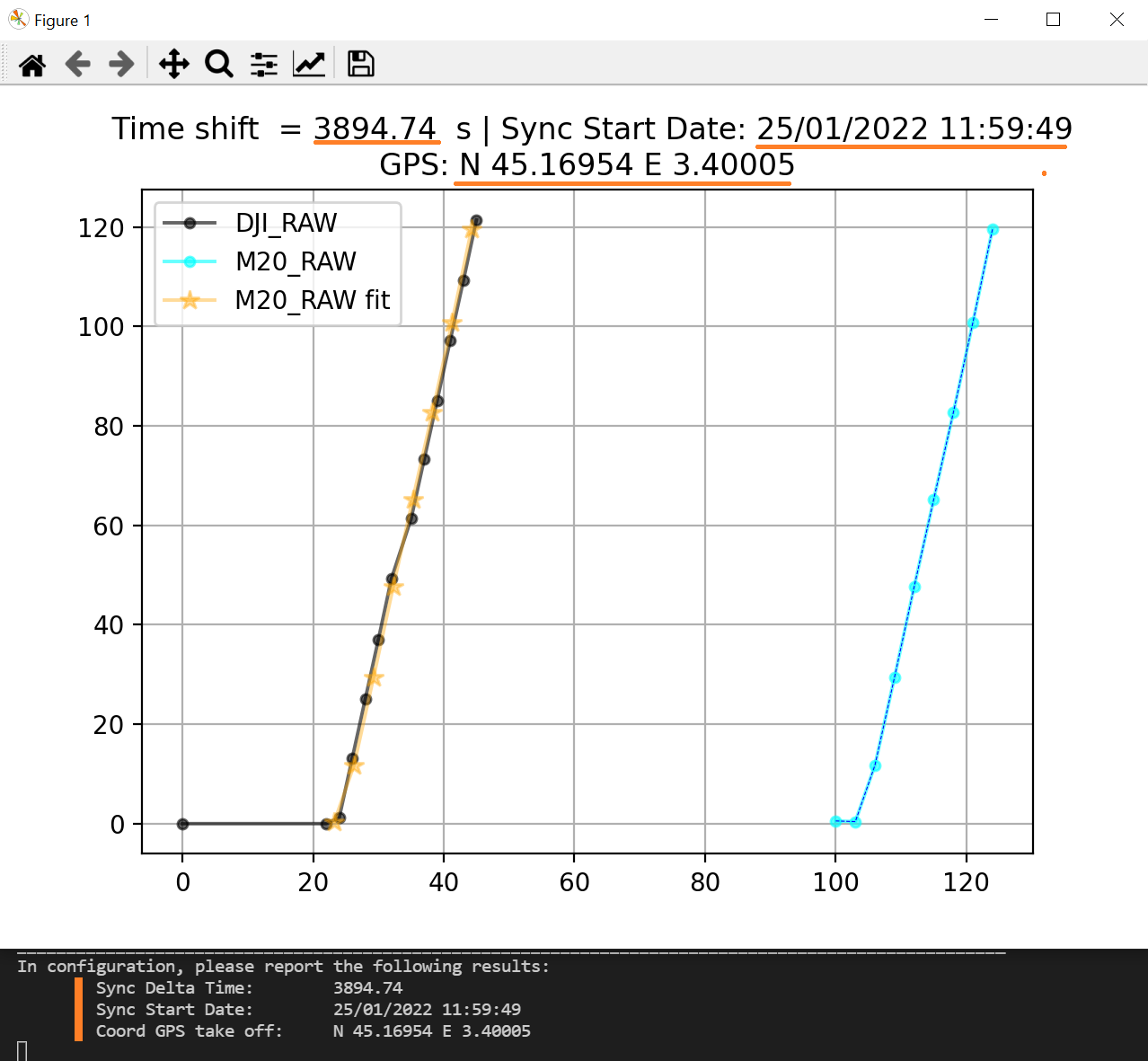

bat_helpers/synchro.batand select the 📁Synchrofolder.- This will open a graph, please make sure that the black and orange curves overlap correctly. Then you can close the graph.

- This will generate a

syncho.npybinary file in theSynchrofolder ... which you will re-use as a configuration parameter. Don't worry, if you re-use the sample example configuration and folder naming, you won't have to change anything.

- 4/ ⏯️ Now you're ready to process your images. Double click on

bat_helpers/run.batand select the configurationconfig.jsonfile. Note .json or .xlsx will do the same. - 5/ 📈 Check the altitude profile displayed (internet connection is needed here).

- 6/ 💤 Confirm you want to run processing on your images. Then be very patient, your files will be processed and stored in the 📁

ImgIRdronefolder.

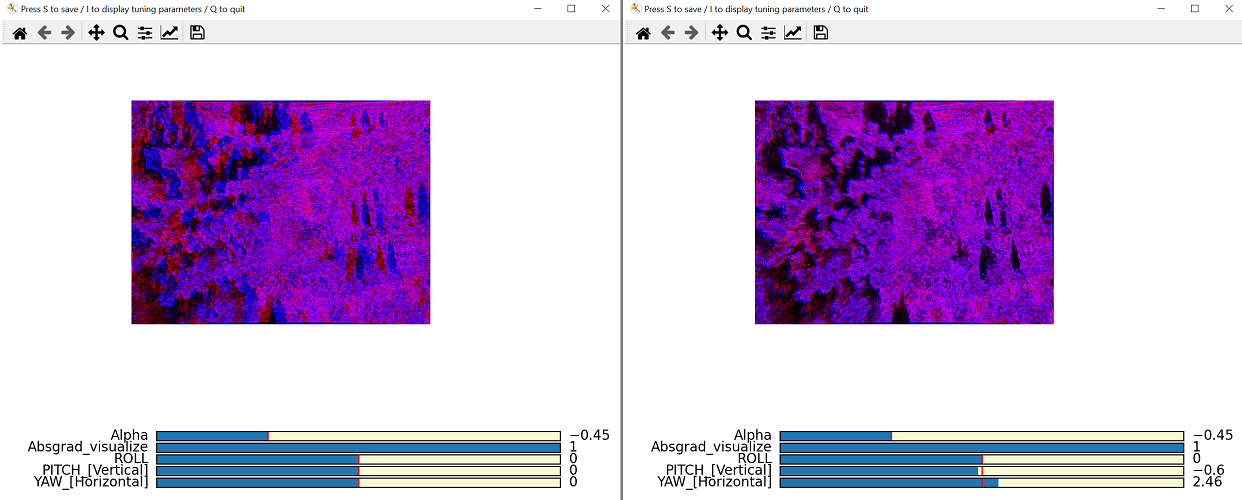

❌ Keep in mind that things are not perfect and there can sometimes be failures. If there's a picture which is very important, you are encouraged to re-run the processing with a manual option to assist the alignment (next to the processed image, you can find a .bat dedicated to reprocessing your image with manual assistance : example HYPERLAPSE_0008_REDO.bat).

- On the left, you can see that the red image has to be shifted to the left side to match the blue image.

- Use the yaw slider to align blue and green, see what happens on the right side? the overlap is correct!

- Then press `Q` to quit the GUI: the automatic processing will continue. You have helped the initialization manually that way.

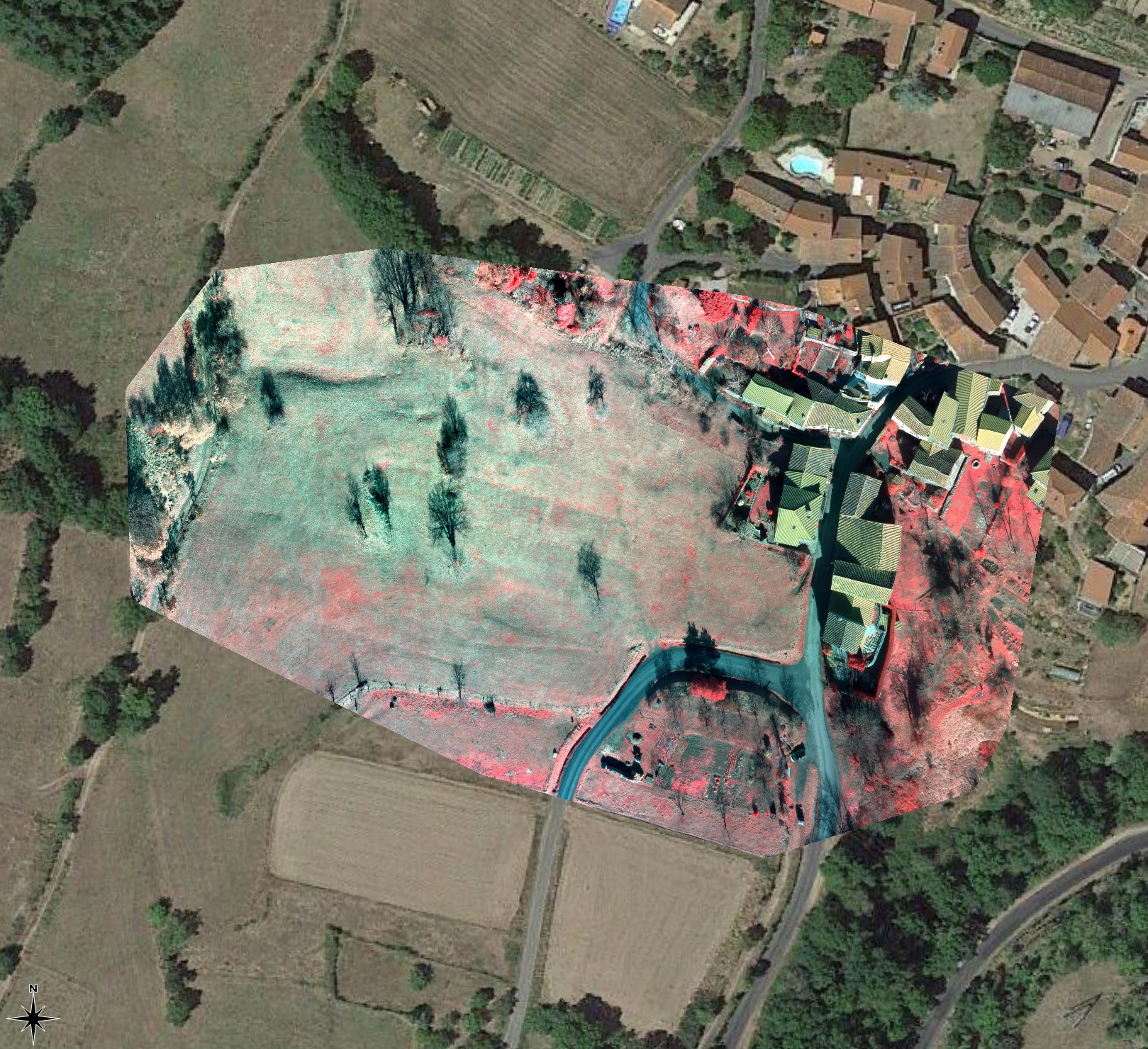

Original metadata from the DJI drone are copied into the output file, therefore these images are ready to be re-used in third-party software. We'll prepare tiles to be stitched in OpenDroneMap and later allow you to visualize under QGIS with access to maps.

You'll need to have 🐋 Docker running to be able to stitch maps using OpenDroneMaps.

- Next to the `ImgIRdrone` folder, you can find a folder named `mapping_MULTI`.

- Inside this folder, we have prepared everything you need to run the ODM stitching:

  - images with correct overlap and correct exif

  - camera calibration

  - `odm_mapping.bat` allows you to run the right docker with the right options.

- Once the process has finished, you'll find a png with the stitched maps.

mapping_XXX/odm_orthophoto/odm_orthophoto.png

Note: In case you didn't use --odm-multispectral option in run.py you'll get a folder for each spectral modality (visible, nir, ndvi, vir)

Once stitched with ODM, you can visualize the map in QGIS and start annotating your map.

Please refer to the following tutorial (in french) on how to create vegetation visualization under QGIS.

For instance, creating a map is possible in Pix4DFields in case you own a license (I used the trial version, which is available for 15 days)

- When launching Pix4DFields, the DJI camera won't be supported by default. You need to import a custom camera file.

- Luckily, we created one for the DJI Mavic Air 2, see Pix4DFields_custom_camera

⚙️ run.py command line interface

run.py [--config CONFIG] [--clean-proxy] [--odm-multispectral] [--traces {vis,nir,vir,ndvi}] [--selection {all,best-synchro,best-mapping}]

- `--config` path to the flight configuration

- `--clean-proxy` clean proxy tif files to save storage

- `--disable-altitude-api` force not using altitude from IGN API

- `--odm-multispectral` ODM multispectral .tif exports

- `--traces {vis,nir,vir,ndvi}` export specific spectral traces. When not provided: VIR by default if `--odm-multispectral`, otherwise all traces are saved

- `--selection {all,best-synchro,best-mapping}`

  - `all`: take all images, do not make a specific image selection

  - `best-synchro`: only pick pairs of images with gap < 1/4th of the TimeLapseDJI interval ~ 0.5 seconds

  - `best-mapping`: select best synchronized images + granting a decent overlap
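To make the `best-synchro` rule more concrete, here is a minimal sketch of how pairs could be filtered by their time gap. It is not the code used by `run.py`: the function name `best_synchro_pairs`, its inputs (lists of capture times in seconds) and the sign convention of `delay` are assumptions made for illustration.

```python
from bisect import bisect_left


def best_synchro_pairs(vis_times, nir_times, delay, vis_interval=2.0):
    """Pair each visible timestamp with its closest NIR timestamp.

    vis_times, nir_times: sorted capture times in seconds (hypothetical inputs).
    delay: clock offset added to the NIR times to express them on the visible clock
           (sign convention is an assumption).
    Only pairs whose gap is below a quarter of the visible interval are kept.
    """
    max_gap = vis_interval / 4.0  # 0.5 s for a 2 s DJI timelapse
    shifted = [t + delay for t in nir_times]
    pairs = []
    for i, t_vis in enumerate(vis_times):
        j = bisect_left(shifted, t_vis)
        # The closest NIR frame is either just before or just after t_vis
        candidates = [k for k in (j - 1, j) if 0 <= k < len(shifted)]
        if not candidates:
            continue
        best = min(candidates, key=lambda k: abs(shifted[k] - t_vis))
        gap = abs(shifted[best] - t_vis)
        if gap < max_gap:
            pairs.append((i, best, gap))
    return pairs


vis = [0.0, 2.0, 4.0, 6.0]   # 2 s visible timelapse
nir = [0.1, 3.1, 6.1, 9.1]   # 3 s NIR timelapse
pairs = best_synchro_pairs(vis, nir, delay=-0.2)
print([(i, j) for i, j, _ in pairs])
```

With a 2 s and a 3 s timelapse, only some of the visible frames have a NIR neighbour within 0.5 s, which is why this selection reduces the number of processed pairs.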

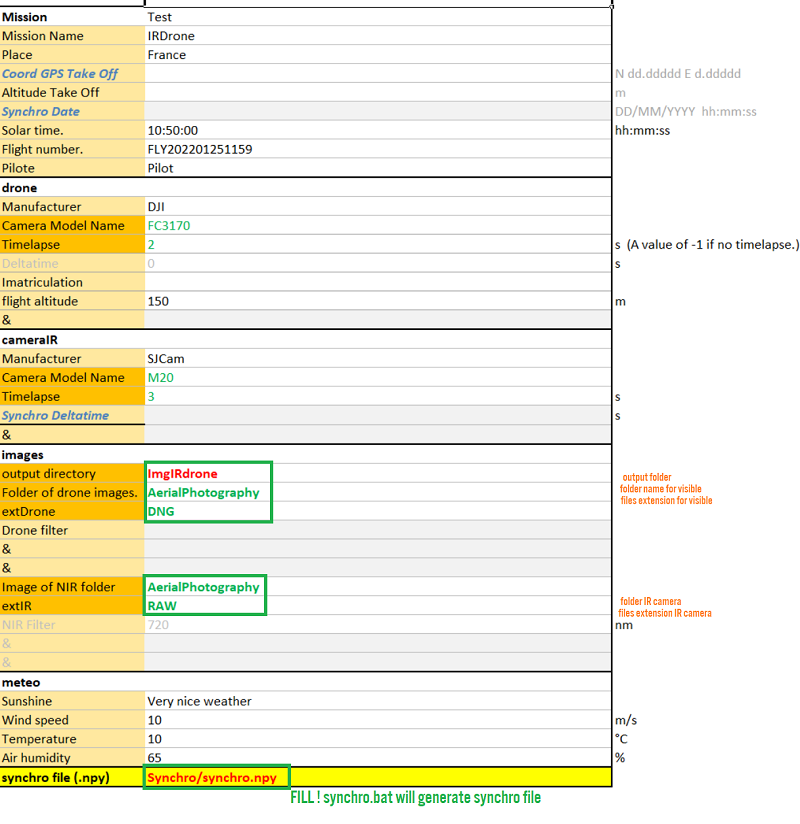

❓ At step 2/ of the tutorial, you have probably copy pasted the config.json from the sample folder. Here are some details on how configuration works.

⚙️ Create configuration file from the template (.xlsx for beginners or .json for advanced users)

If you want to change the configuration, you have 2 options

- based on excel: in the provided template Templates/config.xlsx, fill mandatory information in green and red.

- another option is to create a json configuration from the following template Templates/config.json.

{

"synchro":"Synchro/synchro.npy",

"output": "ImgIRdrone",

"visible": "AerialPhotography/*.DNG",

"nir": "AerialPhotography/*.RAW",

"visible_timelapse": 2.0,

"nir_timelapse": 3.0

}

- The `synchro` field links to the synchronization file obtained when running `bat_helpers/synchro.bat` previously.

- You can change the `output` folder here.

- Glob patterns are suggested in the `visible` or `nir` keys to search the images. Please note that this way you can use 2 different folders for NIR and visible images.

- OPTIONAL:

  - If you roughly know the angles between the drone & the NIR camera, you can mention the `offset_angles` key `[Yaw, Pitch, Roll]`.

  - Usually at the end of your processing, you'll see if the estimated angles differ from the gimbal estimate (retrieved from EXIF).

  - Example: "offset_angles": [0.86, 1.43, 0.0]
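As an illustration of how such a configuration can be consumed, the sketch below reads a `config.json` and expands the `visible` and `nir` glob patterns. It is not the loader shipped with the project: the function name `load_flight_config` is hypothetical, and resolving paths relative to the folder containing `config.json` is an assumption.

```python
import glob
import json
from pathlib import Path


def load_flight_config(config_path):
    """Read config.json and expand its glob patterns.

    Assumption: relative paths are resolved against the folder holding config.json.
    """
    config_path = Path(config_path)
    root = config_path.parent
    with open(config_path, "r", encoding="utf-8") as f:
        cfg = json.load(f)
    return {
        "synchro": root / cfg["synchro"],
        "output": root / cfg["output"],
        # One sorted list of files per camera, the two patterns may target different folders
        "visible": sorted(glob.glob(str(root / cfg["visible"]))),
        "nir": sorted(glob.glob(str(root / cfg["nir"]))),
        "visible_timelapse": float(cfg["visible_timelapse"]),
        "nir_timelapse": float(cfg["nir_timelapse"]),
        # Optional key: None when the configuration does not provide it
        "offset_angles": cfg.get("offset_angles"),
    }
```

Calling `load_flight_config("FLY_test/config.json")` on the sample folder would return the list of `.DNG` and `.RAW` files found under `AerialPhotography`, plus the two timelapse intervals as floats.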

🚫 EXCEL CONFIG IS NOT SUGGESTED / DEPRECATED 🚫

- You can simply put the relative path to `synchro.npy` and you should be good to go

- Double click on `bat_helpers/synchro.bat`. (Advanced users can use CLI obviously in case of other images format)

- Unless you want to do manual manipulations, do not paste the delay result to your configuration excel in `cameraIR/deltatime`. You can simply close the window, the program saved a synchro.npy file in the Synchro folder!

- Mastering the config.json:

- optional: You can use the `input` key (or `rootdir`) to provide a direct link to a specific folder (that would allow you to store all configurations at a unique place... including keeping your projects configurations under git revision).

- optional fields in config.json, these fields exist to override synchronization parameters coming from synchro.npy

  - `synchro_deltatime`

  - `synchro_date`

  - `coord_GPS_take_off`

- In the excel template, there are some fields marked in blue where you can also override the values from synchro.npy. For instance, you have to copy/paste into the excel:

  - `3894.74` = delta time

  - `25/01/2022 11:59:49` = date

  - `N 45.16954 E 3.40005` = Coord GPS Take Off
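For readers who want to check such override values before pasting them, here is a small sketch that parses the three example strings above. The helper names `parse_date` and `parse_gps` are hypothetical, and the formats are inferred only from the examples shown (day/month/year date, `N`/`S` and `E`/`W` prefixes with decimal degrees); the project may accept other formats.

```python
import re
from datetime import datetime


def parse_date(text):
    """Parse a date written like '25/01/2022 11:59:49' (day/month/year assumed)."""
    return datetime.strptime(text, "%d/%m/%Y %H:%M:%S")


def parse_gps(text):
    """Parse 'N 45.16954 E 3.40005' into signed (latitude, longitude) degrees."""
    m = re.fullmatch(r"\s*([NS])\s*([\d.]+)\s+([EW])\s*([\d.]+)\s*", text)
    if m is None:
        raise ValueError(f"unexpected GPS format: {text!r}")
    lat = float(m.group(2)) * (1 if m.group(1) == "N" else -1)
    lon = float(m.group(4)) * (1 if m.group(3) == "E" else -1)
    return lat, lon


delta_time = float("3894.74")                      # seconds
date = parse_date("25/01/2022 11:59:49")
lat, lon = parse_gps("N 45.16954 E 3.40005")
```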

- Aruco (=QR code) chart can be downloaded here and has to be printed to A4 or A3 paper

- Several procedures were tested:

  - Spinning the chart under the static drone !RECOMMENDED!

  - Rotating the drone above the static chart: more complicated to manipulate, not recommended. A description is available here.

- Sample synchro data can be downloaded from here

- Copy the selected synchronization images into a "Synchro" folder -> visible & NIR images (expected .RAW and .DNG files by default).

- Double click on `bat_helpers/synchro.bat`. (Advanced users can use CLI obviously in case of other images format)

- Data can be processed by double clicking on `bat_helpers/run.bat`. This will use run.py to select a given config.json or excel file.

- Advanced users can also use CLI (command line interface) to process the batch of images. Use the `--config` option to point to the flight configuration.

- CLI users can register pairs of images with automatic_registration.py

- SJCam M20 .RAW files are first converted to DNG using the following program sjcam_raw2dng

- SJCAM .DNG & DJI .DNG files are processed using Raw Therapee to linear demosaicked RGB 16bits TIF files.

- Lens Shading is automatically corrected while loading the TIF files.

- We first undistort the DJI visible image and undistort the infrared fisheye. In case of wind, the misalignment between both cameras can be up to 20°. Roll of the drone leads to camera yaw, pitch of the drone leads to camera pitch. Camera roll misalignment is usually zero because of the gimbal lock when the camera points towards the ground. Fortunately, the fisheye has a much wider field of view than the DJI visible camera, therefore we're able to align the NIR image onto the visible image.

- Alignment is first performed by a "coarse alignment" to coarsely compensate camera misalignment in yaw (well approximated by a horizontal translation) and in pitch (well approximated by a vertical translation).

- We first undistort the SJcam NIR fisheye and match its magnification factor with the DJI camera. The virtual NIR undistorted camera has the same focal length as the DJI camera but a much wider field of view. If there's no misalignment, the center window of the SJcam image shall overlap the visible image.

- The first step of the coarse search is performed at very low resolution with a brute force approach (searching for the X Y translation which minimizes a certain cost function between the visible image and a moving window in the NIR image).

- The translation approximates the underlying yaw/pitch camera rotation which we can simply recover. It allows to warp the NIR image onto a finer scale.

- Alignment refinement is then performed on the coarsely aligned NIR image.

- A pyramidal approach is chosen.

- At each level of the pyramid, we estimate a vector field of displacements (split the images into 5x5 patches for instance and search for the best displacement)

- This local vector field is sort of regularized by a global motion model fit (homography) which allows to refine the yaw, pitch & roll angles.

- When going to a finer scale, we warp the image according to the global homography estimated at the previous level. This allows to reduce the search horizon.

- Once the alignment model has been found, the NIR image is warped (only once) on the Visible image.
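The coarse search described above can be sketched as follows. This is a simplified illustration, not the project's implementation: the function names, the `focal_px` parameter and the sign conventions of the angles are assumptions, and a plain mean absolute difference (`sad`) stands in for the actual cost function so that the example stays short.

```python
import numpy as np


def coarse_translation_search(vis, nir, cost):
    """Slide a vis-sized window over the larger NIR image and keep the best offset.

    vis: (h, w) low-resolution visible image.
    nir: (H, W) low-resolution undistorted NIR image, H >= h and W >= w.
    cost: callable(window, vis) -> float, lower is better.
    Returns (dx, dy), the shift of the best window relative to the centred window.
    """
    h, w = vis.shape
    H, W = nir.shape
    cy, cx = (H - h) // 2, (W - w) // 2  # top-left corner of the centred window
    best, best_cost = (0, 0), np.inf
    for y in range(H - h + 1):
        for x in range(W - w + 1):
            c = cost(nir[y:y + h, x:x + w], vis)
            if c < best_cost:
                best_cost, best = c, (x - cx, y - cy)
    return best


def translation_to_angles(dx, dy, focal_px):
    """Convert a pixel shift into approximate yaw/pitch in degrees (pinhole model)."""
    yaw = np.degrees(np.arctan2(dx, focal_px))
    pitch = np.degrees(np.arctan2(dy, focal_px))
    return yaw, pitch


def sad(a, b):
    """Stand-in cost: mean absolute difference between two images."""
    return float(np.abs(a - b).mean())


rng = np.random.default_rng(0)
nir = rng.random((40, 60))
vis = nir[12:32, 25:55].copy()  # the centred window would start at row 10, column 15
shift = coarse_translation_search(vis, nir, sad)
yaw, pitch = translation_to_angles(*shift, focal_px=100.0)
```

In this synthetic case the visible image is cut out of the NIR image 10 pixels to the right and 2 pixels below the centred window, so the search returns that shift. On real multispectral pairs a raw intensity difference is not expected to work, which is why a gradient-based cost is used in practice.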

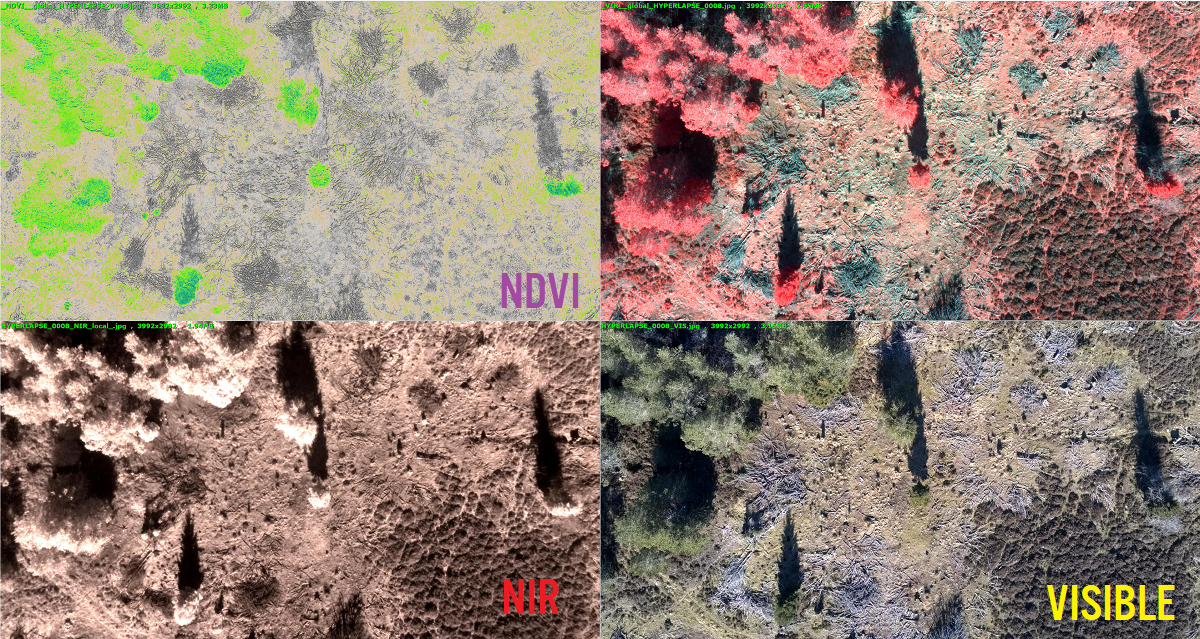
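For the refinement stage, the global motion model fit can be illustrated by estimating a homography from a 5x5 grid of patch displacements. The sketch below uses the standard DLT (direct linear transform) least-squares fit with NumPy. It is an assumption about how such a fit can be done, not the project's code, and a production version would typically normalize coordinates and reject outlier patches first.

```python
import numpy as np


def fit_homography(src, dst):
    """Least-squares homography mapping src -> dst (DLT algorithm).

    src, dst: (N, 2) arrays of matching points, N >= 4.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def apply_homography(H, pts):
    """Apply a 3x3 homography to (N, 2) points."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


# Centres of a 5x5 grid of patches in a 640x480 image
xs, ys = np.meshgrid(np.linspace(50, 590, 5), np.linspace(50, 430, 5))
centres = np.stack([xs.ravel(), ys.ravel()], axis=1)

# Synthetic local displacements generated by a known homography
H_true = np.array([[1.01, 0.02, 3.0],
                   [-0.015, 0.99, -2.0],
                   [1e-5, -2e-5, 1.0]])
displacements = apply_homography(H_true, centres) - centres

H_est = fit_homography(centres, centres + displacements)
```

Each patch contributes one displacement vector, and the 25 vectors are summarized by 8 homography parameters, which is what makes the local estimates more robust.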

- Several color representation of the merge of NIR & Visible images can be computed such as NDVI or VIR.

- Important note : color calibration shall be tackled at some point, it may not be too difficult though.
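As an example of such representations, NDVI follows the usual definition (NIR - Red) / (NIR + Red). The sketch below also builds a false-colour composite for VIR. The channel mapping used for `vir` (NIR to red, red to green, green to blue) is the classic colour-infrared convention and is an assumption here, as are the function names. No colour calibration is applied.

```python
import numpy as np


def ndvi(red, nir, eps=1e-6):
    """Normalized Difference Vegetation Index, in [-1, 1] for non-negative inputs."""
    red = np.asarray(red, dtype=np.float64)
    nir = np.asarray(nir, dtype=np.float64)
    return (nir - red) / (nir + red + eps)  # eps avoids a division by zero


def vir(rgb, nir):
    """False-colour composite (assumed mapping): NIR -> red, red -> green, green -> blue."""
    rgb = np.asarray(rgb, dtype=np.float64)
    nir = np.asarray(nir, dtype=np.float64)
    return np.stack([nir, rgb[..., 0], rgb[..., 1]], axis=-1)


# Tiny aligned example: a 2x2 linear RGB image and its warped NIR image
rgb = np.zeros((2, 2, 3))
rgb[..., 0] = 0.2   # red
rgb[..., 1] = 0.5   # green
nir = np.full((2, 2), 0.6)

ndvi_map = ndvi(rgb[..., 0], nir)
vir_img = vir(rgb, nir)
```

Both functions assume the NIR image has already been warped onto the visible image, so that pixels correspond one to one.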

While reviewing the data, there may still be some issues in alignment. Unfortunately multispectral alignment is an ill-posed problem and sometimes the automatic system fails. There's still a way to save your images.

- There's a .bat file next to the results which allows you to re-run manually the images which failed.

- It will open a GUI where you can simply pick the yaw, pitch & roll to replace the coarse alignment & make the images overlap. This will guide the automatic system to a manual initial guess which will eventually lead to an accurate automatic alignment.

- If synchronization does not seem correct (or there was big wind), you can try your luck with another image by uncommenting the right line in the .bat .

- Putting these data in Pix4DFields is possible

- Putting a timelapse into a panorama stitching software like Hugin allows to stitch these images.

- Putting a timelapse into VisualSFM allows to create a 3D map out of these images just like you'd do with your drone images

- Normalized Total Gradient: A New Measure for Multispectral Image Registration, Shu-Jie Chen and Hui-Liang Shen Paper

- Robust multi-sensor image alignment, Michal Irani and P Anandan at ICCV'98 Paper

- Burst photography for high dynamic range and low-light imaging on mobile cameras, Samuel W. Hasinoff, Dillon Sharlet, Ryan Geiss, Andrew Adams, Jonathan T. Barron, Florian Kainz, Jiawen Chen, and Marc Levoy, Supplemental Material

- Registration of visible and near infrared unmanned aerial vehicle images based on Fourier-Mellin transform Gilles Rabatel, S. Labbe Paper

- Two-step multi-spectral registration via key-point detector and gradient similarity. Application to agronomic scenes for proxy-sensing Jehan-Antoine Vayssade, Gawain Jones, Jean-Noël Paoli, Christelle Gée paper

- Please note that feature-based methods did not work correctly, generally speaking. Using phase correlation/Fourier (including Fourier-Mellin) didn't bring fully satisfying results either for the coarse approach.

- The Normalized Total Gradient was retained as a cost function. Motion regularization is not implemented here and some form of regularization shall be implemented for the local estimation in the near future.
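For reference, the Normalized Total Gradient between two images can be written as the L1 norm of the gradient of their difference, divided by the sum of the L1 norms of their individual gradients. The sketch below is a minimal NumPy version using simple finite differences. It follows the definition from the paper cited above as I understand it and is not taken from this repository, and the function names are hypothetical.

```python
import numpy as np


def total_gradient(img):
    """L1 norm of the horizontal and vertical finite differences."""
    return np.abs(np.diff(img, axis=1)).sum() + np.abs(np.diff(img, axis=0)).sum()


def normalized_total_gradient(a, b, eps=1e-12):
    """NTG cost between two images of the same shape, lower means better aligned."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    return total_gradient(a - b) / (total_gradient(a) + total_gradient(b) + eps)


rng = np.random.default_rng(0)
a = rng.random((32, 32))

ntg_same = normalized_total_gradient(a, a)                          # perfectly aligned
ntg_affine = normalized_total_gradient(a, 2.0 * a + 1.0)            # same structure, other gain/offset
ntg_shifted = normalized_total_gradient(a, np.roll(a, 3, axis=1))   # misaligned
```

The measure is 0 for identical images and stays bounded by 1 thanks to the triangle inequality, and it is designed to stay low when edges coincide even if intensities differ, which suits visible/NIR pairs better than a raw intensity difference.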

With this project come a few useful tools, especially the interactive image processing GUI which makes complex pipelines easily tunable.

interactive.imagepipe.Imagepipe class is designed to build a simple image processing pipeline with interactive sliders. If you're interested, refer to the documentation

- Officially supported cameras (camera calibrations are pre-stored in the calibration/mycamera folder)

- DJI RAW

- SJcam M20 RAW

Refer to the calibration documents Details on calibration procedure & extra documents

- March 9, 2022

- First version based on standalone run.bat based on excel configuration

- install.bat to setup the environment on windows

- processing engine

- March 18, 2022

- Use config.json or excel (#1)

- Crop outputs with a few pixels to avoid black/red borders (#2)

- configuration re-uses synchro.npy (pickle) so there is no need to copy paste values from synchronization phase. (#3)

- August 28, 2022

- Docker support (linux or basically any platform)

- Feature #10 Output data compatibility with OpenDroneMap (document, bat helpers to run ODM)

- Remove intermediate TIF to save storage using `--clean-proxy` option.

- Bugs fixed: #19 #20 features #10 #16 #13 #12

- October 21, 2022

- Multispectral TIF support for ODM (#25)

- Possibility to visualize VIR jpg (or other by using the `--traces` argument) still allowing the redo.bat manual to reprocess & generate the multispectral tif (#30)

- Reduce computation by selecting less frames for processing: `--selection` option in `run.py` supports `best-synchro` & `best-mapping` & `all` (#29)

- Possibility to run alignment on pairs using file dialog (#21)

- Issues closed: #14 #18 #22 #26