This project is the work done for Google Summer of Code 2023 with the organisation INCF. It was created by Soham Mulye under the mentorship of Dr. Suresh Krishna from McGill University. The project aims to develop a ready-to-deploy application suite that addresses the limitations of traditional preferential looking tests in infants. It does this by integrating hardware devices or deep learning-based infant eye trackers, together with visual stimuli analysis, into a user-friendly graphical user interface (GUI).

The following open source packages are used in this project:

- NumPy

- Matplotlib

- scikit-learn

- TensorFlow

- Keras

- PsychoPy

- OpenCV

- Tkinter

The result of this work, which took about 420 hours, is divided into the following parts:

- Psychophysics Research and Experimentation:

  - In-depth research into psychophysics, including the study of various psychophysical experiments and their underlying psychometric functions.
  - Exploration and understanding of the theoretical aspects of visual perception and visual responses. All the experiments were created using PsychoPy.

-

Psychopy Experiment Development:

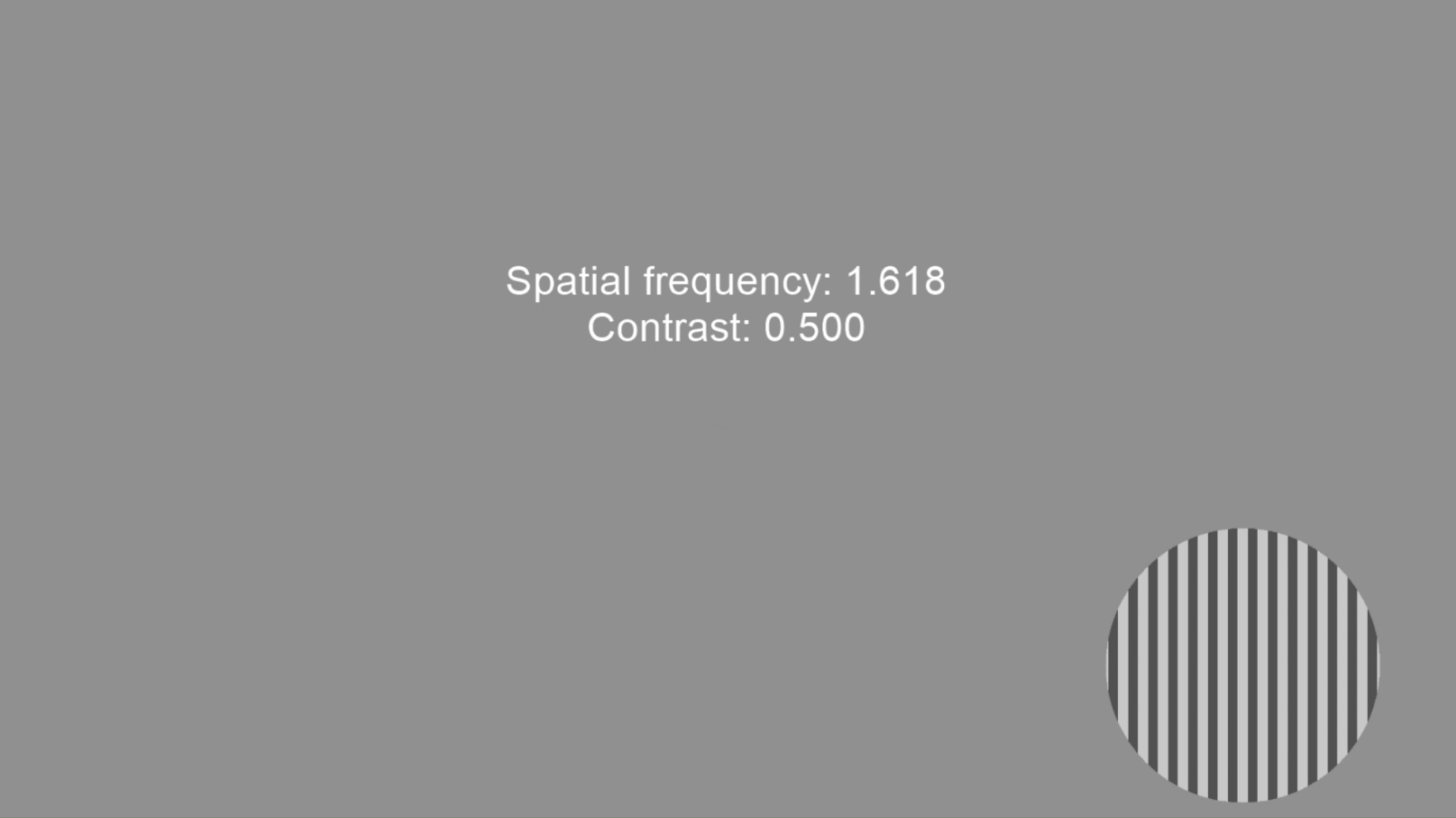

- Design and implementation of psychophysical experiments using the Psychopy library. Creation of controlled experimental environments with systematic manipulation of visual stimuli, such as grating acuity and contrast sensitivity tests.

- Eye Tracking Integration:

  - Seamless integration of an eye-tracking model into the developed experiments to enable automated gaze tracking.
  - Development of psychometric functions to monitor and analyze infant gaze, eye movements, and responses to visual stimuli.

-

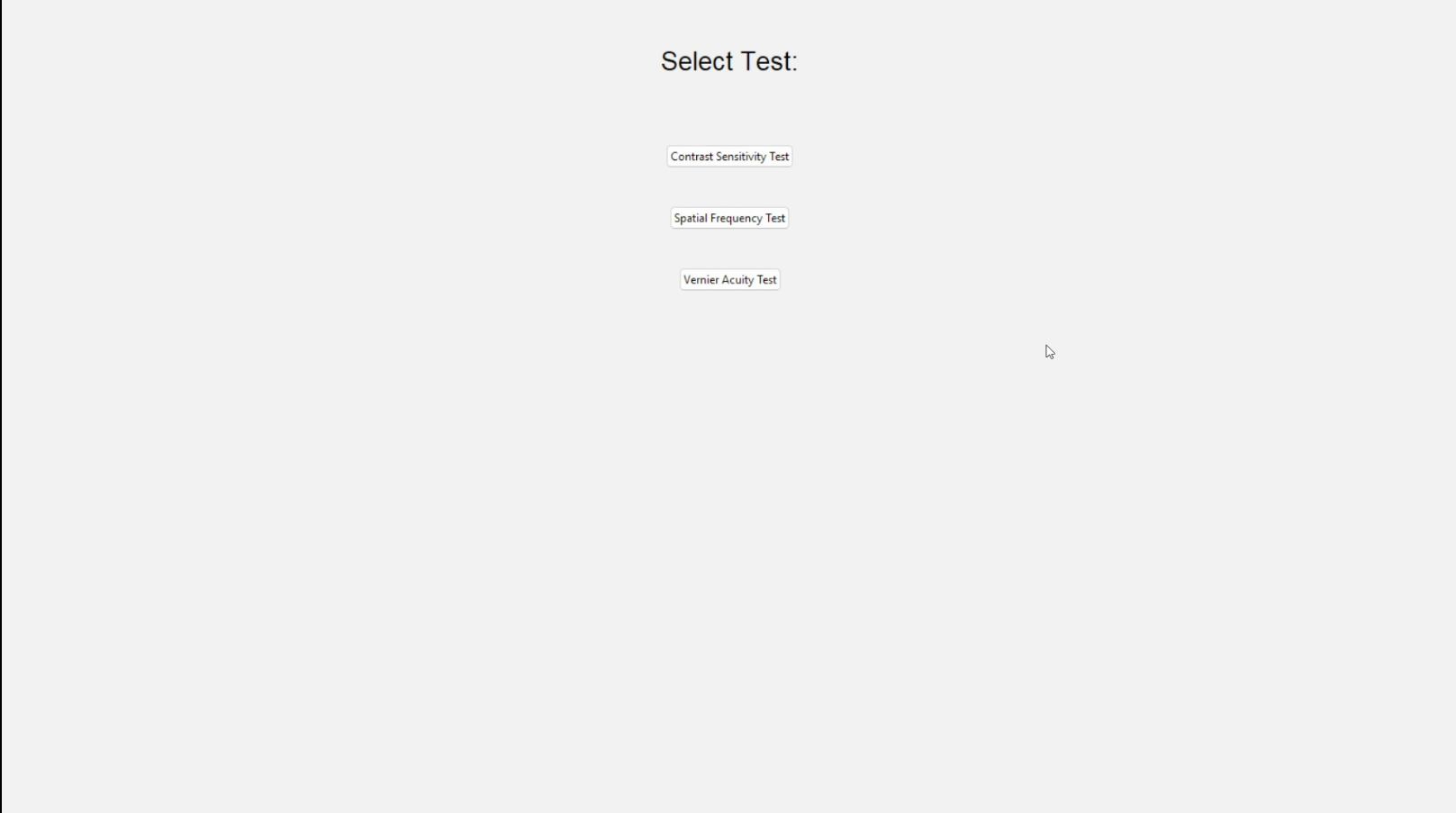

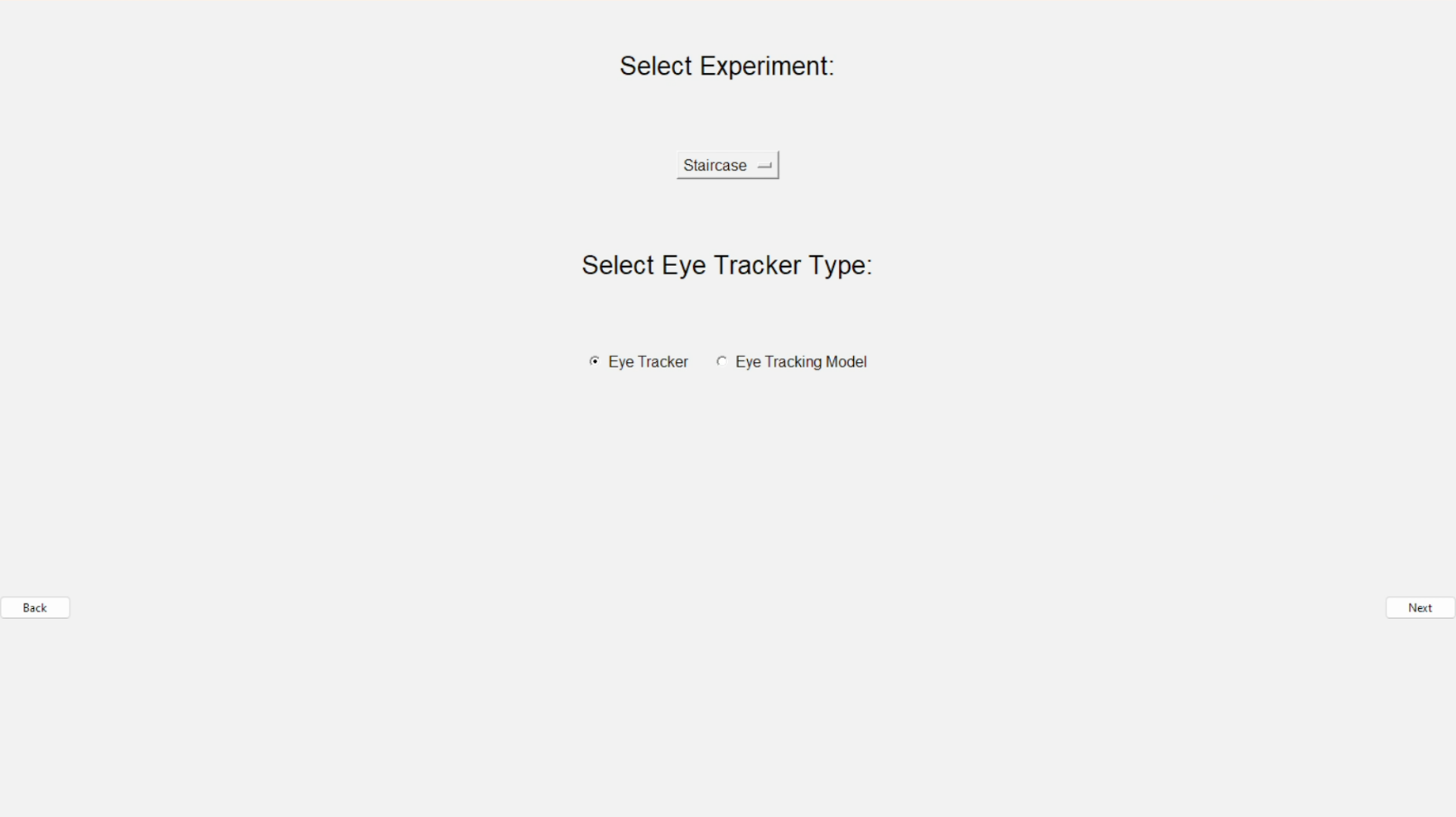

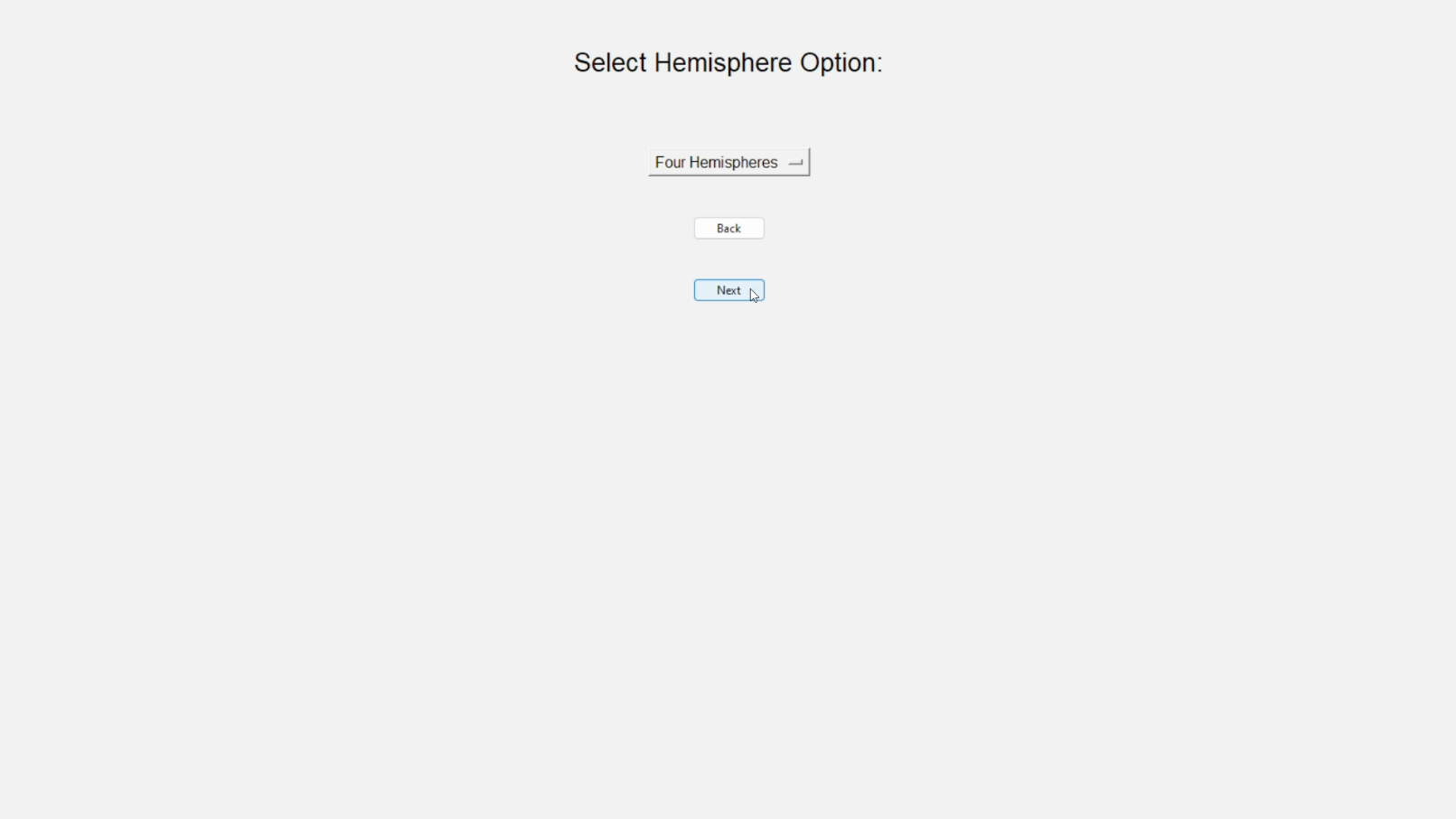

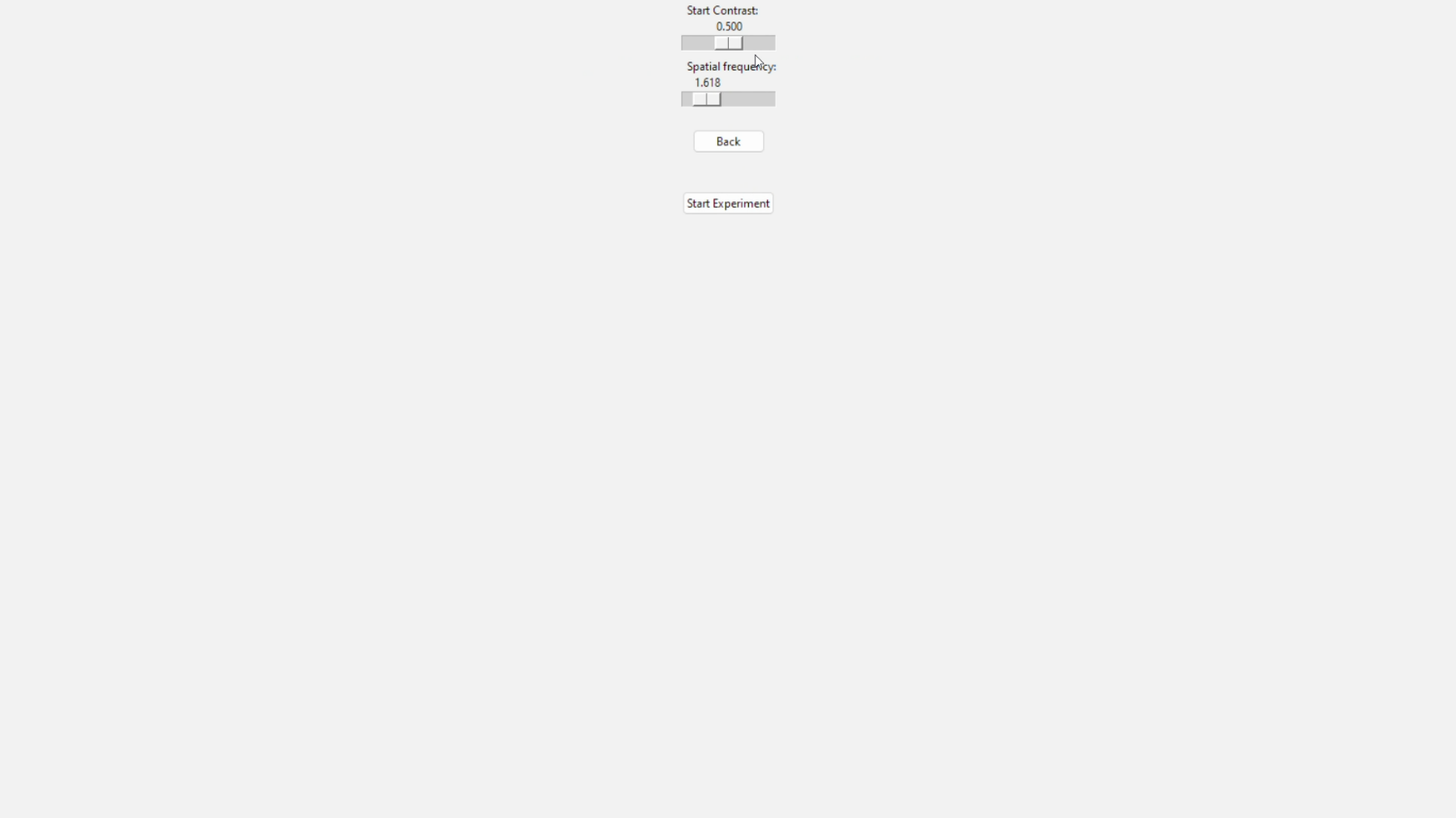

User-Friendly GUI and Program Integration:

- Development of an intuitive and user-friendly graphical user interface (GUI) using Tkinter. Integration of all three components, including the visual stimuli experiments, eye-tracking functionality, and data analysis, into a cohesive Python program.
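
Grating acuity and contrast sensitivity tests both present a sinusoidal grating. An acuity test raises its spatial frequency until the infant no longer prefers it, while a contrast sensitivity test lowers its contrast. The sketch below spells out the underlying arithmetic using only the Python standard library. The function names and the example monitor geometry are illustrative assumptions and do not come from this repository. In PsychoPy itself, a `visual.GratingStim` drawn with `units='deg'` and a calibrated monitor profile handles the degree-to-pixel conversion internally.

```python
import math
from typing import List


def pixels_per_degree(distance_cm: float, screen_width_cm: float, screen_width_px: int) -> float:
    """Approximate pixels spanned by one degree of visual angle near the screen centre."""
    # On-screen size (cm) that subtends exactly 1 degree at this viewing distance.
    cm_per_degree = 2 * distance_cm * math.tan(math.radians(0.5))
    return cm_per_degree * screen_width_px / screen_width_cm


def grating_row(width_px: int, cycles_per_degree: float, ppd: float,
                contrast: float, mean_luminance: float = 0.5) -> List[float]:
    """One row of a vertical sine grating, L(x) = Lmean * (1 + C * sin(2*pi*f*x)),
    where f is the spatial frequency converted from cycles/degree to cycles/pixel."""
    cycles_per_pixel = cycles_per_degree / ppd
    return [mean_luminance * (1 + contrast * math.sin(2 * math.pi * cycles_per_pixel * x))
            for x in range(width_px)]


def michelson_contrast(luminances: List[float]) -> float:
    """(Lmax - Lmin) / (Lmax + Lmin): the contrast measure varied in contrast sensitivity tests."""
    lo, hi = min(luminances), max(luminances)
    return (hi - lo) / (hi + lo)
```

Take a viewer 57 cm from a display that is 50 cm wide with 1920 horizontal pixels. There, one degree spans roughly 38 pixels, so a 1 cycle/degree grating repeats about every 38 pixels.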
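
Automating a preferential looking trial means turning per-frame gaze estimates into a single decision per trial. The sketch below assumes the tracker produces one label per video frame, drawn from `'left'`, `'right'` and `'away'`. iCatcher+ classifies frames along these lines, but check its actual output format, and whether its left/right refer to the camera's view or the infant's. The majority-vote rule, the `min_looking_frames` threshold and the function names are illustrative assumptions. They are not the logic of this repository's `*_icatcher.py` modules.

```python
from collections import Counter
from typing import Iterable, Optional


def trial_choice(frame_labels: Iterable[str], min_looking_frames: int = 5) -> Optional[str]:
    """Return the side ('left' or 'right') looked at on the most frames.

    Frames labelled anything other than 'left'/'right' (e.g. 'away') are ignored.
    Returns None when there are too few on-screen frames or the two sides tie.
    """
    counts = Counter(label for label in frame_labels if label in ("left", "right"))
    left, right = counts["left"], counts["right"]
    if left + right < min_looking_frames or left == right:
        return None
    return "left" if left > right else "right"


def score_trial(frame_labels: Iterable[str], stimulus_side: str,
                min_looking_frames: int = 5) -> Optional[bool]:
    """True if the preferred side held the grating, False if not, None if unscorable."""
    choice = trial_choice(frame_labels, min_looking_frames)
    return None if choice is None else choice == stimulus_side
```

Under this sketch, an unscorable trial (one that returns `None`) could be repeated rather than counted as an error.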

- `automated-preferential-looking/`
  - `resources`
  - `src/`
    - `data`
    - `__pycache__`
    - `icatcher/`
    - `__init__.py`
    - `fixed_increment.py`
    - `fixed_increment_icatcher.py`
    - `gui.py`
    - `main.py`
    - `predict.py`
    - `psychometric_function.py`
    - `staircase.py`
    - `staircase_icatcher.py`
  - `LICENSE`
  - `README.md`
  - `requirements.txt`

To execute the program on your system, please follow these steps.

- Clone the repository into your local system:

  `git clone https://github.com/Shazam213/automated-preferential-looking.git`

- Navigate to the cloned directory:

  `cd automated-preferential-looking`

- Install all the required packages:

  `pip install -r requirements.txt`

- Navigate to the `src` folder:

  `cd src`

- Now run the program:

  `python main.py`

PS: You might run into errors while installing PsychoPy. You can refer to this link.

- The first time you run the app, it may take a while to load all the packages and to download the eye-tracking model.

- When you start the program, you are first presented with the easy-to-use GUI.

- Once you have chosen an experiment and configured its parameter values, the experiment begins. Here is an example of the staircase spatial-frequency experiment using the eye-tracking model:

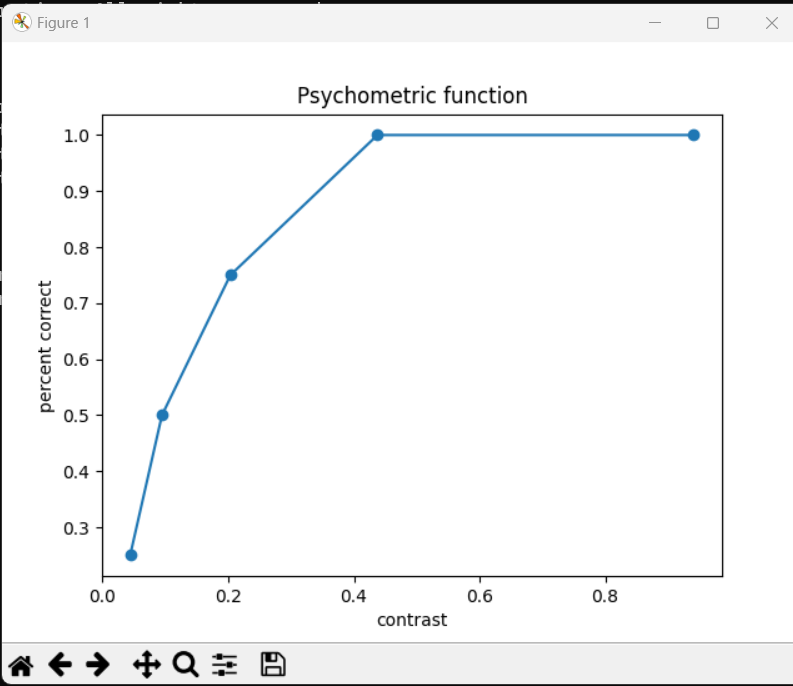

- Upon finishing the experiment, you'll see the psychometric function.

- Here's a look at the eye-tracking model (iCatcher+) in operation. Because the model was trained on infants and young children, its accuracy suffers when it is tested on adults.
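
In a staircase procedure, the stimulus level on each trial depends on the previous responses, so testing concentrates near the infant's threshold. The README does not state the update rule used in `staircase.py`. The sketch below assumes a common 2-down/1-up rule on spatial frequency, with a multiplicative step and a threshold estimated from the reversal points. The class name, step factor and stopping rule are illustrative. PsychoPy also provides `psychopy.data.StairHandler`, with `nUp`/`nDown` parameters, for this purpose.

```python
import math
from typing import List


class SpatialFrequencyStaircase:
    """2-down/1-up staircase on spatial frequency (cycles/degree).

    Two consecutive correct trials make the task harder (finer grating); one
    incorrect trial makes it easier (coarser grating). The level then hovers
    around the ~70.7%-correct point of the psychometric function.
    """

    def __init__(self, start_cpd: float = 1.0, step_factor: float = 1.25,
                 max_reversals: int = 6) -> None:
        self.value = start_cpd
        self.step_factor = step_factor
        self.max_reversals = max_reversals
        self.correct_run = 0
        self.last_direction = 0  # +1: last step was harder, -1: easier, 0: no step yet
        self.reversals: List[float] = []

    @property
    def finished(self) -> bool:
        return len(self.reversals) >= self.max_reversals

    def update(self, correct: bool) -> float:
        """Record one trial outcome and return the spatial frequency for the next trial."""
        direction = 0
        if correct:
            self.correct_run += 1
            if self.correct_run == 2:
                direction, self.correct_run = +1, 0
        else:
            direction, self.correct_run = -1, 0
        if direction:
            if self.last_direction and direction != self.last_direction:
                self.reversals.append(self.value)  # level at which the trend flipped
            self.last_direction = direction
            if direction > 0:
                self.value *= self.step_factor
            else:
                self.value /= self.step_factor
        return self.value

    def threshold(self) -> float:
        """Geometric mean of the reversal levels (frequencies are averaged in log units)."""
        if not self.reversals:
            raise ValueError("no reversals recorded yet")
        return math.exp(sum(math.log(v) for v in self.reversals) / len(self.reversals))
```

A trial loop would call `update()` with each trial's correct/incorrect outcome until `finished` is true, then report `threshold()`.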
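
The psychometric function relates stimulus strength to the probability of a correct (preferential) look. The README does not say which form `psychometric_function.py` uses. The sketch below assumes a Weibull function for a two-alternative looking task: `P(x) = guess + (1 - guess - lapse) * (1 - exp(-(x / alpha) ** beta))`. It uses a guess rate of 0.5, since an infant who cannot see the grating looks at either side about half the time, and a fixed lapse rate of 0.02. The threshold `alpha` and slope `beta` are fitted by maximum likelihood over a coarse grid. Contrast serves as the stimulus variable, so performance rises with `x`. All names, grid values and the fixed lapse rate are illustrative assumptions, and a numerical optimizer would normally replace the grid search.

```python
import math
from typing import Iterable, List, Tuple

Observation = Tuple[float, int, int]  # (stimulus level, n_correct, n_trials)


def weibull_2afc(x: float, alpha: float, beta: float,
                 guess: float = 0.5, lapse: float = 0.02) -> float:
    """P(correct) = guess + (1 - guess - lapse) * (1 - exp(-(x / alpha) ** beta))."""
    return guess + (1 - guess - lapse) * (1 - math.exp(-((x / alpha) ** beta)))


def neg_log_likelihood(data: List[Observation], alpha: float, beta: float) -> float:
    """Binomial negative log-likelihood of the observed counts under the Weibull model."""
    nll = 0.0
    for x, k, n in data:
        # With the default guess/lapse and x >= 0, p stays in [0.5, 0.98], so both logs are finite.
        p = weibull_2afc(x, alpha, beta)
        nll -= k * math.log(p) + (n - k) * math.log(1 - p)
    return nll


def fit_weibull(data: List[Observation], alphas: Iterable[float],
                betas: Iterable[float]) -> Tuple[float, float]:
    """Brute-force maximum-likelihood estimate of (alpha, beta) over a parameter grid."""
    betas = list(betas)
    candidates = [(a, b) for a in alphas for b in betas]
    return min(candidates, key=lambda ab: neg_log_likelihood(data, ab[0], ab[1]))
```

With these default guess and lapse rates, `alpha` is the contrast at which the fitted curve reaches about 80% looking to the grating side (0.5 + 0.48 * (1 - 1/e) ≈ 0.80).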

Alas, the end of Summer of Code shouldn't be the end of this project! There is plenty of scope to take it further. I would love to put in much more effort and create a fully working application that could be used in clinical settings, with help and testing from the researchers and labs we have already been in contact with. The project has laid a strong foundation for efficient measurement of visual function in infants and young children. Future work may include:

- Further refinement and optimization of the deep learning-based infant eye tracker model to improve accuracy.
- Collaborations with healthcare professionals and researchers to refine and validate the application for use in clinical settings.
- Deploying the program as a pip package or on a website.

Also, because traditional eye-tracking devices were unavailable, hardware eye-tracker integration was not possible. For those experiments, input is currently taken through the keyboard instead. Hardware integration can be added later using the API developed by Ioannis Valasakis.
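
Keyboard input currently stands in for a hardware eye tracker. One way to keep that substitution cheap is to have each experiment ask an abstract response source for the chosen side. A tracker-backed source could then replace the keyboard one later without touching the trial logic. The sketch below illustrates that design with `typing.Protocol`. The class names are hypothetical, and `fetch_gaze_side` is a placeholder callable, not a function from the API mentioned above.

```python
from typing import Callable, Protocol


class ResponseSource(Protocol):
    def get_choice(self) -> str:
        """Return 'left' or 'right': the side the participant preferred on this trial."""
        ...


class KeyboardResponse:
    """Experimenter reports the infant's looking side with the arrow keys."""

    VALID_KEYS = ("left", "right")

    def __init__(self, read_key: Callable[[], str]) -> None:
        # read_key is injected so any key-reading backend can be plugged in.
        self.read_key = read_key

    def get_choice(self) -> str:
        while True:
            key = self.read_key()
            if key in self.VALID_KEYS:  # ignore any other key presses
                return key


class EyeTrackerResponse:
    """Hardware tracker stand-in; fetch_gaze_side is a hypothetical callable."""

    def __init__(self, fetch_gaze_side: Callable[[], str]) -> None:
        self.fetch_gaze_side = fetch_gaze_side

    def get_choice(self) -> str:
        return self.fetch_gaze_side()


def run_trial(source: ResponseSource, stimulus_side: str) -> bool:
    """Score one trial: True if the preferred side matched the grating's side."""
    return source.get_choice() == stimulus_side
```

With PsychoPy, `read_key` could be `lambda: event.waitKeys(keyList=["left", "right"])[0]`, since `psychopy.event.waitKeys` returns a list of the pressed key names.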

I'd like to express my gratitude to my mentor, Dr. Suresh Krishna from McGill University, for providing invaluable insights and information throughout this project. While at times the information was quite complex, I understand that it will greatly benefit future iterations of the application and collaborative research with other scientists.

I would also like to extend my appreciation to the developers of iCatcher+, the eye-tracking model that has been seamlessly integrated into the program for gaze detection and test automation.

With the generous support of Google's open-source initiatives, I hope that I've sown the seeds of a useful program and provided valuable code suggestions. These contributions have the potential to make a significant impact in clinical settings in the future. Thank you, Google!

Repository: witch-world/automated-preferential-looking (Python, MIT license)