This repository contains Python code to retrieve semantically similar Steam games.

- Install the latest version of Python 3.X.

- Install the required packages:

pip install -r requirements.txt

Each game is described by the concatenation of:

- a short text below its banner on the Steam store,

- a long text in the section called "About the game".

The text is tokenized with spaCy by running utils.py.

The tokens are then fed as input to different methods to retrieve semantically similar game descriptions.

For instance, a word embedding can be learnt with Word2Vec and then used for a sentence embedding based on a weighted average of word embeddings (cf. sif_embedding_perso.py).

A pre-trained GloVe embedding can also be used instead of the self-trained Word2Vec embedding.
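The weighted average of word embeddings mentioned above can be sketched as follows. This is a minimal, standard-library illustration and not the code of sif_embedding_perso.py: the function names, the toy vectors and the value `a=1e-3` are assumptions made for the example. The weight `a / (a + p(w))` is the Smooth Inverse Frequency weight of Arora et al.; in practice the vectors would come from Word2Vec or GloVe.

```python
from collections import Counter


def sif_weights(tokenized_docs, a=1e-3):
    """Weight a / (a + p(w)) per word, where p(w) is the word's corpus frequency."""
    counts = Counter(token for doc in tokenized_docs for token in doc)
    total = sum(counts.values())
    return {word: a / (a + count / total) for word, count in counts.items()}


def weighted_average_embedding(tokens, word_vectors, weights):
    """Average the vectors of the known tokens, each scaled by its weight."""
    known = [t for t in tokens if t in word_vectors]
    if not known:
        return None  # no token has a vector, so no embedding can be built
    dim = len(next(iter(word_vectors.values())))
    total = [0.0] * dim
    for token in known:
        w = weights.get(token, 1.0)
        for i, value in enumerate(word_vectors[token]):
            total[i] += w * value
    return [value / len(known) for value in total]


# Toy data (hypothetical): two tokenized descriptions and 2-d word vectors.
docs = [["space", "shooter", "game"], ["puzzle", "game"]]
word_vectors = {
    "space": [1.0, 0.0],
    "shooter": [0.0, 1.0],
    "puzzle": [1.0, 1.0],
    "game": [0.5, 0.5],
}
weights = sif_weights(docs)
embedding = weighted_average_embedding(docs[0], word_vectors, weights)
print(embedding)
```

Frequent words such as "game" receive a smaller weight than rare ones, so they contribute less to the sentence embedding.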

Alternatively, a document embedding can be learnt with Doc2Vec (cf. doc2vec_model.py), although, in our experience, this is more useful for learning document tags, e.g. game genres, than for retrieving similar documents.

Different baseline algorithms are suggested in sentence_baseline.py.

For Tf-Idf, the code is duplicated in export_tfidf_for_javascript_visualization.py, with the addition of an export for visualization of the matches as a graph in the web browser.
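To make the Tf-Idf baseline concrete, here is a small standard-library sketch of Tf-Idf vectors compared with cosine similarity. It is not the code of sentence_baseline.py: the helper names, the toy descriptions and the exact weighting (raw term frequency times `log(N / df)`) are assumptions chosen for the example.

```python
import math
from collections import Counter


def tfidf_vectors(tokenized_docs):
    """Return one sparse {token: weight} vector per document."""
    n_docs = len(tokenized_docs)
    doc_freq = Counter(token for doc in tokenized_docs for token in set(doc))
    vectors = []
    for doc in tokenized_docs:
        term_freq = Counter(doc)
        vectors.append(
            {t: tf * math.log(n_docs / doc_freq[t]) for t, tf in term_freq.items()}
        )
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse vectors (0.0 if either one is null)."""
    dot = sum(weight * v.get(token, 0.0) for token, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def most_similar(query_index, vectors, top_n=10):
    """Rank all other documents by decreasing similarity to the query."""
    scores = [
        (cosine(vectors[query_index], v), i)
        for i, v in enumerate(vectors)
        if i != query_index
    ]
    scores.sort(reverse=True)
    return [(i, s) for s, i in scores[:top_n]]


# Toy tokenized descriptions (hypothetical).
docs = [
    ["space", "shooter", "aliens"],
    ["space", "shooter", "robots"],
    ["farming", "simulator", "crops"],
]
vectors = tfidf_vectors(docs)
matches = most_similar(0, vectors, top_n=2)
print(matches)
```

The query itself is excluded from the ranking, which matches how the retrieval score is counted.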

Embeddings can also be computed with Universal Sentence Encoder on Google Colab with this notebook.

Results are shown with universal_sentence_encoder.py.

An in-depth commentary is provided on the Wiki. Matches obtained with Tf-Idf are shown as a graph in the web browser. Overall, I would suggest matching store descriptions with:

- or a weighted average of GloVe word embeddings, with Tf-Idf reweighting, after removing some components:

  - either only sentence components,

  - or both sentence and word components (for slightly better results, by a tiny margin).

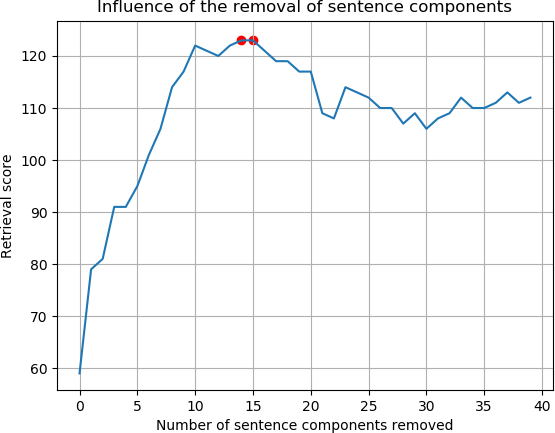

A retrieval score can be computed, thanks to a ground truth of games set in the same fictional universe. Alternative scores can be computed as the proportions of genres or tags shared between the query and the retrieved games.
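As an illustration of the alternative scores, the sketch below computes the proportion of the query's tags that each retrieved game shares, then averages over the retrieved games. This is only one possible reading of "proportion of shared tags"; the function name and the toy tags are hypothetical, and the repository may normalize differently.

```python
def shared_tag_proportion(query_tags, retrieved_tags_list):
    """Average, over retrieved games, of the fraction of query tags they share."""
    query_tags = set(query_tags)
    if not query_tags or not retrieved_tags_list:
        return 0.0
    proportions = [
        len(query_tags & set(tags)) / len(query_tags)
        for tags in retrieved_tags_list
    ]
    return sum(proportions) / len(proportions)


# Toy example: the first match shares 1 of 2 tags, the second shares both.
tag_score = shared_tag_proportion(
    {"RPG", "Indie"},
    [{"RPG"}, {"RPG", "Indie", "Action"}],
)
print(tag_score)  # 0.75
```

The same function can be applied to genres instead of tags.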

When using an average of word embeddings as sentence embeddings:

- removing only sentence components provided a very large increase of the score (+105%),

- removing only word components provided a large increase of the score (+51%),

- removing both components provided a very large increase of the score (+108%),

- relying on a weighted average instead of a simple average led to better results,

- Tf-Idf reweighting led to better results than Smooth Inverse Frequency reweighting,

- GloVe word embeddings led to better results than Word2Vec.
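Component removal, as used in the list above, can be sketched as follows: estimate the dominant direction shared by all embeddings, then subtract each embedding's projection onto it. This is a standard-library illustration under assumptions, not the repository's code: the function names and toy vectors are hypothetical, a single component is removed, and the direction is approximated by power iteration rather than by a full SVD. Applied to sentence embeddings, this follows the idea of Arora et al.; Mu and Viswanath apply a similar step to word vectors after subtracting their mean.

```python
import math


def first_component(vectors, n_iter=100):
    """Approximate the dominant right singular vector of the matrix whose rows
    are the given vectors, using power iteration on X^T X."""
    dim = len(vectors[0])
    # Uniform start; it must not be orthogonal to the dominant direction.
    direction = [1.0 / math.sqrt(dim)] * dim
    for _ in range(n_iter):
        # Project every row on the current direction, then recombine the rows.
        projections = [sum(x * d for x, d in zip(row, direction)) for row in vectors]
        updated = [
            sum(p * row[i] for p, row in zip(projections, vectors))
            for i in range(dim)
        ]
        norm = math.sqrt(sum(u * u for u in updated))
        if norm == 0.0:
            break
        direction = [u / norm for u in updated]
    return direction


def remove_component(vectors, direction):
    """Subtract from each vector its projection onto the unit-norm direction."""
    cleaned = []
    for row in vectors:
        p = sum(x * d for x, d in zip(row, direction))
        cleaned.append([x - p * d for x, d in zip(row, direction)])
    return cleaned


# Toy sentence embeddings (hypothetical) dominated by their first coordinate.
embeddings = [[2.0, 0.1], [1.9, -0.1], [2.1, 0.0]]
direction = first_component(embeddings)
cleaned = remove_component(embeddings, direction)
print(direction)
print(cleaned)
```

After removal, what remains of each embedding is the part that differs from the common direction, which is what distinguishes one description from another.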

A table with scores for each major experiment is available. For each game series, the score is the number of games from this series which are found among the top 10 most similar games (excluding the query). The higher the score, the better the retrieval.
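The score described above can be sketched in a few lines. The function name and the game identifiers are hypothetical; `ranked_ids` is assumed to be the list of game identifiers sorted by decreasing similarity to the query, as output by any of the methods.

```python
def series_score(query_id, ranked_ids, series_ids, top_n=10):
    """Count the games of the series found among the top_n matches,
    after excluding the query itself from the ranking."""
    top = [game_id for game_id in ranked_ids if game_id != query_id][:top_n]
    return sum(1 for game_id in top if game_id in series_ids)


# Toy example: a series of three games, queried with its first entry.
series = {"a1", "a2", "a3"}
ranking = ["a1", "a2", "x", "a3", "y"]
print(series_score("a1", ranking, series))           # 2
print(series_score("a1", ranking, series, top_n=2))  # 1
```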

Results can be accessed from the Wiki homepage.

It is possible to highlight games with unique store descriptions, by applying a threshold to similarity values output by the algorithm.

This is done in find_unique_games.py:

- the Tf-Idf model is used to compute similarity scores between store descriptions,

- a game is unique if the similarity score between a query game and its most similar game (other than itself) is lower than or equal to an arbitrary threshold.
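The rule above can be sketched as follows. This is an illustration rather than the code of find_unique_games.py: the function name, the toy similarity matrix and the threshold value are assumptions. `similarity[i][j]` is taken to be the similarity between games `i` and `j`, and at least two games are required.

```python
def find_unique_games(names, similarity, threshold):
    """Return the games whose best match (other than themselves) has a
    similarity lower than or equal to the threshold."""
    unique = []
    for i, name in enumerate(names):
        best = max(similarity[i][j] for j in range(len(names)) if j != i)
        if best <= threshold:
            unique.append(name)
    return unique


# Toy similarity matrix (hypothetical): games A and B are close, C stands apart.
names = ["A", "B", "C"]
similarity = [
    [1.0, 0.8, 0.1],
    [0.8, 1.0, 0.2],
    [0.1, 0.2, 1.0],
]
unique_games = find_unique_games(names, similarity, threshold=0.2)
print(unique_games)  # ['C']
```

Raising the threshold flags more games as unique, so the value has to be tuned by inspecting the results.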

Results are shown here.

- Microsoft's tutorial about sentence similarity,

- My answer on Stack Overflow, about sentence embeddings,

- Tutorial on the official website of 'gensim' module

- Tutorial on a blog

- Tool: spaCy

- Tool: Gensim

- Word2Vec

- GloVe

- Universal Sentence Encoder

- Sanjeev Arora, Yingyu Liang, Tengyu Ma, A Simple but Tough-to-Beat Baseline for Sentence Embeddings, in: ICLR 2017 conference.

- Jiaqi Mu, Pramod Viswanath, All-but-the-Top: Simple and Effective Postprocessing for Word Representations, in: ICLR 2018 conference.