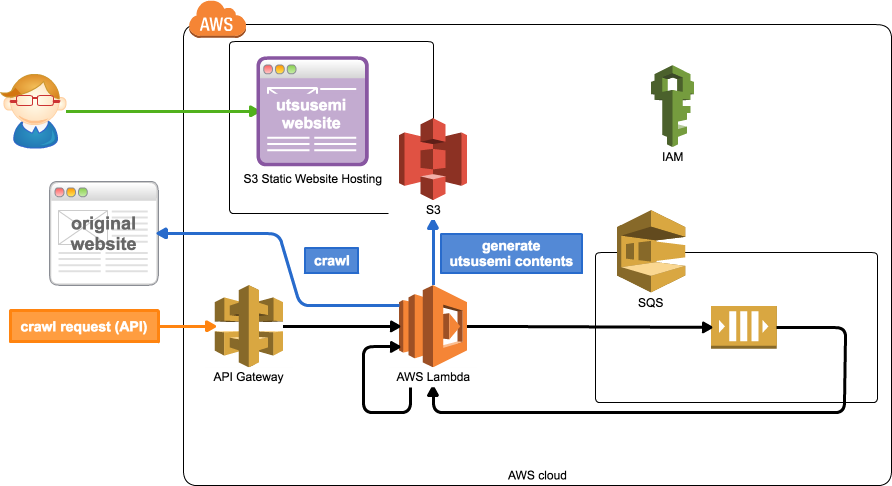

utsusemi = "空蝉"

A tool to generate a static website by crawling the original site.

- Serverless Framework ⚡

$ git clone https://github.com/k1LoW/utsusemi.git

$ cd utsusemi

$ npm install

Copy config.example.yml to config.yml and edit it.

$ AWS_PROFILE=XXxxXXX npm run deploy

Then get the endpoint URLs and UtsusemiWebsiteURL.

To destroy the deployment:

- Call API /delete?path=/
- Run the following command.

$ AWS_PROFILE=XXxxXXX npm run destroy

Start crawling to targetHost.

$ curl 'https://xxxxxxxxxx.execute-api.ap-northeast-1.amazonaws.com/v0/in?path=/&depth=3'

Then access UtsusemiWebsiteURL.
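Because the query string contains `&`, the URL has to be quoted or the shell will cut the command at that character. One way to avoid retyping it is a small helper like the sketch below. `in_url` and `API_BASE` are hypothetical names introduced here and are not part of utsusemi. The base URL is the placeholder used in this README, and the helper assumes the path needs no URL encoding.

```shell
# Placeholder base URL; replace it with the endpoint from your own deploy.
API_BASE="https://xxxxxxxxxx.execute-api.ap-northeast-1.amazonaws.com/v0"

# in_url PATH DEPTH [force]
# Hypothetical helper (not part of utsusemi): prints the /in URL.
in_url() {
  url="${API_BASE}/in?path=$1&depth=$2"
  # A third argument of "force" appends force=1, which disables the cache.
  if [ "${3:-}" = "force" ]; then
    url="${url}&force=1"
  fi
  printf '%s\n' "$url"
}
```

With the function defined, `curl "$(in_url / 3)"` starts a crawl from `/` with depth 3, and `curl "$(in_url / 3 force)"` does the same with the cache disabled.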

Disable cache

$ curl 'https://xxxxxxxxxx.execute-api.ap-northeast-1.amazonaws.com/v0/in?path=/&depth=3&force=1'

Cancel crawling.

$ curl https://xxxxxxxxxx.execute-api.ap-northeast-1.amazonaws.com/v0/purge

Delete S3 object.

$ curl 'https://xxxxxxxxxx.execute-api.ap-northeast-1.amazonaws.com/v0/delete?path=/'

Check crawling status.

$ curl https://xxxxxxxxxx.execute-api.ap-northeast-1.amazonaws.com/v0/status

Start crawling to targetHost with N crawling actions.

$ curl -X POST -H "Content-Type: application/json" -d @nin-sample.json https://xxxxxxxxxx.execute-api.ap-northeast-1.amazonaws.com/v0/nin

Crawling rules:

- HTML -> depth = depth - 1
- CSS -> The source request in the CSS does not consume depth.
- Other contents -> End (depth = 0)
- 403, 404, 410 -> Delete S3 object
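The rules above can be read as a small decision function. The sketch below is only an illustration, not utsusemi's actual code. `crawl_action` is a hypothetical name, and classifying responses by their Content-Type value is an assumption, since this README does not say how HTML and CSS are detected. It also does not model when the crawler stops.

```shell
# crawl_action STATUS CONTENT_TYPE DEPTH
# Hypothetical sketch of the crawling rules; not part of utsusemi.
# Prints "delete", or "depth N" where N is the depth left for followed links.
crawl_action() {
  # 403, 404, 410 -> delete the S3 object
  case "$1" in
    403|404|410) echo "delete"; return 0 ;;
  esac
  case "$2" in
    text/html*) echo "depth $(( $3 - 1 ))" ;;  # HTML consumes one level
    text/css*)  echo "depth $3" ;;             # sources in CSS consume none
    *)          echo "depth 0" ;;              # other contents end the crawl
  esac
}
```

For example, `crawl_action 200 text/html 3` reports the depth left for links found in an HTML page fetched at depth 3.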