[DRAFT] Machine Learning for Development:

A method to Learn and Identify Earth Features from Satellite Images

Prepared by Antonio Zugaldia; commissioned by the Big Data team, Innovation Labs, the World Bank

All code is MIT licensed, and the text content is CC-BY. Please feel free to send edits and updates via Pull Requests.

“Machine Learning” is one of the tools of Big Data analysis. The need to process large amounts of data has accelerated the development and application of new, highly scalable processing techniques. In this report we aim to facilitate the adoption of machine learning techniques for development outputs and outcomes.

In this particular case we aim to automatically detect distinct features, such as playgrounds, in standard aerial images, as a milestone towards the automatic detection of more refined features such as paved (or unpaved) roads, dams, schools, hospitals, non-formal settlements, wells, or the different stages of agricultural crops.

In this document we describe a complete methodology to detect earth features in satellite images using OpenCV, an open source computer vision and machine learning software library. We describe the step-by-step process using documented Python software, and analyze potential ways of improving it. Finally, we review how to extend this analysis to an Apache Spark cluster hosted on AWS, and some recent developments in Google’s cloud offering. These tools can be used to analyze and visualize all kinds of data, including Sustainable Development Goals (SDG) data.

Machine learning[1] “explores the construction and study of algorithms that can learn from and make predictions on data. Such algorithms operate by building a model from example inputs in order to make data-driven predictions or decisions, rather than following strictly static program instructions.”

Those inputs, or features, are at the core of any machine learning flow. For example, when doing a supervised regression those inputs are numbers, and when doing document classification we use a bag of words (where grammar and even word order are ignored but word multiplicity is kept).

However, when it comes to analyzing images, we cannot simply use the binary representation of every pixel as input. Not only is this inefficient from a memory handling perspective, but raw pixels also do not provide a useful representation of the content of an image: its shapes, corners, and edges.

This is where Computer Vision plays a key role, extracting features from an image in order to provide a useful input for machine learning algorithms. As there are many possibilities for extracting these features from images, in the following pages we describe one that is well suited to our purpose: a cascade classifier using the open source tool OpenCV[2].

Our goal is to be able to identify specific features from a satellite image. In other words, we need to train a supervised classification model with some input images in order to be able to predict the existence and location of that element in other satellite images.

There are many ways to accomplish this. In this document we use OpenCV (Open Source Computer Vision Library), which is able to deliver significant results, as we will show later. OpenCV was originally developed by Intel Corporation and is an open source computer vision and machine learning software library widely adopted by the industry. It comes with a large number of algorithms and tools that serve our purpose.

We are going to focus on the cascade classifier, which has two major stages: training and detection[3].

In order to train a high quality classifier we need a large number of high quality input images. For the purpose of this research, we are going to use 10,000 images acquired from the Mapbox service[4] using the author’s subscription to the service. Please note that these images were only used for the purpose of this research and were never released to a larger audience.

The information about the actual geographic location of the features was obtained from OSM (OpenStreetMap). In the following pages we describe the process in detail.

All the source code mentioned in these pages is available in the GitHub repository mentioned in the references section at the end of this document. All these commands were run on a 32-CPU virtual machine running Ubuntu Linux on Google’s Cloud (type: n1-highcpu-32).

For this research, we have chosen a feature that is easily identifiable from space and has abundant information in the OSM database: a tennis court. More importantly, we have chosen a feature and methodology that can then be adapted to any other feature in the OSM database. Our goal is to create a flow useful for Development.

The first thing we did was to download the location of every pitch in the US available in OSM. These are identified in OSM by the leisure=pitch key/value pair. To automate this process we built a simple Python client for the Overpass API[5].

The query is as follows (the cryptic (._;>;); asks for the nodes and ways that are referred to by the relations and ways in the result):

[out:json];

way

[leisure=pitch]

({query_bb_s},{query_bb_w},{query_bb_n},{query_bb_e});

(._;>;);

out;

Here the {query_bb_s},{query_bb_w},{query_bb_n},{query_bb_e} parameters indicate the bounding box for the US[6]. Because a query for every pitch in the US is too large (it causes the Overpass API server to time out), we divided the US into 100 sub-bounding boxes. This is implemented in the 01_get_elements.py file.
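As a rough illustration of the splitting step, the sketch below divides a bounding box into a 10 x 10 grid and fills the query template for each cell. The function names and the example coordinates are hypothetical, and the even grid is an assumption; the actual logic lives in 01_get_elements.py and may differ.

```python
def split_bounding_box(south, west, north, east, rows=10, cols=10):
    """Split a bounding box into rows x cols equal sub-boxes (s, w, n, e)."""
    lat_step = (north - south) / float(rows)
    lon_step = (east - west) / float(cols)
    boxes = []
    for i in range(rows):
        for j in range(cols):
            s = south + i * lat_step
            w = west + j * lon_step
            boxes.append((s, w, s + lat_step, w + lon_step))
    return boxes


# Same query as in the text, written on a single line.
QUERY_TEMPLATE = (
    "[out:json];"
    "way[leisure=pitch]({s},{w},{n},{e});"
    "(._;>;);"
    "out;"
)


def build_query(box):
    """Fill the Overpass query template for one sub-bounding box."""
    s, w, n, e = box
    return QUERY_TEMPLATE.format(s=s, w=w, n=n, e=e)


# Illustrative coordinates only; not the bounding box used in the report.
boxes = split_bounding_box(24.0, -125.0, 50.0, -66.0)
queries = [build_query(box) for box in boxes]
print(len(queries))
```

Each query can then be sent to the Overpass API separately, which keeps every individual request small enough to avoid the timeout.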

This data contains 1,265,357 nodes and 170,070 ways, an average of about 7.4 nodes per way. A sample node looks like:

{"lat": 27.1460817, "lon": -114.293208, "type": "node", "id": 3070587853}

And a way looks like:

{"nodes": [1860795336, 1860795357, 1860795382, 1860795346, 1860795336], "type": "way", "id": 175502416, "tags": {"sport": "basketball", "leisure": "pitch"}}

We cached the results to avoid unnecessary further queries to the server.
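To show how the two element types fit together, here is a minimal sketch that resolves the node IDs of each way into coordinates. The function name and the toy elements are hypothetical; only the field names (type, id, lat, lon, nodes, tags) come from the samples above.

```python
def resolve_ways(elements):
    """Map each way ID to the list of (lat, lon) pairs of its member nodes."""
    nodes = {
        el["id"]: (el["lat"], el["lon"])
        for el in elements
        if el["type"] == "node"
    }
    ways = {}
    for el in elements:
        if el["type"] != "way":
            continue
        # Skip ways that reference nodes missing from the response.
        if all(node_id in nodes for node_id in el["nodes"]):
            ways[el["id"]] = [nodes[node_id] for node_id in el["nodes"]]
    return ways


# Toy data with made-up IDs and coordinates, for illustration only.
elements = [
    {"type": "node", "id": 1, "lat": 0.0, "lon": 0.0},
    {"type": "node", "id": 2, "lat": 0.0, "lon": 1.0},
    {"type": "node", "id": 3, "lat": 1.0, "lon": 1.0},
    {"type": "way", "id": 10, "nodes": [1, 2, 3, 1],
     "tags": {"sport": "tennis", "leisure": "pitch"}},
]
ways = resolve_ways(elements)
print(ways[10])
```

Note that the first and last node of a way are the same when the way describes a closed shape, as in the sample way above.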

Once we had the data, we ran some sample statistics to see how many potential elements we had available for the US. The results are as follows, and the code is available in 02_get_stats.py.

Top 10 pitch types in the US according to OSM:

- Baseball = 61,573 ways

- Tennis = 38,482 ways

- Soccer = 19,129 ways

- Basketball = 15,797 ways

- Unknown = 11,914 ways

- Golf = 6,826 ways

- American football = 6,266 ways

- Volleyball = 2,127 ways

- Multi = 1,423 ways

- Softball = 695 ways

As we can see, we have 38,482 tennis pitches identified in OSM as ways in the US. This will be our pool of data to train the system. The importance of having these elements tagged as ways (and not just nodes) will be shown later.
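A ranking like the one above can be produced with a simple counter over the sport tag of every way. This is a sketch rather than the contents of 02_get_stats.py: the function name is hypothetical, and treating a missing sport tag as "unknown" is an assumption about how that category arises.

```python
from collections import Counter


def count_sports(ways):
    """Count ways per value of the 'sport' tag."""
    counts = Counter()
    for way in ways:
        # Assumption: ways without a sport tag are reported as "unknown".
        sport = way.get("tags", {}).get("sport", "unknown")
        counts[sport] += 1
    return counts


# Toy data for illustration only.
ways = [
    {"type": "way", "id": 1, "tags": {"sport": "tennis", "leisure": "pitch"}},
    {"type": "way", "id": 2, "tags": {"sport": "tennis", "leisure": "pitch"}},
    {"type": "way", "id": 3, "tags": {"sport": "baseball", "leisure": "pitch"}},
    {"type": "way", "id": 4, "tags": {"leisure": "pitch"}},
]
counts = count_sports(ways)
for sport, total in counts.most_common(10):
    print("{} = {:,} ways".format(sport, total))
```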

Next, for every way that we are interested in, we computed its centroid and its bounding box. The result is like the following and the code is in 03_build_ways_data.py:

"201850880": {"lat": 48.38813308204558, "lon": -123.68852695545081, "bounds": [48.3880748, -123.6886191, 48.3881912, -123.6884365]}

Where 201850880 is the way ID. We use the centroid as the point where we will download the satellite imagery, and we will use the bounding box to tell the machine learning algorithm the location within the larger image where our feature of interest is located.

We used the Python Shapely library (shapely.geometry.Polygon) to compute this information.
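For readers without Shapely installed, the same two quantities can be sketched in plain Python with the shoelace formula. This is a stand-in for illustration, not the code in 03_build_ways_data.py; the function name is hypothetical, and the bounds order (min lat, min lon, max lat, max lon) is inferred from the sample above.

```python
def centroid_and_bounds(points):
    """Centroid and bounding box of a closed ring of (lat, lon) pairs.

    The ring must repeat its first point at the end, as OSM ways do.
    """
    # Shift to a local origin first: the polygons are tiny compared with
    # the coordinate values, which would otherwise cost precision.
    lat_ref, lon_ref = points[0]
    shifted = [(lat - lat_ref, lon - lon_ref) for lat, lon in points]

    area2 = 0.0  # twice the signed area
    sum_lat = 0.0
    sum_lon = 0.0
    for (lat0, lon0), (lat1, lon1) in zip(shifted, shifted[1:]):
        cross = lat0 * lon1 - lat1 * lon0
        area2 += cross
        sum_lat += (lat0 + lat1) * cross
        sum_lon += (lon0 + lon1) * cross
    if area2 == 0:
        raise ValueError("degenerate polygon")

    lats = [p[0] for p in points]
    lons = [p[1] for p in points]
    return {
        "lat": lat_ref + sum_lat / (3.0 * area2),
        "lon": lon_ref + sum_lon / (3.0 * area2),
        "bounds": [min(lats), min(lons), max(lats), max(lons)],
    }


# A 2 x 2 square, for illustration only.
square = [(0.0, 0.0), (0.0, 2.0), (2.0, 2.0), (2.0, 0.0), (0.0, 0.0)]
info = centroid_and_bounds(square)
print(info)
```

For shapes as small as a tennis court, treating latitude and longitude as planar coordinates is a reasonable approximation.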

Now that we know the location of all tennis courts in the US, we can go ahead and download some sample satellite imagery from Mapbox. This is implemented in a Python script that allows some arguments (04_download_satellite.py):

04_download_satellite.py [-h] [--sport SPORT] [--count COUNT]

optional arguments:

-h, --help show this help message and exit

--sport SPORT Sport tag, for example: baseball.

--count COUNT The total number of images to download.

For example, to download 10,000 images of tennis courts (as we did), we would run the command as follows:

$ python 04_download_satellite.py --sport tennis --count 10000

The images were downloaded at maximum resolution (1280x1280) in PNG format. Also, to make sure we did not introduce a bias, we randomized the list of ways before downloading the actual imagery.

Please note that in some cases imagery is not available at that location at that zoom level (19). Those images were identified by our script and deleted from our pool.

Now, for every image in our set (10,000) we need to find the actual location of the tennis court in the image. From step 3 we have the bounding box coordinates; we need to transform these into an image pixel location, and output the result in the specific format required by OpenCV.

The format looks like this:

satellite/gray/pitch_tennis_100027097.png 1 569 457 140 365

satellite/gray/pitch_tennis_100040542.png 1 559 549 161 180

satellite/gray/pitch_tennis_100042337.png 1 464 515 350 248

satellite/gray/pitch_tennis_100075597.png 1 471 366 337 546

satellite/gray/pitch_tennis_100077768.png 1 552 551 175 176

satellite/gray/pitch_tennis_100089034.png 1 521 548 237 183

...

It indicates, for example, that the image satellite/gray/pitch_tennis_100027097.png has 1 tennis court in the box defined by the bounding rectangle (569, 457, 140, 365). In all cases, we checked that the bounding rectangle was not larger than the image dimensions (1280x1280 pixels), or too small (less than 25x25 pixels), something entirely possible if they were incorrectly labeled by the OSM editor.

Also, note that at this point we converted all images to grayscale (grayscale images are assumed by OpenCV’s scripts).
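The size checks and the annotation line can be sketched as follows. The helper names are hypothetical, and the exact validity rule is one reading of the description above (each side at least 25 pixels, rectangle fully inside the 1280x1280 image); the report's own script may apply it differently.

```python
IMAGE_SIZE = 1280  # pixels, as stated in the text
MIN_SIZE = 25      # pixels, as stated in the text


def is_valid_rectangle(x, y, w, h, image_size=IMAGE_SIZE, min_size=MIN_SIZE):
    """Reject rectangles that are too small or fall outside the image."""
    if w < min_size or h < min_size:
        return False
    if x < 0 or y < 0:
        return False
    return x + w <= image_size and y + h <= image_size


def format_annotation(path, rectangles):
    """Build one line: path, number of objects, then x y w h per object."""
    parts = [path, str(len(rectangles))]
    for rect in rectangles:
        parts.extend(str(value) for value in rect)
    return " ".join(parts)


rect = (569, 457, 140, 365)
if is_valid_rectangle(*rect):
    line = format_annotation("satellite/gray/pitch_tennis_100027097.png", [rect])
    print(line)
    # satellite/gray/pitch_tennis_100027097.png 1 569 457 140 365
```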

In order to convert from earth coordinates to image coordinates we used the following Python method:

import math

image_width = 1280   # pixels (size of the downloaded images)
image_height = 1280  # pixels

def get_rectangle(bounds):
    # bounds = [min_lat, min_lon, max_lat, max_lon] of the feature.
    # This converts a latitude/longitude delta into an image delta.
    # For USA, at zoom level 19, we know that we have 0.21
    # meters/pixel. So, an image is showing
    # about 1280 pixels * 0.21 meters/pixel = 268.8 meters.
    # On the other hand we know that at the same angle,
    # one degree in latlon is
    # (https://en.wikipedia.org/wiki/Latitude):
    # latitude = 111,132 m
    # longitude = 78,847 m
    latitude_factor = 111132.0 / 0.21
    longitude_factor = 78847.0 / 0.21

    # Feature size in pixels
    feature_width = longitude_factor * math.fabs(bounds[1] - bounds[3])
    feature_height = latitude_factor * math.fabs(bounds[0] - bounds[2])

    # CV params (int required); the feature is centered in the image
    x = int((image_width / 2) - (feature_width / 2))
    y = int((image_height / 2) - (feature_height / 2))
    w = int(feature_width)
    h = int(feature_height)
    return x, y, w, h

The correspondence between meters and pixel is shown in the following table (courtesy of Bruno Sánchez-Andrade Nuño):

Meters per pixel, by zoom level (rows) and latitude in degrees (columns):

| Zoom level | 0 | 15 | 30 | 45 | 60 | 75 |
|---|---|---|---|---|---|---|
| 15 | 4.78 | 4.61 | 4.14 | 3.38 | 2.39 | 1.24 |
| 16 | 2.39 | 2.31 | 2.07 | 1.69 | 1.19 | 0.62 |
| 17 | 1.19 | 1.15 | 1.03 | 0.84 | 0.60 | 0.31 |
| 18 | 0.60 | 0.58 | 0.52 | 0.42 | 0.30 | 0.15 |
| 19 | 0.30 | 0.29 | 0.26 | 0.21 | 0.15 | 0.08 |
| 20 | 0.15 | 0.14 | 0.13 | 0.11 | 0.07 | 0.04 |
| Example regions | Equator | Central America, India, North Australia, South Brazil | Mexico, South US, South Australia, South Africa | New Zealand, North US, most Europe, Tip of Argentina/Chile | Russia, North Europe, Canada | Polar bears |
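The values in this table are consistent with the usual Web Mercator ground resolution formula for 256-pixel tiles, which is an assumption about how the table was derived rather than something stated in the source. A minimal sketch (the function and constant names are hypothetical):

```python
import math

# Ground resolution at the equator for zoom level 0 with 256-pixel tiles.
EQUATOR_RESOLUTION_ZOOM0 = 156543.03392  # meters per pixel


def meters_per_pixel(latitude_deg, zoom):
    """Approximate Web Mercator ground resolution at a latitude and zoom."""
    scale = math.cos(math.radians(latitude_deg))
    return EQUATOR_RESOLUTION_ZOOM0 * scale / (2 ** zoom)


for zoom in range(15, 21):
    row = [round(meters_per_pixel(lat, zoom), 2) for lat in (0, 15, 30, 45, 60, 75)]
    print(zoom, row)
```

The resolution halves with every zoom level and shrinks with the cosine of the latitude, which is why a single value (0.21 meters/pixel at zoom 19 and latitude 45) is only an approximation across the whole US.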

A training process is incomplete if we don’t have “negatives”, that is, images used as bad examples where no features are present. To solve this we built the following script:

python 06_get_negatives.py [-h] [--count COUNT]

optional arguments:

-h, --help show this help message and exit

--count COUNT The total number of negative images to download.

We can use it like:

$ python 06_get_negatives.py --count 1000

It basically loads some random locations with actual pitches, but moves the location by a random amount to get the features out of the image:

target_lat = element.get('lat') + (random.random() - 0.5)

target_lon = element.get('lon') + (random.random() - 0.5)
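The two lines above shift each coordinate by up to half a degree in either direction. The sketch below wraps that idea in a function and adds a guard against shifts so small that the pitch could still be visible. The guard, its threshold, and the function name are hypothetical additions for illustration; they are not part of 06_get_negatives.py.

```python
import random


def negative_location(lat, lon, min_offset=0.01, rng=random):
    """Shift (lat, lon) by up to +/- 0.5 degrees on each axis.

    Resamples when both offsets are below min_offset degrees, so the
    original feature is unlikely to remain inside the image.
    """
    while True:
        d_lat = rng.random() - 0.5
        d_lon = rng.random() - 0.5
        if abs(d_lat) >= min_offset or abs(d_lon) >= min_offset:
            return lat + d_lat, lon + d_lon


# Seeded generator so the example is repeatable; coordinates are illustrative.
rng = random.Random(0)
target_lat, target_lon = negative_location(48.388, -123.688, rng=rng)
print(target_lat, target_lon)
```

A shifted location could still contain a different pitch by chance, so negatives obtained this way are not guaranteed to be empty.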

Finally, we just need to put them all in one file with the following format:

satellite/negative/negative_1000200409.png

satellite/negative/negative_1001251446.png

satellite/negative/negative_1001532469.png

satellite/negative/negative_1001687068.png

satellite/negative/negative_1004891593.png

satellite/negative/negative_1006295843.png

satellite/negative/negative_1009904689.png

satellite/negative/negative_1011863337.png

...

In this case we don’t need to specify a bounding rectangle, as these are images with no features in them.

We are finally equipped to use OpenCV’s tools to train the cascade classifier. First we need to create a .vec file using opencv_createsamples:

$ opencv_createsamples -info info_tennis.dat -num 10000 -vec info_tennis.vec

This would create the .vec file using 10,000 samples. And then, we can do the actual training with opencv_traincascade:

$ opencv_traincascade -data output -vec info_tennis.vec -bg negative.txt -numPos 2000 -numNeg 1000

This instructs the tool to use 2,000 positive images and 1,000 negative images (the default), and to write the result in the output folder.

This is by far the most computing intensive step of the process. We ran it for different positive/negative values and the total time for the virtual machine ranged from a few hours (for about 2,000 positive images, default) to 5 days (for 8,000 positive images).

We now have a trained classifier in the form of an XML file that OpenCV can use to detect features in our images (we provide the resulting XML in the repository). Its usage can be as simple as:

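import cv2

# Load the trained cascade (the XML file produced by opencv_traincascade).
tennis_cascade_file = 'output/cascade-8000-4000.xml'
tennis_cascade = cv2.CascadeClassifier(tennis_cascade_file)

# Read the satellite image as grayscale (flag 0).
img = cv2.imread(filename, 0)

# Each detection is an (x, y, w, h) rectangle in pixel coordinates.
pitches = tennis_cascade.detectMultiScale(
    img, minNeighbors=min_neighbors)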

Here filename represents the satellite image we want to analyze, and pitches contains the location (if detected) of the feature in the image.

However, the cascade classifier has different parameters that will affect the result of the classification. A key parameter is minNeighbors, a parameter specifying how many neighbors each candidate rectangle should have to retain it.

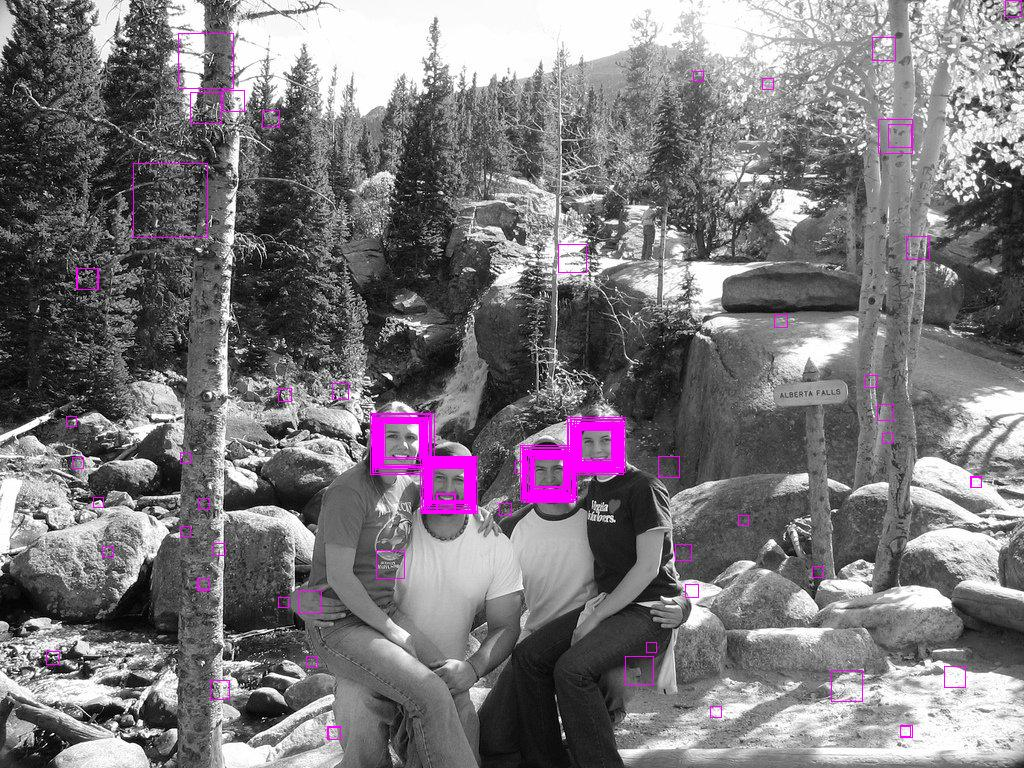

For example[7], if we were using this methodology to identify faces in a picture, and we set the minNeighbors value as zero, we would get too many false positives:

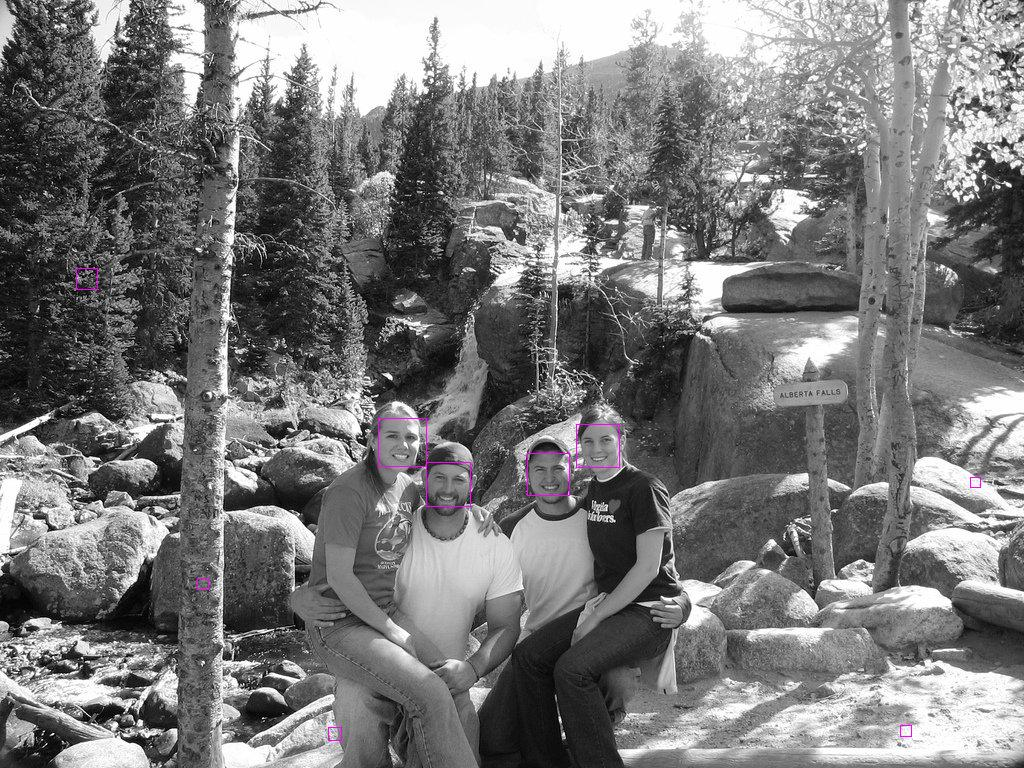

A larger value of minNeighbors will bring a better result:

This is why, at this point, we spent some time finding the value that gives us the best results. A common way of approaching this is to define the following outcomes:

- True positive: “There is a tennis court, and we found one.”

- False positive: “There is no tennis court, and we found one.”

- True negative: “There is no tennis court, and we found none.”

- False negative: “There is a tennis court, and we found none.”

In general, we are interested in maximizing true positives and true negatives, and minimizing false positives and false negatives. It is up to us to decide to what extent we want to do this. For example, in an algorithm to detect brain tumors we might want to focus on minimizing false negatives (missing an existing tumor), while in a crowdsourced system (as this one could be) it is better to tolerate false positives, because they are easy for a human to dismiss.
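The four outcomes can be written down at the image level as a small function. This is a sketch with hypothetical names; it assumes an image counts as a detection when the classifier returns at least one rectangle.

```python
from collections import Counter


def classify_outcome(has_court, detections):
    """Label one image given the ground truth and the number of detections."""
    found = detections > 0
    if has_court and found:
        return "true positive"
    if not has_court and found:
        return "false positive"
    if not has_court and not found:
        return "true negative"
    return "false negative"


# (has_court, number of rectangles detected); toy values for illustration.
samples = [(True, 2), (True, 0), (False, 0), (False, 1), (True, 1)]
outcomes = Counter(classify_outcome(has, n) for has, n in samples)
print(dict(outcomes))
```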

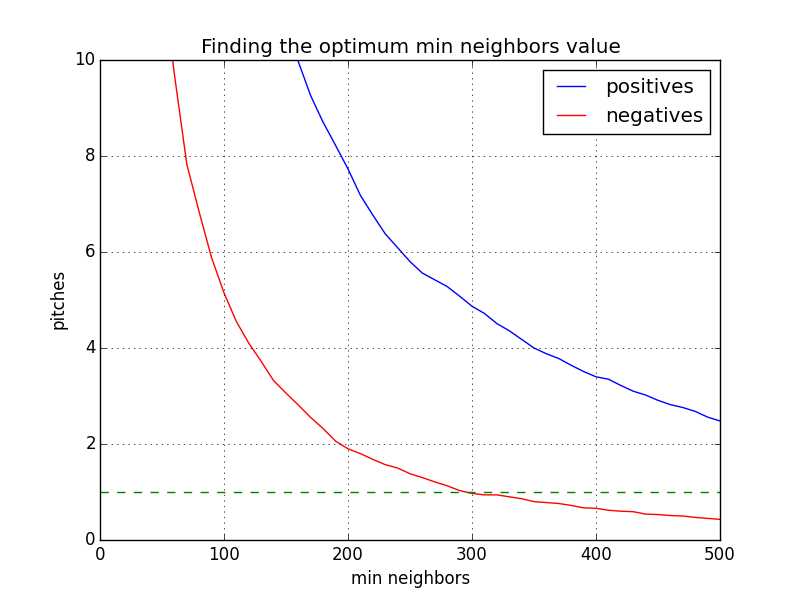

Knowing this, we obtained 100 random images with tennis courts in them and 100 random images with no tennis courts, and we used our model to check for the presence or absence of a tennis court in them, calculating in every case the total number of courts identified. This is done by 07_fit_min_neighbors.py and 08_plot_fit.py, and one of the results is the following:

This plot was computed with the classifier trained on 4,000 positive images and 2,000 negative images. The red line indicates the number of pitches detected in negative images as we increase minNeighbors, and the blue line does the same for positive images. The goal is to make sure that the red line falls under the value of one (green dashed line = no pitches identified) while the blue one remains above one. This seems to happen when the value of minNeighbors goes over 300.
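The selection rule described here can be sketched as a scan over the two curves. The function name and the numbers below are made up for illustration, and the sketch assumes each curve stores the average number of detections per image for every minNeighbors value tried.

```python
def first_separating_value(curve_positive, curve_negative, threshold=1.0):
    """Smallest minNeighbors where negatives fall below the threshold
    while positives stay at or above it; None if there is no such value."""
    for min_neighbors in sorted(curve_positive):
        below = curve_negative[min_neighbors] < threshold
        above = curve_positive[min_neighbors] >= threshold
        if below and above:
            return min_neighbors
    return None


# Made-up averages, NOT the measurements from the report.
positive = {100: 6.2, 200: 3.1, 300: 1.8, 400: 1.3, 500: 1.1}
negative = {100: 4.0, 200: 1.9, 300: 1.1, 400: 0.7, 500: 0.4}
best = first_separating_value(positive, negative)
print(best)
```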

In fact, for a minNeighbors value of 500, we obtain:

- Percentage of true positives = 73.0%

- Percentage of true negatives = 82.0%

Which seems to indicate we are going in the right direction. Further analysis would require computing precision (how accurate our positive predictions are) and recall (what fraction of the positives our model identified).
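As a rough indication of what those values would look like, the sketch below derives precision and recall from the two percentages above. It assumes they refer to the 100 positive and 100 negative test images mentioned earlier, so the counts are inferred rather than reported; the function name is hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / float(tp + fp) if (tp + fp) else 0.0
    recall = tp / float(tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Inferred counts, assuming 100 positive and 100 negative test images.
tp, fn = 73, 27  # 73.0% of positive images detected
tn, fp = 82, 18  # 82.0% of negative images correctly left empty

precision, recall = precision_recall(tp, fp, fn)
print("precision = {:.3f}, recall = {:.3f}".format(precision, recall))
# precision = 0.802, recall = 0.730
```

Under that assumption, roughly four out of five images flagged by the model would actually contain a court.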

Step 9: Visualizing results

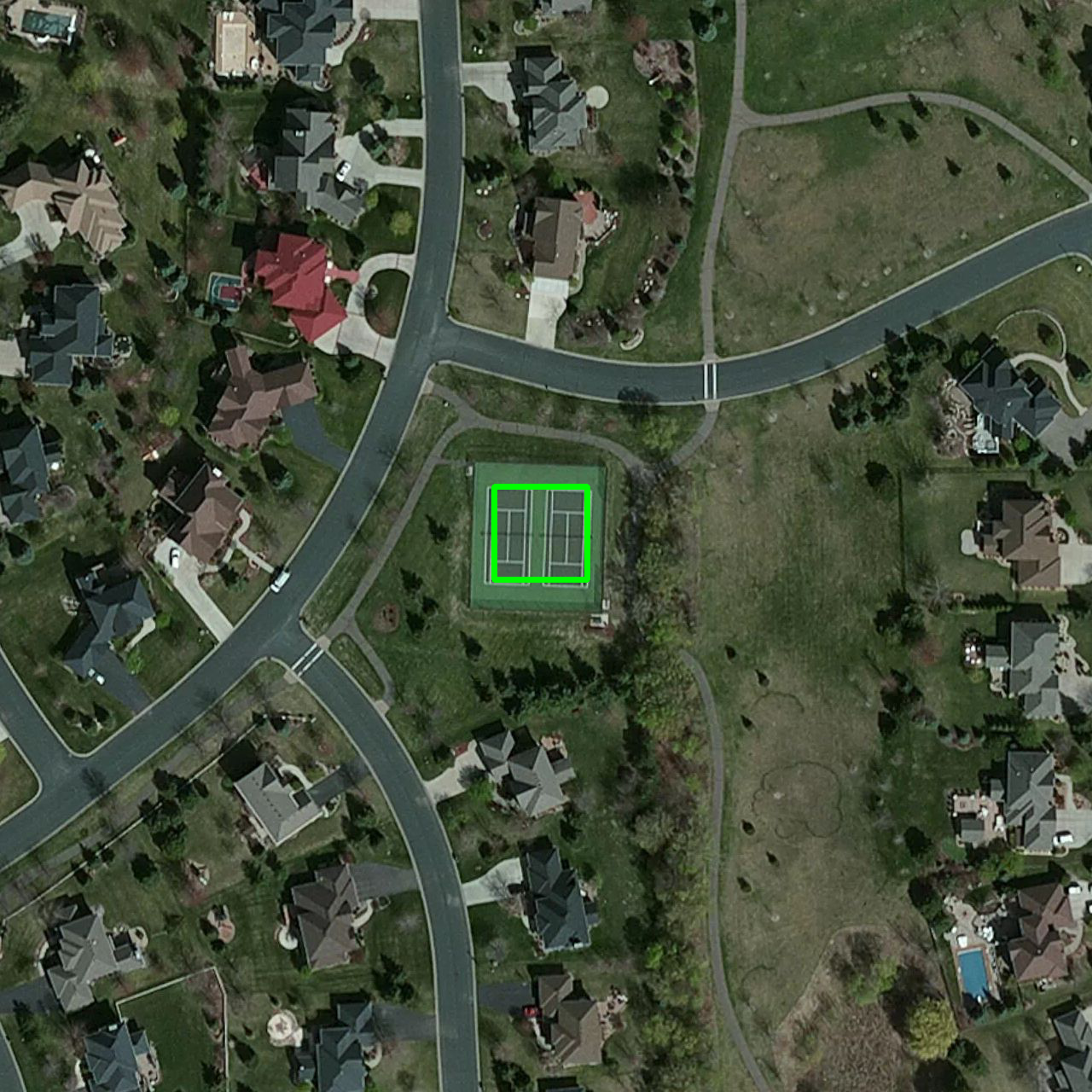

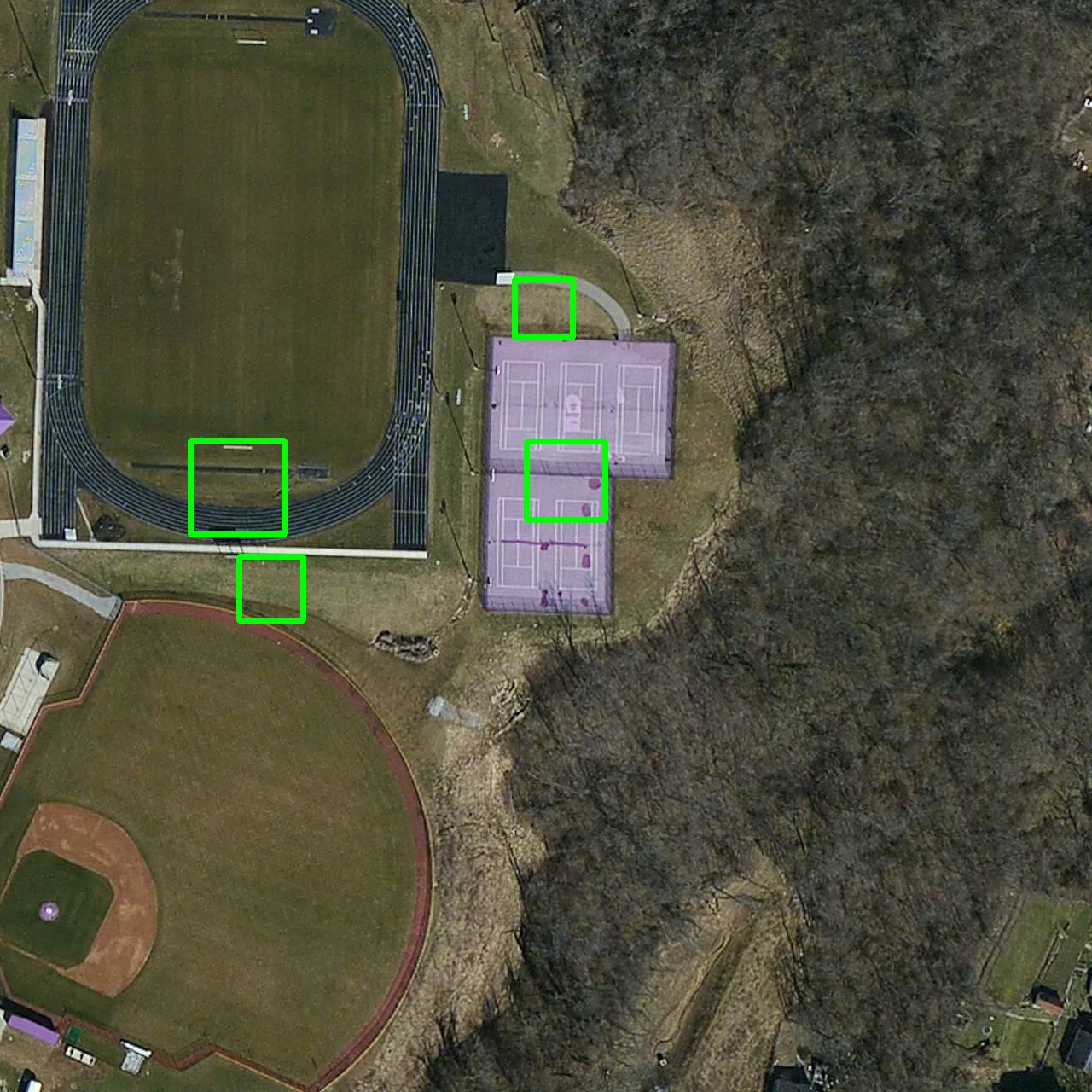

Finally, we have built 09_draw_results.py to visualize the predictions of our model. These are some actual results on images not included in the original training set:

True positive:

False positive:

True negative:

False negative:

As we have shown so far, the cascade classifier produces solid results on our training dataset. However, not all features in the world are as identifiable as a tennis court (a specific type of building, for example), or as available in the OSM database (low-interest objects, or very recent ones after a natural disaster, for example).

Because of these challenges, there are a number of improvements that still could be explored. Namely:

- Train the model with a larger dataset: We have used thousands of images to train the system. While this is not a small number, it is far from being “big data.” In the next section we describe how to scale this process to cluster-size processing, which would make the number of images we are capable of analyzing virtually limitless.

- Test the model with a different OSM feature: We have tried one particular feature but, as usual, we only learn how to generalize a method when we have tried a set of them. Applying this methodology to other OSM features would likely improve the overall fitting.

- Correct the bounding box orientation: In the images, the tennis courts are rarely all aligned in the same way. Using the bounding box to rotate all features to the same alignment would help algorithms where this is important (unlike the local feature detectors described below).

- Better features: We are using the bounding box to define the object we want to identify. However, for more complex elements we might want to be more precise than that. This might require manual editing of every training image, but the better the training set, the better the results.

- Other algorithms: We have used opencv_traincascade, which supports both Haar and LBP (Local Binary Patterns) features, but these are not the only ones. Two specific detectors that could be of use in this case are SIFT[8] and SURF[9], both local feature detectors. These detectors improve extraction from the training image even under changes in image scale, noise and illumination, which are likely to happen in satellite imagery.

In the previous section, we mentioned that one obvious improvement to our machine learning flow is to substantially increase the number of images used for training, or as Herman Narula[10] says: “the cool stuff only happens at scale”. In order to do this, we need a tool like Apache Spark to handle the extra load. Simply relying on a more powerful virtual machine would not be enough, for practical reasons.

In the following pages we show how to deploy a Spark cluster in Amazon’s cloud, and how to run a simple MapReduce-style job. This is a cost-efficient way of running Spark and a popular solution among startups. Other possibilities include platform as a service (PaaS) offerings like Databricks[11] (founded by the creators of Spark), or IBM Bluemix[12]. This last approach is not covered in this document.

Apache Spark is a fast and general cluster computing system for big data that brings considerable performance improvements over existing tools. It was developed by the AMPLab[13] at UC Berkeley and, unlike Hadoop[14], “Spark's in-memory primitives provide performance up to 100 times faster for certain applications. By allowing user programs to load data into a cluster's memory and query it repeatedly, Spark is well suited to machine learning algorithms.”

Technically, from the official documentation:

“Spark is a fast and general-purpose cluster computing system. It provides high-level APIs in Java, Scala and Python, and an optimized engine that supports general execution graphs. It also supports a rich set of higher-level tools including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming.”

According to Matei Zaharia, Spark inventor and MIT professor, Spark is one of the most active and fastest growing open source big data cluster computing projects[15]. Spark is supported by both Amazon’s and Google’s clouds, and it is starting to have strong industry support[16].

We can install Spark on our laptop (or desktop computer) by downloading one of the binaries available on the download page[17]. Spark runs on both Windows and UNIX-like systems (e.g. Linux, Mac OS). The only requirement is to have an installation of Java[18] 6+ on your computer (Spark is written in Scala and runs on the Java Virtual Machine). We also need a Python[19] 2.7+ interpreter in order to run the scripts shown in the following sections.

Follow these steps:

- Head to the downloads page.

- In “Choose a Spark release” choose the latest version available.

- In “Choose a package type” choose a “pre-built for Hadoop” version.

- Leave “Choose a download type” on its default value.

- Click on the “Download Spark” link and download the actual file.

Once you’ve downloaded the package, simply unpack it:

$ cd /your/target/folder

$ tar zxf spark-1.3.1-bin-hadoop2.4.tgz

$ cd spark-1.3.1-bin-hadoop2.4

You can verify that Spark is installed correctly by running a sample application[20]:

$ ./bin/spark-submit examples/src/main/python/pi.py 10

You will see a long list of logging statements, and an output like the following:

Pi is roughly 3.142360
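The sample application estimates pi by Monte Carlo sampling, expressed as a map step followed by a reduce step. The same shape can be sketched in plain Python without Spark; this is an illustration of the pattern with hypothetical function names, not the code of pi.py, and it runs on a single machine.

```python
import random
from functools import reduce
from operator import add


def inside_unit_circle(_, rng):
    """Map step: 1 if a random point in the square lands in the circle."""
    x = rng.random() * 2 - 1
    y = rng.random() * 2 - 1
    return 1 if x * x + y * y <= 1 else 0


def estimate_pi(samples, seed=None):
    """Reduce step: sum the hits and scale by the area ratio (pi / 4)."""
    rng = random.Random(seed)
    hits = reduce(add, map(lambda i: inside_unit_circle(i, rng), range(samples)), 0)
    return 4.0 * hits / samples


print("Pi is roughly %f" % estimate_pi(100000, seed=42))
```

In Spark the map step is distributed across the workers of the cluster and only the partial sums travel back to the driver, which is what makes the pattern scale.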

Although, as we can see, Spark can be run on a laptop or desktop computer (which is useful to prototype or quickly explore a dataset), its full potential comes when it is run as part of a distributed cluster. Let’s now see a couple of ways to run Spark on Amazon AWS (for real-world data analysis).

The official Spark distribution includes a script[21] that simplifies the setup of Spark Clusters on EC2. This script uses Boto[22] behind the scenes so you might want to set up your boto.cfg file so that you don’t have to type your aws_access_key_id and aws_secret_access_key every time.

Let’s assume that we have created a new keypair (ec2-keypair) with the AWS Console, and that we have saved it in a file (ec2-keypair.pem) with the right permissions (chmod 600). We are now going to create a 10-machine cluster called worldbank-cluster (please note that this will incur some costs):

$ ./ec2/spark-ec2 \

--key-pair=ec2-keypair \

--identity-file=ec2-keypair.pem \

--slaves=10 \

launch worldbank-cluster

Once the cluster is created (it will take a few minutes), we can login (via SSH) to our brand new cluster with the following command:

$ ./ec2/spark-ec2 \

--key-pair=ec2-keypair \

--identity-file=ec2-keypair.pem \

login worldbank-cluster

And we can run the same example code (or any other Spark application) with:

$ cd spark

$ ./bin/spark-submit examples/src/main/python/pi.py 10

(Spark comes pre-installed[23] on /root/spark.)

Remember to destroy the cluster once you’re done with it:

$ ./ec2/spark-ec2 \

--delete-groups \

destroy worldbank-cluster

You could also stop (./ec2/spark-ec2 stop worldbank-cluster) and start (./ec2/spark-ec2 start worldbank-cluster) the cluster without having to destroy it.

Running Spark on AWS EMR (Elastic MapReduce)[24]

Because Spark is compatible with Apache Hadoop[25], we can use EMR as our Spark cluster for data processing. We are going to use the AWS command line interface[26] to manage this new EMR cluster, and we assume you have it installed.

Before creating the cluster, make sure that you have created the default roles (this is a one-time setup command):

$ aws emr create-default-roles

Then, you can create the cluster with this command (again called worldbank-cluster with 10 machines).

$ aws emr create-cluster \

--name worldbank-cluster \

--ami-version 3.7.0 \

--instance-type m3.xlarge \

--instance-count 10 \

--ec2-attributes KeyName=ec2-keypair \

--applications Name=Hive \

--use-default-roles \

--bootstrap-actions \

Path=s3://support.elasticmapreduce/spark/install-spark

Make sure you take note of the cluster ID (for example, j-1A2BCD34EFG5H). Again, take into account that this could incur Amazon AWS costs.

If you are already familiar with AWS and AWS EMR, you will notice that this is a pretty standard setup. The big difference is in the last line, which includes the S3 location for the Spark installation bootstrap action.

You can then perform the usual tasks on EMR clusters, like describe:

$ aws emr describe-cluster \

--cluster-id j-1A2BCD34EFG5H

or list instances:

$ aws emr list-instances \

--cluster-id j-1A2BCD34EFG5H

Once the EMR cluster has been created (it will take a few minutes), you can login via SSH, like we did in the previous section:

$ aws emr ssh \

--cluster-id j-1A2BCD34EFG5H \

--key-pair-file ec2-keypair.pem

And execute your Spark application with:

$ cd spark

$ ./bin/spark-submit examples/src/main/python/pi.py 10

(Spark comes pre-installed on /home/hadoop.)

Finally, you can terminate the cluster with:

$ aws emr terminate-clusters \

--cluster-id j-1A2BCD34EFG5H

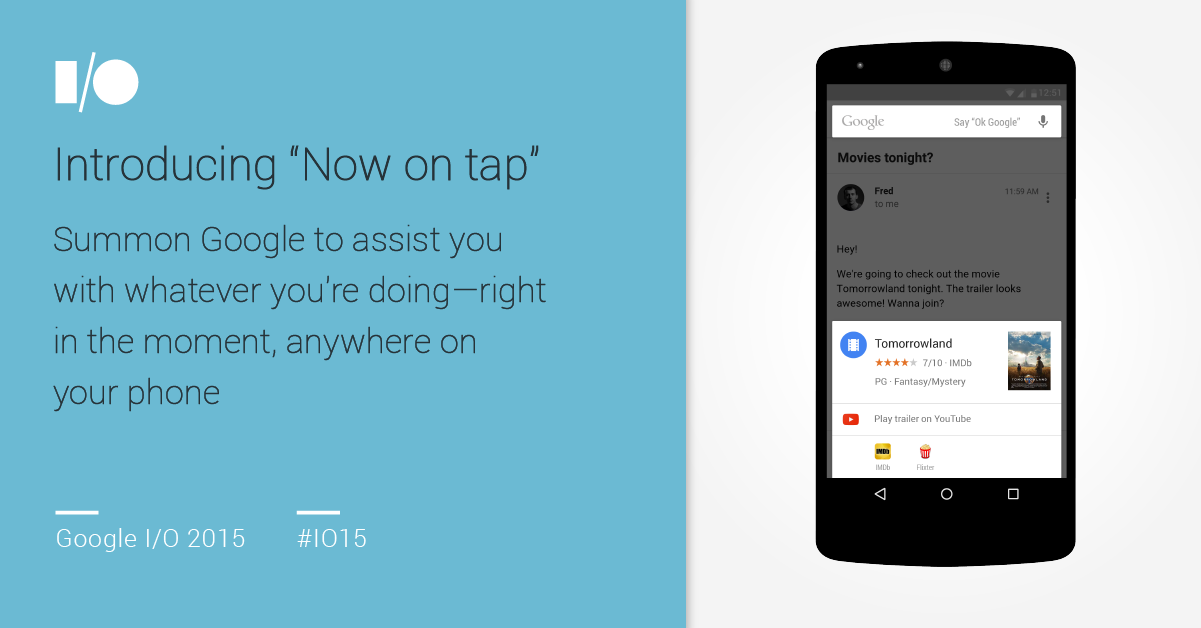

During the time of this assignment, Google held its annual developer conference, Google I/O 2015, where machine learning related topics were at the core of the new products and development. This section summarizes the current status of tools on Google’s Cloud to support machine learning processes and some of the announcements made during the conference.

During the conference keynote, machine learning was mentioned directly (“machine learning”) or indirectly (“computer vision”, “natural language processing”, “deep neural networks”) about a dozen times. This can be visualized in the following word cloud that we have built[27] where the terms “machine” and “learning” are highlighted:

The two main products benefitting from recent machine learning developments at Google, as stated during the keynote, are:

- Google Photos[28]. This new service is now available, and its search input is the main point of access for computer vision and machine learning functionality. Users can search by person, or image content, for example.

- Google Now and Google Now on Tap[29]. This new product will be present in the future version of Android M, still unpublished.

Source: Google

Unfortunately, Google is not releasing much information about the actual technologies they are using in their machine learning efforts. The exception might be deep neural networks. This is a quote from Sundar Pichai, Senior Vice President at Google for Android, Chrome, and Google Apps, during the keynote:

“You know, in this query, what looked like a simple query, we understood voice, we did natural language processing, we are doing image recognition, and, finally, translation, and making it all work in an instant. The reason we are able to do all of this is thanks to the investments we have made in machine learning. Machine learning is what helps us answer the question, what does a tree frog look like, from millions of images around the world. You know, the computers can go through a lot of data and understand patterns. It turns out the tree frog is actually the third picture there. The reason we are able to do that so much better in the last few years is thanks to an advance in the technology called deep neural nets. Deep neural nets are a hierarchical, layered learning system. So we learn in layers. The first layer can understand lines and edges and shadows and shapes. A second layer may understand things like ears, legs, hands, and so on. And the final layer understands the entire image. We have the best investment in machine learning over the past many years, and we believe we have the best capability in the world. Our current deep neural nets are over 30 layers deep. It is what helps us when you speak to Google, our word error rate has dropped from a 23% to 8% in just over a year and that progress is due to our investment in machine learning.”

Artificial neural networks are a well-known architecture in deep learning[30].

Deep neural networks during Google I/O 2015 keynote[31]

Unfortunately, the new products announced by Google are not immediately accompanied by new developer tools that we could benefit from to create advanced machine learning processing in an easier way, leveraging Google’s infrastructure.

We had conversations with Google engineers and Google Developer Experts and these are a few items we learned:

- No immediate announcements regarding new machine learning tools, related or not to the new Google Now on Tap product.

- No immediate announcements regarding new computer vision tools related to the new Google Photos product.

- The Prediction API remains the recommended tool for machine learning in the cloud with a 99.9% availability service level agreement.

- The Prediction API is better suited for numeric or text input, and can output hundreds of discrete categories or continuous values. It is unclear which algorithms Google uses to generate the predictions.

- Computer Vision analysis requires custom tools on top of Google Compute Engine (the equivalent of Amazon’s EC2). Preemptible VMs (instances that might be terminated, or preempted, at any moment) remain the best option for affordable computing and are a common solution.

- Geographic analysis requires custom tools on top of Google Compute Engine, as Cloud SQL has no support for PostgreSQL (and therefore, PostGIS). The Google Maps API for Work product is better suited for visualization than analysis. Internally, Google seems to solve this problem with Spanner[32].

- An alternative to GCE is the Container Engine, which allows running Docker containers on Google Cloud Platform, powered by Kubernetes[33]. Google Container Engine schedules containers, based on declared needs, on a managed cluster of virtual machines.

Finally, and in order to be comprehensive, these are the current tools that Google provides for machine learning and big data as part of their cloud offering:

- BigQuery: Analyzes Big Data in the cloud. It runs fast, SQL-like queries against multi-terabyte datasets in seconds and gives real-time insights about data.

- Dataflow (beta): Builds, deploys, and runs data processing pipelines that scale to solve key business challenges. It enables reliable execution for large-scale data processing scenarios such as ETL, analytics, real-time computation, and process orchestration.

- Pub/Sub (beta): Connects services with reliable, many-to-many, asynchronous messaging hosted on Google's infrastructure. Cloud Pub/Sub automatically scales as needed and provides a foundation for building global services.

- Prediction API: Uses Google’s machine learning algorithms to analyze data and predict future outcomes using a RESTful interface.

If there is any interest in trying any of these technologies, we can provide a $500 discount code that was shared with attendees. To redeem it, please follow these instructions (this offer must be claimed by June 15th):

- Go to http://g.co/CloudStarterCredit

- Click Apply Now

- Complete the form with code: GCP15

Finally, we present a few references that can help the reader deepen their knowledge of all the areas mentioned in this report.

We have set up a GitHub repository[34] for the code of this project:

- Machine Learning for Dev: https://github.com/worldbank/ml4dev

These are a few good books that expand on the tools and methodologies that we’ve used throughout this document:

- Data Science from Scratch: First Principles with Python, by Joel Grus. Publisher: O'Reilly Media (release date: April 2015).

- Learning Spark: Lightning-Fast Big Data Analysis, by Holden Karau, Andy Konwinski, Patrick Wendell, Matei Zaharia. Publisher: O'Reilly Media (release date: January 2015).

- Advanced Analytics with Spark: Patterns for Learning from Data at Scale, by Sandy Ryza, Uri Laserson, Sean Owen, Josh Wills. Publisher: O'Reilly Media (release date: April 2015).

- Apache Spark: Fast and general engine for large-scale data processing.

- Mahotas: Computer Vision in Python.

- OpenCV (Open Source Computer Vision Library): An open source computer vision and machine learning software library.

- scikit-learn: Machine Learning in Python.

[1] https://en.wikipedia.org/wiki/Machine_learning

[3] This process is described in detail here: http://docs.opencv.org/doc/user_guide/ug_traincascade.html

[4] https://www.mapbox.com/commercial-satellite/

[5] http://wiki.openstreetmap.org/wiki/Overpass_API

[6] https://www.flickr.com/places/info/24875662

[7] OpenCV detectMultiScale() minNeighbors parameter: http://stackoverflow.com/questions/22249579/opencv-detectmultiscale-minneighbors-parameter

[8] http://opencv-python-tutroals.readthedocs.org/en/latest/py_tutorials/py_feature2d/py_sift_intro/py_sift_intro.html

[9] http://opencv-python-tutroals.readthedocs.org/en/latest/py_tutorials/py_feature2d/py_surf_intro/py_surf_intro.html

[12] https://developer.ibm.com/bluemix/

[13] Algorithms, Machines, and People Lab: https://amplab.cs.berkeley.edu

[14] https://en.wikipedia.org/wiki/Apache_Spark

[15] “Andreessen Horowitz Podcast: A Conversation with the Inventor of Spark”: http://a16z.com/2015/06/24/a16z-podcast-a-conversation-with-the-inventor-of-spark/

[16] “IBM Pours Researchers And Resources Into Apache Spark Project”: http://techcrunch.com/2015/06/15/ibm-pours-researchers-and-resources-into-apache-spark-project/

[17] https://spark.apache.org/downloads.html

[18] https://java.com/en/download/

[19] https://www.python.org/downloads/

[20] https://github.com/apache/spark/blob/master/examples/src/main/python/pi.py

[21] https://spark.apache.org/docs/latest/ec2-scripts.html

[22] http://aws.amazon.com/sdk-for-python/

[23] Together with ephemeral-hdfs, hadoop-native, mapreduce, persistent-hdfs, scala, and tachyon.

[24] After this section was written, Amazon announced improved support for Apache Spark on EMR. We can now create an Amazon EMR cluster with Apache Spark from the AWS Management Console, AWS CLI, or SDK by choosing AMI 3.8.0 and adding Spark as an application. Amazon EMR currently supports Spark version 1.3.1 and utilizes Hadoop YARN as the cluster manager. To submit applications to Spark on your Amazon EMR cluster, you can add Spark steps with the Step API or interact directly with the Spark API on your cluster's master node.

[25] In fact, Spark can run in Hadoop clusters through YARN or Spark's standalone mode, and it can process data in HDFS, HBase, Cassandra, Hive, and any Hadoop InputFormat.

[26] http://aws.amazon.com/cli/

[29] http://arstechnica.com/gadgets/2015/05/android-ms-google-now-on-tap-shows-contextual-info-at-the-press-of-a-button/

[30] http://en.wikipedia.org/wiki/Deep_learning

[31] Photo courtesy of Allen Firstenberg, Google Developer Expert: https://plus.google.com/101852559274654726533/posts/Fp6Zz2fVmVz

[32] http://research.google.com/archive/spanner.html

[34] Contact Antonio Zugaldia <antonio@zugaldia.net> if you need access.
