In this lesson, we'll learn about Sequence Models, and what makes them different from traditional Multi-Layer Perceptrons. We'll also examine some of the common things Sequence Models can be used for!

You will be able to:

- Identify the various types of problems sequence models can solve, such as text classification, text generation, and sequence-to-sequence tasks like machine translation

- Compare and contrast Sequence Models with traditional MLP and CNN architectures

A Sequence Model is a general term for a special class of Deep Neural Networks that take a sequence of data as input. A sequence is any set of data where we want the model to consider the data one point at a time, in order. This makes these models a great fit for problems where the order of the data matters, such as stock price data or text. In both cases, the data only makes sense in order: scrambling the words in a sentence destroys the meaning of the sentence, and it's much harder to predict whether a stock price is going to go up or down if we don't see the prices in sequential order.
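To make the point about word order concrete, here is a minimal sketch of an order-blind representation. The `bag_of_words` helper and the two example sentences are made up for illustration; they are not part of the lesson's labs. The helper only counts words, so it cannot tell a sentence from a scrambled version of itself.

```python
from collections import Counter


def bag_of_words(sentence):
    """Count how often each word appears, ignoring word order."""
    return Counter(sentence.lower().split())


# Two hypothetical sentences that use the same words in a different order.
original = "the dog bit the man"
scrambled = "the man bit the dog"

# As sequences, the two sentences are different ...
print(original.split() == scrambled.split())  # False

# ... but their word counts are identical, so the order is lost.
print(bag_of_words(original) == bag_of_words(scrambled))  # True
```

A model that only ever sees the word counts has no way to work out who bit whom, which is exactly the kind of information a sequence model is meant to keep.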

Consider the following problem: You are given the sentence "we are going to" and asked to complete the sentence by generating at least 5 more words. The second word that you choose will depend heavily on the first word that you choose. The third word that you choose will depend heavily on the first and second words that you choose, and so on. Because of this, it is crucial that our models remember the previous words that they generated. In computer science, we call this being stateful. This means that when our model is generating the second word, it needs to know what it generated as the first word! To do this, Recurrent Neural Networks feed their output for timestep t back in as part of the input for timestep t + 1.
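The idea of carrying state from one timestep to the next can be sketched in a few lines of plain Python. This is a simplified illustration, not a trained network: the sizes, the random weights, and the names `rnn_step` and `run_rnn` are all assumptions made for this example, and the hidden state doubles as the cell's output.

```python
import math
import random

rng = random.Random(0)

# Hypothetical sizes chosen only to keep the example small.
input_size, hidden_size = 2, 3


def random_matrix(rows, cols):
    """Random weights; a real network would learn these during training."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


W_x = random_matrix(hidden_size, input_size)   # weights for the current input
W_h = random_matrix(hidden_size, hidden_size)  # weights for the previous state


def rnn_step(x_t, h_prev):
    """Combine the current input with the state from the previous timestep."""
    h_new = []
    for i in range(hidden_size):
        total = sum(W_x[i][j] * x_t[j] for j in range(input_size))
        total += sum(W_h[i][k] * h_prev[k] for k in range(hidden_size))
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(sequence):
    """Process a sequence one item at a time, carrying the state forward."""
    h = [0.0] * hidden_size  # empty state before the first timestep
    for x_t in sequence:
        h = rnn_step(x_t, h)  # the state from step t feeds into step t + 1
    return h


sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
forward = run_rnn(sequence)
backward = run_rnn(list(reversed(sequence)))

print(forward)
print(backward)
```

Because each step depends on the state left behind by the step before it, feeding the same items in reverse order leaves the network in a different final state.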

There are many different kinds of Sequence Models, and they are most generally referred to as Recurrent Neural Networks, or RNNs. In the next lesson, we'll dig into how they work. Let's examine some of the things that RNNs can do!

One of the most common applications of RNNs is plain old text classification. Recall that all the models that we've used so far for text classification have been incapable of focusing on the order of the words, which means that they're likely to miss out on more advanced pieces of information such as connotation, context, sarcasm, etc. However, since RNNs examine the words one at a time and remember what they've seen at each time step, they're able to capture this information quite effectively in most cases! For the final lab of this section, we'll actually build one of these models ourselves, which will be able to detect toxic comments among real-world Wikipedia comments!

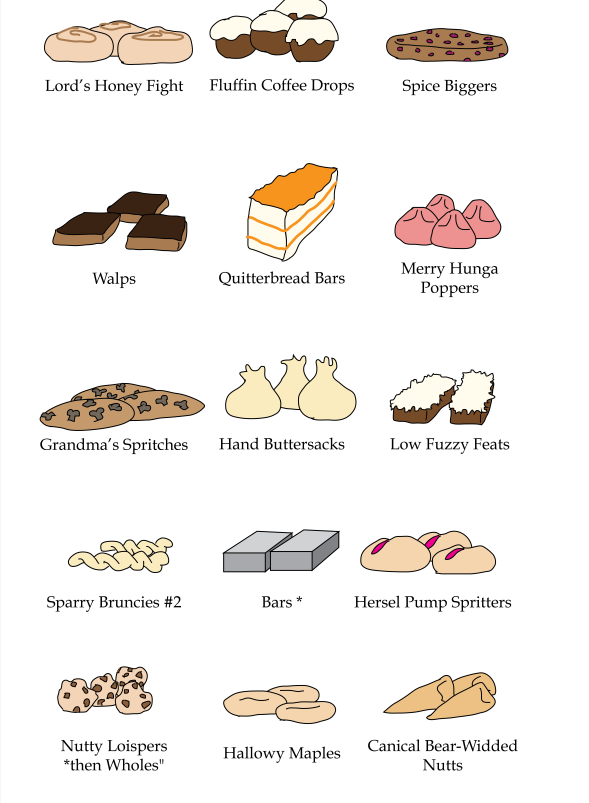

Sequence generation is probably some of the most fun that you can have with Neural Networks, because they excel at coming up with wacky, almost-human sounding names for things when fed the right data. For instance, all of the following cookie names were generated by a model trained on a dataset of actual cookie names from recipes. The model was built to generate its own cookie names letter by letter, based on what it saw in the recipe names. Since the model is responsible for generating its own output one letter at a time, it is a prime example of Sequence Generation!
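Here is a rough sketch of what "letter by letter" generation looks like as a loop. To keep it short and runnable, it swaps the neural network for a simple lookup table of which character tends to follow which, so it only remembers one previous letter where an RNN would carry a richer state. The training names are made up for illustration and are not the dataset behind the cookie names above.

```python
import random
from collections import defaultdict

# Made-up training names for illustration only.
names = ["ginger snaps", "sugar cookies", "almond crisps", "butter fingers"]

START = "^"  # marker for the beginning of a name
END = "$"    # marker that tells the generator to stop

# Record which character follows which in the training names.
# This table is a simple stand-in for a trained network.
next_chars = defaultdict(list)
for name in names:
    previous = START
    for ch in name + END:
        next_chars[previous].append(ch)
        previous = ch


def generate(rng, max_len=20):
    """Build a name one character at a time, feeding each choice back in."""
    out = []
    previous = START
    while len(out) < max_len:
        ch = rng.choice(next_chars[previous])
        if ch == END:
            break
        out.append(ch)
        previous = ch  # the letter just generated drives the next choice
    return "".join(out)


rng = random.Random(42)
for _ in range(3):
    print(generate(rng))
```

The important part is the loop: every generated letter becomes the input for choosing the next one, which is the same feedback pattern a character-level RNN follows.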

If you've ever used Google Translate before, then you've already interacted with a Sequence to Sequence Model. These models learn to map an input sequence to an output sequence, usually through an Encoder-Decoder architecture. You'll see more about encoder-decoder models in a future section, as they're among the most useful types of RNNs currently in production. Note that although going from a sequence of English words to the corresponding sequence of French words is probably the most familiar example of a Sequence to Sequence problem, there are many other Sequence to Sequence problems that aren't immediately obvious. For instance, check out this example of a neural network that completes drawings of a mosquito based on how you start drawing the bug!

Here's another example from pix2pix. Now, stop what you're doing, follow that link, and take a few minutes to play around with pix2pix--watching it generate photos from your own drawings is really cool!

In this lesson, we learned about Sequence Models, and some of their more common use cases.