Automated prompting and scoring framework to evaluate LLMs using updated human knowledge prompts

Install and run:

git clone https://github.com/aigoopy/llm-jeopardy.git

cd llm-jeopardy

npm install

node . --help

The llm-jeopardy framework uses llama.cpp to run the models and GGML model files from Hugging Face. Results have been updated with GGMLv3 models.

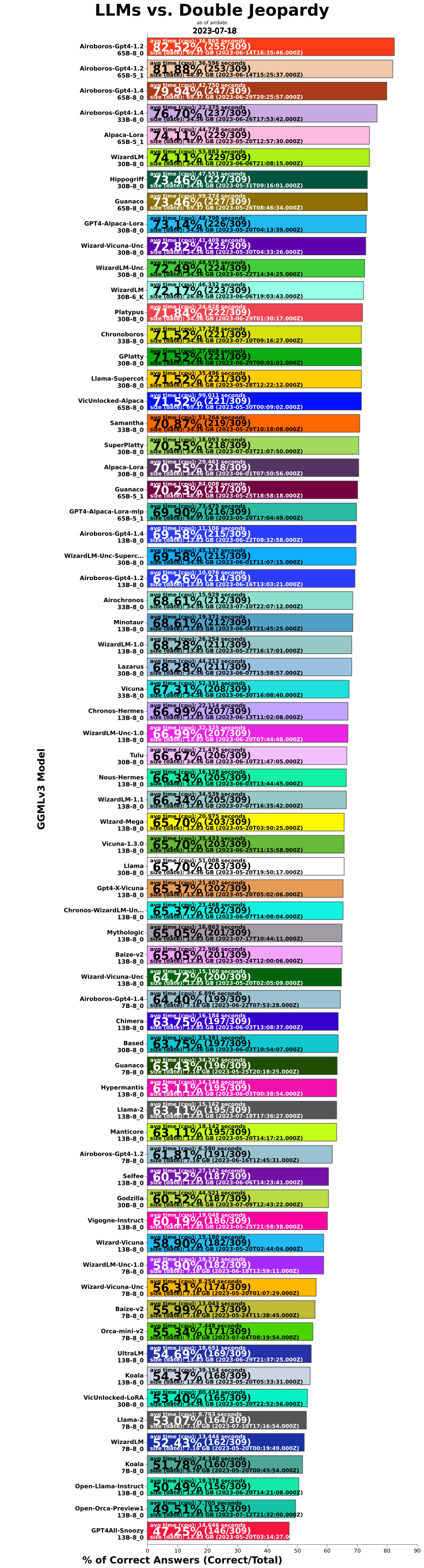

| name | percent | modelcorrect | modeltotal | elapsed | answerlen | msize | mdate |
|---|---|---|---|---|---|---|---|
| Airoboros-Gpt4-1.2 65B-8_0 | 82.52 | 255 | 309 | 36.865 | 12.50 | 69.37 | 2023/06/14 16:35:46 |
| Airoboros-Gpt4-1.2 65B-5_1 | 81.88 | 253 | 309 | 36.596 | 12.64 | 48.97 | 2023/06/14 15:25:37 |
| Airoboros-Gpt4-1.4 65B-8_0 | 79.94 | 247 | 309 | 42.750 | 21.01 | 69.37 | 2023/06/29 20:25:57 |
| Airoboros-Gpt4-1.4 33B-8_0 | 76.70 | 237 | 309 | 22.375 | 18.16 | 34.56 | 2023/06/26 17:53:42 |
| Alpaca-Lora 65B-5_1 | 74.11 | 229 | 309 | 44.778 | 35.37 | 48.97 | 2023/05/20 12:57:30 |
| WizardLM 30B-8_0 | 74.11 | 229 | 309 | 53.883 | 214.90 | 34.56 | 2023/06/06 21:08:15 |
| Hippogriff 30B-8_0 | 73.46 | 227 | 309 | 47.551 | 156.04 | 34.56 | 2023/05/31 09:16:01 |
| Guanaco 65B-8_0 | 73.46 | 227 | 309 | 99.274 | 183.93 | 69.37 | 2023/05/26 08:46:34 |
| GPT4-Alpaca-Lora 30B-8_0 | 73.14 | 226 | 309 | 48.790 | 158.55 | 34.56 | 2023/05/20 04:13:39 |
| Wizard-Vicuna-Unc 30B-8_0 | 72.82 | 225 | 309 | 41.409 | 124.85 | 34.56 | 2023/05/30 04:33:26 |
| WizardLM-Unc 30B-8_0 | 72.49 | 224 | 309 | 48.975 | 161.61 | 34.56 | 2023/05/22 14:34:25 |
| WizardLM 30B-6_K | 72.17 | 223 | 309 | 46.332 | 222.41 | 26.69 | 2023/06/06 19:03:43 |
| Platypus 30B-8_0 | 71.84 | 222 | 309 | 24.628 | 22.94 | 34.56 | 2023/06/29 01:30:17 |
| Chronoboros 33B-8_0 | 71.52 | 221 | 309 | 17.228 | 24.36 | 34.56 | 2023/07/10 09:16:27 |
| GPlatty 30B-8_0 | 71.52 | 221 | 309 | 27.669 | 35.75 | 34.56 | 2023/06/29 00:01:01 |
| Llama-Supercot 30B-8_0 | 71.52 | 221 | 309 | 35.496 | 92.76 | 34.56 | 2023/05/28 12:22:12 |
| VicUnlocked-Alpaca 65B-8_0 | 71.52 | 221 | 309 | 99.011 | 172.73 | 69.37 | 2023/05/30 00:09:02 |
| Samantha 33B-8_0 | 70.87 | 219 | 309 | 51.264 | 194.35 | 34.56 | 2023/05/29 10:18:08 |
| SuperPlatty 30B-8_0 | 70.55 | 218 | 309 | 18.093 | 22.98 | 34.56 | 2023/07/03 21:07:50 |
| Alpaca-Lora 30B-8_0 | 70.55 | 218 | 309 | 29.461 | 58.53 | 34.56 | 2023/06/01 07:50:56 |
| Guanaco 65B-5_1 | 70.23 | 217 | 309 | 84.008 | 187.11 | 48.97 | 2023/05/25 18:58:18 |
| GPT4-Alpaca-Lora-mlp 65B-5_1 | 69.90 | 216 | 309 | 73.475 | 149.61 | 48.97 | 2023/05/20 17:04:49 |
| Airoboros-Gpt4-1.4 13B-8_0 | 69.58 | 215 | 309 | 11.106 | 17.40 | 13.83 | 2023/06/22 08:32:58 |
| WizardLM-Unc-Supercot 30B-8_0 | 69.58 | 215 | 309 | 45.137 | 145.27 | 34.56 | 2023/06/01 11:07:15 |
| Airoboros-Gpt4-1.2 13B-8_0 | 69.26 | 214 | 309 | 10.076 | 12.65 | 13.83 | 2023/06/16 13:03:21 |
| Airochronos 33B-8_0 | 68.61 | 212 | 309 | 15.929 | 17.40 | 34.56 | 2023/07/10 22:07:12 |
| Minotaur 13B-8_0 | 68.61 | 212 | 309 | 19.371 | 169.47 | 13.83 | 2023/06/08 21:45:25 |
| WizardLM-1.0 13B-8_0 | 68.28 | 211 | 309 | 26.254 | 230.25 | 13.83 | 2023/05/27 16:17:01 |
| Lazarus 30B-8_0 | 68.28 | 211 | 309 | 44.213 | 144.72 | 34.56 | 2023/06/07 15:58:57 |
| Vicuna 33B-8_0 | 67.31 | 208 | 309 | 52.331 | 178.79 | 34.56 | 2023/06/30 16:08:40 |
| Chronos-Hermes 13B-8_0 | 66.99 | 207 | 309 | 22.114 | 186.44 | 13.83 | 2023/06/13 11:02:08 |
| WizardLM-Unc-1.0 13B-8_0 | 66.99 | 207 | 309 | 32.325 | 259.04 | 13.83 | 2023/06/20 07:44:48 |
| Tulu 30B-8_0 | 66.67 | 206 | 309 | 21.475 | 19.09 | 34.56 | 2023/06/10 21:47:05 |
| Nous-Hermes 13B-8_0 | 66.34 | 205 | 309 | 16.128 | 102.47 | 13.83 | 2023/06/03 13:44:45 |
| WizardLM-1.1 13B-8_0 | 66.34 | 205 | 309 | 34.539 | 441.67 | 13.83 | 2023/07/07 16:35:42 |
| Wizard-Mega 13B-8_0 | 65.70 | 203 | 309 | 20.975 | 171.84 | 13.83 | 2023/05/20 03:50:25 |
| Vicuna-1.3.0 13B-8_0 | 65.70 | 203 | 309 | 35.433 | 313.52 | 13.83 | 2023/06/25 11:15:58 |
| Llama 30B-8_0 | 65.70 | 203 | 309 | 51.008 | 167.29 | 34.56 | 2023/05/20 19:50:17 |
| Gpt4-X-Vicuna 13B-8_0 | 65.37 | 202 | 309 | 21.407 | 184.04 | 13.83 | 2023/05/20 05:02:06 |
| Chronos-WizardLM-Unc-Sc 13B-8_0 | 65.37 | 202 | 309 | 23.468 | 202.76 | 13.83 | 2023/06/07 14:08:04 |
| Mythologic 13B-8_0 | 65.05 | 201 | 309 | 16.863 | 172.50 | 13.83 | 2023/07/17 10:44:11 |
| Baize-v2 13B-8_0 | 65.05 | 201 | 309 | 22.906 | 186.67 | 13.83 | 2023/05/24 12:00:06 |
| Wizard-Vicuna-Unc 13B-8_0 | 64.72 | 200 | 309 | 15.160 | 93.71 | 13.83 | 2023/05/20 02:05:09 |
| Airoboros-Gpt4-1.4 7B-8_0 | 64.40 | 199 | 309 | 6.896 | 20.88 | 7.16 | 2023/06/22 07:53:28 |
| Chimera 13B-8_0 | 63.75 | 197 | 309 | 16.184 | 118.74 | 13.83 | 2023/06/03 13:08:37 |
| Based 30B-8_0 | 63.75 | 197 | 309 | 23.381 | 35.11 | 34.56 | 2023/06/03 10:54:07 |
| Guanaco 7B-8_0 | 63.43 | 196 | 309 | 34.267 | 701.59 | 7.16 | 2023/05/25 20:18:25 |
| Hypermantis 13B-8_0 | 63.11 | 195 | 309 | 14.144 | 88.72 | 13.83 | 2023/06/03 00:38:54 |
| Llama-2 13B-8_0 | 63.11 | 195 | 309 | 15.162 | 117.56 | 13.83 | 2023/07/18 17:36:27 |
| Manticore 13B-8_0 | 63.11 | 195 | 309 | 18.142 | 133.34 | 13.83 | 2023/05/20 14:17:21 |
| Airoboros-Gpt4-1.2 7B-8_0 | 61.81 | 191 | 309 | 6.580 | 16.73 | 7.16 | 2023/06/16 12:45:31 |
| Selfee 13B-8_0 | 60.52 | 187 | 309 | 27.142 | 182.70 | 13.83 | 2023/06/06 14:23:41 |
| Godzilla 30B-8_0 | 60.52 | 187 | 309 | 44.521 | 219.55 | 34.56 | 2023/07/09 12:43:22 |
| Vigogne-Instruct 13B-8_0 | 60.19 | 186 | 309 | 19.048 | 126.03 | 13.83 | 2023/05/25 21:58:38 |
| Wizard-Vicuna 13B-8_0 | 58.90 | 182 | 309 | 15.180 | 99.68 | 13.83 | 2023/05/20 02:44:04 |
| WizardLM-Unc-1.0 7B-8_0 | 58.90 | 182 | 309 | 18.232 | 280.49 | 7.16 | 2023/06/18 12:59:11 |
| Wizard-Vicuna-Unc 7B-8_0 | 56.31 | 174 | 309 | 8.254 | 80.20 | 7.16 | 2023/05/20 01:07:29 |
| Baize-v2 7B-8_0 | 55.99 | 173 | 309 | 13.043 | 181.94 | 7.16 | 2023/05/24 11:38:45 |
| Orca-mini-v2 7B-8_0 | 55.34 | 171 | 309 | 7.448 | 126.56 | 7.16 | 2023/07/04 08:19:54 |
| UltraLM 13B-8_0 | 54.69 | 169 | 309 | 18.651 | 133.95 | 13.83 | 2023/06/29 21:37:25 |
| Koala 13B-8_0 | 54.37 | 168 | 309 | 39.154 | 423.01 | 13.83 | 2023/05/20 05:33:31 |
| VicUnlocked-LoRA 30B-8_0 | 53.40 | 165 | 309 | 80.434 | 261.73 | 34.56 | 2023/05/20 22:52:56 |
| Llama-2 7B-8_0 | 53.07 | 164 | 309 | 8.793 | 139.08 | 7.16 | 2023/07/18 17:16:54 |
| WizardLM 7B-8_0 | 52.43 | 162 | 309 | 13.444 | 191.54 | 7.16 | 2023/05/20 00:19:49 |
| Koala 7B-8_0 | 51.78 | 160 | 309 | 24.340 | 458.86 | 9.76 | 2023/05/20 00:45:54 |
| Open-Llama-Instruct 13B-8_0 | 50.49 | 156 | 309 | 19.378 | 124.00 | 13.83 | 2023/06/20 14:21:08 |
| Open-Orca-Preview1 13B-8_0 | 49.51 | 153 | 309 | 7.705 | 29.33 | 13.83 | 2023/07/12 21:32:00 |
| GPT4All-Snoozy 13B-8_0 | 47.25 | 146 | 309 | 14.646 | 92.30 | 13.83 | 2023/05/20 03:14:27 |
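The `percent` column equals `100 * modelcorrect / modeltotal`, rounded to two decimals (for example, 255 / 309 gives 82.52). The rows also appear to be ordered by `percent` descending, with ties broken by `elapsed` ascending. The minimal Node.js sketch here reproduces both on a few rows copied from the table. The `percent` and `rank` helpers are hypothetical illustrations, not functions from the llm-jeopardy codebase, and the tie-breaking rule is inferred from the table rather than documented.

```javascript
// Hypothetical helpers (not part of llm-jeopardy): recompute the percent
// column and reproduce the apparent row ordering of the results table.

// percent = 100 * modelcorrect / modeltotal, rounded to two decimals.
function percent(row) {
  return Math.round((10000 * row.modelcorrect) / row.modeltotal) / 100;
}

// Sort by percent descending; on a tie, sort by elapsed ascending
// (tie-breaker inferred from the table, not confirmed by the source).
function rank(rows) {
  return [...rows].sort(
    (a, b) => percent(b) - percent(a) || a.elapsed - b.elapsed
  );
}

// A few rows copied from the table; field names mirror its headers.
const rows = [
  { name: "WizardLM 30B-8_0", modelcorrect: 229, modeltotal: 309, elapsed: 53.883 },
  { name: "Alpaca-Lora 65B-5_1", modelcorrect: 229, modeltotal: 309, elapsed: 44.778 },
  { name: "Airoboros-Gpt4-1.2 65B-8_0", modelcorrect: 255, modeltotal: 309, elapsed: 36.865 },
];

for (const row of rank(rows)) {
  console.log(`${row.name}: ${percent(row).toFixed(2)}%`);
}
```

Run with `node`, it prints the three models in the same order as the table: Airoboros-Gpt4-1.2 65B-8_0 (82.52%), then Alpaca-Lora 65B-5_1 and WizardLM 30B-8_0 (both 74.11%), with the tie resolved by the shorter `elapsed` value.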