The annotation and classification of scientific literature is a crucial task to make scientific knowledge easily discoverable, accessible, and reusable. We address annotation as a multilabel classification task using BERT and its variants specialized in the scientific domain: BioBERT and SciBERT. In our experiments with papers from Springer Nature SciGraph, we confirm that transformer-based scientific classifiers generally achieve higher accuracy than linear classifiers (e.g., LinearSVM, FastText).

To shed light on BERT internals, we analyze the self-attention mechanism inherent to the transformer architecture. Our findings show that the last layer of BERT attends to words that are semantically relevant to the scientific fields associated with each publication (see Exploring self-attention heads). This observation suggests that self-attention performs some type of feature selection for the fine-tuned model.

This repository includes Jupyter notebooks to reproduce the experiments reported in the paper Classifying Scientific Publications with BERT - Is Self-Attention a Feature Selection Method?, accepted at the 43rd European Conference on Information Retrieval (ECIR 2021). It also presents some of the paper's highlights.

- Jupyter notebooks

- Fine-tuning language models for text classification

- Exploring self-attention heads

- Feature selection

- Citation

In the notebooks directory of this repository, we release self-contained notebooks, including the dataset and required libraries, that allow you to reproduce the following experiments:

- Fine-tune BERT, SciBERT, and BioBERT to classify research articles into multiple research fields of the ANZSRC taxonomy.

- Visualize self-attention in the last layer of the BERT models.

- Extract the lists of words that receive above-average attention in the last layer of the BERT models.

These notebooks can be run in Google Colaboratory on a subset of the articles; to train on the full set of articles used in the paper, we advise using more powerful infrastructure.

To run the notebooks in Colaboratory, go to https://colab.research.google.com and use the option to open a notebook from GitHub, where you can paste the full URL of each notebook.

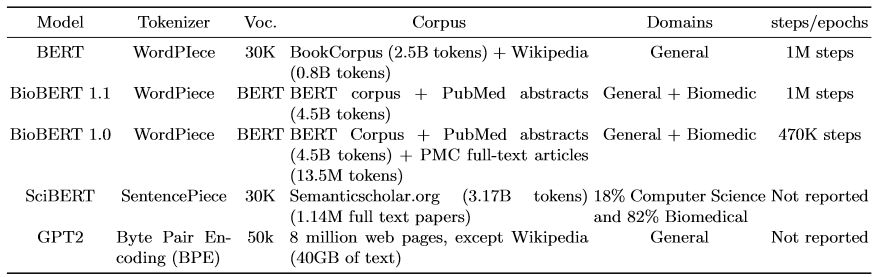

We test the following language models for classifying research articles: i) BERT and GPT-2, pre-trained on a general-purpose corpus; ii) SciBERT, pre-trained solely on scientific documents; and iii) BioBERT, pre-trained on a combination of general and scientific text. Below we summarize the pre-training of each language model.

Pre-training information of the language models.

To fine-tune the BERT models, we take the last-layer encoding of the classification token <CLS> and add an N-dimensional linear layer, where N is the number of classification labels. We use a binary cross-entropy loss function (BCEWithLogitsLoss) so that the model assigns an independent probability to each label. We train the models for 4 epochs with a batch size of 8 and a learning rate of 2e-5.
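As a rough illustration of the classification head and loss described above, the NumPy sketch below applies an N-way linear layer to stand-in <CLS> encodings and computes the numerically stable binary cross-entropy on logits that PyTorch's `BCEWithLogitsLoss` implements with its default mean reduction. It is not the repository's training code: the random encodings and labels, the 768-dimensional hidden size of BERT-base, and the 0.5 decision threshold are assumptions for illustration.

```python
import numpy as np

# Hyperparameters reported above; listed for reference, this sketch does not train.
EPOCHS, BATCH_SIZE, LEARNING_RATE = 4, 8, 2e-5
HIDDEN_SIZE = 768   # assumption: hidden size of a BERT-base encoder
NUM_LABELS = 22     # e.g. the 22 first-level categories


def classification_head(cls_encodings, weights, bias):
    """Linear layer mapping (batch, hidden) <CLS> encodings to (batch, N) logits."""
    return cls_encodings @ weights + bias


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy computed directly on logits.

    max(x, 0) - x * y + log(1 + exp(-|x|)) equals
    -[y * log(sigmoid(x)) + (1 - y) * log(1 - sigmoid(x))] but avoids overflow.
    """
    losses = np.maximum(logits, 0) - logits * targets + np.log1p(np.exp(-np.abs(logits)))
    return losses.mean()


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


rng = np.random.default_rng(0)
cls_batch = rng.normal(size=(BATCH_SIZE, HIDDEN_SIZE))   # assumption: random stand-in encodings
W = rng.normal(scale=0.02, size=(HIDDEN_SIZE, NUM_LABELS))
b = np.zeros(NUM_LABELS)
targets = rng.integers(0, 2, size=(BATCH_SIZE, NUM_LABELS)).astype(float)  # multi-hot labels

logits = classification_head(cls_batch, W, b)
loss = bce_with_logits(logits, targets)
probs = sigmoid(logits)    # one independent probability per label
predicted = probs > 0.5    # assumption: 0.5 threshold; a paper can get several labels
print(logits.shape, round(float(loss), 4))
```

In PyTorch the same loss would be `torch.nn.BCEWithLogitsLoss()(logits, targets)`; applying one sigmoid per label instead of a softmax over all labels is what makes the label probabilities independent.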

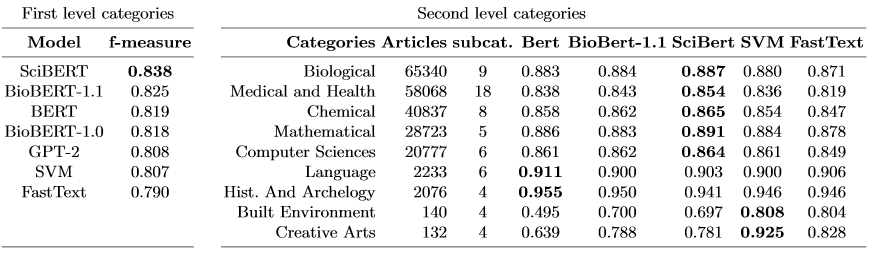

Below we present the evaluation of the classifiers on the 22 first-level ANZSRC categories (a) and on the second-level categories (b).

Evaluation results of the multilabel classifiers (F-measure) on first-level categories (a) and on second-level categories (b).

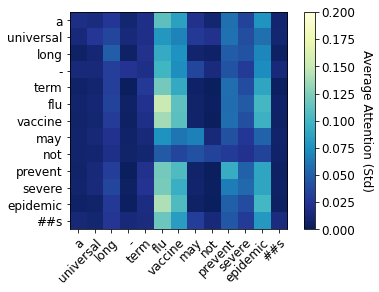

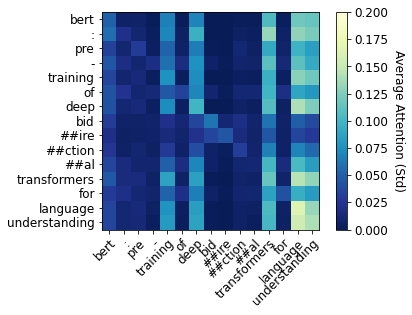

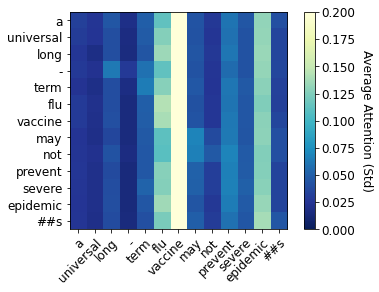

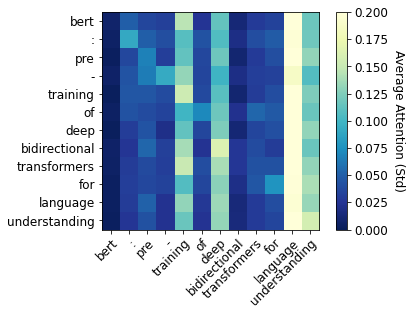

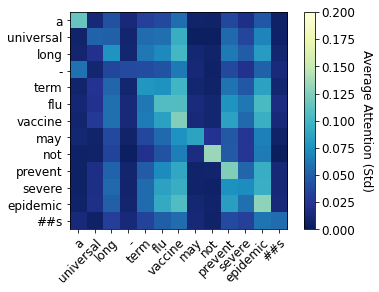

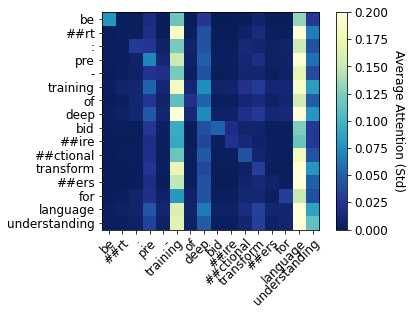

The following images depict the mean weights of the 12 self-attention heads in the last layer of the fine-tuned models for the titles of two papers: "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding" and "A universal long-term flu vaccine may not prevent severe epidemics".

The plots clearly show the so-called vertical pattern, in which a few tokens receive most of the attention: for example, training, deep, transformer, language, and understanding in the first title, and flu, vaccine, prevent, severe, and epidemic in the second. The <CLS> and <SEP> tokens are excluded from the plots.
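The averaging behind these plots can be sketched as follows. With the Hugging Face transformers library, calling a model with `output_attentions=True` returns `outputs.attentions`, a tuple with one (batch, heads, seq, seq) tensor per layer, so `outputs.attentions[-1][0]` would hold the last layer for the first input. The NumPy sketch below assumes such a (heads, seq, seq) array (randomly generated here) and that the special tokens are spelled [CLS] and [SEP], as in BERT tokenizers; it averages over the heads and removes the special tokens from both axes.

```python
import numpy as np

SPECIAL_TOKENS = {"[CLS]", "[SEP]"}  # spelling used by BERT WordPiece tokenizers


def mean_head_attention(last_layer_attention, tokens):
    """Average a (heads, seq, seq) attention array over its heads and drop
    the rows and columns of special tokens."""
    mean = last_layer_attention.mean(axis=0)  # (seq, seq)
    keep = [i for i, tok in enumerate(tokens) if tok not in SPECIAL_TOKENS]
    return mean[np.ix_(keep, keep)], [tokens[i] for i in keep]


# Assumption: random stand-in for a 12-head last layer (row-wise softmax of random scores).
tokens = ["[CLS]", "flu", "vaccine", "may", "not", "prevent", "[SEP]"]
rng = np.random.default_rng(0)
scores = rng.normal(size=(12, len(tokens), len(tokens)))
attention = np.exp(scores) / np.exp(scores).sum(axis=-1, keepdims=True)

matrix, labels = mean_head_attention(attention, tokens)
print(labels)        # ['flu', 'vaccine', 'may', 'not', 'prevent']
print(matrix.shape)  # (5, 5)
```

After the special tokens are dropped, the rows no longer sum to 1; in a heatmap of `matrix`, a column that is bright across all rows corresponds to the vertical pattern described above.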

Attention plots per fine-tuned model, one for each paper title: BERT, SciBERT, and BioBERT-1.1.

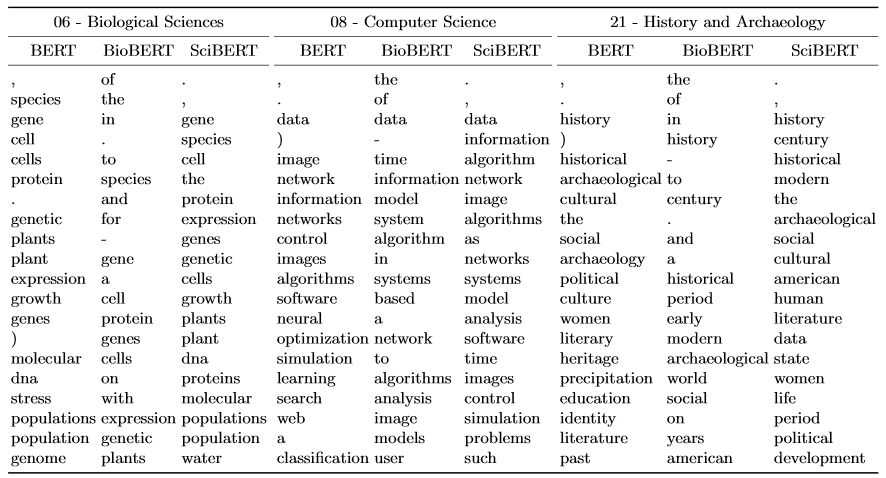

We also extract the words that receive higher-than-average attention in each sentence. These words are highly relevant to the scientific category of the corresponding paper.
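One way to extract such words is sketched below. It assumes a head-averaged (seq, seq) attention matrix with the special tokens already removed, and it takes the attention a token receives to be the mean of its column over all query positions. That aggregation, the whole-word tokens, and the toy matrix are assumptions for illustration, not necessarily the notebooks' exact procedure.

```python
import numpy as np


def above_average_words(mean_attention, tokens):
    """Return the tokens whose received attention exceeds the sentence average."""
    # Assumption: received attention of token j = mean over query positions i of
    # mean_attention[i, j], i.e. the strength of column j (the vertical pattern).
    received = mean_attention.mean(axis=0)
    threshold = received.mean()
    return [tok for tok, score in zip(tokens, received) if score > threshold]


# Toy, made-up head-averaged attention for a four-token title.
tokens = ["a", "universal", "flu", "vaccine"]
mean_attention = np.array([
    [0.1, 0.1, 0.4, 0.4],
    [0.1, 0.2, 0.3, 0.4],
    [0.0, 0.1, 0.5, 0.4],
    [0.1, 0.1, 0.4, 0.4],
])
print(above_average_words(mean_attention, tokens))  # ['flu', 'vaccine']
```

With WordPiece tokenizers, subword pieces (those starting with ##) would first need to be merged into words, for example by summing or averaging their attention; this sketch leaves that choice open.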

Visualization of the average weights of the self-attention heads in the last layer.

Most attended words (above-average attention) in the fine-tuned models.

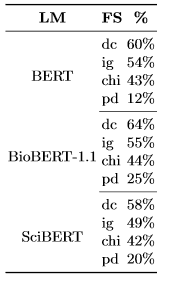

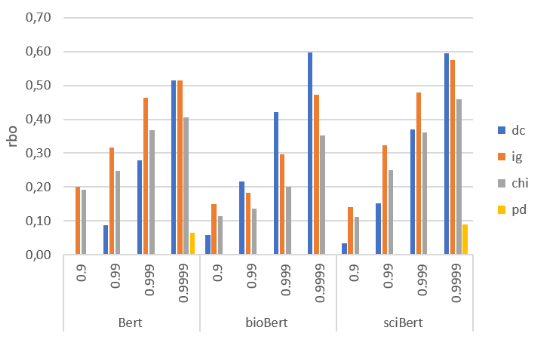

In this section, we compare the lists of words receiving above-average attention with the output of feature selection algorithms frequently used in text classification.
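Chi-square, one of the feature selection methods compared here, scores each term by how strongly its presence depends on a label. Below is a minimal NumPy sketch of the textbook 2x2 chi-square statistic for binary term presence against one binary label, followed by top-k selection. The toy documents and vocabulary are made up, and the experiments may have used a library implementation instead (scikit-learn's `sklearn.feature_selection.chi2`, for instance, computes the statistic from summed feature values per class rather than from this 2x2 table).

```python
import numpy as np


def chi_square_scores(X, y):
    """Chi-square statistic of each binary term feature against one binary label.

    X: (docs, terms) presence matrix (0/1); y: (docs,) label vector (0/1).
    Uses chi2 = N (AD - BC)^2 / ((A + B)(C + D)(A + C)(B + D)) per term.
    """
    X = np.asarray(X, dtype=bool)
    y = np.asarray(y, dtype=bool)
    n = X.shape[0]
    a = (X & y[:, None]).sum(axis=0).astype(float)   # term present, label positive
    b = (X & ~y[:, None]).sum(axis=0).astype(float)  # term present, label negative
    c = y.sum() - a                                  # term absent, label positive
    d = (~y).sum() - b                               # term absent, label negative
    num = n * (a * d - b * c) ** 2
    den = (a + b) * (c + d) * (a + c) * (b + d)
    # Degenerate tables (e.g. a term present in every document) score 0.
    return np.divide(num, den, out=np.zeros_like(num), where=den > 0)


def top_k_terms(X, y, vocabulary, k):
    scores = chi_square_scores(X, y)
    order = np.argsort(-scores, kind="stable")[:k]
    return [vocabulary[i] for i in order]


# Toy, made-up corpus: 4 documents, 4 terms.
vocabulary = ["vaccine", "flu", "the", "transformer"]
X = np.array([[1, 1, 1, 0],
              [1, 0, 1, 0],
              [0, 0, 1, 1],
              [0, 0, 1, 1]])
y = np.array([1, 1, 0, 0])  # 1 = paper belongs to the target category
print(chi_square_scores(X, y))           # approx. [4, 1.33, 0, 4]
print(top_k_terms(X, y, vocabulary, 2))  # ['vaccine', 'transformer']
```

The statistic is symmetric: "transformer" scores as high as "vaccine" because its absence is just as informative about the label as the presence of "vaccine".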

Word overlap between the most attended words and the words selected by each feature selection algorithm.

Rank-biased overlap (RBO) at different p values (x axis) between the most attended words and the words selected by each feature selection algorithm.

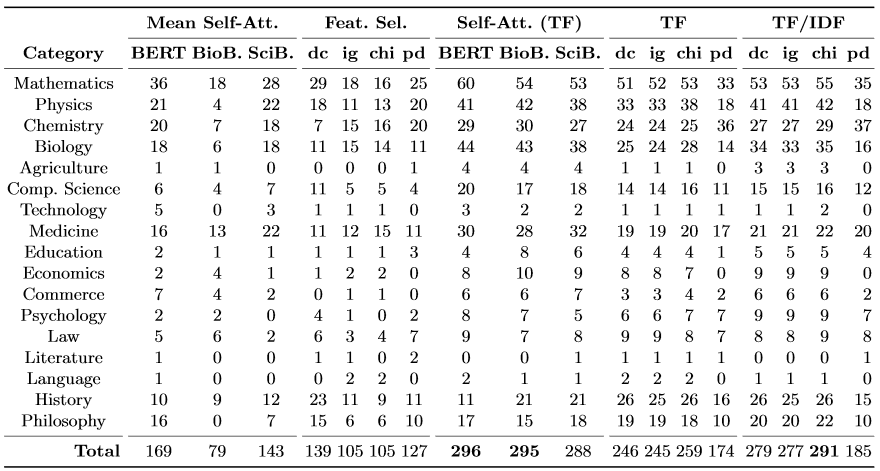
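Rank-biased overlap (Webber, Moffat and Zobel, 2010) compares two rankings by averaging their overlap at increasing depths, with weights that decay geometrically with p, so a smaller p concentrates the weight on the top-ranked words. The sketch below computes the truncated form, which omits the extrapolation term of the full measure; the word lists are made up, and this is not claimed to be the exact RBO variant used in the paper.

```python
def rank_biased_overlap(s, t, p=0.9):
    """Truncated rank-biased overlap of two ranked lists, evaluated to the
    length of the shorter list:
        (1 - p) * sum_{d=1..k} p**(d - 1) * |s[:d] & t[:d]| / d
    """
    k = min(len(s), len(t))
    seen_s, seen_t = set(), set()
    total = 0.0
    for d in range(1, k + 1):
        seen_s.add(s[d - 1])
        seen_t.add(t[d - 1])
        agreement = len(seen_s & seen_t) / d  # overlap proportion at depth d
        total += p ** (d - 1) * agreement
    return (1 - p) * total


# Hypothetical rankings: most attended words vs. words chosen by a feature selector.
attended = ["flu", "vaccine", "epidemic", "severe"]
selected = ["vaccine", "flu", "influenza", "epidemic"]
for p in (0.5, 0.9):
    print(p, round(rank_biased_overlap(attended, selected, p), 4))
```

Because the measure is truncated, two identical lists of length k score 1 - p**k rather than 1, so values are best compared at the same depth and p.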

We look up the words receiving above-average attention in ConceptNet and use the HasContext relation to identify the domains in which they are commonly used:

Number of words per ANZSRC category that match the corresponding context in ConceptNet.

In BERT and SciBERT, self-attention identifies more domain-relevant words than the feature selection methods do; this is not the case for BioBERT.

When the words are weighted by their term frequency (TF), the attended words remain more domain-relevant than those obtained through feature selection. In fact, the domain relevance of the frequent attended words is greater than or on par with that of the words selected when TF-IDF is used to weight the output of the feature selection methods.

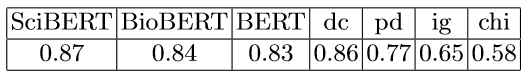
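The domain-relevance count can be sketched as below, under clearly stated assumptions: `has_context` stands in for HasContext edges (relation /r/HasContext) already retrieved from ConceptNet, `medical_contexts` is a hypothetical set of ConceptNet context labels treated as matching one ANZSRC category, and all data are made up. Passing term frequencies switches from counting matching words to weighting each one by its TF.

```python
from collections import Counter


def domain_relevance(words, has_context, category_contexts, term_freq=None):
    """Count the words whose ConceptNet contexts match the category's contexts.

    If term_freq is given, each matching word contributes its term frequency
    instead of 1 (TF weighting).
    """
    score = 0
    for word in set(words):
        if has_context.get(word, set()) & category_contexts:
            score += term_freq.get(word, 0) if term_freq is not None else 1
    return score


# Hypothetical stand-ins for data retrieved from ConceptNet and from the corpus.
has_context = {"vaccine": {"medicine"}, "epidemic": {"medicine", "epidemiology"},
               "transformer": {"electrical engineering"}}
medical_contexts = {"medicine", "epidemiology"}  # assumed labels for one category
attended = ["flu", "vaccine", "epidemic", "severe"]
tf = Counter(["vaccine", "vaccine", "epidemic", "flu", "severe"])

print(domain_relevance(attended, has_context, medical_contexts))      # 2
print(domain_relevance(attended, has_context, medical_contexts, tf))  # 3
```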

To measure the stability of the features, we compute the mean Jaccard coefficient between the subsets of words that each method generates over 5 folds:

Stability of the features measured with the Jaccard similarity coefficient.

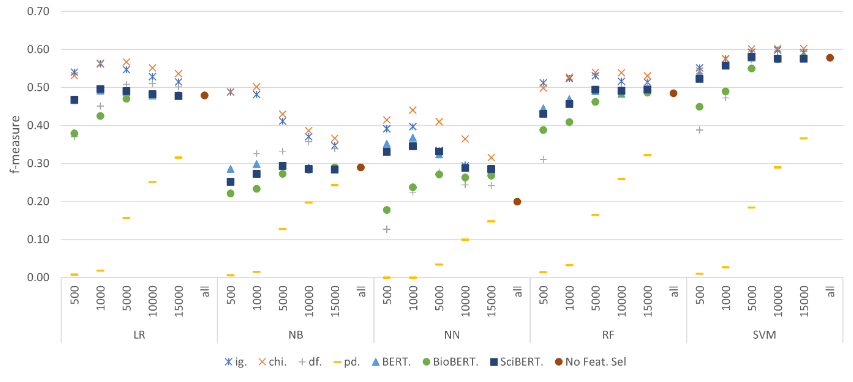
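The stability measure can be sketched as the mean Jaccard coefficient over all pairs of fold-level word subsets. Averaging over all pairs is an assumption (the text does not say whether pairs of folds or comparisons against a reference subset are used), and the five subsets below are made up.

```python
from itertools import combinations


def jaccard(a, b):
    """Size of the intersection divided by the size of the union of two word sets."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def stability(fold_subsets):
    """Mean Jaccard coefficient between the subsets selected on each fold."""
    # Assumption: average over all pairs of folds (10 pairs for 5 folds).
    pairs = list(combinations(fold_subsets, 2))
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Made-up word subsets selected by one method on each of 5 folds.
folds = [
    {"flu", "vaccine", "epidemic"},
    {"flu", "vaccine", "virus"},
    {"flu", "vaccine", "epidemic"},
    {"vaccine", "epidemic", "severe"},
    {"flu", "vaccine", "epidemic"},
]
print(round(stability(folds), 3))
```

A value of 1 means every fold produced the same subset; values near 0 mean the selected words change almost completely from fold to fold.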

We use the set of features to learn classifiers for the 22 first level categories using Logistic Regression (LR), Naive Bayes (NB), Random Forest (RF), Neural Networks (NN), and SVM:

Classifier performance using distinct feature sets and numbers of features. The x axis shows the number of features used to train each classifier.

We observe that traditional feature selection methods such as chi-square and information gain generally lead to more accurate classifiers than the sets of words most attended by the language models. This indicates that the success of BERT models in this task is driven not only by the self-attention mechanism but also by the contextualized outputs of the transformer, which are the input to the added classification layer.

To reference this work use the following citation:

@ARTICLE{2021arXiv2101.08114,
  author = {Andres Garcia-Silva and Jose Manuel Gomez-Perez},
  title = "{Classifying Scientific Publications with BERT -- Is Self-Attention a Feature Selection Method?}",
  journal = {arXiv e-prints},
  keywords = {Computer Science - Computation and Language},
  year = 2021,
  month = jan,
  eid = {arXiv:2101.08114},
  pages = {arXiv:2101.08114},
  archivePrefix = {arXiv},
  eprint = {2101.08114},
  primaryClass = {cs.CL},
}