Background Activation Suppression for Weakly Supervised Object Localization and Semantic Segmentation (IJCV)

PyTorch implementation of "Background Activation Suppression for Weakly Supervised Object Localization and Semantic Segmentation". This repository contains PyTorch training code, inference code, and pretrained models. This work extends our conference version (CVPR 2022).

📋 Table of Contents

- 📎 Paper Link

- 💡 Abstract

- ✨ Motivation

- 📖 Method

- 📃 Requirements

- ✏️ Usage

- 📊⛺ Experimental Results and Model Zoo

- ✉️ Statement

- 🔍 Citation

📎 Paper Link

- Background Activation Suppression for Weakly Supervised Object Localization (CVPR2022) (link)

Authors: Pingyu Wu*, Wei Zhai*, Yang Cao

Institution: University of Science and Technology of China (USTC)

- Background Activation Suppression for Weakly Supervised Object Localization and Semantic Segmentation (IJCV) (link)

Authors: Wei Zhai*, Pingyu Wu*, Kai Zhu, Yang Cao, Feng Wu, Zheng-Jun Zha

Institution: University of Science and Technology of China (USTC) & Institute of Artificial Intelligence, Hefei Comprehensive National Science Center

💡 Abstract

Weakly supervised object localization (WSOL) aims to localize objects using only image-level labels. Recently, a new paradigm has emerged that generates a foreground prediction map (FPM) to achieve the localization task. Existing FPM-based methods use cross-entropy (CE) to evaluate the foreground prediction map and to guide the learning of the generator. We argue for using the activation value instead to achieve more efficient learning. This is based on the experimental observation that, for a trained network, CE converges to zero when the foreground mask covers only part of the object region, whereas the activation value keeps increasing until the mask expands to the object boundary, which indicates that more of the object area can be learned by using the activation value. In this paper, we propose a Background Activation Suppression (BAS) method. Specifically, an Activation Map Constraint (AMC) module is designed to facilitate the learning of the generator by suppressing the background activation value. Meanwhile, by using foreground region guidance and an area constraint, BAS can learn the whole region of the object. In the inference phase, we consider the prediction maps of different categories together to obtain the final localization results. Extensive experiments show that BAS achieves significant and consistent improvement over the baseline methods on the CUB-200-2011 and ILSVRC datasets. In addition, our method also achieves state-of-the-art weakly supervised semantic segmentation performance on the PASCAL VOC 2012 and MS COCO 2014 datasets. Code and models are available at https://github.com/wpy1999/BAS-Extension.

✨ Motivation

Motivation. (A) Experimental procedure and related definitions. (B) The entropy value of CE loss w.r.t foreground mask and foreground activation value w.r.t foreground mask. (C) The results with statistical significance. Implementation details of the experiment and further results are available in Section 3.5.

📖 Method

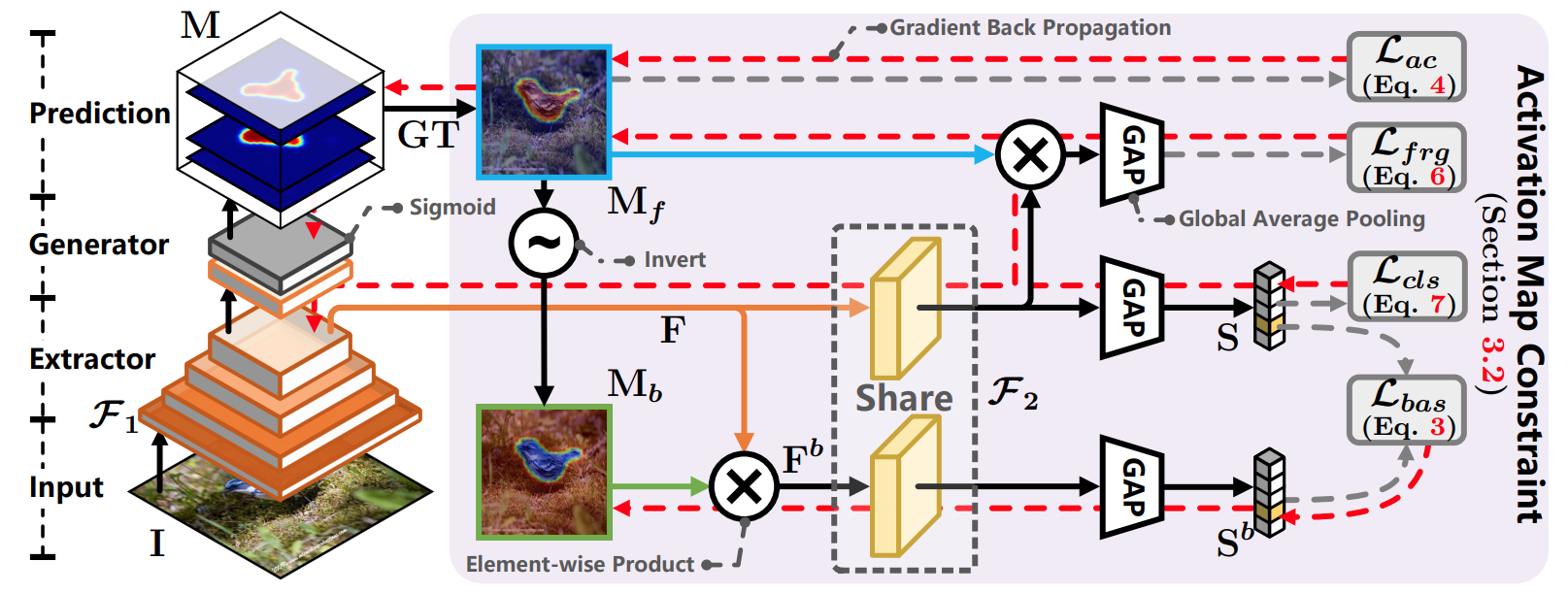

The architecture of the proposed BAS in the training phase. The class-specific foreground prediction map Mf and the coupled background prediction map Mb are obtained by the generator according to the ground-truth class (GT), and then fed into the Activation Map Constraint module together with the feature map F.
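To make the roles of Mf, Mb and F more concrete, the following is a minimal PyTorch sketch of how the three constraints named in the abstract (background activation suppression, foreground region guidance, area constraint) could be computed. It is an illustration under stated assumptions, not the code in this repository: the class name `ToyAMC`, the bias-free linear classifier on globally pooled features, the ratio form of the suppression term, the `eps` constant and the tensor shapes are all hypothetical choices made for the example.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ToyAMC(nn.Module):
    """Hypothetical, simplified stand-in for an Activation Map Constraint head."""

    def __init__(self, channels: int, num_classes: int):
        super().__init__()
        # Assumption: one shared, bias-free classifier, so a fully masked
        # (all-zero) feature map produces exactly zero activation.
        self.classifier = nn.Linear(channels, num_classes, bias=False)

    def _logits(self, feat: torch.Tensor) -> torch.Tensor:
        # Global average pooling over H and W, then linear classification.
        return self.classifier(feat.mean(dim=(2, 3)))

    def forward(self, feat, m_f, label, eps: float = 1e-8):
        # feat: (B, C, H, W) feature map F
        # m_f:  (B, 1, H, W) foreground prediction map with values in [0, 1]
        # label: (B,) ground-truth class indices
        m_b = 1.0 - m_f  # coupled background prediction map
        idx = label.unsqueeze(1)

        # Ground-truth-class activation of the full and background-only features.
        s_full = F.relu(self._logits(feat)).gather(1, idx).squeeze(1)
        s_bg = F.relu(self._logits(feat * m_b)).gather(1, idx).squeeze(1)

        # Background activation suppression: background activation relative
        # to the activation of the unmasked feature map (assumed form).
        loss_bas = (s_bg / (s_full + eps)).mean()
        # Foreground region guidance: classify the foreground-only features.
        loss_frg = F.cross_entropy(self._logits(feat * m_f), label)
        # Area constraint: penalize large foreground maps.
        loss_ac = m_f.mean()
        return loss_bas, loss_frg, loss_ac


if __name__ == "__main__":
    torch.manual_seed(0)
    amc = ToyAMC(channels=8, num_classes=5)
    feat = torch.rand(2, 8, 7, 7)
    m_f = torch.rand(2, 1, 7, 7)
    label = torch.tensor([1, 3])
    print([loss.item() for loss in amc(feat, m_f, label)])
```

In this sketch an all-ones foreground map drives the suppression term to zero, which is one way to see why an area term is useful alongside it. How the terms are weighted and combined is left out here because the README does not specify it.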

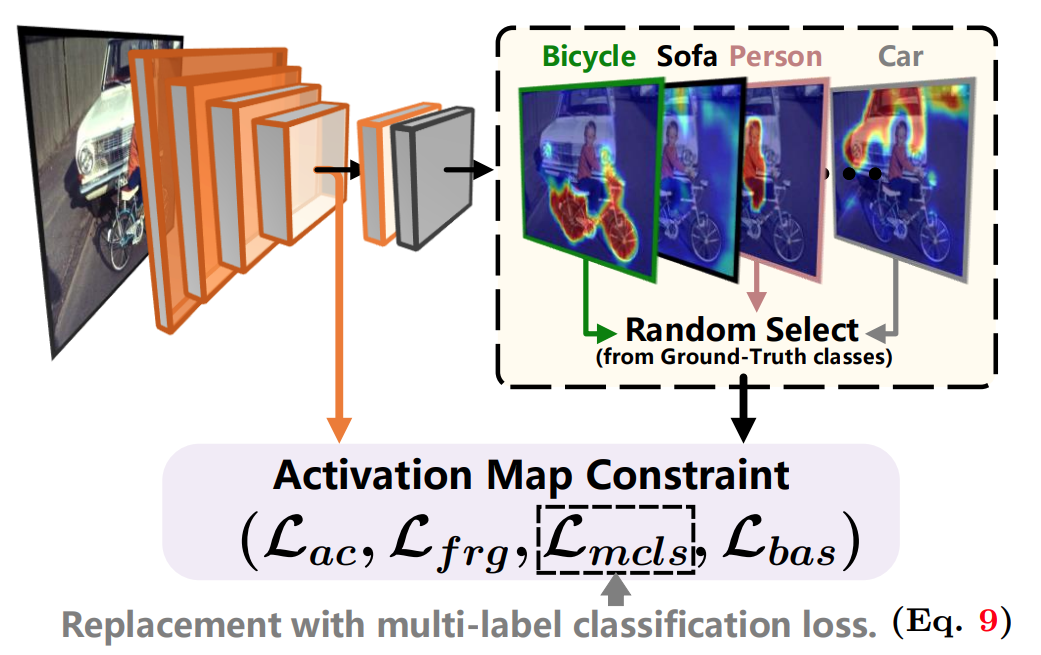

Applying BAS to the weakly supervised semantic segmentation task.

📃 Requirements

- python 3.6.10

- torch 1.4.0

- torchvision 0.5.0

- opencv 4.5.3

✏️ Usage

Start

git clone https://github.com/wpy1999/BAS-Extension.git

cd BAS-Extension

Download Datasets

- CUB (http://www.vision.caltech.edu/visipedia/CUB-200-2011.html)

- ILSVRC (https://www.image-net.org/challenges/LSVRC/)

- PASCAL (http://host.robots.ox.ac.uk/pascal/VOC/)

WSOL task

cd WSOL

python train.py --arch ${Backbone}

CUDA_VISIBLE_DEVICES="0,1,2,3" python -m torch.distributed.launch --nproc_per_node 4 train_ILSVRC.py

To test the localization accuracy on CUB/ILSVRC, you can download the trained models from Model Zoo, then run evaluator.py:

python evaluator.py

To test the segmentation accuracy on CUB/OpenImages, you can download the trained models from Model Zoo, then run count_pxap.py:

python count_pxap.py

WSSS task

CUDA_VISIBLE_DEVICES="0,1,2,3" python -m torch.distributed.launch --nproc_per_node 4 train_bas.py

To test the segmentation accuracy on PASCAL, you can download the trained models from Model Zoo, then run run_sample.py:

python run_sample.py

📊⛺ Experimental Results and Model Zoo

You can download all the trained models here (WSOL (Google Drive, Baidu Drive); WSSS (Google Drive, Baidu Drive))

or download any one individually as follows:

CUB models

| | Top1 Loc | Top5 Loc | GT Known | Weights |
|---|---|---|---|---|
| VGG | 70.90 | 85.36 | 91.04 | Google Drive, Baidu Drive |
| MobileNet | 70.54 | 86.71 | 93.04 | Google Drive, Baidu Drive |
| ResNet | 76.75 | 90.04 | 95.41 | Google Drive, Baidu Drive |
| Inception | 72.09 | 88.11 | 94.63 | Google Drive, Baidu Drive |

ILSVRC models

| | Top1 Loc | Top5 Loc | GT Known | Weights |
|---|---|---|---|---|
| VGG | 52.94 | 65.38 | 69.66 | Google Drive, Baidu Drive |
| MobileNet | 53.05 | 66.68 | 72.03 | Google Drive, Baidu Drive |
| ResNet | 57.46 | 68.57 | 72.00 | Google Drive, Baidu Drive |
| Inception | 58.50 | 69.03 | 72.07 | Google Drive, Baidu Drive |
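For readers unfamiliar with the column names, the sketch below shows how Top1 Loc, Top5 Loc and GT Known are commonly computed in the WSOL literature: a box counts as correct when its IoU with the ground-truth box is at least 0.5, and the Top-1/Top-5 variants additionally require the ground-truth class to be among the top-1/top-5 predictions. This is a simplified illustration, not a copy of evaluator.py; the function names, the single predicted box and single ground-truth box per image, and the 0.5 threshold default are assumptions of the example.

```python
from typing import Dict, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def localization_accuracy(
    pred_boxes: Sequence[Box],
    gt_boxes: Sequence[Box],
    ranked_classes: Sequence[Sequence[int]],  # class ids, best first
    gt_classes: Sequence[int],
    iou_thr: float = 0.5,
) -> Dict[str, float]:
    """Return Top-1 Loc, Top-5 Loc and GT-known Loc in percent."""
    top1 = top5 = gt_known = 0
    for box, gt_box, ranked, gt_cls in zip(
        pred_boxes, gt_boxes, ranked_classes, gt_classes
    ):
        box_ok = box_iou(box, gt_box) >= iou_thr
        gt_known += int(box_ok)  # box only, class label assumed known
        top1 += int(box_ok and ranked[0] == gt_cls)
        top5 += int(box_ok and gt_cls in ranked[:5])
    n = len(gt_boxes)
    return {
        "top1_loc": 100.0 * top1 / n,
        "top5_loc": 100.0 * top5 / n,
        "gt_known": 100.0 * gt_known / n,
    }
```

Because GT Known ignores the classifier, it is always at least as high as Top5 Loc, which in turn is at least as high as Top1 Loc, matching the ordering seen in every row of the tables above.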

OpenImages

| | PIoU | PxAP | Weights |
|---|---|---|---|
| ResNet | 50.72 | 66.86 | Google Drive, Baidu Drive |
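As a rough guide to the two columns above, the sketch below computes a peak IoU (the best pixel-level IoU over a sweep of score thresholds) and a pixel average precision (the area under the pixel precision-recall curve traced by the same sweep). It is a small pure-Python illustration under assumptions, not the logic of count_pxap.py: the function name `pixel_metrics`, the flattened per-pixel inputs, the 11 evenly spaced thresholds and the convention of precision 1.0 when nothing is predicted are all choices made for this example.

```python
from typing import Sequence, Tuple


def pixel_metrics(
    scores: Sequence[float],  # per-pixel foreground scores in [0, 1]
    mask: Sequence[int],      # per-pixel ground truth, 1 = foreground
    num_thresholds: int = 11,
) -> Tuple[float, float]:
    """Return (peak IoU, pixel average precision) over a threshold sweep."""
    # Sweep from the strictest threshold (1.0) down to 0.0 so recall grows.
    thresholds = [1.0 - k / (num_thresholds - 1) for k in range(num_thresholds)]
    total_fg = sum(mask)
    peak_iou = 0.0
    avg_precision = 0.0
    prev_recall = 0.0
    for thr in thresholds:
        tp = sum(1 for s, m in zip(scores, mask) if s >= thr and m)
        fp = sum(1 for s, m in zip(scores, mask) if s >= thr and not m)
        fn = total_fg - tp
        union = tp + fp + fn
        iou = tp / union if union else 0.0
        # Convention (assumed): precision is 1.0 when no pixel is predicted.
        precision = tp / (tp + fp) if (tp + fp) else 1.0
        recall = tp / total_fg if total_fg else 0.0
        # Riemann-sum approximation of the precision-recall area.
        avg_precision += precision * (recall - prev_recall)
        prev_recall = recall
        peak_iou = max(peak_iou, iou)
    return peak_iou, avg_precision
```

A call such as `pixel_metrics([0.95, 0.85, 0.25, 0.15], [1, 1, 0, 0])` scores a map that separates foreground from background perfectly. In practice the counts would be accumulated over all pixels of all test images before the metrics are computed.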

PASCAL VOC models

Results on the PASCAL VOC 2012 training set. Results for the other baseline methods can be obtained in the same way.

| | Seed | Weights | Mask | Weights |
|---|---|---|---|---|
| Ours | 57.7 | Google Drive, Baidu Drive | | |
| Ours + IRN | 58.2 | Google Drive, Baidu Drive | 71.1 | Google Drive, Baidu Drive |

Results on the PASCAL VOC 2012 val and test sets (DeepLabv2).

| | Val | Test | Weights |
|---|---|---|---|
| Ours | 69.6 | 69.9 | Google Drive, Baidu Drive |

✉️ Statement

This project is for research purposes only. For a commercial-use licence, please contact us. For any other questions, please contact wpy364755620@mail.ustc.edu.cn or wzhai056@mail.ustc.edu.cn.

🔍 Citation

@inproceedings{wu2022background,

title={Background Activation Suppression for Weakly Supervised Object Localization},

author={Wu, Pingyu and Zhai, Wei and Cao, Yang},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={14248--14257},

year={2022}

}

@article{zhai2023background,

title={Background Activation Suppression for Weakly Supervised Object Localization and Semantic Segmentation},

author={Zhai, Wei and Wu, Pingyu and Zhu, Kai and Cao, Yang and Wu, Feng and Zha, Zheng-Jun},

journal={International Journal of Computer Vision},

pages={1--26},

year={2023},

publisher={Springer}

}