Machine learning inference at scale using AWS serverless

This sample solution shows you how to run and scale ML inference using AWS serverless services: AWS Lambda and AWS Fargate. This is demonstrated using an image classification use case.

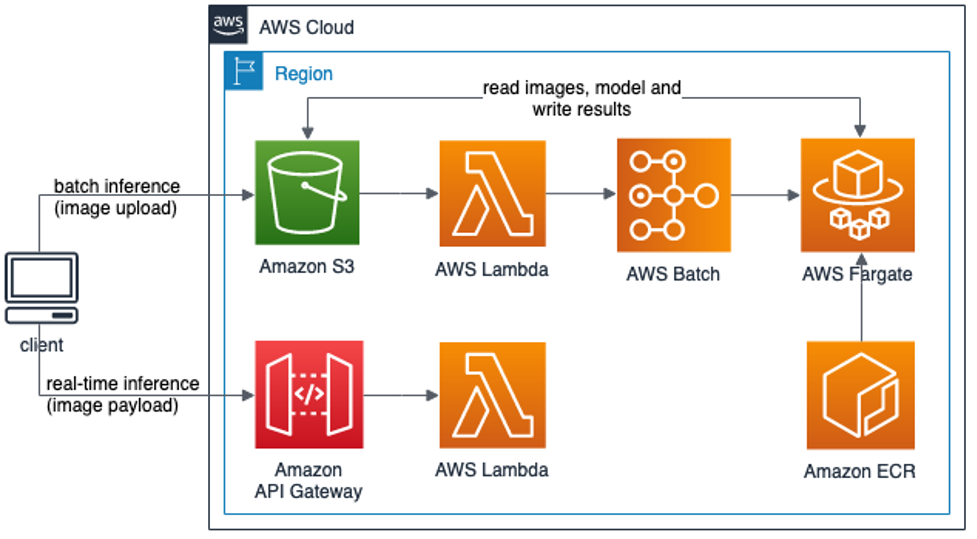

Architecture

The following diagram illustrates the solutions architecture for both batch and real-time inference options.

Deploying the solution

To deploy and run the solution, you need access to:

- An AWS account

- A terminal with the AWS Command Line Interface (AWS CLI), AWS CDK, Docker, git, and Python installed.

  - You may use the terminal on your local machine or use an AWS Cloud9 environment.
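
Before deploying, it can help to confirm that the prerequisite tools are on your PATH. The following is a minimal sketch, not part of the sample repository; the executable names (`aws`, `cdk`, `docker`, `git`, `python3`) are assumptions and may differ on your system.

```python
import shutil

# Assumed executable names for the prerequisites listed above;
# adjust them if your installation uses different names.
REQUIRED_TOOLS = ["aws", "cdk", "docker", "git", "python3"]


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the entries of `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing tools:", ", ".join(missing))
    else:
        print("All required tools were found on PATH.")
```

This only checks that each executable can be located; it does not verify versions or AWS credentials.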

To deploy the solution, open your terminal window and complete the following steps.

- Clone the GitHub repo.

git clone https://github.com/aws-samples/aws-serverless-for-machine-learning-inference.git

- Navigate to the project directory and deploy the CDK application.

./install.sh

or

./cloud9_install.sh #If you are using AWS Cloud9

Enter Y to proceed with the deployment.

Running inference

The solution lets you get predictions either for a set of images using batch inference or for a single image at a time using a real-time API endpoint.

Batch inference

Get batch predictions by uploading image files to Amazon S3.

- Upload one or more image files to the S3 bucket path, ml-serverless-bucket-<acct-id>-<aws-region>/input, from the Amazon S3 console or using the AWS CLI.

aws s3 cp <path to jpeg files> s3://ml-serverless-bucket-<acct-id>-<aws-region>/input/ --recursive

- This triggers the batch job, which spins off Fargate tasks to run the inference. You can monitor the job status in the AWS Batch console.

- Once the job is complete (this may take a few minutes), inference results can be accessed from the ml-serverless-bucket-<acct-id>-<aws-region>/output path.
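
The batch steps above can also be scripted. The sketch below is one possible approach, assuming the AWS CLI is installed and configured; the helper names (`bucket_name`, `upload_command`, `download_command`, `run`) are hypothetical and not part of the sample repository. It only builds the `aws s3 cp` commands from the bucket naming pattern shown above and runs them on request.

```python
import subprocess


def bucket_name(account_id, region):
    """Build the bucket name from the pattern used in this README."""
    return f"ml-serverless-bucket-{account_id}-{region}"


def upload_command(local_dir, account_id, region):
    """Command that copies local images to the input/ prefix."""
    target = f"s3://{bucket_name(account_id, region)}/input/"
    return ["aws", "s3", "cp", local_dir, target, "--recursive"]


def download_command(local_dir, account_id, region):
    """Command that copies inference results from the output/ prefix."""
    source = f"s3://{bucket_name(account_id, region)}/output/"
    return ["aws", "s3", "cp", source, local_dir, "--recursive"]


def run(command):
    """Run a command with the AWS CLI; raises CalledProcessError on failure."""
    subprocess.run(command, check=True)


if __name__ == "__main__":
    # Placeholder account ID and region; replace with your own values.
    # The commands are only printed here; pass them to run() to execute.
    print(" ".join(upload_command("./images", "123456789012", "us-east-1")))
    print(" ".join(download_command("./results", "123456789012", "us-east-1")))
```

Because the batch job may take a few minutes, download the results only after the job shows as complete in the AWS Batch console.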

Real-time inference

Get real-time predictions by invoking the API endpoint with an image payload.

- Navigate to the CloudFormation console and find the API endpoint URL (httpAPIUrl) in the stack outputs.

- Use a REST client, such as Postman or the curl command, to send a POST request to the /predict API endpoint with an image file payload.

curl --request POST -H "Content-Type: application/jpeg" --data-binary @<your jpg file name> <your-api-endpoint-url>/predict

- Inference results are returned in the API response.
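
The same request can be sent from Python using only the standard library. This is a rough sketch that mirrors the curl command above; the function names are hypothetical. `find_stack_output` assumes you have saved the JSON printed by `aws cloudformation describe-stacks` and that the output key is exactly `httpAPIUrl`. The response format is not specified here, so the body is returned as plain text.

```python
import json
import urllib.request


def find_stack_output(describe_stacks_json, key="httpAPIUrl"):
    """Look up an output value in `aws cloudformation describe-stacks` JSON.

    Returns None if no stack output has the given key.
    """
    data = json.loads(describe_stacks_json)
    for stack in data.get("Stacks", []):
        for output in stack.get("Outputs", []):
            if output.get("OutputKey") == key:
                return output["OutputValue"]
    return None


def build_predict_request(endpoint_url, image_bytes):
    """Build a POST request to <endpoint>/predict with the image as the body."""
    url = endpoint_url.rstrip("/") + "/predict"
    return urllib.request.Request(
        url,
        data=image_bytes,
        # Same header as the curl example above.
        headers={"Content-Type": "application/jpeg"},
        method="POST",
    )


def predict(endpoint_url, image_path):
    """Send the image file to the endpoint and return the response body as text."""
    with open(image_path, "rb") as image_file:
        request = build_predict_request(endpoint_url, image_file.read())
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")
```

For example, `predict("<your-api-endpoint-url>", "<your jpg file name>")` would return whatever the API sends back for that image.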

Cleaning up

Navigate to the project directory from the terminal window and run the following command to destroy all resources and avoid incurring future charges.

cdk destroy

Security

See CONTRIBUTING for more information.

License

This library is licensed under the MIT-0 License. See the LICENSE file.