Pure-Python, distributable Attack-Defence CTF platform, created to be easy to set up.

The name is pronounced as "forkád".

Five easy steps to start a game (assuming the current working directory is the project root):

- Open the backend/config/config.yml file (or copy backend/config/config.yml.example to backend/config/config.yml if the latter is missing).
- Add teams and tasks to the corresponding config sections following the example's format, and set start_time (don't forget your timezone) and round_time (in seconds); for recommendations, see the checker_timeout variable.
- Change the default passwords (these include storages.db.password and storages.redis.password for the database and cache, and flower.password for the celery visualization, which contains flags).
- Install control_requirements.txt (pip3 install -r control_requirements.txt) and run ./control.py setup to transfer the config variables.
- Run ./control.py start --fast to start the system. Wait patiently for the images to build; it could take a few minutes, but it happens only once. Note that the --fast option uses the pre-built image, so if you have modified the source code, omit this option to run the full build.
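Before running ./control.py setup, it can help to sanity-check the edited config. The sketch below is not part of ForcAD: it assumes config.yml has already been parsed into a Python dict (with a YAML library of your choice) and only checks the two things the steps above warn about, a timezone-aware start_time and leftover default passwords. The names config_problems, _lookup and PASSWORD_PATHS are hypothetical.

```python
from datetime import datetime

# Dotted paths of the passwords the setup steps ask you to change.
PASSWORD_PATHS = ("storages.db.password", "storages.redis.password", "flower.password")


def _lookup(config, dotted):
    """Follow a dotted path through nested dicts; return None if any key is missing."""
    node = config
    for key in dotted.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def config_problems(config):
    """Return a list of human-readable problems found in a parsed config dict."""
    problems = []

    # start_time may arrive as a string or as a datetime, depending on the loader.
    start = _lookup(config, "global.start_time")
    if isinstance(start, str):
        try:
            start = datetime.fromisoformat(start)
        except ValueError:
            start = None
    if not isinstance(start, datetime) or start.tzinfo is None:
        problems.append("global.start_time is missing, unparsable or has no timezone")

    # Any password that is empty or still contains the placeholder is reported.
    for path in PASSWORD_PATHS:
        value = _lookup(config, path)
        if not value or "change_me" in str(value):
            problems.append(path + " is missing or still set to the default")
    return problems
```

For example, a start_time of 2019-11-30 15:30:00 (no offset) is reported, while 2019-11-30 15:30:00+03:00 passes.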

That's all! Now you should be able to access the scoreboard at http://0.0.0.0:8080/.

Before each new game, run ./control.py reset to delete the old database and temporary files (and docker networks).

Teams are identified by unique tokens randomly generated on startup (look for them in the logs of the initializer container, or print them with ./control.py print_tokens after the system has started).

You can either share all tokens with all teams (as submitting flags for other teams is not really profitable), or send tokens privately. Tokens have one upside: all requests can be masqueraded.

The platform consists of several modules:

- TCP flag submitter (socat on port 31338 or a Python TCP server on port 31337; both perform well). For each connection, send the team token in the first line, then the flags, one per line.
- Celerybeat sends round start events to celery.
- Celery is the main container, which runs checkers. It can be scaled with ./control.py scale_celery -i N to run N instances (assuming the system is already started). One instance per 60 team-tasks is recommended (so, if there are 80 teams and 4 tasks, run 5-6 instances of celery).
- Flower is a beautiful celery monitoring app.
- Redis acts as a cache, message queue and backend for celery.
- Postgres is the persistent game storage.
- Webapi provides the API for the React frontend.
- Front builder builds the frontend sources and copies them to the volume from which nginx serves them. It exits once it has finished.
- Nginx acts as a routing proxy that unites the frontend, the API and flower.
- Initializer starts with the system, waits for the database to become available (all other containers wait for the initializer to finish its job), then drops old tables and initializes the database. From that point on, changing the team or task config has no effect, as they have already been copied to the database. If changes are required, connect to the postgres container directly and run the psql command (read the reference). For the default database name and user (system_db and system_admin), use docker-compose exec postgres psql -U system_admin system_db (no password is required, as it's a local connection). After changing the database, you need to drop the cache. The easy way is to run FLUSHALL in redis, but that can lead to a round of unavailable scoreboard.
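The flag submitter protocol described above (token on the first line, then one flag per line) can be exercised with nothing but the Python standard library. This is a hedged sketch: submit_flags is a hypothetical helper, and since the submitter's reply format is not described here, it half-closes the socket after sending and simply returns whatever text the server sends back until it closes the connection. Port 31337 is the Python TCP server mentioned above; the host is yours to fill in.

```python
import socket


def submit_flags(host, token, flags, port=31337, timeout=10.0):
    """Send the team token, then one flag per line; return the raw server reply."""
    payload = "\n".join([token, *flags]) + "\n"
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(payload.encode())
        # Half-close: tell the server no more flags are coming, keep reading replies.
        sock.shutdown(socket.SHUT_WR)
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:  # server closed the connection
                break
            chunks.append(chunk)
    return b"".join(chunks).decode(errors="replace")
```

Because submissions are tied to the token rather than to the connection's source, the same helper works from any machine that can reach the submitter.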

The platform has a somewhat flexible rating system. Basically, a rating system is a class that is initialized with 2 floats, the current attacker and victim scores, and has a calculate method that returns another 2 floats: the attacker and victim rating changes, respectively. Having read that, you can easily replace the default rating system in C rating system with your own brand-new one. The default rating system is based on the Elo rating and performs quite well in practice. The game_hardness and inflation configuration variables can be set in the global block of config.yml: the first one sets how many points a team earns for an attack (the higher the hardness, the bigger the rating change), and the second one states whether there's an "inflation" of points, i.e. whether a team earns points by attacking a zero-rating victim. The current rating system with inflation results in quite a dynamic and fast gameplay. The default value of game_hardness in both versions (with and w/o inflation) is 1300; the recommended range is [500, 10000] (try emulating the game first). The initial score for a task can also be configured in the global settings (that'll be the default value) and for each task independently.
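To illustrate the interface described above (construct with the attacker's and victim's scores, call calculate to get two rating changes), here is a hypothetical Elo-style class. It is not the formula used by ForcAD's default rating system: the 400-point Elo scale and the game_hardness / 100 factor are assumptions chosen only so that a higher game_hardness produces bigger changes and so that, without inflation, the attacker gains exactly what the victim loses (and therefore nothing from a zero-rating victim).

```python
class EloLikeRating:
    """Hypothetical Elo-style rating system; NOT ForcAD's actual formula."""

    def __init__(self, attacker, victim, game_hardness=1300.0, inflation=True):
        self.attacker = float(attacker)
        self.victim = float(victim)
        self.game_hardness = game_hardness
        self.inflation = inflation

    def calculate(self):
        """Return (attacker_change, victim_change) for one successful attack."""
        # Elo expected score of the attacker against the victim (400-point scale).
        expected = 1.0 / (1.0 + 10 ** ((self.victim - self.attacker) / 400.0))
        # Assumed scaling: the change grows linearly with game_hardness,
        # and upsets (weak attacker, strong victim) are worth more.
        delta = self.game_hardness / 100.0 * (1.0 - expected)
        # The victim cannot drop below zero.
        victim_loss = min(delta, self.victim)
        # Without inflation points are only transferred, never created.
        attacker_gain = delta if self.inflation else victim_loss
        return attacker_gain, -victim_loss
```

With equal scores of 1000 and the default hardness of 1300, this sketch moves 6.5 points from the victim to the attacker.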

By default, the system uses the most common flag format: [A-Z0-9]{31}=, where the first symbol is the first letter of the corresponding service name. You can change flag generation in the generate_flag function in backend/helpers/flags.py.

Each flag is valid (and can be checked by the checker) for flag_lifetime rounds (a global config variable).
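A minimal sketch of the default flag format and of the flag_lifetime window. This generate_flag is a standalone illustration, not a copy of the function in backend/helpers/flags.py, and it assumes the service name starts with an ASCII letter or digit. is_flag_alive is a hypothetical helper that assumes a flag put in round r stays valid in rounds r through r + flag_lifetime - 1; the exact boundary handling in the checksystem may differ.

```python
import re
import secrets
import string

ALPHABET = string.ascii_uppercase + string.digits
FLAG_RE = re.compile(r"[A-Z0-9]{31}=")


def generate_flag(service_name):
    """Service initial + 30 random [A-Z0-9] characters + '=' (32 characters total)."""
    first = service_name[0].upper()
    body = "".join(secrets.choice(ALPHABET) for _ in range(30))
    return first + body + "="


def is_flag_alive(flag_round, current_round, flag_lifetime):
    """Assumed window: a flag put in round r is valid in rounds r .. r + flag_lifetime - 1."""
    return 0 <= current_round - flag_round < flag_lifetime
```

For the collacode task, every generated flag starts with C and matches FLAG_RE.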

Config file (backend/config/config.yml) is split into five main parts:

- global describes global settings:
    - timezone: mainly used by celery to show better times in flower. Example: Europe/Moscow
    - checkers_path: path to checkers inside the Docker container; /checkers/ if not changed specifically.
    - default_score: default score for tasks. Example: 2000
    - env_path: path or default env_path for checkers (see the checkers section). Example: /checkers/bin/
    - flag_lifetime: flag lifetime in rounds (see the flag format section). Example: 5
    - game_hardness: game hardness parameter (see the rating system section). Example: 3000.0
    - inflation: inflation (see the rating system section). Example: true
    - round_time: round duration in seconds. Example: 120
    - start_time: full datetime of the game start. Example: 2019-11-30 15:30:00+03:00 (don't forget the timezone)
- storages describes the settings used to connect to PostgreSQL and Redis (examples provided):
    - db: PostgreSQL settings:
        - dbname: system_db
        - host: postgres
        - password: **change_me**
        - port: 5432
        - user: system_admin
    - redis: Redis settings:
        - db: 0
        - host: redis
        - port: 6379
        - password: **change_me**
- flower contains the credentials used to access the visualization (/flower/ on the scoreboard):
    - password: **change_me**
    - username: system_admin
- teams contains the playing teams. Example contents:

        teams:
          - ip: 10.70.0.2
            name: Team1
          - ip: 10.70.1.2
            name: Team2

- tasks contains the configuration of checkers and task-related parameters. Example:

        tasks:
          - checker: collacode/checker.py
            checker_returns_flag_id: true
            checker_timeout: 30
            gevent_optimized: true
            default_score: 1500
            gets: 3
            name: collacode
            places: 1
            puts: 3
          - checker: tiktak/checker.py
            checker_returns_flag_id: true
            checker_timeout: 30
            gets: 2
            name: tiktak
            places: 3
            puts: 2

gevent_optimized is an experimental feature to make checkers faster. Don't use it unless you're absolutely sure you know how it works. Example checker is here.

Checksystem is completely compatible with Hackerdom checkers, but some config-level enhancements were added (see below).

Checkers are configured for each task independently. It's recommended to put each checker in a separate folder under checkers in the project root. A checker is considered to consist of the main executable and some auxiliary files in the same folder.

Checker-related configuration variables:

- checker: path to the main checker executable (relative to the checkers folder), which needs to be world-executable (run chmod o+rx checker_executable).
- puts: number of flags to put for each team in each round.
- gets: number of flags to check from the last flag_lifetime rounds (see Configuration and usage for the lifetime description).
- places: large tasks may contain many possible places for a flag; this is their number. For each put, a place is randomly chosen from the range [1, places] and passed to the checker's PUT and GET actions.
- checker_timeout (seconds): timeout for each checker action. As at least 3 actions are run (depending on puts and gets), I recommend setting round_time at least 4 times greater than the maximum checker timeout, if possible.
- checker_returns_flag_id: whether the checker returns a new flag_id for the GET action for this flag, or the passed flag_id should be used when getting the flag (see more in the checker writing section).
- env_path: path or a combination of paths to be prepended to the PATH env variable (e.g. the path to chromedriver). By default, checkers/bin is used, so all auxiliary executables can be put there.
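The rules of thumb in this README translate into a few lines of arithmetic. The sketch below uses hypothetical helper names and takes tasks as plain dicts shaped like the tasks section of config.yml. It counts checker runs per team-task and round (one CHECK, puts PUTs and at most gets GETs), applies the "round_time at least 4 times the maximum checker timeout" recommendation, and applies the "one celery instance per 60 team-tasks" recommendation from the modules description.

```python
import math


def actions_per_round(task):
    """Upper bound on checker runs per team and round: 1 CHECK + puts PUTs + gets GETs."""
    return 1 + task["puts"] + task["gets"]


def min_round_time(tasks, factor=4):
    """Recommended lower bound for round_time: factor * the largest checker_timeout."""
    return factor * max(t["checker_timeout"] for t in tasks)


def celery_instances(teams, tasks, per_instance=60):
    """Recommended celery instance count: one per ~60 team-tasks, rounded up."""
    return math.ceil(teams * tasks / per_instance)
```

With the example tasks above (both with checker_timeout: 30), this suggests a round_time of at least 120 seconds, matching the round_time example in the global section; 80 teams and 4 tasks give 6 celery instances, the upper end of the recommended 5-6.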

The checkers folder in the project root (containing all checker folders) should preferably have the following structure:

    checkers:
    - requirements.txt <-- automatically installed (with pip) combined requirements of all checkers
    - task1:
        - checker.py <-- executable
    - task2:
        - checker.py <-- executable

A checker is an app that checks whether the team's task is running normally, puts flags, and then checks them after a few rounds.

Actions and arguments are passed to the checker as command-line arguments: the first one is always the command type, the second is the team host.

Checker should terminate with one of the five return codes:

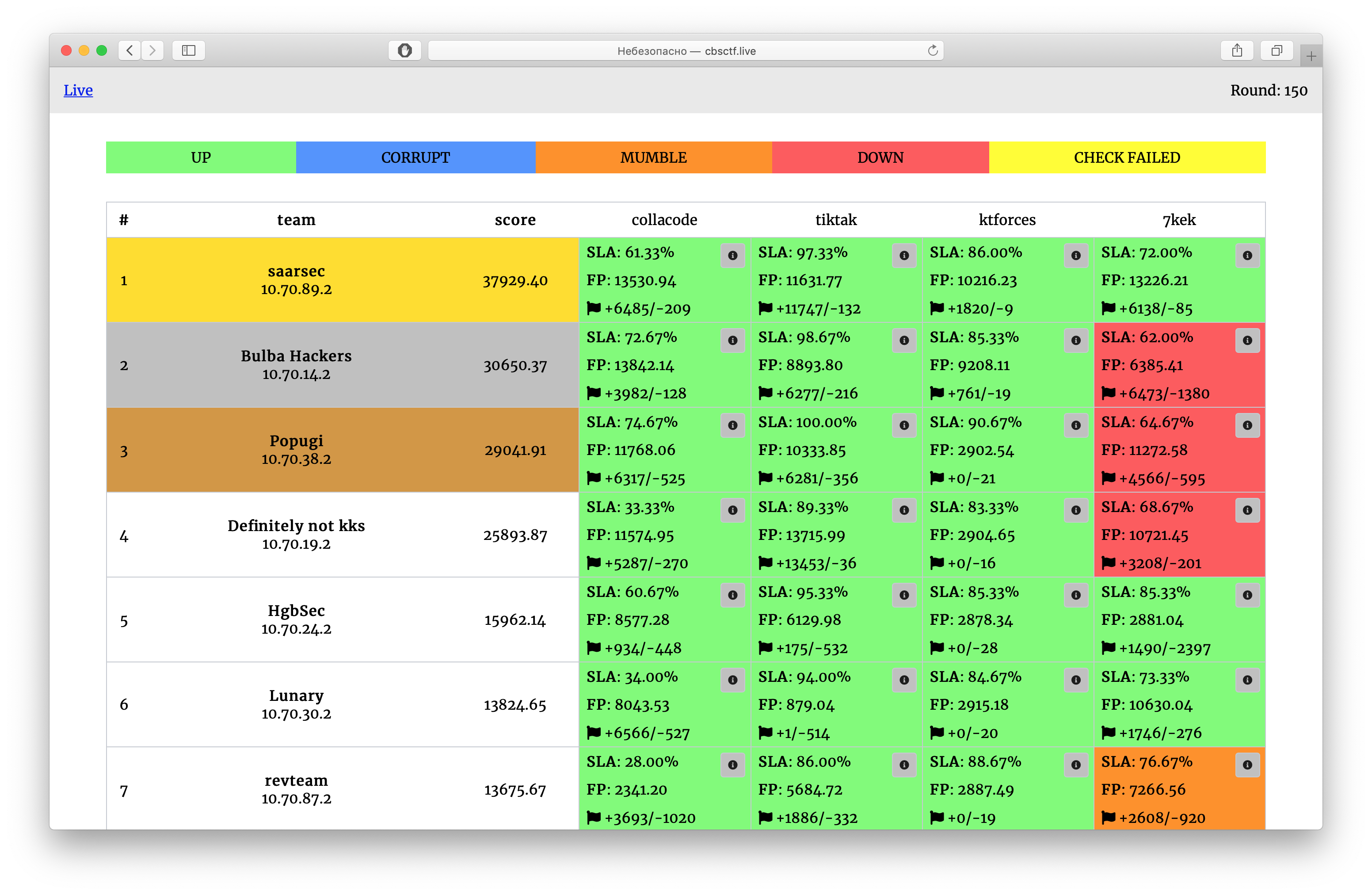

- 101: OK code, everything works.
- 102: CORRUPT, the service is working correctly but didn't return flags from previous rounds (returned by GET only).
- 103: MUMBLE, the service is not working correctly.
- 104: DOWN, could not connect normally.
- 110: CHECKER_ERROR, unexpected error in the checker.

All other return codes are considered to be CHECKER_ERROR.

In case of an unsuccessful invocation, stdout output is shown on the scoreboard, while stderr output is considered debug info and is stored in the database. Also, in case of CHECKER_ERROR, the celery container prints a warning with detailed logs to the console.

A checker must implement three main actions:

- CHECK: checks that the team's service is running normally. Visits some pages, checks registration, login, etc.

    Example invocation: /checkers/task/check.py check 127.0.0.1

- PUT: puts a flag into the team's service.

    Example invocation: /checkers/task/check.py put 127.0.0.1 <flag_id> <flag> <vuln_number>

    If the checker returns flag_id (see the checker config), it should write to stdout some data that helps to access the flag later (username, password, etc.); that data will be the flag_id passed to the GET action. Otherwise, it ought to use flag_id as a "seed" to generate such data (if checker_returns_flag_id is set to false, flag_id will be the same on the next invocation).

    PUT is run even if CHECK failed.

- GET: fetches one random old flag from the last flag_lifetime rounds.

    Example invocation: /checkers/task/check.py get 127.0.0.1 <flag_id> <flag> <vuln_number>

    This action should check whether the flag can be acquired correctly.

    GET is not run if CHECK (or previous GETs, if gets > 1) fail.

A simple example of a checker can be found here.

Be aware that to test a task locally, a LAN IP (not 127.0.0.1) needs to be specified for the team.

See this link to read more about writing checkers for the Hackerdom checksystem. Vuln frequencies (e.g. put 1 flag for the first vuln for every 3 flags of the second) are not supported yet, but can easily be emulated with the task's place count and the checker. For example, for the above configuration (1:3), specify 4 places for the task, and then in the checker PUT the flag into the first vuln if the supplied vuln is 1, and into the second vuln otherwise (vuln is 2, 3 or 4).
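The 1:3 emulation above boils down to mapping the place number passed to the checker (1 to places, here places: 4) onto the real vulnerability. target_vuln is a hypothetical helper name; assuming the place is drawn uniformly from [1, places], the mapping yields roughly one flag for the first vuln per three for the second.

```python
import random
from collections import Counter


def target_vuln(vuln_number):
    """Map place numbers 1..4 onto two real vulns in a 1:3 ratio (hypothetical)."""
    return 1 if vuln_number == 1 else 2


if __name__ == "__main__":
    # Simulate many PUTs with places drawn uniformly from [1, 4].
    counts = Counter(target_vuln(random.randint(1, 4)) for _ in range(10_000))
    print(counts)  # roughly a quarter of the flags go to vuln 1
```

The same idea generalizes to other ratios: choose places as the sum of the ratio's parts and map contiguous ranges of place numbers to each vuln.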

If a checker uses local files, it should access them by paths relative to its own directory, as checkers are run using absolute paths inside Docker. For example, the following is incorrect: open('local_file.txt'), as in Docker it'll try to open /local_file.txt instead of /checkers/task/local_file.txt. Correct usage would be:

    import os

    # Directory containing this checker file, regardless of the working directory
    BASE_DIR = os.path.abspath(os.path.dirname(__file__))

    with open(os.path.join(BASE_DIR, 'local_file.txt')) as f:
        data = f.read()

As checkers run in the celery container, open docker_config/celery/Dockerfile.fast (or docker_config/celery/Dockerfile if you're not using the fast build) and install all necessary packages into the image. Any modifications can be made in the CUSTOMIZE block. With enough confidence, even the base image of the celery container could be changed (Python 3.7 needs to be installed anyway).

Starting the system without Docker is quite easy too: just run all the needed parts of the system (see the Configuration and usage section for details) and provide the correct hosts for the redis and postgres machines.
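When running without Docker, the other parts should only be started once PostgreSQL and Redis accept connections (in the Docker setup, the initializer and container ordering take care of this). Below is a minimal standard-library sketch of such a wait; wait_for_port is a hypothetical helper, not part of ForcAD. For example, you could call wait_for_port(host, 5432) and wait_for_port(host, 6379), using the default ports from the storages section and your own hosts, before launching celery.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once host:port accepts TCP connections, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable or unresolvable: retry until the deadline
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Polling with a short per-attempt timeout keeps the total wait close to the requested timeout, even when the host silently drops packets.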

Python 3.7 or higher is required (it may work with 3.6.7+, but this hasn't been tested at all).