PyTorch implementation of *Who You Are Decides How You Tell*, which studies the impact of different human factors on human-like image captions. It also contains Knowledge5K, the first human-centered image captioning dataset.

We have built Knowledge5K, the first human-centered image captioning dataset. It contains not only image-caption pairs but also the human factors associated with each caption and external knowledge for each image.
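This README does not describe the file layout of Knowledge5K. As a minimal sketch, assuming one record per image, the structure described above could be modeled as follows. The class and field names (`ImageRecord`, `Caption`, `HumanFactors`, `captions_by`) are hypothetical. The three human factors (knowledge level of the scene, opinion on the scene, and gender) are the factors identified in the paper's abstract; whether Knowledge5K stores exactly these fields, and in what form, is an assumption.

```python
from dataclasses import dataclass, field
from typing import List


# Hypothetical schema: field names are illustrative, not Knowledge5K's actual format.
@dataclass
class HumanFactors:
    knowledge_level: str  # annotator's knowledge level of the scene (assumed encoding)
    opinion: str          # annotator's opinion on the scene (assumed encoding)
    gender: str           # annotator's gender (assumed encoding)


@dataclass
class Caption:
    text: str
    factors: HumanFactors  # human factors attached to this specific caption


@dataclass
class ImageRecord:
    image_id: str
    knowledge: List[str] = field(default_factory=list)    # external knowledge for the image
    captions: List[Caption] = field(default_factory=list)

    def captions_by(self, **criteria: str) -> List[Caption]:
        """Return the captions whose human factors match every keyword criterion."""
        return [
            c for c in self.captions
            if all(getattr(c.factors, name) == value for name, value in criteria.items())
        ]
```

Attaching the factors to each caption rather than to the image lets a single image carry captions from annotators with different profiles, which can then be filtered, e.g. `record.captions_by(knowledge_level="high")`.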

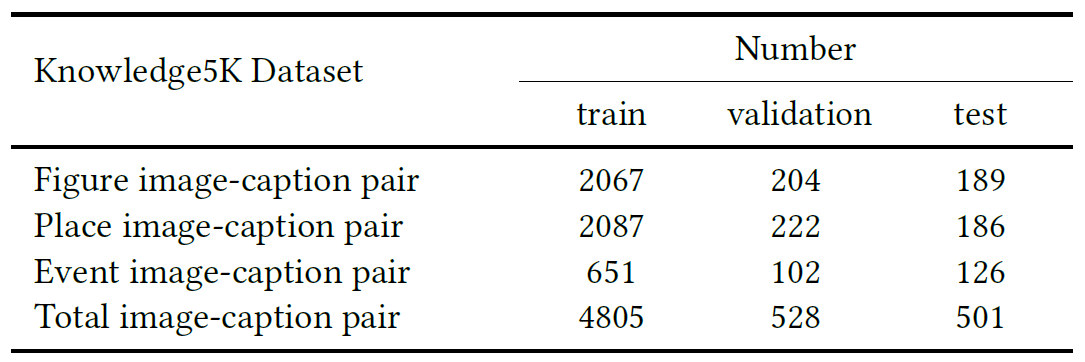

Statistics of Knowledge5K:

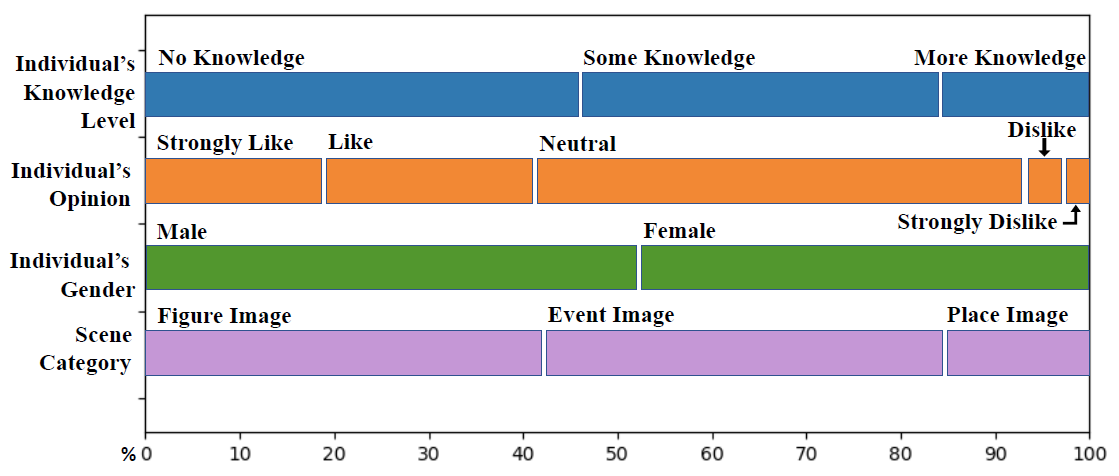

Distribution of Knowledge5K:

Before starting to train the model, we need to convert Knowledge5K into the input files used for training.
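The exact output of `create_input_files.py` is not described here. Purely as an illustration of what this kind of conversion step commonly involves for caption models, the sketch below builds a vocabulary (word map) from tokenized captions and encodes each caption as a fixed-length list of word indices. The helper names `build_word_map` and `encode_caption`, the special tokens, and the `min_word_freq` and `max_len` parameters are hypothetical assumptions, not the repository's actual code.

```python
from collections import Counter

# Hypothetical special tokens; the real preprocessing may use different ones.
SPECIAL_TOKENS = ["<pad>", "<start>", "<end>", "<unk>"]


def build_word_map(tokenized_captions, min_word_freq=1):
    """Map every word seen at least `min_word_freq` times to an integer index."""
    counts = Counter(word for caption in tokenized_captions for word in caption)
    words = sorted(w for w, c in counts.items() if c >= min_word_freq)
    word_map = {token: i for i, token in enumerate(SPECIAL_TOKENS)}
    for word in words:
        word_map[word] = len(word_map)
    return word_map


def encode_caption(tokens, word_map, max_len):
    """Encode as <start> w1 ... wn <end>, truncated to max_len words and padded to max_len + 2.

    Returns the encoded list and its length before padding.
    """
    unk = word_map["<unk>"]
    body = [word_map.get(w, unk) for w in tokens[:max_len]]  # unseen words -> <unk>
    encoded = [word_map["<start>"]] + body + [word_map["<end>"]]
    length = len(encoded)
    encoded += [word_map["<pad>"]] * (max_len + 2 - length)
    return encoded, length
```

For example, with a word map built from `[["a", "man", "riding", "a", "horse"], ["a", "horse", "in", "a", "field"]]`, `encode_caption(["a", "dog"], word_map, max_len=4)` returns `([1, 4, 3, 2, 0, 0], 4)`, with the unseen word `dog` mapped to `<unk>`. To perform the actual conversion for Knowledge5K, run: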

```
$ python create_input_files.py
```

Run the command below to train the model.

```
$ python train.py
```

If you use this package in a publication, please cite the paper as follows.

```bibtex
@inproceedings{10.1145/3394171.3413589,
author = {Wu, Shuang and Fan, Shaojing and Shen, Zhiqi and Kankanhalli, Mohan and Tung, Anthony K.H.},
title = {Who You Are Decides How You Tell},
year = {2020},
isbn = {9781450379885},
publisher = {Association for Computing Machinery},
address = {New York, NY, USA},
url = {https://doi.org/10.1145/3394171.3413589},
doi = {10.1145/3394171.3413589},
abstract = {Image captioning is gaining significance in multiple applications such as content-based visual search and chat-bots. Much of the recent progress in this field embraces a data-driven approach without deep consideration of human behavioural characteristics. In this paper, we focus on human-centered automatic image captioning. Our study is based on the intuition that different people will generate a variety of image captions for the same scene, as their knowledge and opinion about the scene may differ. In particular, we first perform a series of human studies to investigate what influences human description of a visual scene. We identify three main factors: a person's knowledge level of the scene, opinion on the scene, and gender. Based on our human study findings, we propose a novel human-centered algorithm that is able to generate human-like image captions. We evaluate the proposed model through traditional evaluation metrics, diversity metrics, and human-based evaluation. Experimental results demonstrate the superiority of our proposed model on generating diverse human-like image captions.},
booktitle = {Proceedings of the 28th ACM International Conference on Multimedia},
pages = {4013–4022},
numpages = {10},
keywords = {human-centered, image captioning, deep learning, language and vision, multi-modal},
location = {Seattle, WA, USA},
series = {MM '20}
}
```