
Reverse engineered ChatGPT proxy (bypass Cloudflare 403 Access Denied)

- API key acquisition

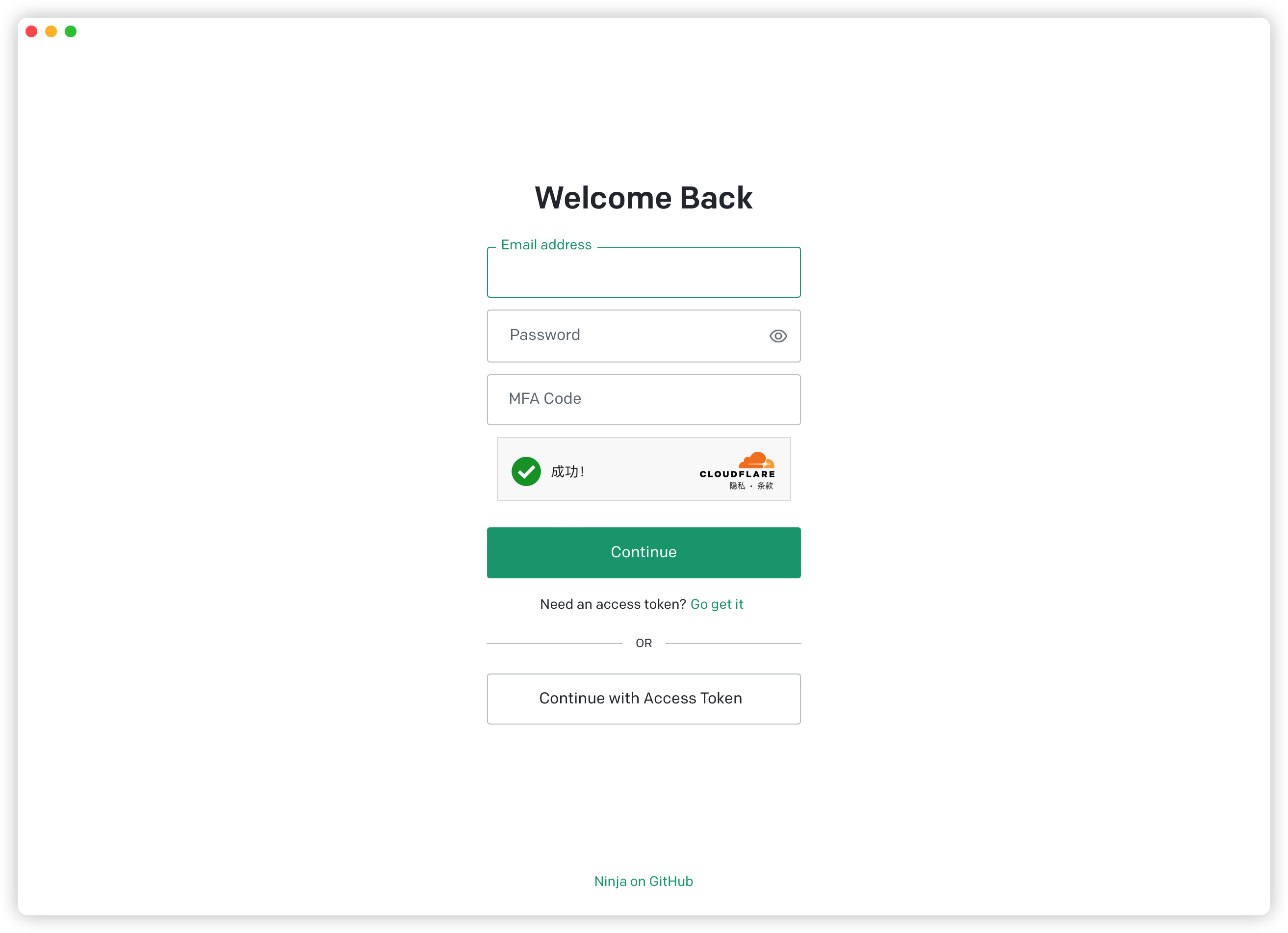

- Email/password account authentication (Google/Microsoft third-party login is temporarily not supported)
- Unofficial/Official/ChatGPT-to-API HTTP API proxy (for third-party client access)
- IP proxy pool support
- Minimal memory usage
- ChatGPT WebUI

Limitations: this cannot bypass an outright IP ban by OpenAI.

The IP limit here refers to OpenAI's request rate limit for a single IP. You need to understand what `puid` is: by default, a request to the models interface returns a `puid` cookie.

In addition, GPT-4 conversations must be sent with a `puid`. A third-party client sending GPT-4 conversations may not save or obtain the `puid`, so you need to handle it on the server side:

- Use the startup parameter `--puid-user` to set a ChatGPT Plus account that is used to obtain the `puid`; the `puid` is refreshed periodically.

Sending a GPT4 conversation requires Arkose Token to be sent as a parameter, and there are only three supported solutions for the time being

-

The endpoint obtained by

Arkose Token, no matter what method you use, use--arkose-token-endpointto specify the endpoint to obtain the token. The supportedJSONformat is generally in accordance with the format of the community:{"token": "xxxxxx"} -

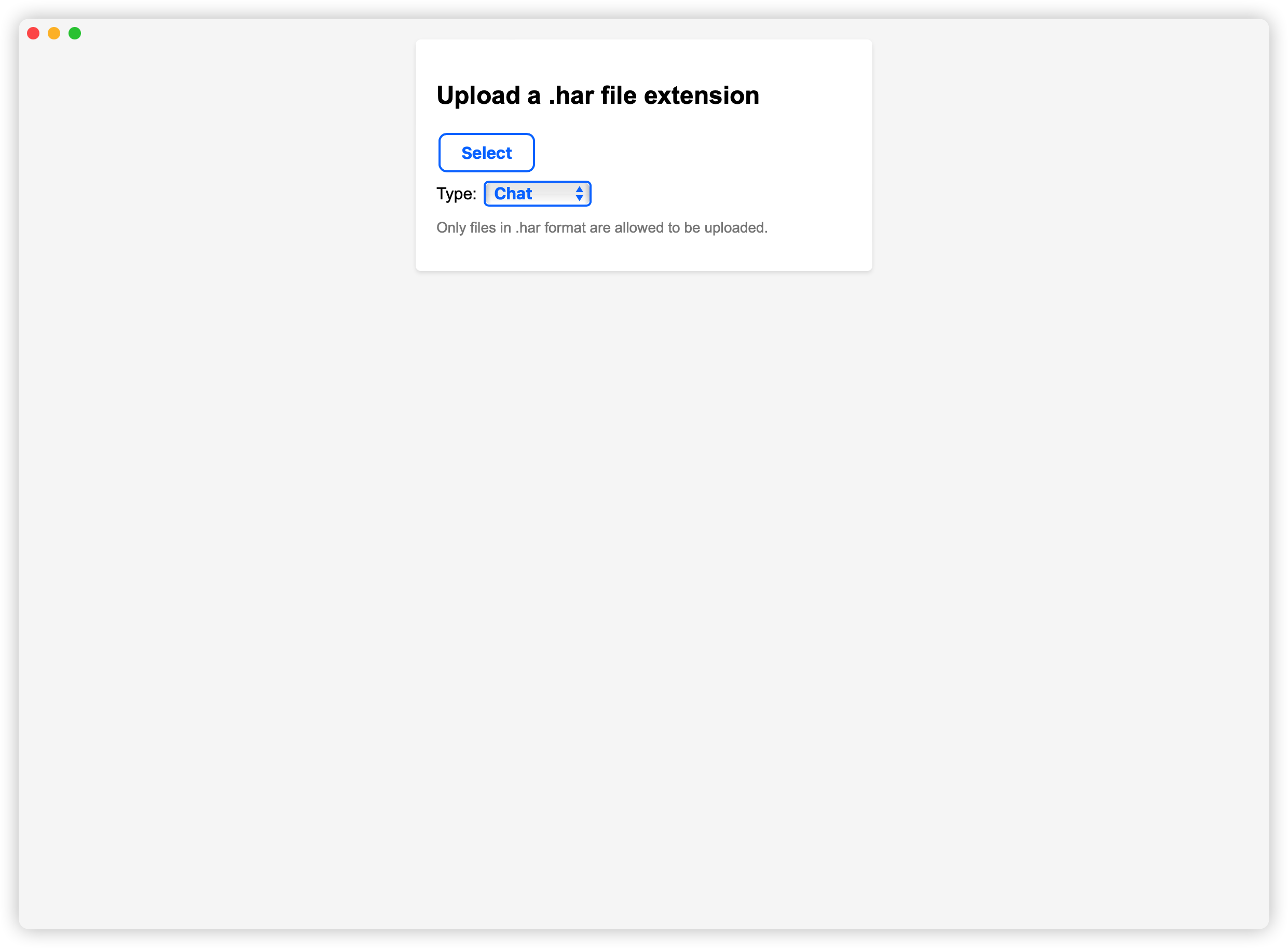

Using HAR,

ChatGPTofficial website sends aGPT4session message, and the browserF12downloadshttps://tcr9i.chat.openai.com/fc/gt2/public_key/35536E1E-65B4-4D96-9D97-6ADB7EFF8147For the HAR log file of the interface, use the startup parameter--arkose-chat-har-fileto specify the HAR file path to use (if the path is not specified, the default path~/.chat.openai.com.harwill be used, and updates can be uploaded directly HAR), supports uploading and updating HAR, request path:/har/upload, optional upload authentication parameter:--arkose-har-upload-key -

Use YesCaptcha/CapSolver platform for verification code parsing, start the parameter

--arkose-solverto select the platform (the default isYesCaptcha),--arkose-solver-keyfill inClient Key

- All three solutions are used, the priority is:

HAR>YesCaptcha/CapSolver>Arkose Token endpoint YesCaptcha/CapSolveris recommended to be used with HAR. When the verification code is generated, the parser is called for processing. After verification, HAR is more durable.

OpenAI now also requires Arkose Token verification at login. The solution is the same as for GPT-4: specify the HAR file with the startup parameter `--arkose-auth-har-file`. This is optional; if you don't want to upload a HAR, you can log in through the browser instead.

- backend-api: `https://host:port/backend-api/*`
- public-api: `https://host:port/public-api/*`
- platform-api: `https://host:port/v1/*`
- dashboard-api: `https://host:port/dashboard/*`
- chatgpt-to-api: `https://host:port/to/v1/chat/completions`

To use ChatGPT-to-API, pass the `AccessToken` directly as the API key; the interface path is `/to/v1/chat/completions`.
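The path prefixes above decide which API family a request is forwarded to. As a minimal sketch (the `ApiFamily` enum and `classify` function are hypothetical names for illustration, not ninja's actual router), the mapping can be expressed as an exact match for the ChatGPT-to-API path followed by prefix matches for the rest:

```rust
/// Which proxied API family a request path belongs to (hypothetical names).
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum ApiFamily {
    BackendApi,
    PublicApi,
    PlatformApi,
    DashboardApi,
    ChatGptToApi,
}

/// Classify a request path using the prefixes listed in this README.
pub fn classify(path: &str) -> Option<ApiFamily> {
    // ChatGPT-to-API is a single exact path, so check it first.
    if path == "/to/v1/chat/completions" {
        return Some(ApiFamily::ChatGptToApi);
    }
    // The remaining families are matched by path prefix.
    const PREFIXES: [(&str, ApiFamily); 4] = [
        ("/backend-api/", ApiFamily::BackendApi),
        ("/public-api/", ApiFamily::PublicApi),
        ("/v1/", ApiFamily::PlatformApi),
        ("/dashboard/", ApiFamily::DashboardApi),
    ];
    PREFIXES
        .iter()
        .find(|(prefix, _)| path.starts_with(*prefix))
        .map(|&(_, family)| family)
}
```

For example, `classify("/v1/models")` yields `Some(ApiFamily::PlatformApi)`, while a path outside these prefixes yields `None`.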

- Authentic ChatGPT WebUI
- Exposes unofficial/official API proxies
- API prefix consistent with the official one
- ChatGPT-to-API
- Accessible to third-party clients
- IP proxy pool support to improve concurrency
- API documentation

Parameter description:

- `--level`, environment variable `LOG`: log level, default `info`
- `--host`, environment variable `HOST`: service listening address, default `0.0.0.0`
- `--port`, environment variable `PORT`: listening port, default `7999`
- `--tls-cert`, environment variable `TLS_CERT`: TLS certificate (public key) file
- `--tls-key`, environment variable `TLS_KEY`: TLS private key file; supported formats: EC/PKCS8/RSA
- `--proxies`: proxy, with proxy pool support. Separate multiple proxies with `,`, in the format `protocol://user:pass@ip:port`. If your local IP is banned, turn off direct connections with `--disable-direct` when using the proxy pool; otherwise load balancing will still send some requests through your banned local IP.
- `--workers`: number of worker threads, default `1`
- `--disable-webui`: turn off the default built-in WebUI
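To make the `--proxies` / `--disable-direct` interaction concrete, here is a minimal sketch that parses the comma-separated `protocol://user:pass@ip:port` list and shows that the direct connection stays in the pool unless it is disabled. The `ProxySpec`, `Upstream`, `parse_proxy` and `build_pool` names are hypothetical and this is not ninja's parser; it assumes credentials are optional and does not handle bracketed IPv6 hosts.

```rust
/// One proxy pool entry, parsed from `protocol://user:pass@ip:port`.
#[derive(Debug, PartialEq)]
pub struct ProxySpec {
    pub scheme: String,
    pub auth: Option<(String, String)>,
    pub host: String,
    pub port: u16,
}

/// An upstream route: the local (direct) connection or one proxy.
#[derive(Debug, PartialEq)]
pub enum Upstream {
    Direct,
    Proxy(ProxySpec),
}

/// Parse a single proxy URL; the `user:pass@` part is optional.
pub fn parse_proxy(s: &str) -> Result<ProxySpec, String> {
    let (scheme, rest) = s
        .split_once("://")
        .ok_or_else(|| format!("missing protocol: {s}"))?;
    // Split credentials at the last '@' so an '@' inside the password still works.
    let (auth, host_port) = match rest.rsplit_once('@') {
        Some((cred, hp)) => {
            let (user, pass) = cred
                .split_once(':')
                .ok_or_else(|| format!("credentials must be user:pass: {s}"))?;
            (Some((user.to_string(), pass.to_string())), hp)
        }
        None => (None, rest),
    };
    let (host, port) = host_port
        .rsplit_once(':')
        .ok_or_else(|| format!("missing port: {s}"))?;
    let port = port
        .parse::<u16>()
        .map_err(|_| format!("invalid port: {s}"))?;
    if scheme.is_empty() || host.is_empty() {
        return Err(format!("empty protocol or host: {s}"));
    }
    Ok(ProxySpec {
        scheme: scheme.to_string(),
        auth,
        host: host.to_string(),
        port,
    })
}

/// Build the pool from the comma-separated `--proxies` value. Unless
/// `disable_direct` is set, the direct connection stays in the pool and
/// takes part in load balancing alongside the proxies.
pub fn build_pool(proxies: &str, disable_direct: bool) -> Result<Vec<Upstream>, String> {
    let mut pool = Vec::new();
    if !disable_direct {
        pool.push(Upstream::Direct);
    }
    for item in proxies.split(',').map(str::trim).filter(|p| !p.is_empty()) {
        pool.push(Upstream::Proxy(parse_proxy(item)?));
    }
    Ok(pool)
}
```

With two proxies and direct connections left on, the pool has three members, so a banned local IP would still receive a share of the traffic; passing `disable_direct = true` leaves only the proxies.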

GitHub Releases provides precompiled deb packages and binaries. On Ubuntu, for example:

```shell
wget https://github.com/gngpp/ninja/releases/download/v0.6.6/ninja-0.6.6-x86_64-unknown-linux-musl.deb
dpkg -i ninja-0.6.6-x86_64-unknown-linux-musl.deb
ninja serve run
```

GitHub Releases also provides precompiled ipk files, currently for aarch64/x86_64 and other architectures. After downloading, install them with opkg. Using a NanoPi R4S as an example:

```shell
wget https://github.com/gngpp/ninja/releases/download/v0.6.6/ninja_0.6.6_aarch64_generic.ipk
wget https://github.com/gngpp/ninja/releases/download/v0.6.6/luci-app-ninja_1.1.3-1_all.ipk
wget https://github.com/gngpp/ninja/releases/download/v0.6.6/luci-i18n-ninja-zh-cn_1.1.3-1_all.ipk
opkg install ninja_0.6.6_aarch64_generic.ipk
opkg install luci-app-ninja_1.1.3-1_all.ipk
opkg install luci-i18n-ninja-zh-cn_1.1.3-1_all.ipk
```

- Docker

```shell
docker run --rm -it -p 7999:7999 --name=ninja \
  -e WORKERS=1 \
  -e LOG=info \
  gngpp/ninja:latest serve run
```

- Docker Compose

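Cloudflare WARP is not supported in some regions (for example, China); if so, delete the `warp` service, together with the `PROXIES` entry and the `depends_on` that reference it. You can also delete it if your VPS IP can connect to OpenAI directly.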

```yaml
version: '3'

services:
  ninja:
    image: ghcr.io/gngpp/ninja:latest
    container_name: ninja
    restart: unless-stopped
    environment:
      - TZ=Asia/Shanghai
      - PROXIES=socks5://warp:10000
      # - CONFIG=/serve.toml
      # - PORT=8080
      # - HOST=0.0.0.0
      # - TLS_CERT=
      # - TLS_KEY=
    # volumes:
    #   - ${PWD}/ssl:/etc
    #   - ${PWD}/serve.toml:/serve.toml
    command: serve run
    ports:
      - "8080:7999"
    depends_on:
      - warp

  warp:
    container_name: warp
    image: ghcr.io/gngpp/warp:latest
    restart: unless-stopped

  watchtower:
    container_name: watchtower
    image: containrrr/watchtower
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    command: --interval 3600 --cleanup
    restart: unless-stopped
```
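The compose file configures ninja through environment variables (`PROXIES`, `PORT`, `HOST`, ...) rather than command-line flags. The `[env: ...]` and `[default: ...]` annotations in the help output appear to follow clap's format; assuming ninja uses clap's usual precedence, an explicit flag wins over the environment variable, which in turn wins over the built-in default. A minimal sketch of that rule, with a hypothetical `resolve` helper and an injectable lookup so it can be tested without touching the real process environment:

```rust
/// Resolve one setting: explicit CLI value, then environment variable,
/// then built-in default (assumed clap-style precedence).
pub fn resolve<F>(cli: Option<&str>, env_key: &str, default: &str, lookup: F) -> String
where
    F: Fn(&str) -> Option<String>,
{
    cli.map(str::to_owned)
        .or_else(|| lookup(env_key))
        .unwrap_or_else(|| default.to_owned())
}
```

In a real program the lookup would be `|k: &str| std::env::var(k).ok()`. So with `PORT=8080` set in the compose file and no `--port` flag, the port resolves to `8080`; with neither, it falls back to `7999`.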

```
$ ninja serve --help
Start the http server

Usage: ninja serve run [OPTIONS]

Options:
  -C, --config <CONFIG>
          Configuration file path (toml format file) [env: CONFIG=]
  -H, --host <HOST>
          Server Listen host [env: HOST=] [default: 0.0.0.0]
  -L, --level <LEVEL>
          Log level (info/debug/warn/trace/error) [env: LOG=] [default: info]
  -P, --port <PORT>
          Server Listen port [env: PORT=] [default: 7999]
  -W, --workers <WORKERS>
          Server worker-pool size (Recommended number of CPU cores) [default: 1]
      --concurrent-limit <CONCURRENT_LIMIT>
          Enforces a limit on the concurrent number of requests the underlying [default: 65535]
  -x, --proxies <PROXIES>
          Server proxies pool, Example: protocol://user:pass@ip:port [env: PROXIES=]
      --disable-direct
          Disable direct connection [env: DISABLE_DIRECT=]
      --cookie-store
          Enabled Cookie Store [env: COOKIE_STORE=]
      --timeout <TIMEOUT>
          Client timeout (seconds) [default: 600]
      --connect-timeout <CONNECT_TIMEOUT>
          Client connect timeout (seconds) [default: 60]
      --tcp-keepalive <TCP_KEEPALIVE>
          TCP keepalive (seconds) [default: 60]
      --tls-cert <TLS_CERT>
          TLS certificate file path [env: TLS_CERT=]
      --tls-key <TLS_KEY>
          TLS private key file path (EC/PKCS8/RSA) [env: TLS_KEY=]
      --puid-user <PUID_USER>
          Obtain the PUID of the Plus account user, Example: `user:pass`
      --api-prefix <API_PREFIX>
          WebUI api prefix [env: API_PREFIX=]
      --preauth-api <PREAUTH_API>
          PreAuth Cookie API URL [env: PREAUTH_API=] [default: https://ai.fakeopen.com/auth/preauth]
      --arkose-endpoint <ARKOSE_ENDPOINT>
          Arkose endpoint, Example: https://client-api.arkoselabs.com
  -A, --arkose-token-endpoint <ARKOSE_TOKEN_ENDPOINT>
          Get arkose token endpoint
      --arkose-chat-har-file <ARKOSE_CHAT_HAR_FILE>
          About the browser HAR file path requested by ChatGPT ArkoseLabs
      --arkose-auth-har-file <ARKOSE_AUTH_HAR_FILE>
          About the browser HAR file path requested by Auth ArkoseLabs
      --arkose-platform-har-file <ARKOSE_PLATFORM_HAR_FILE>
          About the browser HAR file path requested by Platform ArkoseLabs
  -K, --arkose-har-upload-key <ARKOSE_HAR_UPLOAD_KEY>
          HAR file upload authenticate key
  -s, --arkose-solver <ARKOSE_SOLVER>
          About ArkoseLabs solver platform [default: yescaptcha]
  -k, --arkose-solver-key <ARKOSE_SOLVER_KEY>
          About the solver client key by ArkoseLabs
  -T, --tb-enable
          Enable token bucket flow limitation
      --tb-store-strategy <TB_STORE_STRATEGY>
          Token bucket store strategy (mem/redis) [default: mem]
      --tb-redis-url <TB_REDIS_URL>
          Token bucket redis connection url [default: redis://127.0.0.1:6379]
      --tb-capacity <TB_CAPACITY>
          Token bucket capacity [default: 60]
      --tb-fill-rate <TB_FILL_RATE>
          Token bucket fill rate [default: 1]
      --tb-expired <TB_EXPIRED>
          Token bucket expired (seconds) [default: 86400]
      --cf-site-key <CF_SITE_KEY>
          Cloudflare turnstile captcha site key [env: CF_SECRET_KEY=]
      --cf-secret-key <CF_SECRET_KEY>
          Cloudflare turnstile captcha secret key [env: CF_SITE_KEY=]
  -D, --disable-webui
          Disable WebUI [env: DISABLE_WEBUI=]
  -h, --help
          Print help
```

Supported platforms:

- Linux
  - x86_64-unknown-linux-musl
  - aarch64-unknown-linux-musl
  - armv7-unknown-linux-musleabi
  - armv7-unknown-linux-musleabihf
  - arm-unknown-linux-musleabi
  - arm-unknown-linux-musleabihf
  - armv5te-unknown-linux-musleabi
- Windows
  - x86_64-pc-windows-msvc
- MacOS
  - x86_64-apple-darwin
  - aarch64-apple-darwin
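The `--tb-enable`, `--tb-capacity` and `--tb-fill-rate` options in the help output turn on token bucket flow limitation. As a generic sketch of the technique (not ninja's implementation), assuming each request consumes one token and the fill rate is measured in tokens per second, an in-memory bucket (comparable to the `mem` store strategy) looks like this; the Redis strategy and `--tb-expired` are not modeled, and the `TokenBucket` type is a hypothetical name:

```rust
use std::time::Instant;

/// Generic token bucket: holds at most `capacity` tokens and refills
/// continuously at `fill_rate` tokens per second (assumed unit).
pub struct TokenBucket {
    capacity: f64,
    fill_rate: f64,
    tokens: f64,
    last_refill: Instant,
}

impl TokenBucket {
    /// Create a full bucket at time `now`.
    pub fn new(capacity: u32, fill_rate: u32, now: Instant) -> Self {
        TokenBucket {
            capacity: capacity as f64,
            fill_rate: fill_rate as f64,
            tokens: capacity as f64,
            last_refill: now,
        }
    }

    /// Add the tokens earned since the last refill, capped at capacity.
    fn refill(&mut self, now: Instant) {
        let elapsed = now.saturating_duration_since(self.last_refill).as_secs_f64();
        self.tokens = (self.tokens + elapsed * self.fill_rate).min(self.capacity);
        self.last_refill = now;
    }

    /// Try to admit one request at time `now`; returns false if it should be limited.
    pub fn try_acquire(&mut self, now: Instant) -> bool {
        self.refill(now);
        if self.tokens >= 1.0 {
            self.tokens -= 1.0;
            true
        } else {
            false
        }
    }
}
```

Under these assumptions, the defaults (capacity 60, fill rate 1) would let a client burst 60 requests and then sustain roughly one request per second. Passing the time in explicitly keeps the bucket deterministic and easy to test.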

- Linux compile, using an Ubuntu machine as an example:

```shell
git clone https://github.com/gngpp/ninja.git && cd ninja
cargo build --release
```

- OpenWrt compile

```shell
cd package
svn co https://github.com/gngpp/ninja/trunk/openwrt
cd -
make menuconfig # choose LUCI->Applications->luci-app-ninja
make V=s
```

GitHub discontinued Subversion support in January 2024, so the `svn co` step may fail; copying the `openwrt` directory from a `git clone` of the repository into `package` serves the same purpose.

- The project is open source and may be modified, but please keep the original author information to avoid losing technical support.
- This project stands on the shoulders of other giants. Thanks!
- If you find errors, bugs, etc., submit an issue and I will fix them.