This repository contains code for the paper: "Diagnose and Explain: Medical Report Generation with Natural Language Explanations"

Our model takes a single chest X-ray image as input and generates a complete radiology report in natural language. The generated report contains two sections:

- Findings (the explanation): observations regarding each part of the chest examined, generally a paragraph of four or more sentences.

- Impression: a diagnosis based on the reported findings, generally one sentence long, though it can contain several.
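Because every report follows this two-section layout, it can help to split generated text back into its parts, for example to compare each section against a reference report. The sketch below assumes the plain-text layout used in the samples (`Findings: ... Impression: ...`); the `Report` class and the `parse_report` and `count_sentences` helpers are hypothetical and not part of this repository.

```python
import re
from dataclasses import dataclass


@dataclass
class Report:
    """A radiology report split into its two sections (hypothetical helper)."""
    findings: str    # the explanation: observations, usually 4+ sentences
    impression: str  # the diagnosis, usually a single sentence


def parse_report(text: str) -> Report:
    """Split 'Findings: ... Impression: ...' text into a Report.

    Assumes both labels appear exactly once, with Findings first.
    """
    match = re.search(r"Findings:\s*(.*?)\s*Impression:\s*(.*)", text, re.DOTALL)
    if match is None:
        raise ValueError("expected 'Findings:' followed by 'Impression:'")
    return Report(findings=match.group(1), impression=match.group(2).strip())


def count_sentences(section: str) -> int:
    """Rough sentence count: split on '.', '!' or '?' and drop empty pieces."""
    return len([s for s in re.split(r"[.!?]", section) if s.strip()])
```

For example, `parse_report("Findings: the heart is normal in size and contour. The lungs are clear bilaterally. No pleural effusion or pneumothorax. Impression: no acute abnormality.")` yields a three-sentence findings section and a one-sentence impression. The sentence counter is deliberately naive and will miscount abbreviations such as "approx.".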

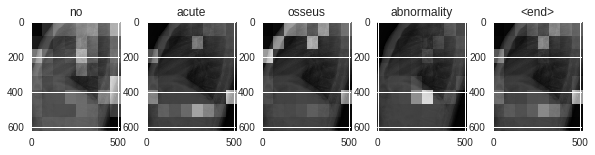

Here are a few samples of our model's output on unseen records.

Findings: the cardiomediastinal silhouette is within normal limits for size and contour. The lungs are normally inflated without evidence of focal airspace disease, pleural effusion or pneumothorax. Stable calcified granuloma within the right upper lung. No acute bone abnormality.

Impression: no acute cardiopulmonary process.

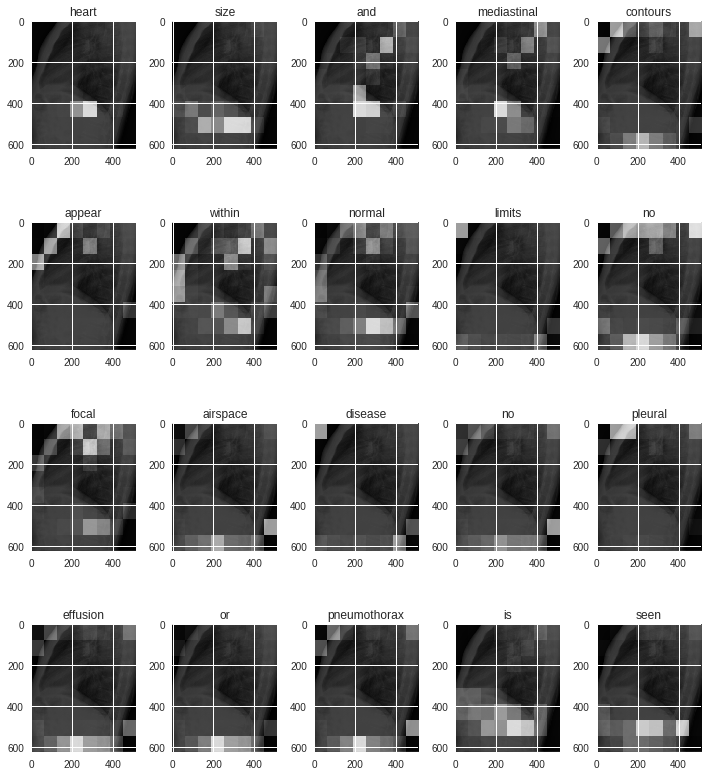

Findings: heart size and mediastinal contours appear within normal limits. No focal airspace disease. No pleural effusion or pneumothorax is seen.

Impression: no acute osseus abnormality.

Findings: no finding.

Impression: heart size is upper normal. No edema bandlike left base and lingular opacities. No scarring or atelectasis. No lobar consolidation pleural effusion or pneumothorax.

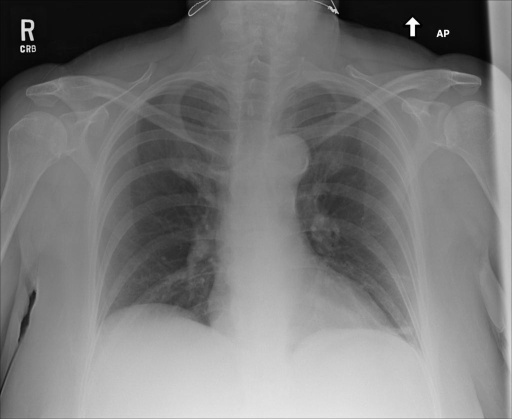

Findings: the heart is normal in size and contour. The lungs are clear bilaterally. Again, no evidence of focal airspace consolidation. No pleural effusion or pneumothorax.

Impression: no acute overt abnormality.

We trained our model on the Indiana University Chest X-Ray collection. The dataset contains 3955 chest radiology reports from various hospital systems and 7470 associated chest X-rays (most reports are associated with two or more images, representing frontal and lateral views).
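Since the model takes a single image as input while most reports come with several views, the images have to be grouped by report and one view selected (or each view used separately). The sketch below shows one plausible way to do this; it is not necessarily what this repository does. The `(report_id, image_path, view)` row format and the `group_images` and `pick_frontal` helpers are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# Hypothetical row format: (report_id, image_path, view).
Row = Tuple[str, str, str]


def group_images(rows: List[Row]) -> Dict[str, List[Tuple[str, str]]]:
    """Collect every (image_path, view) pair under its report id, keeping input order."""
    grouped: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for report_id, path, view in rows:
        grouped[report_id].append((path, view))
    return dict(grouped)


def pick_frontal(images: List[Tuple[str, str]]) -> Optional[str]:
    """Return the first frontal image, else the first image of any view, else None."""
    for path, view in images:
        if view == "frontal":
            return path
    return images[0][0] if images else None


if __name__ == "__main__":
    rows = [
        ("r1", "r1_lat.png", "lateral"),
        ("r1", "r1_pa.png", "frontal"),
        ("r2", "r2_pa.png", "frontal"),
    ]
    grouped = group_images(rows)
    print(pick_frontal(grouped["r1"]))  # r1_pa.png
    # Average images per report in the full collection: 7470 / 3955
    print(round(7470 / 3955, 2))  # 1.89
```

The last line simply restates the dataset figures above: on average each report has roughly 1.89 images.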

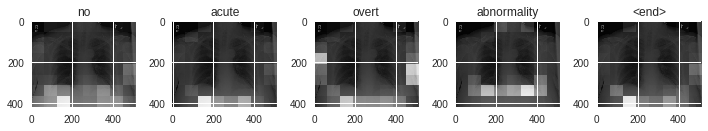

Our model uses a CNN-LSTM architecture to generate words. Features extracted by a CNN are encoded and used by a hierarchical RNN that generates the paragraph sentence by sentence. Attention is applied at several levels of the decoding stage to extract visual and semantic features, which guide the word decoder and provide additional context to the language model.
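The core of the visual attention step can be illustrated without a deep learning framework: the decoder state scores each CNN region feature, the scores are normalized with a softmax, and the weighted sum of features becomes the context vector passed to the decoder. The pure-Python sketch below uses dot-product scoring for brevity; this is a simplification, and the model in this repository may use a learned scoring function (such as additive attention) instead. In a hierarchical decoder, a step like this would run once per sentence or per word, depending on the level.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attend(query: Vector, features: List[Vector]) -> Tuple[List[float], Vector]:
    """Soft attention over CNN region features.

    query:    current decoder hidden state (same size as each feature here)
    features: one vector per image region
    Returns (attention weights, context vector).
    """
    weights = softmax([dot(query, f) for f in features])
    dim = len(features[0])
    # Context = attention-weighted sum of the region features.
    context = [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]
    return weights, context
```

With a zero query every region gets equal weight: `attend([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])` returns weights `[0.5, 0.5]` and context `[0.5, 0.5]`, while a query aligned with the first region concentrates almost all weight on it.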

- Head over to TensorFlow's quickstart on setting up a TPU instance to get started with running models on Cloud TPU.

- Clone this repository and `cd` into the directory:

```bash
git clone https://github.com/wisdal/diagnose-and-explain && cd diagnose-and-explain
```

- Start training:

```bash
export STORAGE_BUCKET=<Your Storage Bucket>
python main.py --tpu=$TPU_NAME --model_dir=${STORAGE_BUCKET}/tpu --train_steps=20 --iterations_per_loop=100 --batch_size=512
```
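If you launch runs from a script, it can be convenient to assemble the same `main.py` invocation programmatically and catch a malformed bucket before the job starts. The sketch below is hypothetical (`build_train_command` is not part of this repository); it only reproduces the flags shown in the command above and assumes `STORAGE_BUCKET` is a Cloud Storage URL, since Cloud TPU typically expects `model_dir` to live in a `gs://` bucket.

```python
import os
import shlex
from typing import List


def build_train_command(bucket: str, tpu_name: str, train_steps: int = 20,
                        iterations_per_loop: int = 100,
                        batch_size: int = 512) -> List[str]:
    """Return the training invocation above as an argv list (hypothetical helper)."""
    if not bucket.startswith("gs://"):
        raise ValueError("STORAGE_BUCKET should be a gs:// URL, e.g. gs://my-bucket")
    return [
        "python", "main.py",
        f"--tpu={tpu_name}",
        f"--model_dir={bucket.rstrip('/')}/tpu",  # avoid a double slash
        f"--train_steps={train_steps}",
        f"--iterations_per_loop={iterations_per_loop}",
        f"--batch_size={batch_size}",
    ]


if __name__ == "__main__":
    bucket = os.environ.get("STORAGE_BUCKET")
    tpu = os.environ.get("TPU_NAME")
    if bucket and tpu:
        # Print for inspection; run it with subprocess.run(cmd, check=True).
        print(shlex.join(build_train_command(bucket, tpu)))
    else:
        print("Set STORAGE_BUCKET and TPU_NAME first.")
```

Returning an argv list rather than a shell string avoids quoting problems when the list is passed to `subprocess.run`.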

These experiments were possible thanks to the TensorFlow Research Cloud program, which offered TPUs to train and run this model at scale.

Parts of the model code were inspired by this TensorFlow tutorial.