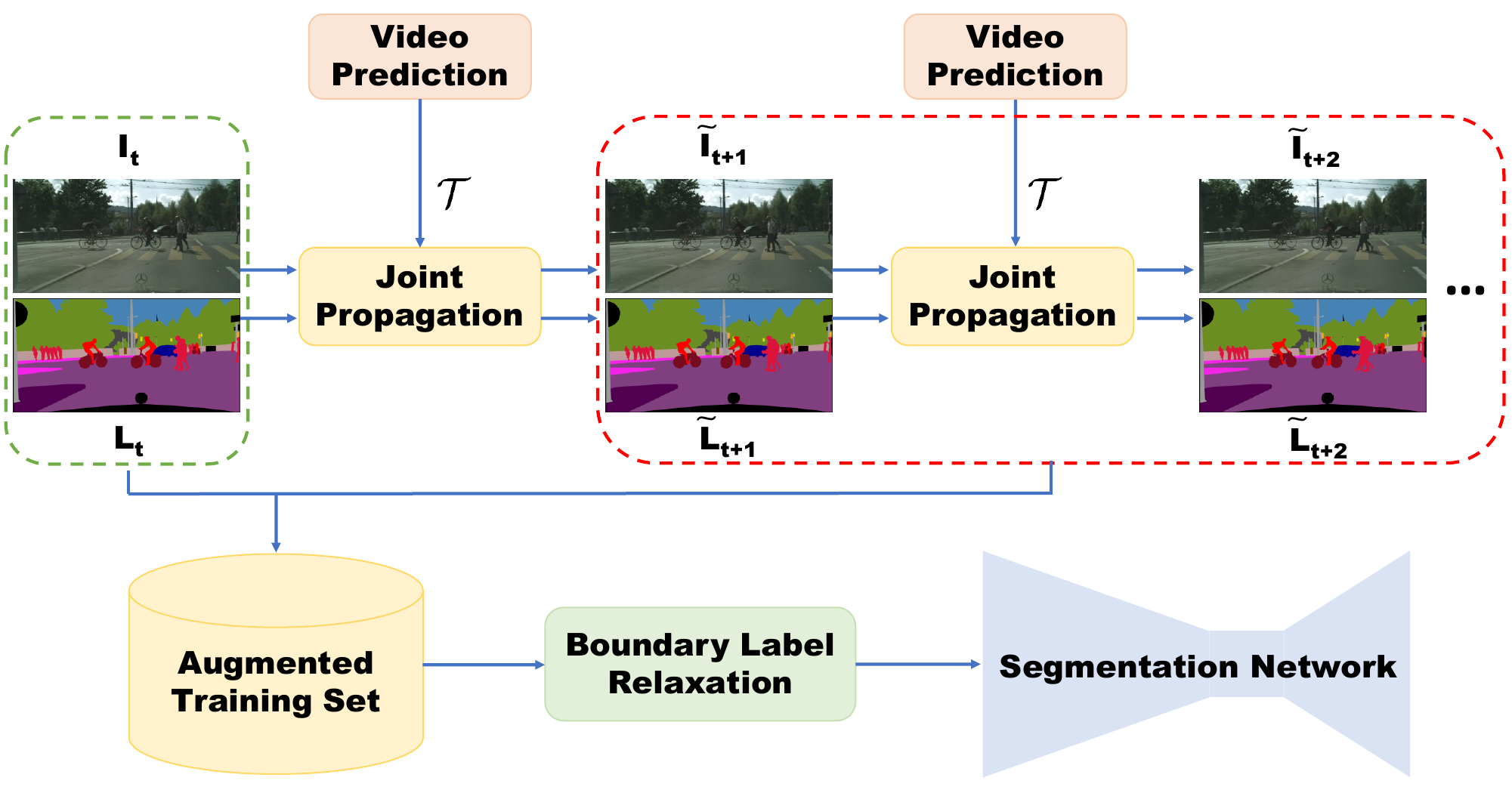

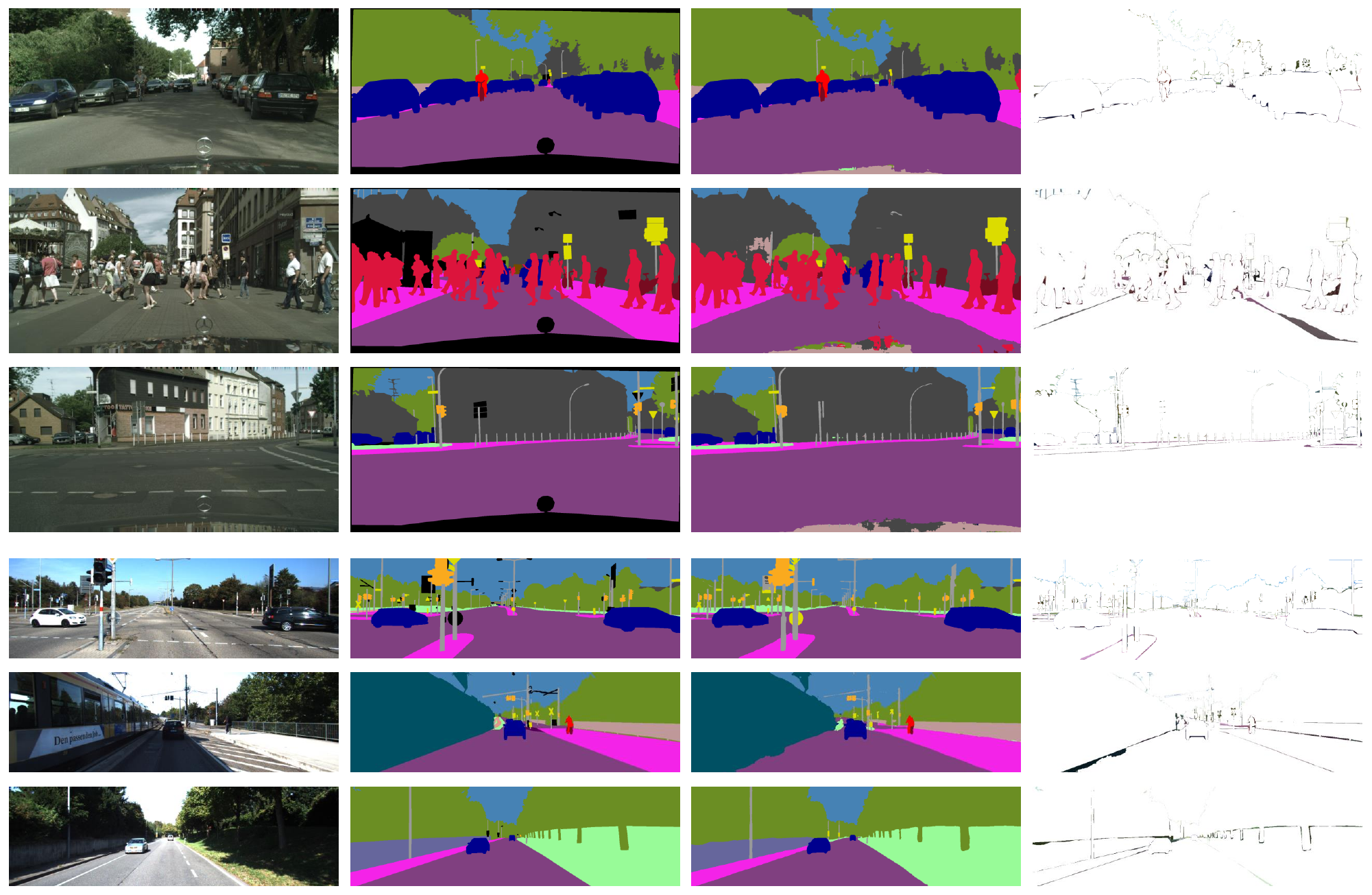

PyTorch implementation of our CVPR 2019 paper on achieving state-of-the-art semantic segmentation using a Deeplabv3-Plus-like architecture with a WideResnet38 trunk. We present a video prediction-based methodology to scale up training sets by synthesizing new training samples, and we propose a novel label relaxation technique to make training objectives robust to label propagation noise.
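To make the label relaxation idea concrete, here is a minimal sketch in plain Python. It assumes the formulation described in the paper, where a boundary pixel may belong to any class present in its neighborhood and the loss is the negative log of the summed probability of those classes. The function names and example values are illustrative and are not taken from this repository.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def relaxed_loss(logits, allowed_classes):
    """Negative log of the total probability assigned to the allowed classes.

    With a single allowed class this reduces to ordinary cross-entropy.
    """
    probs = softmax(logits)
    return -math.log(sum(probs[c] for c in allowed_classes))


# A boundary pixel whose neighborhood contains classes 0 and 1 (made-up scores).
logits = [2.0, 1.5, -1.0]
strict = relaxed_loss(logits, [0])      # standard cross-entropy on class 0
relaxed = relaxed_loss(logits, [0, 1])  # either neighboring class is accepted
```

Because the relaxed loss sums probability over both neighboring classes, it is never larger than the strict loss, so a propagated label that is off by a pixel at a boundary is penalized less.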

Improving Semantic Segmentation via Video Propagation and Label Relaxation

Yi Zhu1*, Karan Sapra2*, Fitsum A. Reda2, Kevin J. Shih2, Shawn Newsam1, Andrew Tao2, Bryan Catanzaro2

1UC Merced, 2NVIDIA Corporation

In CVPR 2019 (* equal contributions).

SDCNet: Video Prediction using Spatially Displaced Convolution

Fitsum A. Reda, Guilin Liu, Kevin J. Shih, Robert Kirby, Jon Barker, David Tarjan, Andrew Tao, Bryan Catanzaro

NVIDIA Corporation

In ECCV 2018.

# Get Semantic Segmentation source code

git clone https://github.com/NVIDIA/semantic-segmentation.git

cd semantic-segmentation

# Build Docker Image

docker build -t nvidia-segmentation -f Dockerfile .

We are working on providing a detailed report; please bear with us.

To propose a model or change for inclusion, please submit a pull request.

Multi-GPU training is supported, and the code provides examples for both training and inference.

For more help, type

python3 scripts/train.py --help

Below are the different base network architectures that are currently provided.

- WideResnet38

- SEResnext(50)-Stride8

The code also supports the following model trunks, but they have not been tested with the current repo.

- SEResnext(101)-Stride8

- Resnet(50,101)-Stride8

- Stride-16

We've included pre-trained models. Download the checkpoints to a folder named pretrained_models.

- pretrained_models/cityscapes_best.pth [1071MB]

- pretrained_models/camvid_best.pth [1071MB]

- pretrained_models/kitti_best.pth [1071MB]

- pretrained_models/sdc_cityscapes_vrec.pth.tar [38MB]

- pretrained_models/FlowNet2_checkpoint.pth.tar [620MB]
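Before running training or evaluation, it can help to confirm that the checkpoints listed above are in place. The following is a minimal sketch; the helper name `missing_checkpoints` is hypothetical, and the check only looks at file names, not at file sizes or contents.

```python
from pathlib import Path

# File names as listed above; sizes are not verified.
EXPECTED_CHECKPOINTS = [
    "cityscapes_best.pth",
    "camvid_best.pth",
    "kitti_best.pth",
    "sdc_cityscapes_vrec.pth.tar",
    "FlowNet2_checkpoint.pth.tar",
]


def missing_checkpoints(folder="pretrained_models"):
    """Return the expected checkpoint names that are not present in folder."""
    root = Path(folder)
    return [name for name in EXPECTED_CHECKPOINTS if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoints:", ", ".join(missing))
    else:
        print("All listed checkpoints found.")
```

You may only need a subset of these files, for example a single dataset checkpoint for inference, so treat the report as informational.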

Dataloaders for Cityscapes, Mapillary, Camvid and Kitti are available in datasets.

Currently, the code supports

- Python 3

- Python Packages
  - numpy
  - PyTorch ( == 0.5.1, for <= 0.5.0 )
  - sklearn
  - h5py
  - scikit-image
  - pillow
  - piexif
  - cffi
  - tqdm
  - dominate
  - tensorboardX
  - opencv-python
  - nose
  - ninja

- An NVIDIA GPU and CUDA 9.0 or higher. Some operations only have GPU implementations.
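A quick way to see which of the Python packages above are missing from an environment is to look up their import names. This is a rough sketch that only tests whether each module can be found; it does not check versions, the GPU, or the CUDA installation. The helper name `missing_modules` is hypothetical.

```python
import importlib.util

# Import names differ from the package names for a few entries:
# PyTorch -> torch, scikit-image -> skimage, pillow -> PIL, opencv-python -> cv2.
REQUIRED_MODULES = [
    "numpy", "torch", "sklearn", "h5py", "skimage", "PIL", "piexif",
    "cffi", "tqdm", "dominate", "tensorboardX", "cv2", "nose", "ninja",
]


def missing_modules(modules=REQUIRED_MODULES):
    """Return the module names that cannot be found in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


print("Missing modules:", missing_modules() or "none")
```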

Dataloader: To run the code, change the data path in config.py to point to your data.

Model Arch: You can change the architecture with the --arch flag of scripts/train.py.

./train.sh
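As an illustration of how an `--arch` style flag can select a model class at run time, the sketch below parses the flag and resolves a dotted path with `importlib`. The dotted `module.ClassName` value format and the helper names are assumptions made for this example; check `python3 scripts/train.py --help` for the values the training script actually accepts.

```python
import argparse
import importlib


def resolve_arch(dotted_name):
    """Import 'package.module.ClassName' and return the named attribute."""
    module_name, _, attr_name = dotted_name.rpartition(".")
    if not module_name:
        raise ValueError("expected a dotted path such as 'module.ClassName'")
    module = importlib.import_module(module_name)
    return getattr(module, attr_name)


def parse_args(argv=None):
    """Parse an illustrative command line that only knows --arch."""
    parser = argparse.ArgumentParser(description="Illustrative --arch parsing")
    parser.add_argument("--arch", required=True,
                        help="dotted path to the model class (assumed format)")
    return parser.parse_args(argv)


# A standard-library class stands in for a real model class here.
args = parse_args(["--arch", "collections.OrderedDict"])
model_cls = resolve_arch(args.arch)
```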

Our inference code supports two evaluation modes: pooling-based and sliding-based. Pooling-based evaluation is faster than sliding-based evaluation but yields slightly lower scores.

./eval.sh <weight_file_location> <result_save_location>
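To give a feel for why sliding-based evaluation is slower, the sketch below lays out overlapping tiles over an image; each tile would then need its own forward pass. The tile size, stride, and function name are illustrative assumptions and are not the values used by the evaluation script.

```python
def sliding_windows(height, width, tile, stride):
    """Return (top, left, bottom, right) boxes that cover the whole image.

    The last row and column of tiles are shifted back so they end at the image border.
    """
    def starts(size):
        if size <= tile:
            return [0]
        positions = list(range(0, size - tile, stride))
        positions.append(size - tile)  # final tile flush with the border
        return positions

    return [
        (top, left, min(top + tile, height), min(left + tile, width))
        for top in starts(height)
        for left in starts(width)
    ]


# Example: a 1024x2048 image with 512-pixel tiles and 50% overlap.
boxes = sliding_windows(height=1024, width=2048, tile=512, stride=256)
```

With these example settings the image is covered by 21 tiles, compared with a single pass over the full image, which is where the extra run time comes from.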

cd ./sdcnet

bash flownet2_pytorch/install.sh

./_eval.sh

Training results for WideResnet38 and SEResnext50 trained in fp16 on DGX-1 (8-GPU V100)

| Model Name | Mean IOU | Training Time |
|---|---|---|
| DeepWV3Plus(no sdc-aug) | 81.4 | ~14 hrs |
| DeepSRNX50V3PlusD_m1(no sdc-aug) | 80.0 | ~9 hrs |
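For readers unfamiliar with the metric, mean IoU averages the per-class intersection-over-union. The sketch below computes it from a confusion matrix using the standard definition; it is not the repository's evaluation code, and the toy counts are made up. The table values appear to be percentages, so a score from this function would be multiplied by 100 to compare.

```python
def mean_iou(confusion):
    """Mean IoU from a square confusion matrix (rows: ground truth, columns: prediction).

    Classes that appear in neither the ground truth nor the predictions are skipped.
    """
    num_classes = len(confusion)
    ious = []
    for c in range(num_classes):
        true_pos = confusion[c][c]
        false_neg = sum(confusion[c]) - true_pos
        false_pos = sum(row[c] for row in confusion) - true_pos
        union = true_pos + false_neg + false_pos
        if union > 0:
            ious.append(true_pos / union)
    return sum(ious) / len(ious)


# Toy two-class example with pixel counts.
confusion = [
    [50, 10],
    [5, 35],
]
score = mean_iou(confusion)
```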

If you find this implementation useful in your work, please acknowledge it appropriately and cite the paper or code accordingly:

@inproceedings{semantic_cvpr19,

author = {Yi Zhu* and Karan Sapra* and Fitsum A. Reda and Kevin J. Shih and Shawn Newsam and Andrew Tao and Bryan Catanzaro},

title = {Improving Semantic Segmentation via Video Propagation and Label Relaxation},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2019},

url = {https://nv-adlr.github.io/publication/2018-Segmentation}

}

* indicates equal contribution

@inproceedings{reda2018sdc,

title={SDC-Net: Video prediction using spatially-displaced convolution},

author={Reda, Fitsum A and Liu, Guilin and Shih, Kevin J and Kirby, Robert and Barker, Jon and Tarjan, David and Tao, Andrew and Catanzaro, Bryan},

booktitle={Proceedings of the European Conference on Computer Vision (ECCV)},

pages={718--733},

year={2018}

}

We encourage people to contribute to our code base by providing suggestions, pointing out issues, or submitting solutions as pull requests. We hope this repo is useful.

Parts of the code were heavily derived from pytorch-semantic-segmentation, inplace-abn, PyTorch, ClementPinard/FlowNetPytorch, and Cadene.

Our initial models used SyncBN from Synchronized Batch Norm, but they have since been ported to Apex SyncBN, developed by Jie Jiang.

We would also like to thank Ming-Yu Liu and Peter Kontschieder.

- 4 spaces for indentation rather than tabs

- 100 character line length

- PEP8 formatting
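The first two rules above are easy to check mechanically. The following is a small sketch of such a check; the function name is hypothetical, and it does not cover the rest of PEP8, for which a dedicated linter such as pycodestyle or flake8 is a better fit.

```python
def style_violations(source, max_length=100):
    """Return (line_number, message) pairs for tab indentation and overlong lines."""
    problems = []
    for number, line in enumerate(source.splitlines(), start=1):
        indent = line[: len(line) - len(line.lstrip())]
        if "\t" in indent:
            problems.append((number, "tab used for indentation"))
        if len(line) > max_length:
            problems.append((number, "line longer than %d characters" % max_length))
    return problems


# Sample input with one tab-indented line and one line of 104 characters.
sample = "def f():\n\treturn 1\n" + "x = " + "1" * 100 + "\n"
report = style_violations(sample)
```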