This repository provides datasets and code for preprocessing, training and testing models for the paper:

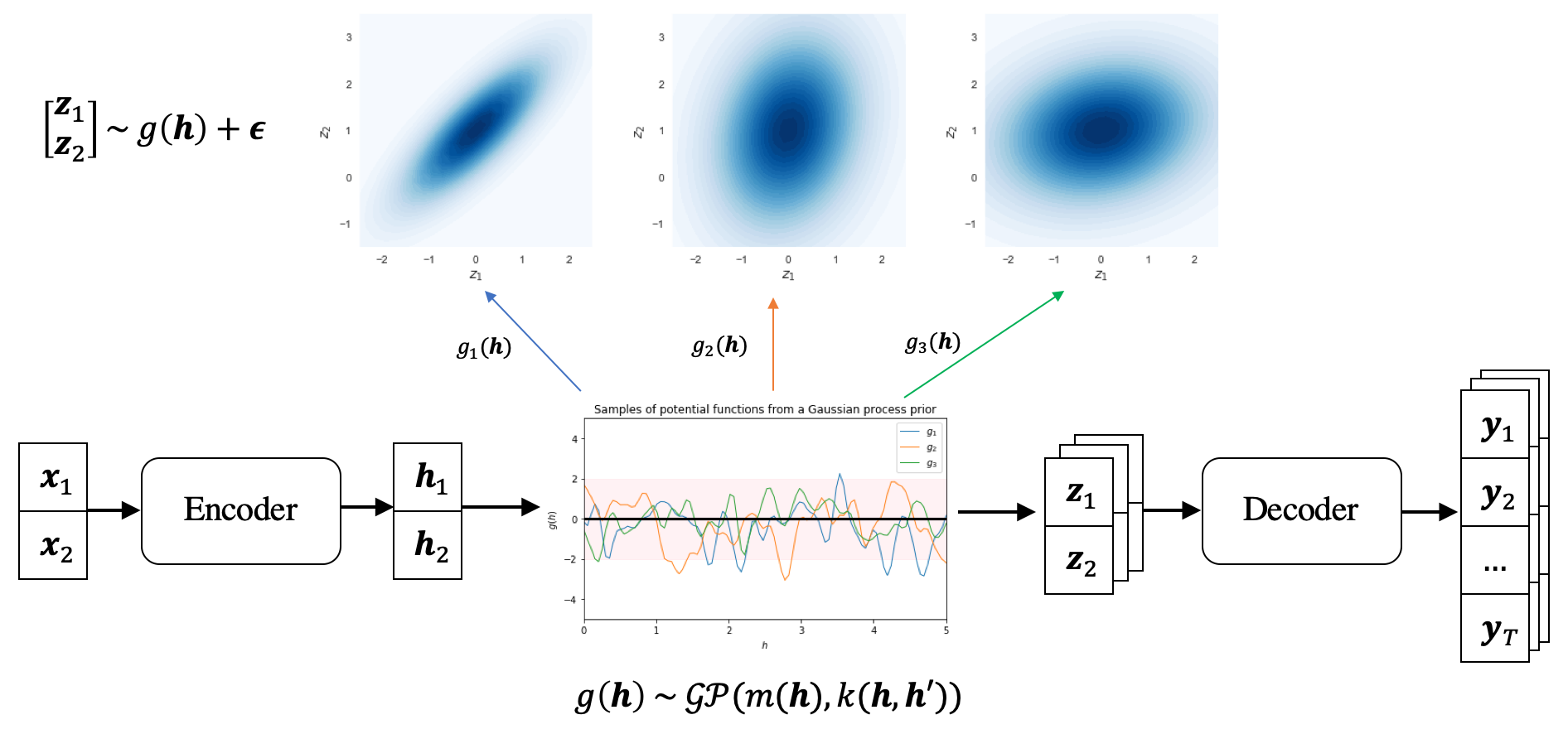

Diverse Text Generation via Variational Encoder-Decoder Models with Gaussian Process Priors

Wanyu Du, Jianqiao Zhao, Liwei Wang and Yangfeng Ji

ACL 2022 6th Workshop on Structured Prediction for NLP

The following command installs all necessary packages:

```bash
pip install -r requirements.txt
```

The project was tested using Python 3.6.6.

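- Twitter URL includes `trn.tsv`, `val.tsv` and `tst.tsv`, where each line has the format `source_sentence \t reference_sentence` (a source sentence and its reference, separated by a tab).
- GYAFC has two sub-domains, `em` (Entertainment & Music) and `fr` (Family & Relationships); please request and download the data as described in the original GYAFC paper.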
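To sanity-check the Twitter URL format described above, here is a minimal sketch for reading one split into (source, reference) pairs. It assumes UTF-8 files with exactly one tab per line and no header row; `parse_pairs` and `load_split` are hypothetical helpers, not part of this repository.

```python
import csv
from pathlib import Path
from typing import Iterable, List, Tuple


def parse_pairs(lines: Iterable[str]) -> List[Tuple[str, str]]:
    """Parse lines of the form 'source_sentence<TAB>reference_sentence'."""
    pairs = []
    # QUOTE_NONE keeps any quote characters inside tweets as literal text.
    reader = csv.reader(lines, delimiter="\t", quoting=csv.QUOTE_NONE)
    for line_no, row in enumerate(reader, start=1):
        if not row:  # csv.reader yields [] for blank lines; skip them
            continue
        if len(row) != 2:
            raise ValueError(
                f"line {line_no}: expected 2 tab-separated fields, got {len(row)}"
            )
        source, reference = row
        pairs.append((source.strip(), reference.strip()))
    return pairs


def load_split(data_dir: str, split: str) -> List[Tuple[str, str]]:
    """Read <data_dir>/<split>.tsv, where split is e.g. 'trn', 'val' or 'tst'."""
    path = Path(data_dir) / f"{split}.tsv"
    with open(path, encoding="utf-8", newline="") as f:
        return parse_pairs(f)
```

For example, `load_split("data/twitter_url", "trn")` would read `data/twitter_url/trn.tsv`, assuming the split files sit directly in that directory.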

Train the LSTM-based variational encoder-decoder with GP priors:

```bash
cd models/pg/
python main.py --task train --data_file ../../data/twitter_url \
    --model_type gp_full --kernel_v 65.0 --kernel_r 0.0001
```

where `--data_file` indicates the path to the training data, `--model_type` indicates which prior to use (`copynet`, `normal`, or `gp_full`), and `--kernel_v` and `--kernel_r` specify the hyper-parameters of the GP prior's kernel.
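The README does not spell out the kernel's functional form. As a minimal sketch, assume a squared-exponential (RBF) kernel in which `--kernel_v` acts as the signal variance and `--kernel_r` as an inverse squared length scale, k(x, x') = v · exp(−r · ‖x − x'‖²). The exact parameterization in `main.py` may differ, and `rbf_kernel` and `kernel_matrix` are hypothetical names.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def rbf_kernel(x: Vector, y: Vector, kernel_v: float, kernel_r: float) -> float:
    """Assumed form: k(x, y) = kernel_v * exp(-kernel_r * ||x - y||^2)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same dimension")
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return kernel_v * math.exp(-kernel_r * sq_dist)


def kernel_matrix(points: Sequence[Vector], kernel_v: float, kernel_r: float) -> List[List[float]]:
    """Covariance matrix K[i][j] = k(points[i], points[j]) over the input vectors."""
    return [[rbf_kernel(p, q, kernel_v, kernel_r) for q in points] for p in points]


# Example with the LSTM settings from the training command (kernel_v=65.0, kernel_r=0.0001).
K = kernel_matrix([[0.0, 0.0], [1.0, 0.0]], kernel_v=65.0, kernel_r=0.0001)
```

Under this assumption, a larger `--kernel_v` scales every prior covariance up, while a smaller `--kernel_r` makes the prior correlation between two inputs decay more slowly with their distance.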

Train the transformer-based variational encoder-decoder with GP priors:

```bash
cd models/t5/
python t5_gpvae.py --task train --dataset twitter_url \
    --kernel_v 512.0 --kernel_r 0.001
```

where `--dataset` indicates the name of the training dataset (here `twitter_url`), and `--kernel_v` and `--kernel_r` specify the hyper-parameters of the GP prior's kernel.
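A GP prior makes the latent variables jointly Gaussian, z ~ N(0, K), where K is the kernel (covariance) matrix determined by `--kernel_v` and `--kernel_r`. The sketch below assumes a zero-mean prior and an already computed K, and draws samples through a Cholesky factorization, z = Lε with ε ~ N(0, I). `cholesky` and `sample_gp_prior` are hypothetical helpers rather than the repository's code, and a small jitter is added to the diagonal for numerical stability.

```python
import math
import random
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def cholesky(cov: Matrix, jitter: float = 1e-6) -> List[List[float]]:
    """Lower-triangular L with L L^T = cov + jitter * I (Cholesky-Banachiewicz)."""
    n = len(cov)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                diag = cov[i][i] + jitter - s
                if diag <= 0.0:
                    raise ValueError("covariance matrix is not positive definite")
                lower[i][i] = math.sqrt(diag)
            else:
                lower[i][j] = (cov[i][j] - s) / lower[j][j]
    return lower


def sample_gp_prior(
    cov: Matrix, num_samples: int, rng: random.Random, jitter: float = 1e-6
) -> List[List[float]]:
    """Draw num_samples vectors z ~ N(0, cov) via z = L @ eps, eps ~ N(0, I)."""
    lower = cholesky(cov, jitter)
    n = len(cov)
    samples = []
    for _ in range(num_samples):
        eps = [rng.gauss(0.0, 1.0) for _ in range(n)]
        # L is lower-triangular, so only the first i + 1 terms contribute to z[i].
        samples.append([sum(lower[i][k] * eps[k] for k in range(i + 1)) for i in range(n)])
    return samples
```

For instance, `sample_gp_prior([[4.0, 2.0], [2.0, 3.0]], 5, random.Random(0))` returns five correlated two-dimensional draws; factorizing K once and reusing L keeps each additional sample cheap.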

Test the LSTM-based variational encoder-decoder with GP priors:

```bash
cd models/pg/
python main.py --task decode --data_file ../../data/twitter_url \
    --model_type gp_full --kernel_v 65.0 --kernel_r 0.0001 \
    --decode_from sample \
    --model_file /path/to/best/checkpoint
```

where `--data_file` indicates the path to the test data, `--model_type` indicates which prior to use (`copynet`, `normal`, or `gp_full`), `--kernel_v` and `--kernel_r` specify the hyper-parameters of the GP prior's kernel, and `--decode_from` selects whether generation conditions on the latent mean `z_mean` (`mean`) or on a randomly sampled `z` (`sample`).
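The difference between `--decode_from mean` and `--decode_from sample` can be illustrated with the usual VAE reparameterization. This is a minimal sketch, assuming the encoder outputs a mean `z_mean` and a log-variance `z_logvar` per latent dimension; `select_latent` is a hypothetical helper, not the repository's implementation.

```python
import math
import random
from typing import List, Optional, Sequence


def select_latent(
    z_mean: Sequence[float],
    z_logvar: Sequence[float],
    decode_from: str,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Choose the latent vector the decoder conditions on.

    'mean'   -> z = z_mean (deterministic)
    'sample' -> z = z_mean + exp(0.5 * z_logvar) * eps, with eps ~ N(0, I)
    """
    if len(z_mean) != len(z_logvar):
        raise ValueError("z_mean and z_logvar must have the same length")
    if decode_from == "mean":
        return list(z_mean)
    if decode_from == "sample":
        rng = rng if rng is not None else random.Random()
        # exp(0.5 * logvar) converts a log-variance into a standard deviation.
        return [
            mu + math.exp(0.5 * logvar) * rng.gauss(0.0, 1.0)
            for mu, logvar in zip(z_mean, z_logvar)
        ]
    raise ValueError(f"decode_from must be 'mean' or 'sample', got {decode_from!r}")
```

Decoding from `mean` is deterministic for a given input, whereas `sample` draws a fresh `z` on each call, so repeated decoding of the same source can yield different outputs.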

Test the transformer-based variational encoder-decoder with GP priors:

```bash
cd models/t5/
python t5_gpvae.py --task eval --dataset twitter_url \
    --kernel_v 512.0 --kernel_r 0.001 \
    --from_mean \
    --timestamp '2021-02-14-04-57-04' \
    --ckpt '30000'  # load best checkpoint
```

where `--dataset` indicates the name of the test dataset, `--kernel_v` and `--kernel_r` specify the hyper-parameters of the GP prior's kernel, `--from_mean` controls whether generation conditions on `z_mean` or on a randomly sampled `z`, and `--timestamp` and `--ckpt` together identify the best checkpoint to load.

If you find this work useful for your research, please cite our paper:

```bibtex
@article{du2022diverse,
  title={Diverse Text Generation via Variational Encoder-Decoder Models with Gaussian Process Priors},
  author={Du, Wanyu and Zhao, Jianqiao and Wang, Liwei and Ji, Yangfeng},
  journal={arXiv preprint arXiv:2204.01227},
  year={2022},
  url={https://arxiv.org/abs/2204.01227}
}
```