For those who want to use the piglet dataset for CT denoising research and use this work as a baseline, please refer to this issue for details on how I used it.

The piglet dataset we used in the publication is now open for download! Please find the link on my personal webpage. (Note: for non-commercial use only.)

This repo provides the trained denoising model and testing code for low dose CT denoising as described in our paper.

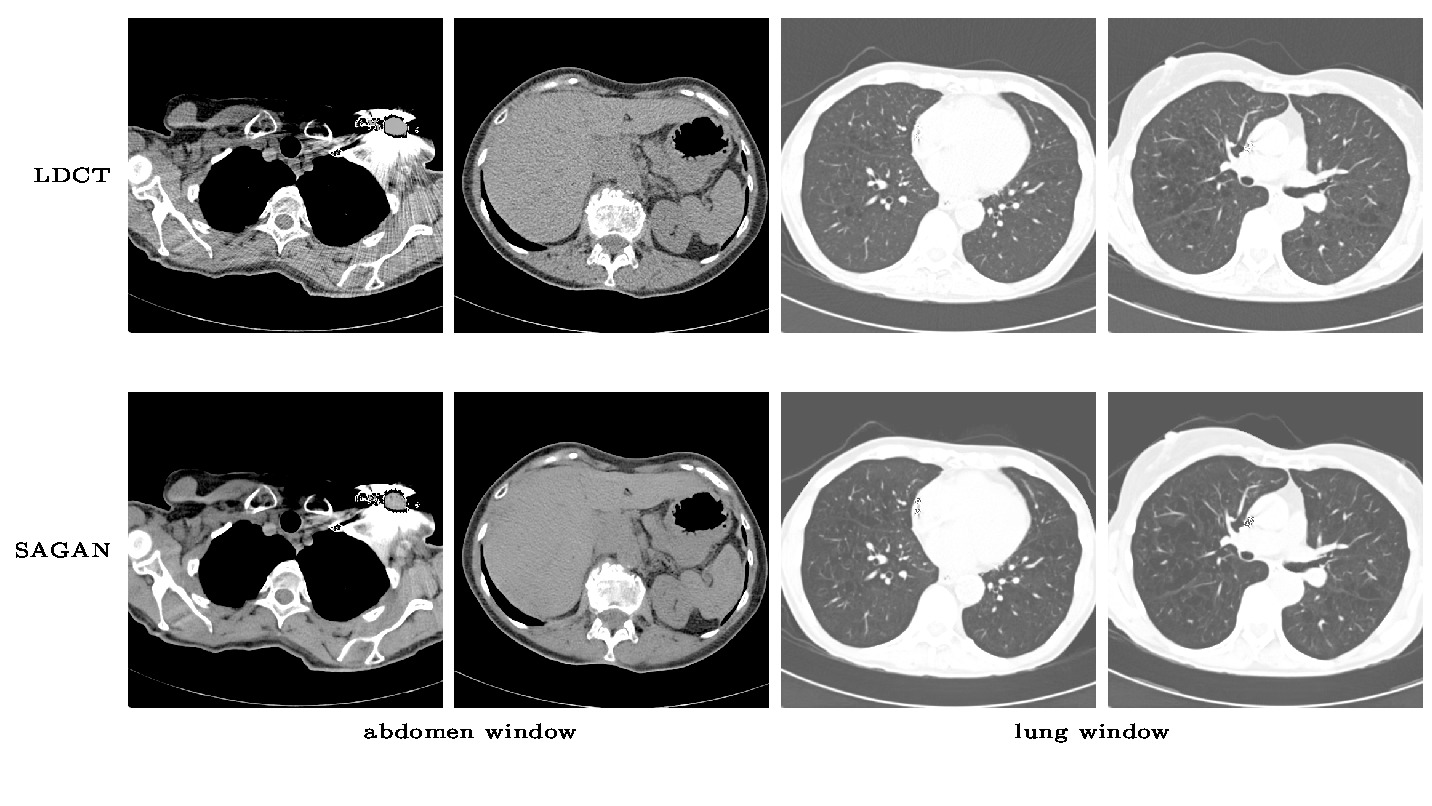

Here are some randomly picked denoised results on low dose CTs from this Kaggle challenge.

To get the best results from this repo, please make sure the dose level of the LDCTs is larger than 0.71 mSv.

- Linux or OSX

- NVIDIA GPU

- Python 3.x

- Torch7

- Install Torch7

- Install torch packages nngraph and hdf5

luarocks install nngraph

luarocks install hdf5

- Install Python 3.x (recommend using Anaconda)

- Install python dependencies

pip install -r requirements.txt

- Clone this repo:

git clone git@github.com:xinario/SAGAN.git

cd SAGAN

- Download the pretrained denoising model from here and put it into the "checkpoints/SAGAN" folder

- Prepare your test set with the provided python script

#make a directory inside the root SAGAN folder to store your raw dicoms, e.g. ./dicoms

mkdir dicoms

#then put all your low dose CT images of dicom format into this folder and run

python pre_process.py --input ./dicoms --output ./datasets/experiment/test

#all your test images would now be saved as uint16 png format inside folder ./datasets/experiment/test.
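As a quick sanity check after pre-processing, one could confirm that the converted files really are 16-bit PNGs. The sketch below is not part of this repo: `png_header` and `find_non_16bit` are hypothetical helper names. It relies only on the standard PNG layout (an 8-byte signature followed by the IHDR chunk) and assumes the output folder used above.

```python
import struct
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_header(path):
    """Return (width, height, bit_depth, color_type) from a PNG's IHDR chunk."""
    with open(path, "rb") as f:
        head = f.read(26)
    # Bytes 0-7: signature, 8-11: chunk length, 12-15: chunk type, 16-25: IHDR fields
    if len(head) < 26 or head[:8] != PNG_SIGNATURE or head[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a valid PNG file")
    return struct.unpack(">IIBB", head[16:26])


def find_non_16bit(folder):
    """List the names of PNG files in `folder` whose bit depth is not 16."""
    return [p.name for p in sorted(Path(folder).glob("*.png"))
            if png_header(p)[2] != 16]


if __name__ == "__main__":
    # Assumed location: the --output folder passed to pre_process.py above
    folder = Path("./datasets/experiment/test")
    if folder.is_dir():
        bad = find_non_16bit(folder)
        print("all PNGs are 16-bit" if not bad else f"not 16-bit: {bad}")
    else:
        print(f"{folder} does not exist yet; run pre_process.py first")
```

Only the first 26 bytes of each file are read, so the check stays fast even for large test sets.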

- Test the model:

DATA_ROOT=./datasets/experiment name=SAGAN which_direction=AtoB phase=test th test.lua

#the results are saved in ./result/SAGAN/latest_net_G_test/result.h5

- Display the result with a specific window, e.g. abdomen. The window type can be set to 'abdomen', 'bone' or 'none'

python post_process.py --window 'abdomen'

Now you can view the result by opening the html file index.html in the root folder.
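For readers unfamiliar with CT display windows, the idea behind the `--window` option can be sketched as a linear mapping from Hounsfield units (HU) to gray levels. This is a minimal illustration, not the code in post_process.py. The `WINDOWS` presets are commonly quoted center/width values chosen here as assumptions, and `apply_window` is a hypothetical helper name.

```python
# Illustrative (center, width) presets in Hounsfield units.
# These are commonly quoted values, NOT read from post_process.py.
WINDOWS = {"abdomen": (40, 400), "bone": (400, 1800)}


def apply_window(hu_values, center, width):
    """Map HU values to 8-bit gray levels with a linear display window."""
    low = center - width / 2
    high = center + width / 2
    out = []
    for hu in hu_values:
        # Values outside the window saturate to black or white
        clipped = min(max(hu, low), high)
        out.append(round((clipped - low) / (high - low) * 255))
    return out


if __name__ == "__main__":
    center, width = WINDOWS["abdomen"]
    # Air (-1000 HU) saturates to black, dense bone (1000 HU) to white
    print(apply_window([-1000, 0, 40, 1000], center, width))
```

A narrow window such as the abdomen preset spreads the available gray levels over soft-tissue values, which is why noise differences between low dose and denoised images are easier to see there than in a wide bone window.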

If you find it useful and are using the code/model/dataset provided here in a publication, please cite our paper:

Yi, X. & Babyn, P. J Digit Imaging (2018). https://doi.org/10.1007/s10278-018-0056-0

Code borrows heavily from pix2pix