This repo is a suite of GPGPU benchmarks used both to collect motivation data for GPU resource virtualization and to evaluate our proposed solutions.

- bfs

- cutcp

- histo

- lbm

- mri-gridding

- mri-q

- sad

- sgemm

- spmv

- stencil

- tpacf

- sgemm

- wmma

- tensorTensorCoreGemm

- ImageClassification-Inception_v3

- MachineTranslation-Seq2Seq

You can modify the following parameters in the config file PROJECT_ROOT/scripts/run/path.sh:

- usrname: Your own username. Some tests might need to run with sudo rights, so the experiment result folders will be owned by root by default. The run scripts automatically change ownership of these folders to you at the end of the test run.

- CUDAHOME: Path to the CUDA root folder.

- Arch version: SM version of your CUDA device.

- Download and extract datasets to PARBOIL_ROOT/datasets

- To compile all benchmarks: source PARBOIL_ROOT/compile.sh

- To clean all benchmarks: source PARBOIL_ROOT/clean.sh

Run $PROJECT_ROOT/virbench.sh <compile | clean> <parboil | cutlass | cuda-sdk> to compile or clean projects. The script builds ALL benchmarks within the chosen benchmark suite.

Run $PROJECT_ROOT/virbench.sh run <timeline | duration | metrics | nvvp> <# of iterations> <test.config> to run benchmarks. The run options and test.config are explained in the following sections. The # of iterations indicates how many times the tests defined in test.config are run, which is useful when multiple runs are required for data processing.
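When driving many runs from a script, it can help to assemble and validate the command line before launching it. The following is a minimal sketch in Python; the helper name `build_run_command` and the example paths are hypothetical and not part of this repo. Only the argument order is taken from the usage line above.

```python
# Run modes accepted by "virbench.sh run", as listed in the usage line above.
RUN_MODES = ("timeline", "duration", "metrics", "nvvp")

def build_run_command(project_root, mode, iterations, config_path):
    """Build the argv list for one virbench.sh run (hypothetical helper)."""
    if mode not in RUN_MODES:
        raise ValueError(f"unknown run mode: {mode!r}")
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    script = f"{project_root}/virbench.sh"
    return [script, "run", mode, str(iterations), config_path]

# Example with placeholder paths; nothing is executed here.
cmd = build_run_command("/path/to/project", "duration", 3, "test.config")
print(" ".join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` on a machine where the repo is set up.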

The concurrent execution options invoke three kinds of runs: an isolated run (one application at a time), a time-multiplexed run (all applications within the same test run simultaneously under the hardware's time-sliced scheduler), and an MPS run (similar to the time-multiplexed run but using MPS's spatial multiplexing scheduler).

Concurrent execution:

- timeline: Capture the execution timeline of the benchmark only. Output is a .prof file that can be imported by NVIDIA's Visual Profiler (nvvp).

- duration: Capture the runtime duration of each kernel within the benchmark. Output is a .csv file.

Isolated execution:

- metrics: Evaluate each kernel within the benchmark in terms of a predefined set of metrics supported by nvprof. The default metrics are listed in NVPROF Metrics Considered. The metrics evaluated by the script can be modified in PROJECT_ROOT/scripts/run/metricsfile. You may run CUDAHOME/bin/nvprof --query-metrics on Volta and earlier devices to find a complete list of metrics supported by nvprof. The metrics option is not available for Turing and newer devices because nvprof is deprecated there (support for the newer profiling tools is future work). Output is a .csv file.

- nvvp: Capture both the timeline and all analysis metric statistics of the benchmark. The output is a .prof file that can be imported by nvvp. With the analysis metric information, nvvp also provides detailed performance analysis (memory-bound, compute-bound, etc.) and optimization suggestions for the benchmark.

The test config describes which benchmarks you would like to run in parallel for each test. An example config looks like the following:

testcase_no, device_name, exec1_name, exec2_name, keyword_exec1, keyword_exec2, time, mps

f7,Volta,cutlass_sgemm_512,cutlass_sgemm_2048,spmv,Sgemm, 1, 1

The first line always contains the same headers. Each of the following lines is a new test case.

- testcase_no: A unique identifier of the test, which will be the name of the generated experiment result folder under $PROJECT_ROOT/tests/experiment.

- device_name: A user-friendly description of your device, used by the postprocessing scripts for plot titles.

- exec1_name and exec2_name: The benchmark names defined in $PROJECT_ROOT/scripts/run/run_path.sh.

- keyword_exec1 and keyword_exec2: User-friendly descriptions of the benchmarks, used by the postprocessing scripts.

- time: Whether to run the benchmark combination in time-multiplexed mode. A value of 1 indicates True while 0 indicates False.

- mps: Whether to run the benchmark combination in MPS mode. A value of 1 indicates True while 0 indicates False.
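To illustrate the config layout described above, here is a minimal Python sketch that reads the example config and converts the time and mps flags to booleans. The function name `parse_test_config` is hypothetical; this is not the parser used by the repo's own scripts, only an example of how the format can be read with the standard `csv` module.

```python
import csv
import io

# Example config text copied from this README; a real run would read a file.
CONFIG_TEXT = """testcase_no, device_name, exec1_name, exec2_name, keyword_exec1, keyword_exec2, time, mps
f7,Volta,cutlass_sgemm_512,cutlass_sgemm_2048,spmv,Sgemm, 1, 1
"""

def parse_test_config(text):
    """Return one dict per test case, with time/mps converted to booleans."""
    # skipinitialspace drops the blank that follows some of the commas.
    reader = csv.DictReader(io.StringIO(text), skipinitialspace=True)
    tests = []
    for row in reader:
        row = {key.strip(): value.strip() for key, value in row.items()}
        row["time"] = row["time"] == "1"
        row["mps"] = row["mps"] == "1"
        tests.append(row)
    return tests

tests = parse_test_config(CONFIG_TEXT)
print(tests[0]["testcase_no"], tests[0]["exec1_name"], tests[0]["mps"])
```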

All profiled results are stored under PROJECT_ROOT/tests/experiment. A set of postprocessing scripts written with Python Matplotlib is available for your convenience. They are located in PROJECT_ROOT/scripts/process-data. Follow the readme in that folder to set up a Python virtual environment for the sample scripts.

Under the subfolder process-data/primitives, frontend.py includes some common functions for reading and parsing experiment results, while backend.py includes functions for calculating metrics (weighted throughput, weighted speedup, fairness).
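As a rough illustration of these metrics, the sketch below computes weighted speedup and fairness from per-application runtimes. The definitions are assumptions based on common usage in multiprogram evaluation (weighted speedup as the sum of each application's isolated-to-shared runtime ratio, fairness as the ratio of the smallest to the largest such ratio); backend.py may define them differently, and the function names and runtimes here are hypothetical.

```python
def normalized_progress(isolated_time, shared_time):
    """Fraction of its isolated-run speed an application keeps when sharing."""
    return isolated_time / shared_time

def weighted_speedup(isolated_times, shared_times):
    """Assumed definition: sum of per-application normalized progress."""
    return sum(normalized_progress(i, s)
               for i, s in zip(isolated_times, shared_times))

def fairness(isolated_times, shared_times):
    """Assumed definition: min/max of normalized progress (1.0 = equal slowdown)."""
    progress = [normalized_progress(i, s)
                for i, s in zip(isolated_times, shared_times)]
    return min(progress) / max(progress)

# Hypothetical runtimes in milliseconds for applications A and B.
isolated = [10.0, 30.0]   # each application running alone
shared = [20.0, 40.0]     # both applications running concurrently

ws = weighted_speedup(isolated, shared)
fair = fairness(isolated, shared)
print(f"weighted speedup = {ws:.3f}, fairness = {fair:.3f}")
```

With these assumed definitions, a weighted speedup above 1.0 means the concurrent run completes more combined work per unit time than running the two applications back to back.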

Some sample wrapper scripts under process-data demonstrate how to use these primitive scripts. sample-individual.py takes in a test.config file and outputs bar graphs (pyplot by default; uncomment tikz_save in the script to get pgfplots output in LaTeX format) showing the weighted speedup and fairness of each individual test (benchmark combination). sample-geomean takes in a list of test.config files and outputs the geomean of weighted speedup and fairness across all tests within each test.config file. This is useful for comparing test cases that fall into different categories, such as compute+compute intensive, compute+memory intensive, etc.
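The per-config geomean described above can be sketched as follows. This is an illustrative example only: the helper `geomean`, the category names, and the weighted speedup values are hypothetical and are not taken from the sample scripts or from real measurements.

```python
import math

def geomean(values):
    """Geometric mean of positive values, computed in log space."""
    if not values:
        raise ValueError("geomean needs at least one value")
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Hypothetical weighted speedups, grouped by the test.config they came from.
results = {
    "compute+compute": [1.0, 4.0],
    "compute+memory": [2.0, 8.0],
}

summary = {name: geomean(vals) for name, vals in results.items()}
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

The geometric mean is a common choice for averaging ratios such as speedups because a single outlier test skews it less than it would skew an arithmetic mean.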

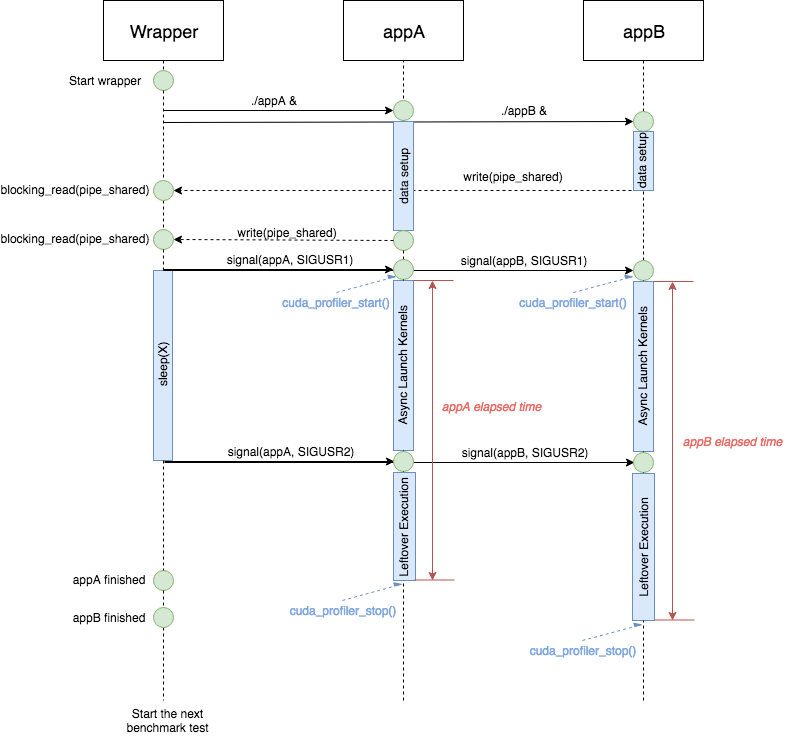

To use our benchmark wrapper script, each benchmark should implement the same interface to synchronize kernel launches. Consider a test comprising two applications, A and B, each invoking different GPU compute kernels. The goal is to capture performance data during the period in which kernels from both A and B execute concurrently. Hence, kernel execution in each application should start at the same time and ideally end at around the same time. Since it is impossible to guarantee that all kernels end simultaneously because kernel runtimes differ, the data processing script calculates the delta of kernel elapsed time between A and B and discards profiled statistics in the tail, where only one application is running. The timing relationship among A, B, and the wrapper script is shown in the timing diagram.
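The tail-discarding step can be sketched as below. This is a simplified illustration under stated assumptions, not the repo's data processing script: the `KernelRecord` type, the helper names, and the timestamps are hypothetical, and the sketch assumes both applications start at time 0 after the synchronized launch.

```python
from dataclasses import dataclass

@dataclass
class KernelRecord:
    name: str
    start: float  # seconds since the synchronized launch (assumed)
    end: float

def overlap_end(records_a, records_b):
    """Time at which the earlier-finishing application completes its last kernel."""
    return min(max(r.end for r in records_a), max(r.end for r in records_b))

def trim_tail(records, cutoff):
    """Keep only kernels that finished while both applications were running."""
    return [r for r in records if r.end <= cutoff]

# Hypothetical timelines: A runs three kernels, B finishes earlier.
app_a = [KernelRecord("a0", 0.0, 1.0), KernelRecord("a1", 1.0, 2.0),
         KernelRecord("a2", 2.0, 3.0)]
app_b = [KernelRecord("b0", 0.0, 1.5), KernelRecord("b1", 1.5, 2.4)]

cutoff = overlap_end(app_a, app_b)
delta = abs(max(r.end for r in app_a) - max(r.end for r in app_b))
kept_a = trim_tail(app_a, cutoff)
kept_b = trim_tail(app_b, cutoff)
print(f"delta = {delta:.1f}s, kept {len(kept_a)} of {len(app_a)} kernels from A")
```

Dropping whole kernels that cross the cutoff is one possible policy; a script could instead scale the statistics of a partially overlapping kernel.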

Each benchmark application should be structured similarly to the code snippet in $PROJECT_ROOT/benchmarks/interface.c.

Your Makefile or CMakeLists should source/include $PROJECT_ROOT/scripts/config for build information such as the CUDA path, CUDA device compute capability, etc.

Also, modify the script $PROJECT_ROOT/scripts/compile/compile.sh to add support for compilation through the main driver script virbench.sh.

Add a unique key for your benchmark and the associated command to run the benchmark in $PROJECT_ROOT/scripts/run/run_path.sh. The unique key will be used by your test.config file, and the run.sh script will load the appropriate command based on the keys listed in the input test.config.

The default nvprof metrics considered are listed below, with the nvprof metric name in brackets:

- DP util: double precision function unit utilization on a scale of 0 to 10 [double_precision_fu_utilization]

- DP effic: ratio of achieved to peak double-precision FP operations [flop_dp_efficiency]

- SP util: [single_precision_fu_utilization]

- SP effic: [flop_sp_efficiency]

- HP util: [half_precision_fu_utilization]

- HP effic: [flop_hp_efficiency]

- DRAM util: [dram_utilization]

- DRAM read throughput: [dram_read_throughput]

- DRAM write throughput: [dram_write_throughput]

- L1/tex hit rate: Hit rate for global load and store in unified l1/tex cache [global_hit_rate]

- L2 hit rate: Hit rate at L2 cache for all requests from texture cache [l2_tex_hit_rate] (not avail. on Volta)

- Shared memory util: on a scale of 0 to 10 [shared_utilization]

- Special func unit util: on a scale of 0 to 10 [special_fu_utilization]

- tensor precision util: [tensor_precision_fu_utilization] (not avail. on CUDA 9.0)

- tensor int8 util: [tensor_int_fu_utilization] (not avail. on CUDA 9.0)