This is the codebase for the paper: Non-stationary Transformers: Exploring the Stationarity in Time Series Forecasting, NeurIPS 2022.

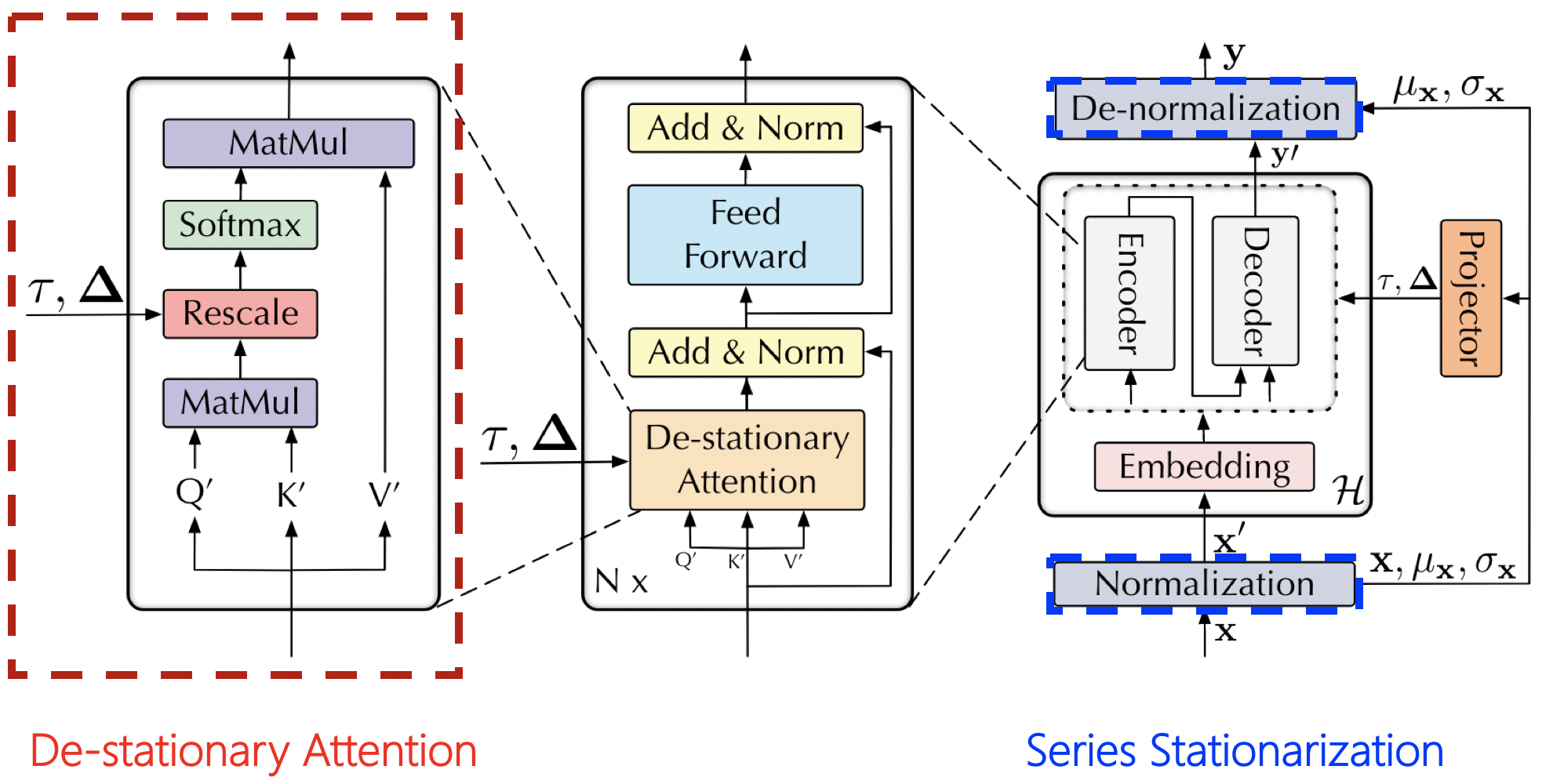

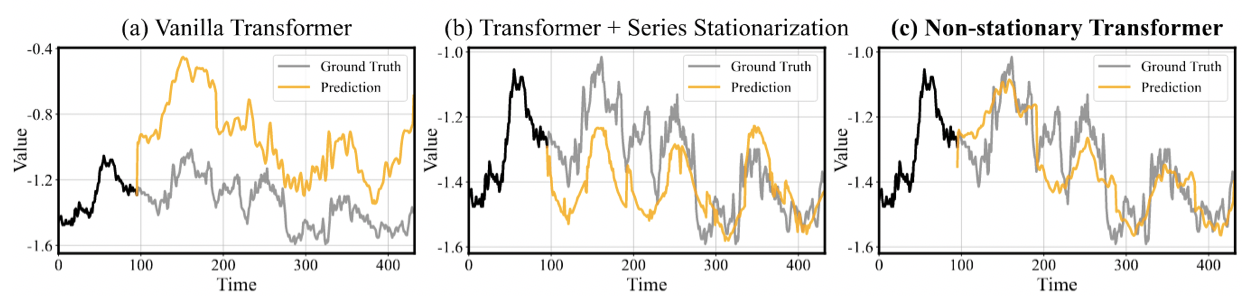

Series Stationarization unifies the statistics of each input and converts the output with restored statistics for better predictability.
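As a rough illustration of the idea, the sketch below normalizes one input window by its own mean and standard deviation, then restores those statistics on the output. It is a minimal single-variable sketch in plain Python, not the repository's implementation; the function names `stationarize` and `de_stationarize`, the `eps` constant, and the toy window are assumptions made for this example.

```python
import math


def stationarize(window, eps=1e-5):
    """Normalize one input window with its own mean and standard deviation.

    Returns the normalized window plus the statistics needed to undo it.
    `eps` (an assumed constant) keeps the division stable for flat windows.
    """
    mean = sum(window) / len(window)
    var = sum((x - mean) ** 2 for x in window) / len(window)
    std = math.sqrt(var + eps)
    normalized = [(x - mean) / std for x in window]
    return normalized, mean, std


def de_stationarize(prediction, mean, std):
    """Restore the input window's statistics on the model output."""
    return [y * std + mean for y in prediction]


# Hypothetical input window; the identity "model" stands in for a forecaster.
window = [10.0, 12.0, 14.0, 16.0]
normalized, mean, std = stationarize(window)
restored = de_stationarize(normalized, mean, std)
```

Because the statistics are computed per window, the model only ever sees inputs with a comparable scale, and the forecast is mapped back to the original scale afterwards.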

De-stationary Attention is devised to recover the intrinsic non-stationary information into temporal dependencies by approximating distinguishable attentions learned from unstationarized series.
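A minimal sketch of how such an attention could be computed, following the paper's formulation Softmax((τ·QKᵀ + 1Δᵀ)/√d_k)·V. Here the scaling factor `tau` and the per-key shift `delta` are simply passed in as numbers; in the paper they are learned from the unstationarized series, which this sketch does not model. The function name and the toy inputs are assumptions for illustration only.

```python
import math


def de_stationary_attention(Q, K, V, tau, delta):
    """Compute Softmax((tau * Q K^T + 1 delta^T) / sqrt(d_k)) V.

    Q, K, V are lists of row vectors; `tau` is a positive scalar and
    `delta` holds one shift per key (both assumed given here).
    """
    d_k = len(K[0])
    output = []
    for q in Q:
        # Rescaled and shifted scores of this query against every key.
        scores = [
            (tau * sum(a * b for a, b in zip(q, k)) + delta[j]) / math.sqrt(d_k)
            for j, k in enumerate(K)
        ]
        # Numerically stable softmax over the keys.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of the value vectors.
        output.append(
            [sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))]
        )
    return output


# Toy inputs (hypothetical): with tau = 1 and delta = 0 this reduces to
# standard scaled dot-product attention.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
plain = de_stationary_attention(Q, K, V, tau=1.0, delta=[0.0, 0.0])
sharper = de_stationary_attention(Q, K, V, tau=4.0, delta=[0.0, 0.0])
```

A larger `tau` sharpens the attention weights, while `delta` shifts the scores of individual keys.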

- Install Python 3.7 and the necessary dependencies.

pip install -r requirements.txt

- All six benchmark datasets can be obtained from Google Drive or Tsinghua Cloud.

We provide the Non-stationary Transformer experiment scripts and hyperparameters for all benchmark datasets under the folder ./scripts.

# Transformer with our framework

bash ./scripts/ECL_script/ns_Transformer.sh

bash ./scripts/Traffic_script/ns_Transformer.sh

bash ./scripts/Weather_script/ns_Transformer.sh

bash ./scripts/ILI_script/ns_Transformer.sh

bash ./scripts/Exchange_script/ns_Transformer.sh

bash ./scripts/ETT_script/ns_Transformer.sh

# Transformer baseline

bash ./scripts/ECL_script/Transformer.sh

bash ./scripts/Traffic_script/Transformer.sh

bash ./scripts/Weather_script/Transformer.sh

bash ./scripts/ILI_script/Transformer.sh

bash ./scripts/Exchange_script/Transformer.sh

bash ./scripts/ETT_script/Transformer.sh

We also provide the scripts for other attention-based models (Informer, Autoformer), for example:

# Informer promoted by our Non-stationary framework

bash ./scripts/Exchange_script/Informer.sh

bash ./scripts/Exchange_script/ns_Informer.sh

# Autoformer promoted by our Non-stationary framework

bash ./scripts/Weather_script/Autoformer.sh

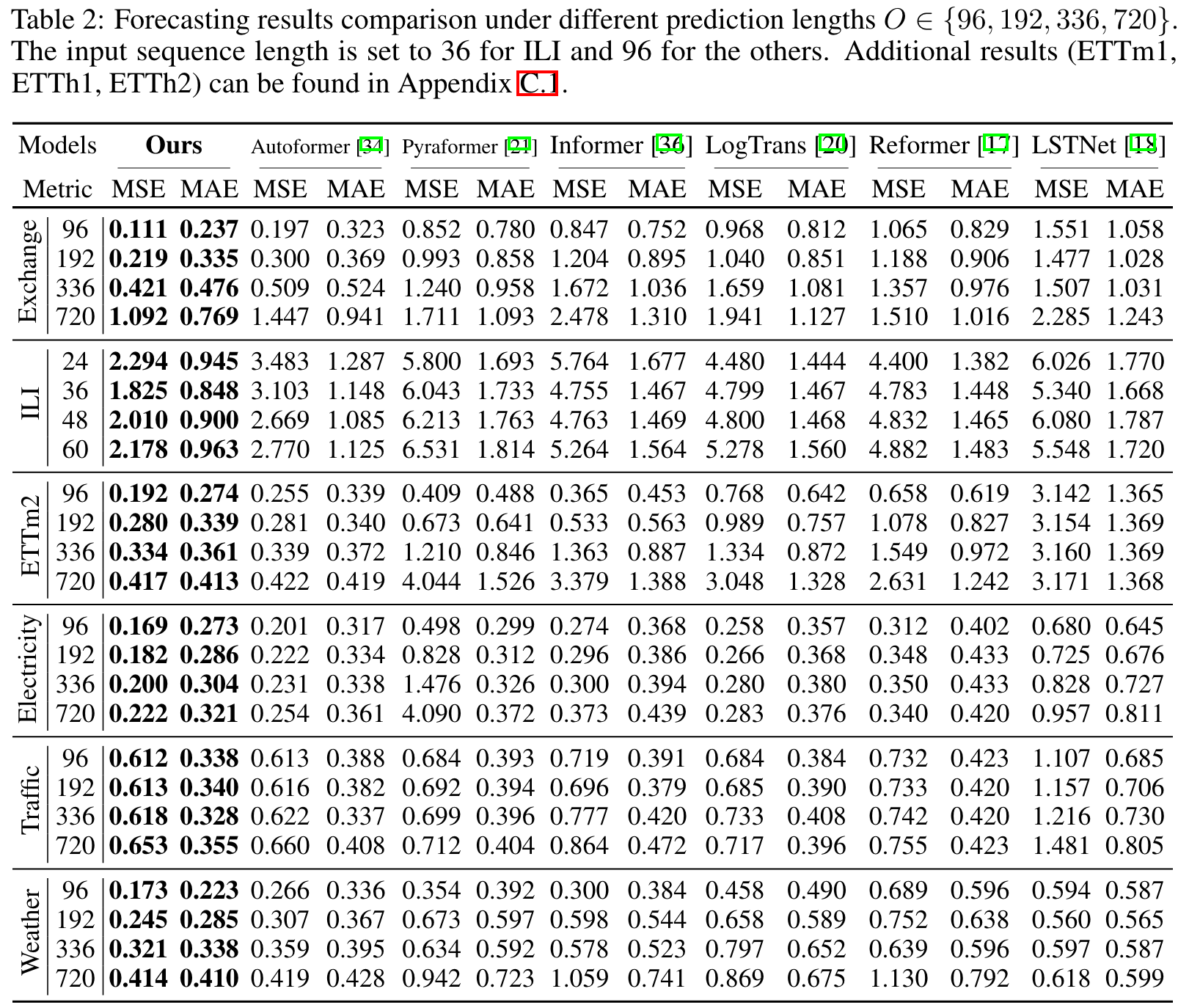

bash ./scripts/Weather_script/ns_Autoformer.shFor multivariate forecasting results, the vanilla Transformer equipped with our framework consistently achieves state-of-the-art performance in all six benchmarks and prediction lengths.

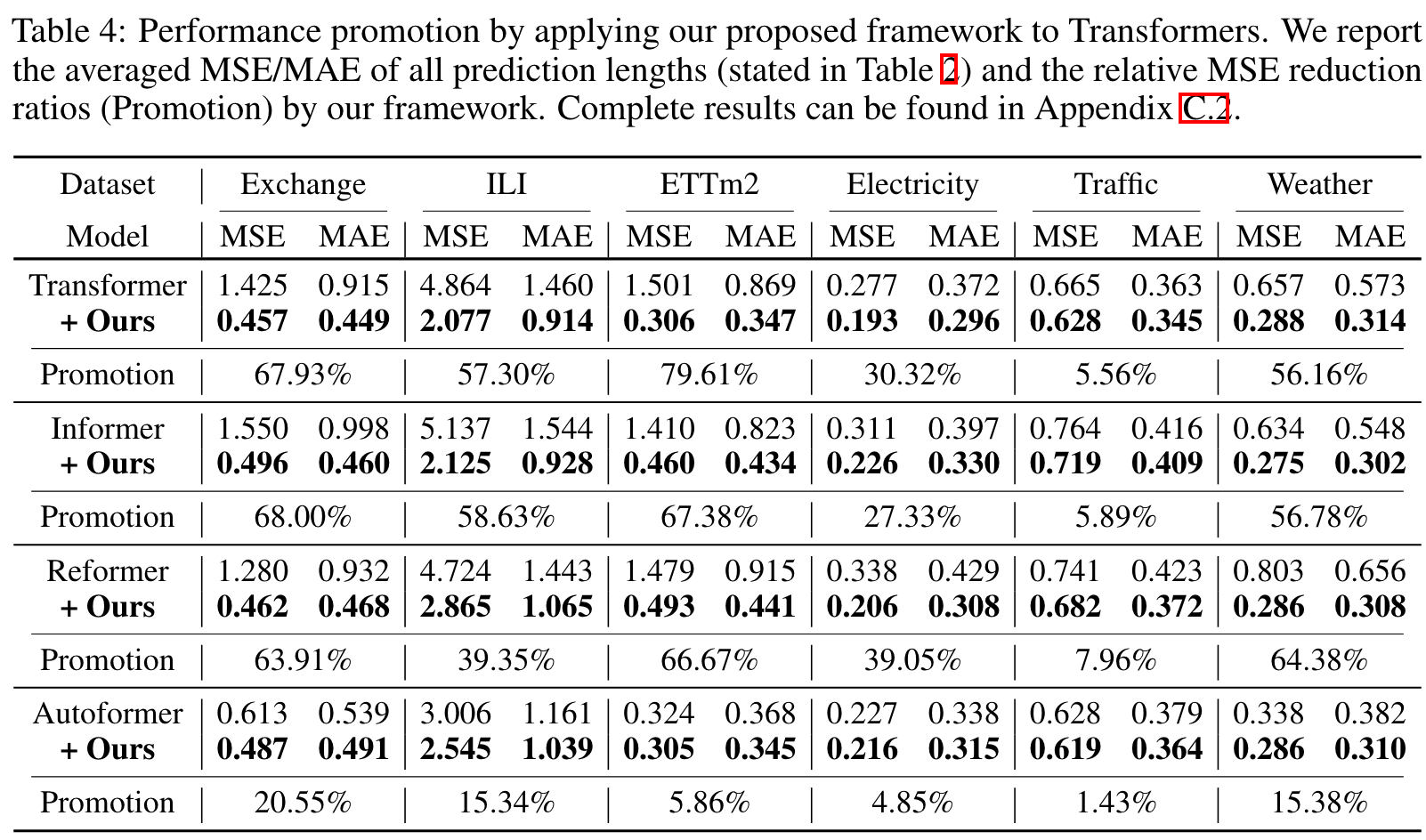

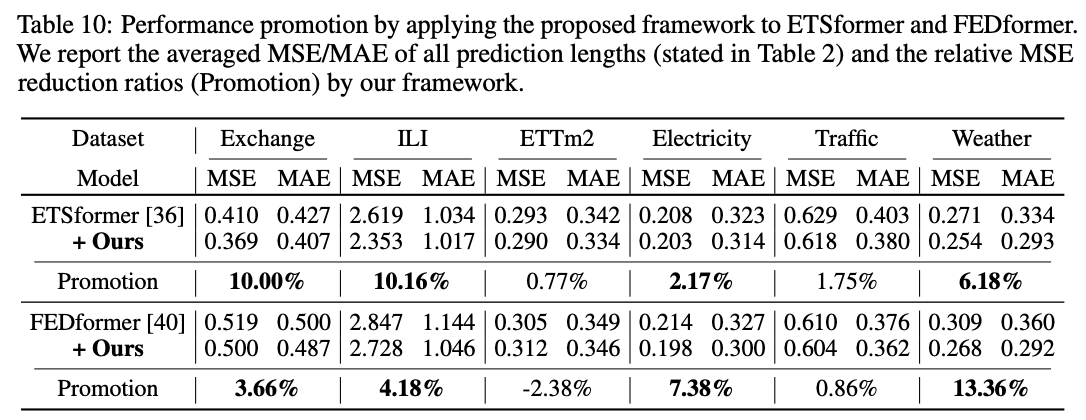

Applying our framework to six mainstream attention-based models consistently improves their forecasting ability. Overall, it achieves an average improvement of 49.43% on Transformer, 47.34% on Informer, 46.89% on Reformer, 10.57% on Autoformer, 5.17% on ETSformer and 4.51% on FEDformer, making each of them surpass the previous state of the art.
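For reference, a relative improvement of this kind is typically computed as the drop in error relative to the baseline. The sketch below assumes the metric is MSE and uses made-up numbers purely for illustration; they are not results from the paper, and `relative_reduction` is a hypothetical helper name.

```python
def relative_reduction(baseline_error, improved_error):
    """Percentage by which the error drops relative to the baseline."""
    return (baseline_error - improved_error) / baseline_error * 100.0


# Hypothetical MSE values, not taken from the paper's tables.
baseline_mse = 0.50
ns_mse = 0.25
reduction = relative_reduction(baseline_mse, ns_mse)
```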

We will keep equipping the following models with our proposed Non-stationary Transformers framework:

- Transformer

- Autoformer

- Informer

- LogTrans

- Reformer

- FEDformer

- Pyraformer

- ETSformer

If you find this repo useful, please cite our paper.

@inproceedings{liu2022non,

title={Non-stationary Transformers: Exploring the Stationarity in Time Series Forecasting},

author={Liu, Yong and Wu, Haixu and Wang, Jianmin and Long, Mingsheng},

booktitle={Advances in Neural Information Processing Systems},

year={2022}

}

If you have any questions or want to use the code, please contact liuyong21@mails.tsinghua.edu.cn.

This repo is built on the Autoformer repo. We thank the authors for their valuable code and efforts.