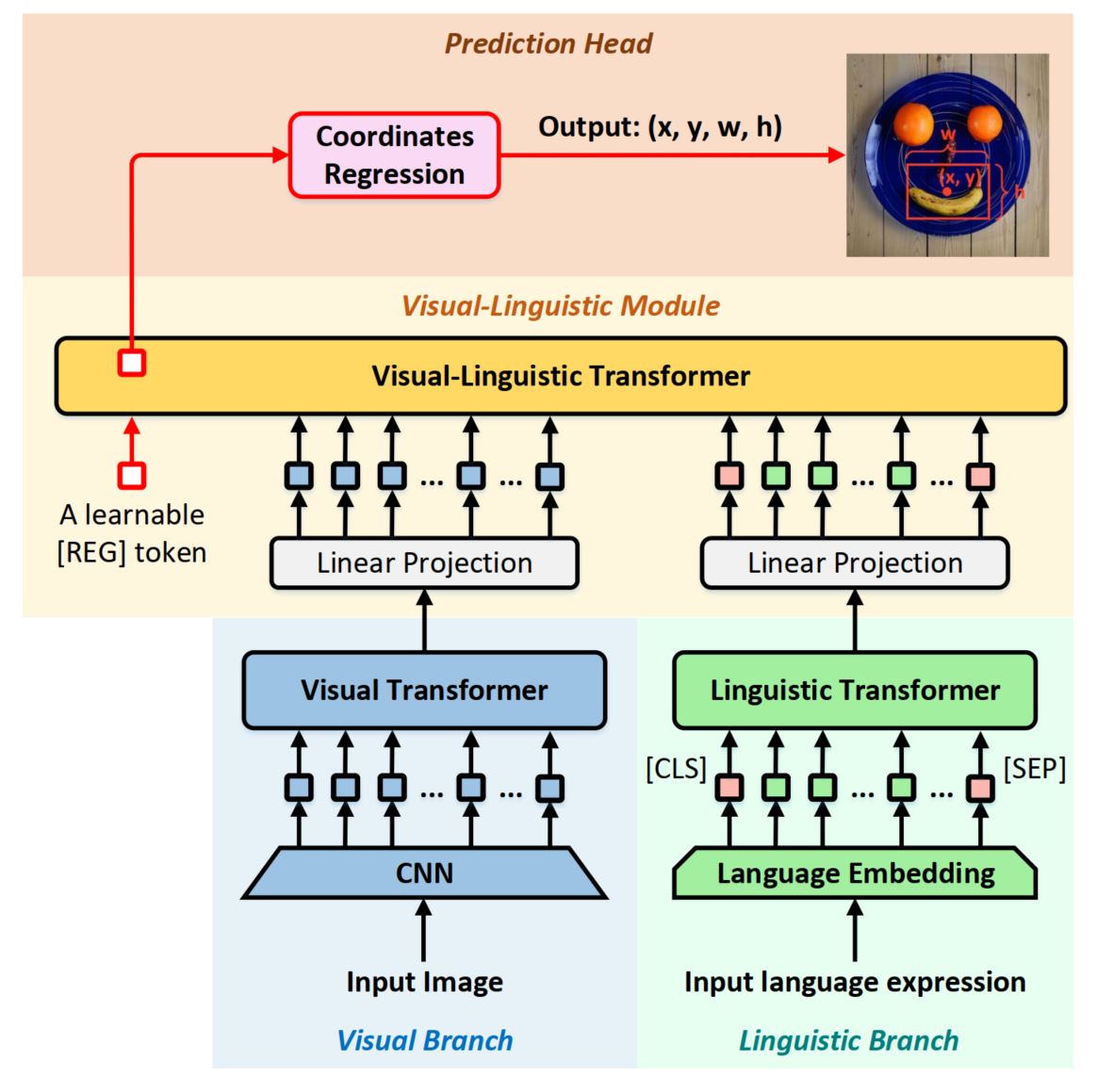

This is the official implementation of TransVG: End-to-End Visual Grounding with Transformers. This paper has been accepted by ICCV 2021.

    @article{deng2021transvg,
      title={TransVG: End-to-End Visual Grounding with Transformers},
      author={Deng, Jiajun and Yang, Zhengyuan and Chen, Tianlang and Zhou, Wengang and Li, Houqiang},
      journal={arXiv preprint arXiv:2104.08541},
      year={2021}
    }

- Clone this repository.

      git clone https://github.com/djiajunustc/TransVG

- Prepare the running environment.

  You can either use the docker image we provide, or follow the installation steps in ReSC.

      docker pull djiajun1206/vg:pytorch1.5

Please refer to GETTING_STARTED.md to learn how to prepare the datasets and pretrained checkpoints.

The models with a ResNet-50 backbone are available in [Gdrive].

| RefCOCO val | RefCOCO testA | RefCOCO testB | RefCOCO+ val | RefCOCO+ testA | RefCOCO+ testB | RefCOCOg g-val | RefCOCOg u-val | RefCOCOg u-test | ReferItGame val | ReferItGame test |
|---|---|---|---|---|---|---|---|---|---|---|
| 80.5 | 83.2 | 75.2 | 66.4 | 70.5 | 57.7 | 66.4 | 67.9 | 67.4 | 71.6 | 69.3 |

- Training

      CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -m torch.distributed.launch --nproc_per_node=8 --use_env train.py --batch_size 8 --lr_bert 0.00001 --aug_crop --aug_scale --aug_translate --backbone resnet50 --detr_model ./checkpoints/detr-r50-referit.pth --bert_enc_num 12 --detr_enc_num 6 --dataset referit --max_query_len 20 --output_dir outputs/referit_r50 --epochs 90 --lr_drop 60

  We recommend setting `--max_query_len 40` for RefCOCOg and `--max_query_len 20` for the other datasets.

  We recommend setting `--epochs 180` (and `--lr_drop 120` accordingly) for RefCOCO+, and `--epochs 90` (and `--lr_drop 60` accordingly) for the other datasets.
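The per-dataset recommendations above can be collected into a small lookup table. The following is a minimal sketch, not part of this repository: the helper name `recommended_flags` and the dictionary `RECOMMENDED` are hypothetical, and the keys are the dataset names as written in this README, which may differ from the values that `train.py` accepts for `--dataset`.

```python
# Recommended per-dataset settings, copied from the training notes above.
# NOTE: keys are README display names (an assumption for illustration);
# they are not guaranteed to be valid values for train.py's --dataset flag.
RECOMMENDED = {
    "RefCOCO":     {"max_query_len": 20, "epochs": 90,  "lr_drop": 60},
    "RefCOCO+":    {"max_query_len": 20, "epochs": 180, "lr_drop": 120},
    "RefCOCOg":    {"max_query_len": 40, "epochs": 90,  "lr_drop": 60},
    "ReferItGame": {"max_query_len": 20, "epochs": 90,  "lr_drop": 60},
}


def recommended_flags(dataset_name):
    """Return the recommended flags for a dataset as a list of CLI tokens."""
    cfg = RECOMMENDED[dataset_name]
    return [
        "--max_query_len", str(cfg["max_query_len"]),
        "--epochs", str(cfg["epochs"]),
        "--lr_drop", str(cfg["lr_drop"]),
    ]


if __name__ == "__main__":
    # Print the flags to paste into the training command for each dataset.
    for name in RECOMMENDED:
        print(name, " ".join(recommended_flags(name)))
```

The returned tokens replace the `--max_query_len`, `--epochs`, and `--lr_drop` values in the training command; all other flags stay as shown.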

- Evaluation

      CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -m torch.distributed.launch --nproc_per_node=8 --use_env eval.py --batch_size 32 --num_workers 4 --bert_enc_num 12 --detr_enc_num 6 --backbone resnet50 --dataset referit --max_query_len 20 --eval_set test --eval_model ./outputs/referit_r50/best_checkpoint.pth --output_dir ./outputs/referit_r50
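Both commands above list eight GPUs in `CUDA_VISIBLE_DEVICES` and pass a matching `--nproc_per_node=8`. As a rough sketch of how the two values could be kept in sync when launching from Python, the hypothetical helper `build_launch` below derives both from one list of GPU ids. It is not part of this repository, and this README only documents the 8-GPU setup, so running with a different GPU count is an assumption and may not reproduce the reported numbers.

```python
import os


def build_launch(gpu_ids, script, script_args):
    """Return (env, argv) for a torch.distributed.launch run, one process per GPU.

    Hypothetical helper for illustration; it only assembles the command.
    """
    if not gpu_ids:
        raise ValueError("at least one GPU id is required")
    env = dict(os.environ)
    # Expose exactly the requested GPUs to the launched processes.
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(g) for g in gpu_ids)
    argv = [
        "python", "-m", "torch.distributed.launch",
        f"--nproc_per_node={len(gpu_ids)}",  # matches the number of visible GPUs
        "--use_env",
        script,
        *script_args,
    ]
    return env, argv


if __name__ == "__main__":
    # Same evaluation flags as the command above, here with four GPUs (an assumption).
    env, argv = build_launch(
        [0, 1, 2, 3],
        "eval.py",
        [
            "--batch_size", "32", "--num_workers", "4",
            "--bert_enc_num", "12", "--detr_enc_num", "6",
            "--backbone", "resnet50", "--dataset", "referit",
            "--max_query_len", "20", "--eval_set", "test",
            "--eval_model", "./outputs/referit_r50/best_checkpoint.pth",
            "--output_dir", "./outputs/referit_r50",
        ],
    )
    # Dry run: print the command instead of executing it.
    print("CUDA_VISIBLE_DEVICES=" + env["CUDA_VISIBLE_DEVICES"], " ".join(argv))
    # To execute: import subprocess; subprocess.run(argv, env=env, check=True)
```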