This repository contains the implementation of Dynamic Neural Garments, presented at SIGGRAPH Asia 2021.

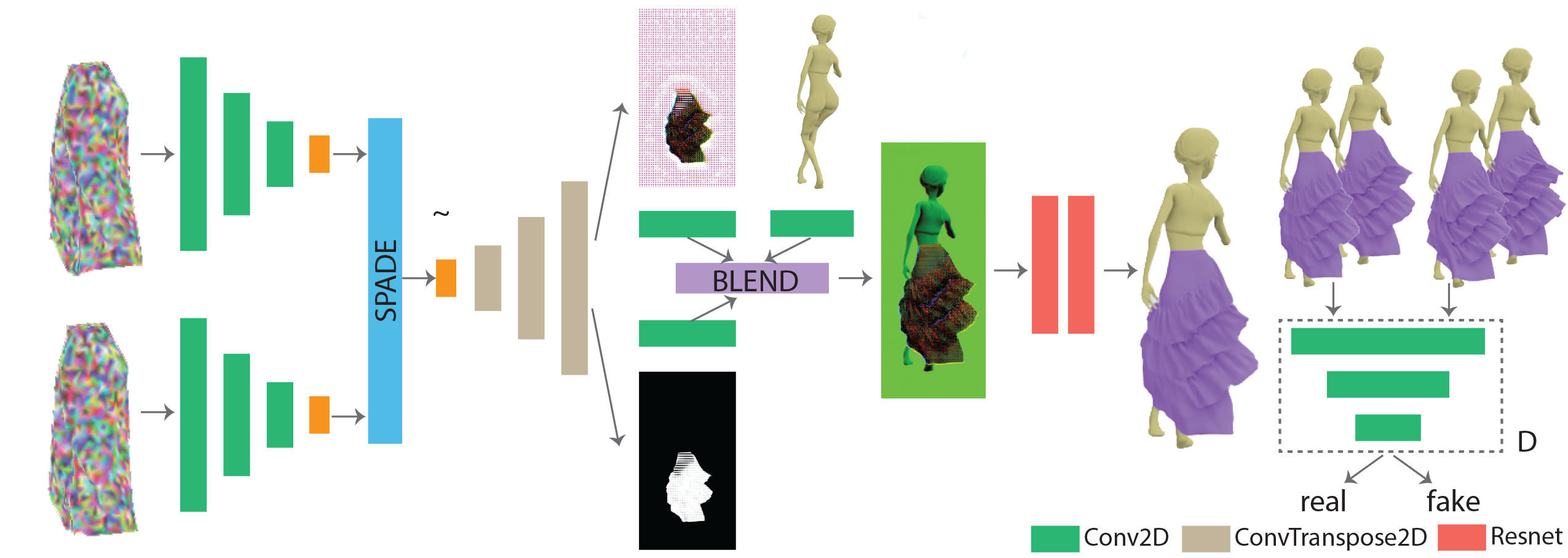

This folder contains the PyTorch implementation of the rendering network.

You can try the code by running "run.py" after downloading the data and checkpoints from here. Running it with the checkpoints for multilayers, tango, and twolayers, respectively, should give results similar to those shown below.

If you want to retrain the network, you can download the training data from here. We provide the 3D meshes of the coarse garment and of the target garments (multilayers, tango, and twolayers). First, run the Blender project to generate the ground-truth renderings and the background renderings, and to save the corresponding camera poses. Next, compute the texture sampling map by referring to the code in ./PixelSample. Once all the required data is in place, you can run "train.py".
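Because training depends on several separately generated inputs, a quick check that each one exists can save a failed run. The following is a minimal sketch, not part of the repository: the folder names in `REQUIRED_INPUTS` and the helper `missing_inputs` are hypothetical, so adjust them to wherever your Blender and PixelSample outputs are actually stored.

```python
from pathlib import Path

# Hypothetical layout: these folder names are assumptions for illustration,
# not the names used by the repository.
REQUIRED_INPUTS = {
    "ground-truth renderings": "groundtruth",
    "background renderings": "background",
    "camera poses": "camera",
    "texture sampling maps": "sample_map",
}


def missing_inputs(data_root):
    """Return descriptions of required inputs that are absent or empty."""
    root = Path(data_root)
    missing = []
    for description, folder in REQUIRED_INPUTS.items():
        path = root / folder
        # A folder counts as present only if it exists and has at least one entry.
        if not path.is_dir() or not any(path.iterdir()):
            missing.append(description)
    return missing
```

Calling `missing_inputs("path/to/data")` returns an empty list when every folder is populated; otherwise it names the steps that still need to be run before starting "train.py".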

This folder contains the code to generate the texture sampling map. The C++ code relies on OpenCV and Embree.
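To give an intuition for what a texture sampling map is used for, here is a minimal pure-Python sketch. It assumes the map stores, for each image pixel, the (u, v) texture coordinate of the coarse-garment point visible at that pixel, and `None` for background pixels. That format, the function name `sample_texture`, and the single-channel texture are assumptions made for illustration; the format produced by the C++ code may differ.

```python
def sample_texture(texture, uv_map):
    """Bilinearly sample `texture` at the UV coordinate stored for each pixel.

    texture: nested list (rows of floats), one channel for brevity.
    uv_map:  nested list (rows of (u, v) pairs in [0, 1]), or None for
             pixels that do not see the garment (assumed convention).
    """
    tex_h, tex_w = len(texture), len(texture[0])
    output = []
    for row in uv_map:
        out_row = []
        for uv in row:
            if uv is None:
                out_row.append(0.0)  # background pixel
                continue
            u, v = uv
            # Map [0, 1] to continuous texel coordinates.
            x = u * (tex_w - 1)
            y = v * (tex_h - 1)
            x0, y0 = int(x), int(y)
            x1, y1 = min(x0 + 1, tex_w - 1), min(y0 + 1, tex_h - 1)
            fx, fy = x - x0, y - y0
            # Interpolate along x on the two neighbouring rows, then along y.
            top = texture[y0][x0] * (1 - fx) + texture[y0][x1] * fx
            bottom = texture[y1][x0] * (1 - fx) + texture[y1][x1] * fx
            out_row.append(top * (1 - fy) + bottom * fy)
        output.append(out_row)
    return output
```

In a PyTorch pipeline the same lookup is typically done on the GPU with `torch.nn.functional.grid_sample`, which expects coordinates normalized to [-1, 1] rather than [0, 1].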

This folder contains Python scripts for Blender.

If you use our code or model, please cite our paper:

@article{10.1145/3478513.3480497,
  author = {Zhang, Meng and Wang, Tuanfeng Y. and Ceylan, Duygu and Mitra, Niloy J.},
  title = {Dynamic Neural Garments},
  year = {2021},
  issue_date = {December 2021},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {40},
  number = {6},
  issn = {0730-0301},
  journal = {ACM Trans. Graph.},
  month = {dec},
  articleno = {235},
  numpages = {15}
}