This is the PyTorch implementation of the following:

- arXiv paper: Towards Transferable Unrestricted Adversarial Examples with Minimum Changes

- No. 1 solution of the CVPR’21 Security AI Challenger: Unrestricted Adversarial Attacks on ImageNet competition.

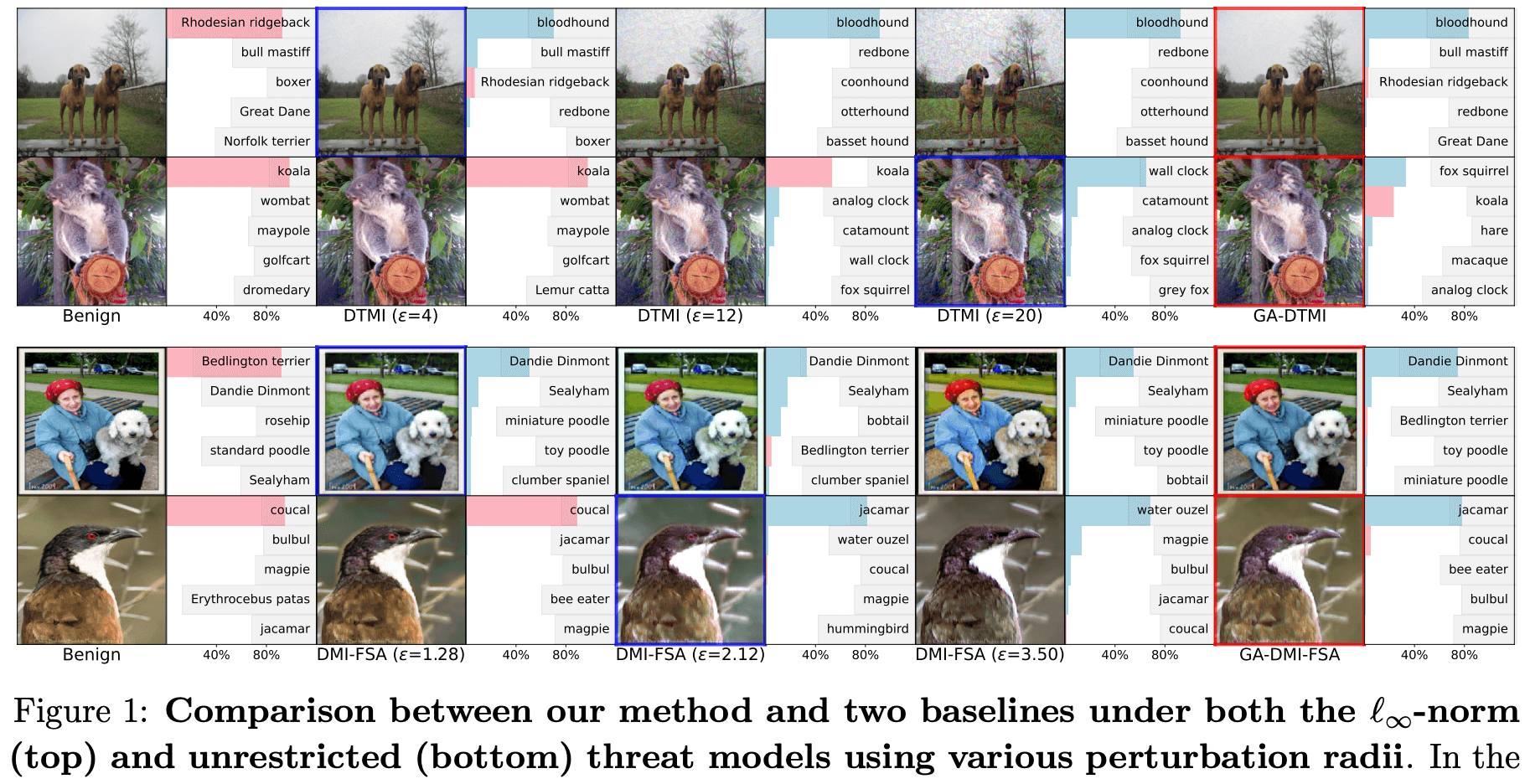

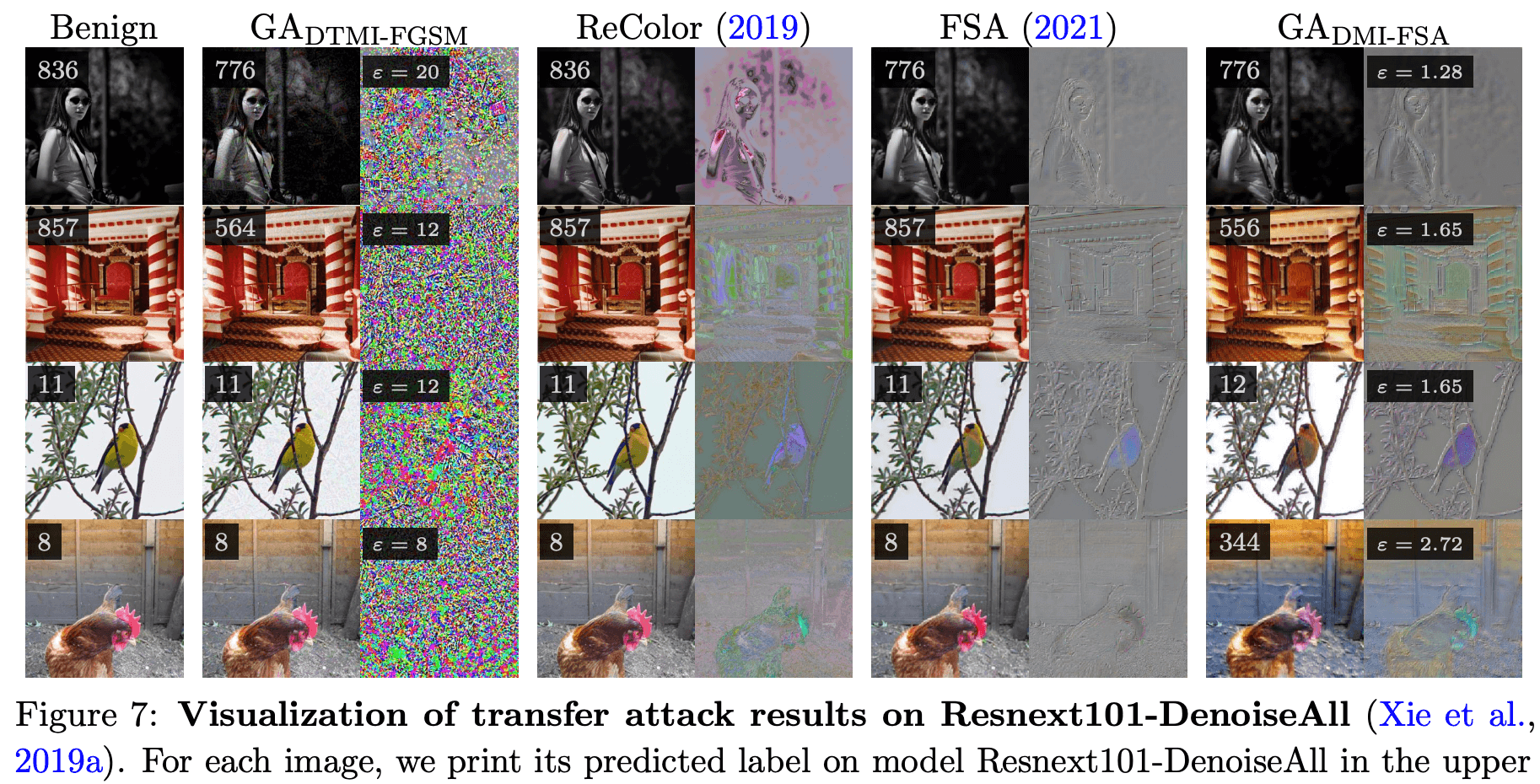

In this work, we propose a geometry-aware framework to generate transferable unrestricted adversarial examples with minimum changes.

The perturbation budget required for a successful transfer-based attack differs from image to image.
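One way to read the per-image budget idea is as a search: try budgets from small to large and keep the first one that fools some held-out check. The sketch below illustrates only that search loop. The names `select_min_budget`, `attack_fn`, and `is_fooled_fn` are hypothetical and do not come from this repository, and the toy "images" are plain floats rather than tensors.

```python
from typing import Callable, Optional, Sequence, Tuple, TypeVar

Image = TypeVar("Image")


def select_min_budget(
    image: Image,
    budgets: Sequence[float],
    attack_fn: Callable[[Image, float], Image],
    is_fooled_fn: Callable[[Image], bool],
) -> Tuple[Optional[float], Optional[Image]]:
    """Return the smallest budget (and its adversarial example) that passes the check.

    attack_fn and is_fooled_fn are hypothetical stand-ins for an attack and a
    held-out model check; they are assumptions, not this repository's API.
    """
    for eps in sorted(budgets):
        adv = attack_fn(image, eps)
        if is_fooled_fn(adv):
            return eps, adv
    # No candidate budget was large enough.
    return None, None


# Toy illustration: an "image" is a single float, the attack adds eps to it,
# and the stand-in model is fooled once the value exceeds 0.5.
def toy_attack(x: float, eps: float) -> float:
    return x + eps


def toy_is_fooled(x: float) -> bool:
    return x > 0.5


candidate_budgets = [0.05, 0.1, 0.2, 0.4]
easy_eps, _ = select_min_budget(0.48, candidate_budgets, toy_attack, toy_is_fooled)
hard_eps, _ = select_min_budget(0.20, candidate_budgets, toy_attack, toy_is_fooled)
print(easy_eps, hard_eps)
```

In this toy run the image close to the decision boundary needs a much smaller budget than the one far from it, which is the behaviour the sentence above describes.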

GA-DMI-FSA generates semantic-preserving unrestricted adversarial examples by adjusting the images' color, texture, etc.

- Python >= 3.6

- PyTorch >= 1.0

- timm = 0.4.12

- einops = 0.3.2

- perceptual_advex = 0.2.6

- Numpy

- CUDA
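A quick way to confirm that an environment matches the pins above is to query the installed package versions. The following is a minimal sketch that assumes Python 3.8+ (for `importlib.metadata`) and assumes the distribution names `torch` and `perceptual-advex`, which are not stated in this README. It checks exact pins only and does not verify the `>=` bounds or the CUDA installation.

```python
from importlib import metadata  # part of the standard library in Python 3.8+

# Pins copied from the list above. None means "any installed version is
# accepted by this sketch". The distribution names are assumptions.
REQUIRED = {
    "torch": None,
    "timm": "0.4.12",
    "einops": "0.3.2",
    "perceptual-advex": "0.2.6",
    "numpy": None,
}


def check_versions(required):
    """Map each distribution name to (installed_version_or_None, ok)."""
    report = {}
    for name, pinned in required.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        ok = installed is not None and (pinned is None or installed == pinned)
        report[name] = (installed, ok)
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_versions(REQUIRED).items():
        status = "ok" if ok else "MISSING OR MISMATCHED"
        print(f"{name}: installed={installed} -> {status}")
```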

The workspace is organized as follows:

├── assets

├── attacks

├── Competition

│ ├── code

│ ├── input_dir

│ │ └── images

│ └── output_dir

├── data

│ ├── ckpts

│ └── images

├── scripts

└── utils
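Before running the scripts, it can help to confirm that the directory layout above is in place. The helper below is a small sketch for that purpose. `missing_dirs` and `EXPECTED_DIRS` are hypothetical names, not part of this repository, and the nesting is inferred from the tree shown above.

```python
from pathlib import Path

# Directories taken from the tree above (nesting inferred from the tree glyphs).
EXPECTED_DIRS = [
    "assets",
    "attacks",
    "Competition/code",
    "Competition/input_dir/images",
    "Competition/output_dir",
    "data/ckpts",
    "data/images",
    "scripts",
    "utils",
]


def missing_dirs(root, expected=EXPECTED_DIRS):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [d for d in expected if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs(".")
    if missing:
        print("Missing directories:", ", ".join(missing))
    else:
        print("Workspace layout looks complete.")
```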

You can download the data/images dataset from Google Drive (140 MB) and the data/ckpts checkpoints from Google Drive (1.63 GB).

To reproduce the results of GA-DTMI-FGSM in Tab. 3, run

bash scripts/run_main_ens.sh

# or with DistributedSampler

bash scripts/run_main_ens_dist.sh

To reproduce the results of GA-DMI-FSA in Tab. 3, run

# with DistributedSampler

bash scripts/run_main_ens_fea_dist.sh

To run the attack on a single GPU

python main_bench_mark.py --input_path "path/of/adv_examples"

or with a DistributedSampler, i.e.,

OMP_NUM_THREADS=1 python -m torch.distributed.launch --nproc_per_node=8 --master_port 26667 main_bench_mark.py --distributed --batch_size 40 --input_path "path/of/adv_examples"

To run the PGD-20 attack on model idx (0, 4, 7, etc.) from Tab. 3:

python pgd_attack.py --source_id idx
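For readers unfamiliar with PGD, the sketch below shows the general shape of an L-infinity projected gradient descent attack, assuming "pgd-20" refers to 20 iterations. It is not the repository's `pgd_attack.py`: it uses a toy logistic model in pure Python so that it runs without PyTorch, it omits the random start that some PGD variants use, and the values of `eps` and `alpha` are common illustrative defaults rather than settings taken from this project.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grad(x, y, w, b):
    """Binary cross-entropy of a logistic model and its gradient w.r.t. the input x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
    grad = [(p - y) * wi for wi in w]
    return loss, grad


def sign(v):
    return (v > 0) - (v < 0)


def pgd_linf(x, y, w, b, eps=8 / 255, alpha=2 / 255, steps=20):
    """Toy L-infinity PGD; eps and alpha are assumed values, not the repo's."""
    x_adv = list(x)
    for _ in range(steps):
        _, grad = loss_and_grad(x_adv, y, w, b)
        # Gradient-sign ascent step on the loss.
        x_adv = [xa + alpha * sign(g) for xa, g in zip(x_adv, grad)]
        # Project back into the eps-ball around the clean input.
        x_adv = [min(max(xa, x0 - eps), x0 + eps) for xa, x0 in zip(x_adv, x)]
        # Keep values in the valid pixel range [0, 1].
        x_adv = [min(max(xa, 0.0), 1.0) for xa in x_adv]
    return x_adv


# Toy input, label, and model weights (all made up for illustration).
x_clean = [0.2, 0.8, 0.5]
label = 1
weights, bias = [1.5, -2.0, 0.5], 0.1

x_adv = pgd_linf(x_clean, label, weights, bias)
clean_loss, _ = loss_and_grad(x_clean, label, weights, bias)
adv_loss, _ = loss_and_grad(x_adv, label, weights, bias)
print(f"clean loss = {clean_loss:.4f}, adversarial loss = {adv_loss:.4f}")
```

The projection step is what keeps the perturbation within the budget, while the final clipping keeps the result a valid image.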