Official PyTorch Implementation of Mask Again: Masked Knowledge Distillation for Masked Video Modeling (ACM MM 2023).

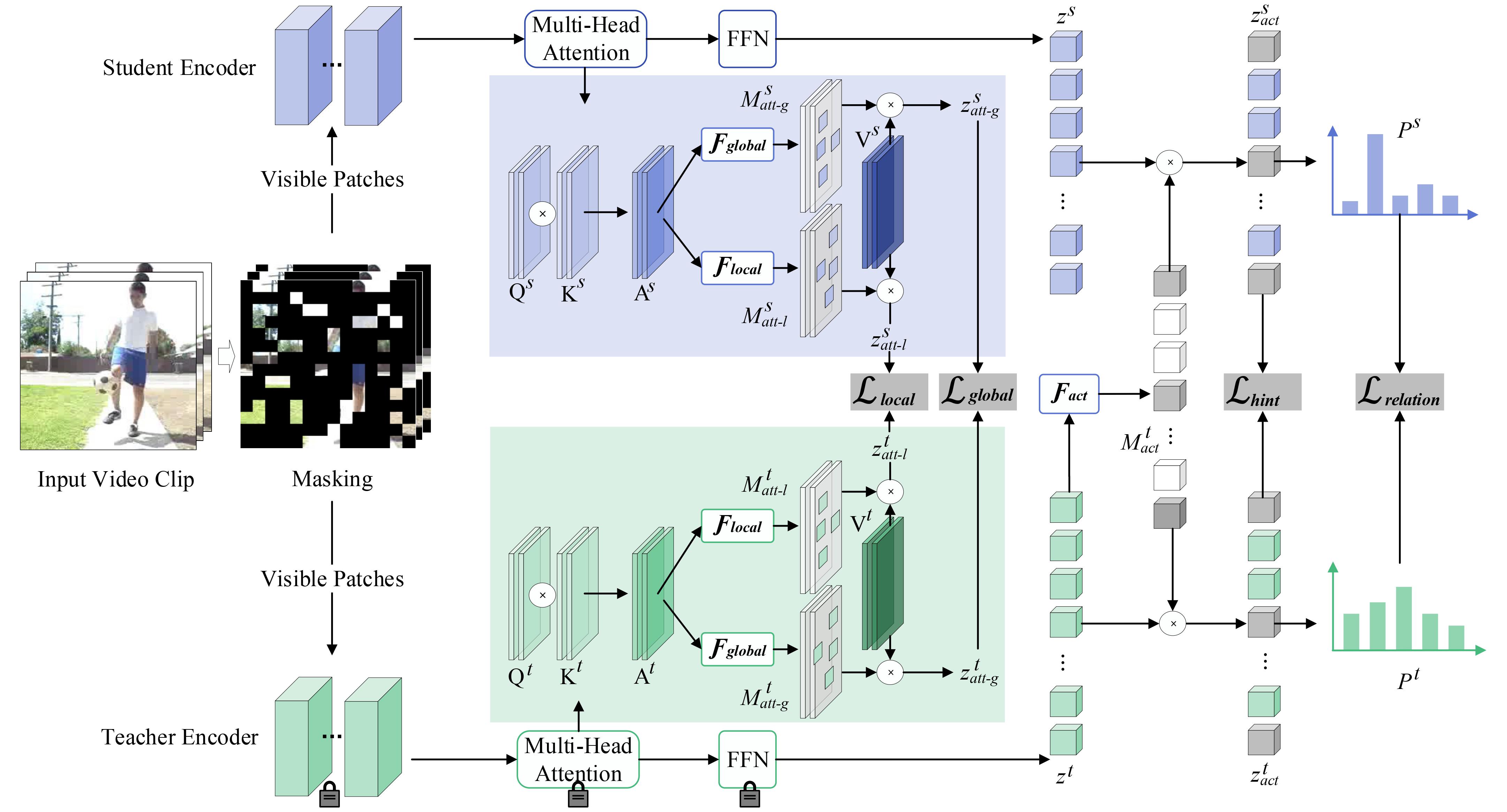

Mask Again: Masked Knowledge Distillation for Masked Video Modeling [PDF]

Xiaojie Li^1,2, Shaowei He^1, Jianlong Wu^1*, Yue Yu^2, Liqiang Nie^1*, Min Zhang^1

^1Harbin Institute of Technology, Shenzhen; ^2Peng Cheng Laboratory; *Corresponding author

| Method | Extra Data | Backbone | Resolution | #Frames x Clips x Crops | Top-1 | Top-5 |
|---|---|---|---|---|---|---|
| VideoMAE | no | ViT-S | 224x224 | 16x5x3 | 78.7 | 93.6 |
| VideoMAE | no | ViT-B | 224x224 | 16x5x3 | 81.0 | 94.6 |
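The `#Frames x Clips x Crops` column describes multi-view testing: with 16x5x3, each video is sampled as 5 temporal clips of 16 frames, and each clip is evaluated at 3 spatial crops, giving 15 views per video. A common convention in VideoMAE-style evaluation is to average the per-view class scores (e.g., softmax probabilities) before ranking classes for Top-1/Top-5 accuracy. The sketch below illustrates that idea in plain Python under this assumption; the function names are hypothetical and this is not the repository's evaluation code.

```python
from typing import List, Sequence


def average_views(view_scores: Sequence[Sequence[float]]) -> List[float]:
    """Average per-class scores over all test views (clips x crops) of one video.

    Hypothetical helper: assumes every view yields one score per class.
    """
    num_views = len(view_scores)
    num_classes = len(view_scores[0])
    return [sum(view[c] for view in view_scores) / num_views for c in range(num_classes)]


def topk_correct(scores: Sequence[float], label: int, k: int) -> bool:
    """Return True if `label` is among the k highest-scoring classes."""
    ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
    return label in ranked[:k]


def topk_accuracy(videos: Sequence[Sequence[Sequence[float]]],
                  labels: Sequence[int], k: int) -> float:
    """Top-k accuracy (in %) after averaging scores over each video's views."""
    hits = sum(topk_correct(average_views(views), y, k) for views, y in zip(videos, labels))
    return 100.0 * hits / len(labels)


# Toy example: 2 videos, 2 views each (instead of 5x3 = 15), 3 classes.
videos = [
    [[0.1, 0.7, 0.2], [0.3, 0.5, 0.2]],  # averaged: [0.2, 0.6, 0.2]
    [[0.6, 0.3, 0.1], [0.2, 0.2, 0.6]],  # averaged: [0.4, 0.25, 0.35]
]
labels = [1, 2]
print(topk_accuracy(videos, labels, k=1))  # 50.0
print(topk_accuracy(videos, labels, k=2))  # 100.0
```

Averaging scores rather than voting on per-view predictions lets confident views outweigh ambiguous ones; the second toy video is misclassified at Top-1 but recovered at Top-2.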

| Method | Extra Data | Backbone | UCF101 | HMDB51 |
|---|---|---|---|---|
| VideoMAE | Kinetics-400 | ViT-S | 92.9 | 72.0 |
| VideoMAE | Kinetics-400 | ViT-B | 96.2 | 77.1 |

Please follow the instructions in INSTALL.md.

We provide pre-trained and fine-tuned models in MODEL_ZOO.md.

We provide the script for visualization in vis_kd.sh.

If you find this project useful for your research, please consider leaving a star ⭐️ and citing our paper:

@inproceedings{li2023mask,
  title={Mask Again: Masked Knowledge Distillation for Masked Video Modeling},
  author={Li, Xiaojie and He, Shaowei and Wu, Jianlong and Yu, Yue and Nie, Liqiang and Zhang, Min},
  booktitle={Proceedings of the 31st ACM International Conference on Multimedia},
  pages={2221--2232},
  year={2023}
}

This project is made available under the Apache 2.0 license.

This project is built upon VideoMAE. Thanks to the contributors of this great codebase.