News | Main Results | Usage | Citation | Acknowledgement

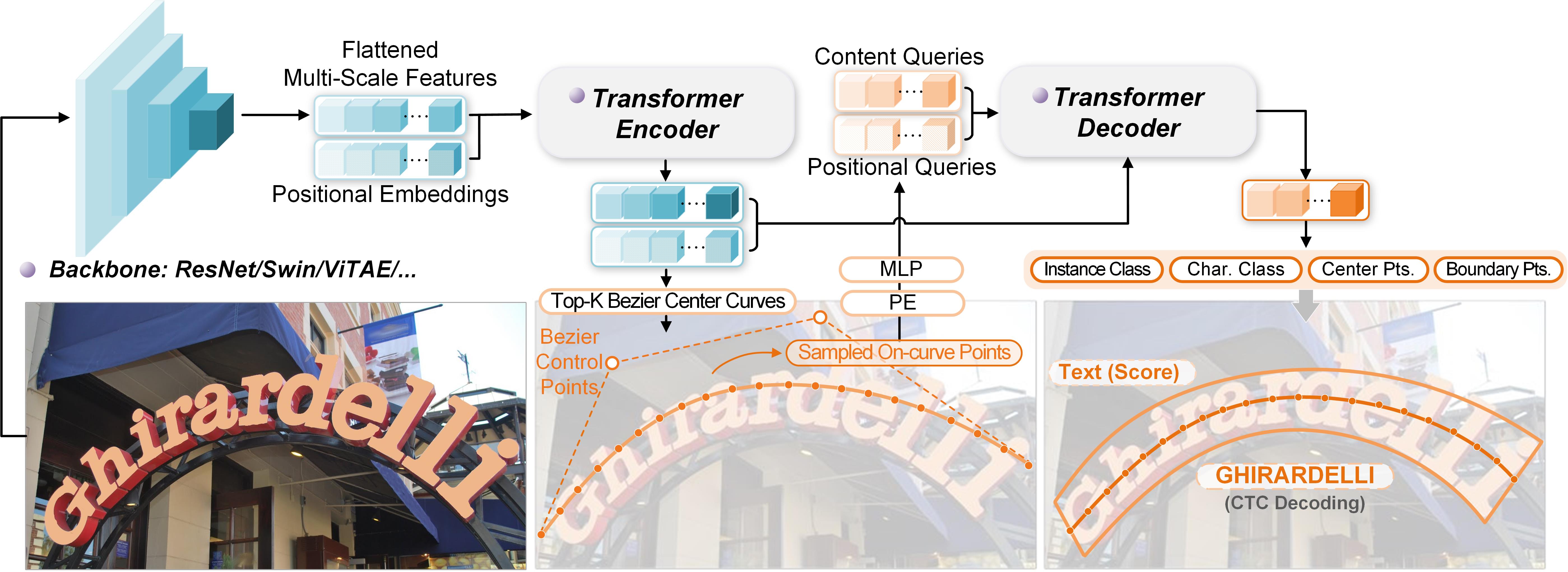

This is the official repo for the papers:

DeepSolo: Let Transformer Decoder with Explicit Points Solo for Text Spotting

DeepSolo++: Let Transformer Decoder with Explicit Points Solo for Text Spotting

2023.06.2 Update the pre-trained and fine-tuned Chinese scene text spotting model (78.3% 1-NED on ICDAR 2019 ReCTS).

2023.05.31 The extension paper (DeepSolo++) is submitted to arXiv. The code and models will be released soon.

2023.02.28 DeepSolo is accepted by CVPR 2023. 🎉🎉

Relevant Project:

DPText-DETR: Towards Better Scene Text Detection with Dynamic Points in Transformer | Code

Other applications of ViTAE include: ViTPose | Remote Sensing | Matting | VSA | Video Object Segmentation

Total-Text

| Backbone | External Data | Det-P | Det-R | Det-F1 | E2E-None | E2E-Full | Weights |
|---|---|---|---|---|---|---|---|
| Res-50 | Synth150K | 93.9 | 82.1 | 87.6 | 78.8 | 86.2 | OneDrive |
| Res-50 | Synth150K+MLT17+IC13+IC15 | 93.1 | 82.1 | 87.3 | 79.7 | 87.0 | OneDrive |
| Res-50 | Synth150K+MLT17+IC13+IC15+TextOCR | 93.2 | 84.6 | 88.7 | | | OneDrive |
| Res-101 | Synth150K+MLT17+IC13+IC15 | 93.2 | 83.5 | 88.1 | 80.1 | 87.1 | OneDrive |
| Swin-T | Synth150K+MLT17+IC13+IC15 | 92.8 | 83.5 | 87.9 | 79.7 | 87.1 | OneDrive |
| Swin-S | Synth150K+MLT17+IC13+IC15 | 93.7 | 84.2 | 88.7 | 81.3 | 87.8 | OneDrive |
| ViTAEv2-S | Synth150K+MLT17+IC13+IC15 | 92.6 | 85.5 | | 81.8 | 88.4 | OneDrive |
| ViTAEv2-S | Synth150K+MLT17+IC13+IC15+TextOCR | 92.9 | 87.4 | 90.0 | 83.6 | 89.6 | OneDrive |
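Det-F1 in these tables is the harmonic mean of detection precision (Det-P) and recall (Det-R). The snippet below is a minimal sketch for sanity-checking a row, assuming the reported values are percentages rounded to one decimal place. The function name `f1_score` is illustrative and is not part of this repo.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# First Total-Text row: Det-P = 93.9, Det-R = 82.1
print(round(f1_score(93.9, 82.1), 1))  # prints 87.6
```

Because the table entries are already rounded, a recomputed F1 can occasionally differ from the reported one in the last digit.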

ICDAR 2015 (IC15)

| Backbone | External Data | Det-P | Det-R | Det-F1 | E2E-S | E2E-W | E2E-G | Weights |
|---|---|---|---|---|---|---|---|---|
| Res-50 | Synth150K+Total-Text+MLT17+IC13 | 92.8 | 87.4 | 90.0 | 86.8 | 81.9 | 76.9 | OneDrive |
| Res-50 | Synth150K+Total-Text+MLT17+IC13+TextOCR | 92.5 | 87.2 | 89.8 | | | | OneDrive |
| ViTAEv2-S | Synth150K+Total-Text+MLT17+IC13 | 93.7 | 87.3 | 90.4 | 87.5 | 82.8 | 77.7 | OneDrive |
| ViTAEv2-S | Synth150K+Total-Text+MLT17+IC13+TextOCR | 92.4 | 87.9 | | 88.1 | 83.9 | 79.5 | OneDrive |

CTW1500

| Backbone | External Data | Det-P | Det-R | Det-F1 | E2E-None | E2E-Full | Weights |
|---|---|---|---|---|---|---|---|
| Res-50 | Synth150K+Total-Text+MLT17+IC13+IC15 | 93.2 | 85.0 | 88.9 | 64.2 | 81.4 | OneDrive |

ICDAR 2019 ReCTS

| Backbone | External Data | Det-P | Det-R | Det-H | 1-NED | Weights |
|---|---|---|---|---|---|---|
| Res-50 | SynChinese130K+ArT+LSVT | 92.6 | 89.0 | 90.7 | 78.3 | OneDrive |
| ViTAEv2-S | SynChinese130K+ArT+LSVT | 92.6 | 89.9 | 91.2 | 79.6 | OneDrive |
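The 1-NED column reports one minus the normalized edit distance between predicted and ground-truth transcriptions. The sketch below assumes the usual definition (Levenshtein distance divided by the longer string's length, averaged over pairs). The official ReCTS evaluation also matches predictions to ground-truth regions, which is omitted here, and the function names are illustrative rather than taken from this repo.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with a two-row dynamic program."""
    prev = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        curr = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            curr.append(min(prev[j] + 1,          # delete char_a
                            curr[j - 1] + 1,      # insert char_b
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def one_minus_ned(predictions, targets):
    """1 - mean normalized edit distance over already-paired strings."""
    if len(predictions) != len(targets) or not targets:
        raise ValueError("need two non-empty lists of equal length")
    total = 0.0
    for pred, gt in zip(predictions, targets):
        longest = max(len(pred), len(gt))
        if longest:  # two empty strings contribute zero distance
            total += edit_distance(pred, gt) / longest
    return 1.0 - total / len(targets)


print(one_minus_ned(["北京饭店", "hello"], ["北京饭店", "hallo"]))
```

In the example, the first pair matches exactly and the second differs by one character out of five, so the two normalized distances are 0 and 0.2.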

Pre-trained Models for Total-Text & ICDAR 2015

| Backbone | Training Data | Weights |
|---|---|---|
| Res-50 | Synth150K+Total-Text | OneDrive |
| Res-50 | Synth150K+Total-Text+MLT17+IC13+IC15 | OneDrive |
| Res-50 | Synth150K+Total-Text+MLT17+IC13+IC15+TextOCR | OneDrive |
| Res-101 | Synth150K+Total-Text+MLT17+IC13+IC15 | OneDrive |
| Swin-T | Synth150K+Total-Text+MLT17+IC13+IC15 | OneDrive |
| Swin-S | Synth150K+Total-Text+MLT17+IC13+IC15 | OneDrive |
| ViTAEv2-S | Synth150K+Total-Text+MLT17+IC13+IC15 | OneDrive |
| ViTAEv2-S | Synth150K+Total-Text+MLT17+IC13+IC15+TextOCR | OneDrive |

Pre-trained Model for CTW1500

| Backbone | Training Data | Weights |
|---|---|---|
| Res-50 | Synth150K+Total-Text+MLT17+IC13+IC15 | OneDrive |

Pre-trained Model for ReCTS

| Backbone | Training Data | Weights |
|---|---|---|
| Res-50 | SynChinese130K+ArT+LSVT+ReCTS | OneDrive |
| ViTAEv2-S | SynChinese130K+ArT+LSVT+ReCTS | OneDrive |

Python 3.8 + PyTorch 1.9.0 + CUDA 11.1 + Detectron2 (v0.6)

git clone https://github.com/ViTAE-Transformer/DeepSolo.git

cd DeepSolo

conda create -n deepsolo python=3.8 -y

conda activate deepsolo

pip install torch==1.9.0+cu111 torchvision==0.10.0+cu111 -f https://download.pytorch.org/whl/torch_stable.html

pip install -r requirements.txt

python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu111/torch1.9/index.html

python setup.py build develop
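After installation, it can help to confirm that the installed package versions match the ones listed above. The following is a minimal sketch using only the standard library; the helper name `check_environment` and the `EXPECTED` mapping are illustrative assumptions, not part of this repo.

```python
from importlib import metadata

# Versions taken from the installation commands above.
EXPECTED = {"torch": "1.9.0", "torchvision": "0.10.0", "detectron2": "0.6"}


def check_environment(expected=EXPECTED):
    """Return {package: (installed_version_or_None, matches_expected)}."""
    report = {}
    for package, wanted in expected.items():
        try:
            installed = metadata.version(package)
        except metadata.PackageNotFoundError:
            installed = None
        # Local version suffixes such as "+cu111" are ignored when comparing.
        matches = installed is not None and installed.split("+")[0] == wanted
        report[package] = (installed, matches)
    return report


if __name__ == "__main__":
    for name, (installed, matches) in check_environment().items():
        status = "ok" if matches else "check"
        print(f"{name}: installed={installed} [{status}]")
```

A package reported as `None` is not installed in the active environment, which usually means the `deepsolo` conda environment is not activated.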

Datasets

[SynthText150K (CurvedSynText150K)] images | annotations(Part1) | annotations(Part2)

[MLT] images | annotations

[ICDAR2013] images | annotations

[ICDAR2015] images | annotations

[Total-Text] images | annotations

[CTW1500] images | annotations

[TextOCR] images | annotations

[Inverse-Text] images | annotations

[SynChinese130K] images | annotations

[ArT] images | annotations

[LSVT] images | annotations

[ReCTS] images | annotations

[Evaluation ground-truth] Link

Some image files need to be renamed. Organize them as follows (lexicon files are not listed here):

    |- ./datasets
       |- syntext1
       |  |- train_images
       |  └  annotations
       |       |- train_37voc.json
       |       └  train_96voc.json
       |- syntext2
       |  |- train_images
       |  └  annotations
       |       |- train_37voc.json
       |       └  train_96voc.json
       |- mlt2017
       |  |- train_images
       |  |- train_37voc.json
       |  └  train_96voc.json
       |- totaltext
       |  |- train_images
       |  |- test_images
       |  |- train_37voc.json
       |  |- train_96voc.json
       |  └  test.json
       |- ic13
       |  |- train_images
       |  |- train_37voc.json
       |  └  train_96voc.json
       |- ic15
       |  |- train_images
       |  |- test_images
       |  |- train_37voc.json
       |  |- train_96voc.json
       |  └  test.json
       |- ctw1500
       |  |- train_images
       |  |- test_images
       |  |- train_96voc.json
       |  └  test.json
       |- textocr
       |  |- train_images
       |  |- train_37voc_1.json
       |  └  train_37voc_2.json
       |- inversetext
       |  |- test_images
       |  └  test.json
       |- chnsyntext
       |  |- syn_130k_images
       |  └  chn_syntext.json
       |- ArT
       |  |- rename_artimg_train
       |  └  art_train.json
       |- LSVT
       |  |- rename_lsvtimg_train
       |  └  lsvt_train.json
       |- ReCTS
       |  |- ReCTS_train_images  # 18,000 images
       |  |- ReCTS_val_images    # 2,000 images
       |  |- ReCTS_test_images   # 5,000 images
       |  |- rects_train.json
       |  |- rects_val.json
       |  └  rects_test.json
       |- evaluation
       |  |- gt_*.zip
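Before training, it may be worth checking that the files for a given dataset sit where the layout above expects them. The sketch below checks only the Total-Text entries; `missing_entries` and `TOTALTEXT_LAYOUT` are hypothetical names introduced here, and the list can be extended with any other paths from the layout.

```python
from pathlib import Path

# Entries copied from the Total-Text part of the dataset layout.
TOTALTEXT_LAYOUT = [
    "totaltext/train_images",
    "totaltext/test_images",
    "totaltext/train_37voc.json",
    "totaltext/train_96voc.json",
    "totaltext/test.json",
]


def missing_entries(root, expected=TOTALTEXT_LAYOUT):
    """Return the expected relative paths that do not exist under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_entries("./datasets")
    if missing:
        print("Missing under ./datasets:")
        for rel in missing:
            print(f"  {rel}")
    else:
        print("Total-Text layout looks complete.")
```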

ImageNet Pre-trained Backbone

If you want to pre-train DeepSolo with ResNet-101, ViTAEv2-S, or Swin Transformer, download the converted backbone weights and put them under pretrained_backbone for initialization: Swin-T | ViTAEv2-S | Res101 | Swin-S. You can also refer to the Python files in pretrained_backbone and convert the backbones yourself.

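If you want to use the model trained on Chinese data, please download the font (simsun.ttc) and the Chinese character list (chn_cls_list, a binary file) first. The URLs are quoted so that the shell does not interpret the `?` character. These are Google Drive share links, so wget may save the HTML viewer page rather than the file itself; in that case, open the links in a browser and download the files manually.

wget "https://drive.google.com/file/d/1dcR__ZgV_JOfpp8Vde4FR3bSR-QnrHVo/view?usp=sharing" -O simsun.ttc

wget "https://drive.google.com/file/d/1wqkX2VAy48yte19q1Yn5IVjdMVpLzYVo/view?usp=sharing" -O chn_cls_list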
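Downloads from file-sharing links sometimes yield an HTML page saved under the requested file name. The following sketch is one way to check the two files after downloading: a TrueType Collection such as simsun.ttc starts with the 4-byte tag `ttcf`, and neither file should begin with HTML markup. The helper names are illustrative and not part of this repo.

```python
from pathlib import Path


def looks_like_html(path, probe_bytes=512):
    """True if the start of the file looks like an HTML document."""
    head = Path(path).read_bytes()[:probe_bytes].lstrip().lower()
    return head.startswith(b"<!doctype html") or head.startswith(b"<html")


def is_truetype_collection(path):
    """True if the file starts with the 'ttcf' tag used by .ttc fonts."""
    with open(path, "rb") as handle:
        return handle.read(4) == b"ttcf"


if __name__ == "__main__":
    for name in ("simsun.ttc", "chn_cls_list"):
        if not Path(name).exists():
            print(f"{name}: not found")
        elif looks_like_html(name):
            print(f"{name}: looks like an HTML page, download it again")
        else:
            print(f"{name}: does not look like HTML")
    if Path("simsun.ttc").exists():
        print("simsun.ttc is a TTC font:", is_truetype_collection("simsun.ttc"))
```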

Total-Text & ICDAR2015

1. Pre-train

For example, pre-train DeepSolo with Synth150K+Total-Text+MLT17+IC13+IC15:

python tools/train_net.py --config-file configs/R_50/pretrain/150k_tt_mlt_13_15.yaml --num-gpus 4

2. Fine-tune

Fine-tune on Total-Text or ICDAR2015:

python tools/train_net.py --config-file configs/R_50/TotalText/finetune_150k_tt_mlt_13_15.yaml --num-gpus 4

python tools/train_net.py --config-file configs/R_50/IC15/finetune_150k_tt_mlt_13_15.yaml --num-gpus 4

CTW1500

1. Pre-train

python tools/train_net.py --config-file configs/R_50/CTW1500/pretrain_96voc_50maxlen.yaml --num-gpus 4

2. Fine-tune

python tools/train_net.py --config-file configs/R_50/CTW1500/finetune_96voc_50maxlen.yaml --num-gpus 4

ReCTS

1. Pre-train

python tools/train_net.py --config-file configs/R_50/ReCTS/pretrain.yaml --num-gpus 8

2. Fine-tune

python tools/train_net.py --config-file configs/R_50/ReCTS/finetune.yaml --num-gpus 8
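The pre-train and fine-tune commands above all share the same shape, so a small wrapper can run both stages in order. This is a hypothetical convenience script, not something shipped with the repo: `build_train_command` and `STAGES` are names made up here, and only the `--config-file` and `--num-gpus` flags shown above are used. It assumes the script is launched from the repository root.

```python
import shlex
import subprocess
import sys

# Config paths and GPU count copied from the ReCTS commands above.
STAGES = [
    ("pre-train", "configs/R_50/ReCTS/pretrain.yaml"),
    ("fine-tune", "configs/R_50/ReCTS/finetune.yaml"),
]


def build_train_command(config_file, num_gpus):
    """Build the argument list for one tools/train_net.py run."""
    return [
        sys.executable, "tools/train_net.py",
        "--config-file", config_file,
        "--num-gpus", str(num_gpus),
    ]


def run_stages(stages=STAGES, num_gpus=8, dry_run=True):
    """Print each stage's command; execute it unless dry_run is set."""
    for name, config in stages:
        command = build_train_command(config, num_gpus)
        print(f"[{name}] {shlex.join(command)}")
        if not dry_run:
            # check=True stops the pipeline if a stage fails.
            subprocess.run(command, check=True)


if __name__ == "__main__":
    run_stages(dry_run=True)
```

With `dry_run=True` the script only prints the commands, which makes it easy to confirm the config paths before committing GPU time.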

To evaluate a trained model:

python tools/train_net.py --config-file ${CONFIG_FILE} --eval-only MODEL.WEIGHTS ${MODEL_PATH}

Note: To conduct evaluation on ICDAR 2019 ReCTS, you can directly submit the saved file output/R50/rects/finetune/inference/rects_submit.txt to the official website for evaluation.

To run the visualization demo on a single image or a folder of images:

python demo/demo.py --config-file ${CONFIG_FILE} --input ${IMAGES_FOLDER_OR_ONE_IMAGE_PATH} --output ${OUTPUT_PATH} --opts MODEL.WEIGHTS ${MODEL_PATH}

If you find DeepSolo helpful, please consider giving this repo a star ⭐ and citing:

@inproceedings{ye2023deepsolo,

title={DeepSolo: Let Transformer Decoder with Explicit Points Solo for Text Spotting},

author={Ye, Maoyuan and Zhang, Jing and Zhao, Shanshan and Liu, Juhua and Liu, Tongliang and Du, Bo and Tao, Dacheng},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={19348--19357},

year={2023}

}

@article{ye2023deepsolo++,

title={DeepSolo++: Let Transformer Decoder with Explicit Points Solo for Text Spotting},

author={Ye, Maoyuan and Zhang, Jing and Zhao, Shanshan and Liu, Juhua and Liu, Tongliang and Du, Bo and Tao, Dacheng},

journal={arXiv preprint arXiv:2305.19957},

year={2023}

}

This project is based on AdelaiDet. For academic use, this project is licensed under the 2-clause BSD License.